Hongbo Sun

MARS2 2025 Challenge on Multimodal Reasoning: Datasets, Methods, Results, Discussion, and Outlook

Sep 17, 2025

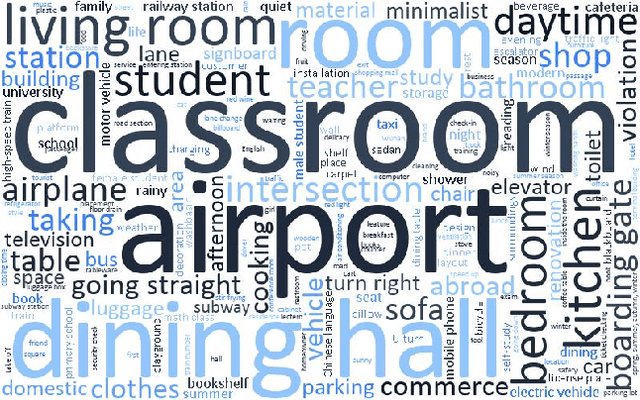

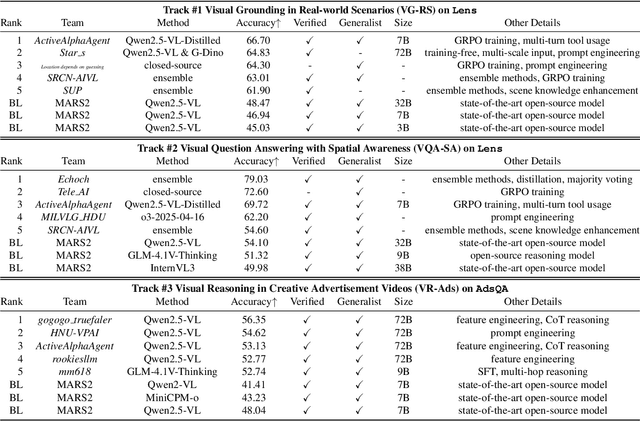

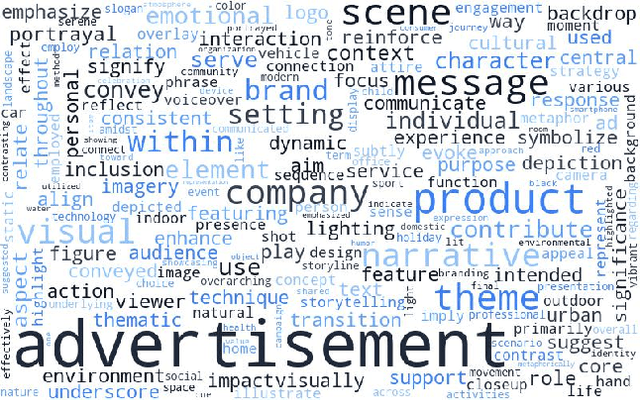

Abstract: This paper reviews the MARS2 2025 Challenge on Multimodal Reasoning. We aim to bring together different approaches in multimodal machine learning and LLMs via a large benchmark, and we hope it helps researchers follow the state of the art in this very dynamic area. Meanwhile, a growing number of testbeds have boosted the evolution of general-purpose large language models. Thus, this year's MARS2 focuses on real-world and specialized scenarios to broaden the multimodal reasoning applications of MLLMs. Our organizing team released two tailored datasets, Lens and AdsQA, as test sets, which support general reasoning in 12 daily scenarios and domain-specific reasoning in advertisement videos, respectively. We evaluated 40+ baselines that include both generalist MLLMs and task-specific models, and opened three competition tracks: Visual Grounding in Real-world Scenarios (VG-RS), Visual Question Answering with Spatial Awareness (VQA-SA), and Visual Reasoning in Creative Advertisement Videos (VR-Ads). Finally, 76 teams from renowned academic and industrial institutions registered, and 40+ valid submissions (out of 1200+) were included in our ranking lists. Our datasets, code sets (40+ baselines and 15+ participants' methods), and rankings are publicly available on the MARS2 workshop website and our GitHub organization page https://github.com/mars2workshop/, where updates and announcements of upcoming events will be posted.

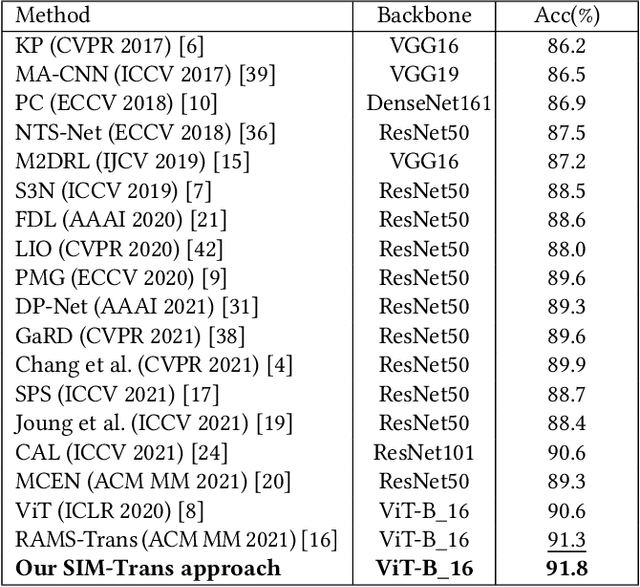

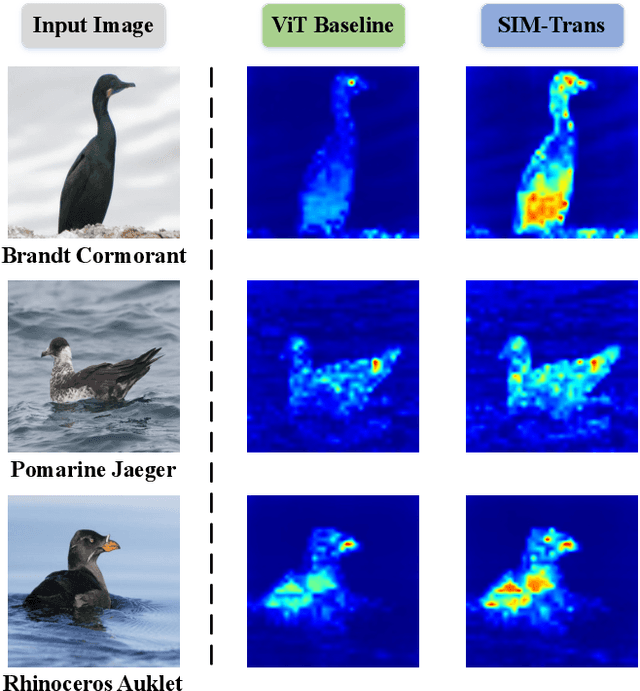

SIM-Trans: Structure Information Modeling Transformer for Fine-grained Visual Categorization

Aug 31, 2022

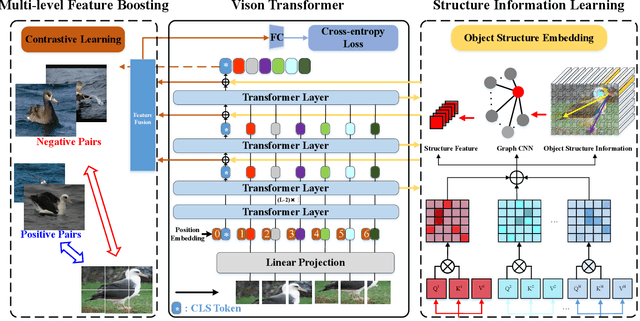

Abstract: Fine-grained visual categorization (FGVC) aims at recognizing objects from similar subordinate categories, which is challenging and of practical value for accurate automatic recognition. Most FGVC approaches focus on attention mechanisms for mining discriminative regions while neglecting the interdependencies among those regions and the holistic object structure they compose, both of which are essential for the model's ability to localize and understand discriminative information. To address these limitations, we propose the Structure Information Modeling Transformer (SIM-Trans), which incorporates object structure information into the transformer so that the learned discriminative representation contains both appearance information and structure information. Specifically, we encode the image into a sequence of patch tokens and build a strong vision transformer framework with two well-designed modules: (i) the structure information learning (SIL) module mines the spatial context relations of significant patches within the object extent with the help of the transformer's self-attention weights, and these relations are then injected into the model to import structure information; (ii) the multi-level feature boosting (MFB) module exploits the complementarity of multi-level features and contrastive learning among classes to enhance feature robustness for accurate recognition. The two proposed modules are lightweight, can be plugged into any transformer network, and can be trained end-to-end easily, since they depend only on the attention weights that come with the vision transformer itself. Extensive experiments and analyses demonstrate that the proposed SIM-Trans achieves state-of-the-art performance on fine-grained visual categorization benchmarks. The code is available at https://github.com/PKU-ICST-MIPL/SIM-Trans_ACMMM2022.
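
As a rough illustration of mining spatial relations among significant patches from attention weights, the sketch below selects patches whose attention exceeds the mean, takes the highest-weighted patch as a reference, and describes every other selected patch by its distance and angle relative to that reference. The mean threshold, the polar encoding, and the function name `significant_patch_relations` are assumptions made for this example; the abstract does not specify them, and this is not the SIL module from the linked repository.

```python
import math

def significant_patch_relations(attn, grid):
    """Relate high-attention patches to the most attended one.

    attn: flat list of grid*grid attention weights (e.g. class token to patches).
    Returns (reference_index, {patch_index: (normalized_distance, angle)}).
    The mean threshold and polar encoding are illustrative assumptions.
    """
    threshold = sum(attn) / len(attn)
    ref = max(range(len(attn)), key=attn.__getitem__)
    ref_row, ref_col = divmod(ref, grid)
    relations = {}
    for idx, weight in enumerate(attn):
        if weight <= threshold or idx == ref:
            continue  # keep only significant patches other than the reference
        row, col = divmod(idx, grid)
        distance = math.hypot(row - ref_row, col - ref_col) / grid
        angle = math.atan2(row - ref_row, col - ref_col)
        relations[idx] = (distance, angle)
    return ref, relations

# Toy 3x3 attention map: the center patch is the most attended.
toy_attn = [0.0, 0.0, 0.0,
            0.0, 0.5, 0.3,
            0.0, 0.0, 0.2]
ref, relations = significant_patch_relations(toy_attn, grid=3)
```

In a real vision transformer the weights would come from a self-attention layer rather than a hand-written list, and the resulting relations would be fed back into the network as additional features.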

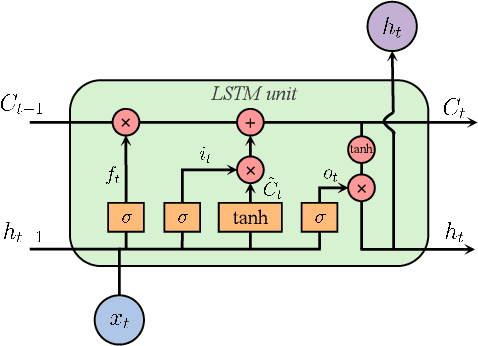

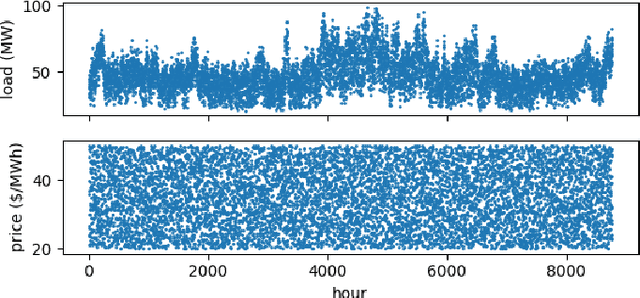

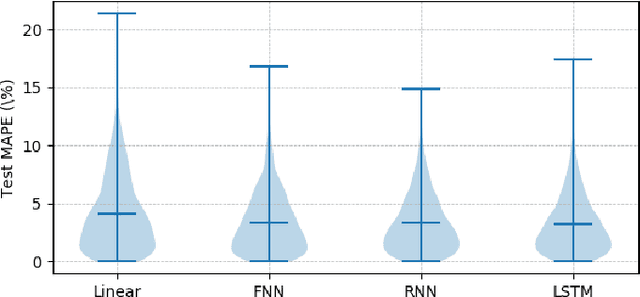

Learning Dynamical Demand Response Model in Real-Time Pricing Program

Dec 22, 2018

Abstract: Price responsiveness is a major feature of end use customers (EUCs) that participate in demand response (DR) programs, and has conventionally been modeled with static demand functions, which take the electricity price as the input and the aggregate energy consumption as the output. This, however, neglects the inherent temporal correlation of EUC behavior, and may result in large errors when predicting the actual responses of EUCs in real-time pricing (RTP) programs. In this paper, we propose a dynamical DR model to capture the temporal behavior of the EUCs. The states in the proposed dynamical DR model can be chosen explicitly, in which case the model can be represented by a linear function or a multi-layer feedforward neural network, or implicitly, in which case the model can be represented by a recurrent neural network or a long short-term memory unit network. In both cases, the dynamical DR model can be learned from historical price and energy consumption data. Numerical simulations illustrated how the states are chosen and showed that the proposed dynamical DR model significantly outperforms the static ones.
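
The contrast between a static demand function and a dynamical model with an explicitly chosen state can be sketched on synthetic data. In the example below, the state is assumed to be the previous period's consumption, the model is linear, and both models are fit by ordinary least squares. The data-generating coefficients, the noise level, and all function names are hypothetical choices for illustration, not values or code from the paper.

```python
import random

def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x

def least_squares(features, targets):
    """Ordinary least squares via the normal equations."""
    k = len(features[0])
    xtx = [[sum(f[i] * f[j] for f in features) for j in range(k)] for i in range(k)]
    xty = [sum(f[i] * t for f, t in zip(features, targets)) for i in range(k)]
    return solve_linear_system(xtx, xty)

def mean_squared_error(features, targets, weights):
    preds = [sum(w * v for w, v in zip(weights, f)) for f in features]
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)

# Synthetic history (assumed): consumption depends on the current price
# and on the previous period's consumption.
rng = random.Random(0)
prices = [rng.uniform(10.0, 50.0) for _ in range(500)]
demand = [100.0]
for price in prices[1:]:
    demand.append(0.8 * demand[-1] - 0.5 * price + 40.0 + rng.gauss(0.0, 0.5))

targets = demand[1:]
# Static model: consumption is a function of the current price only.
static_features = [[p, 1.0] for p in prices[1:]]
# Dynamical model: previous consumption is the explicitly chosen state.
dynamic_features = [[d_prev, p, 1.0] for d_prev, p in zip(demand[:-1], prices[1:])]

static_w = least_squares(static_features, targets)
dynamic_w = least_squares(dynamic_features, targets)
static_mse = mean_squared_error(static_features, targets, static_w)
dynamic_mse = mean_squared_error(dynamic_features, targets, dynamic_w)
print(f"static MSE: {static_mse:.3f}, dynamical MSE: {dynamic_mse:.3f}")
```

Because the synthetic data carry memory from one period to the next, the static fit cannot explain the part of consumption driven by earlier prices, while the model with the lagged state can. Replacing the linear fit with a feedforward network, or dropping the hand-picked state in favor of a recurrent network, would correspond to the other model families the abstract mentions.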

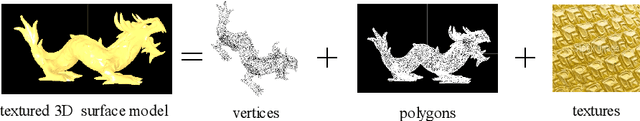

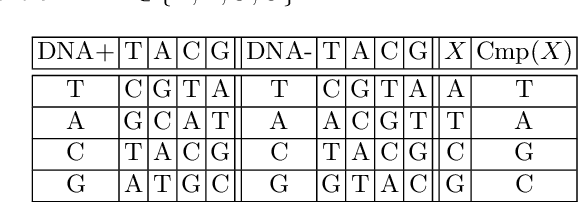

3D Textured Model Encryption via 3D Lu Chaotic Mapping

Sep 25, 2017

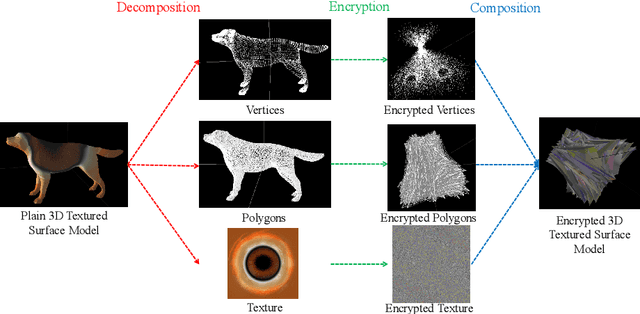

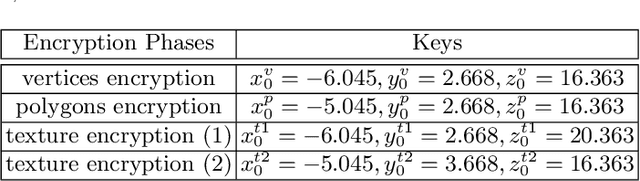

Abstract: In the coming Virtual/Augmented Reality (VR/AR) era, 3D contents will become as popular as images and videos are today. The security and privacy of these 3D contents should be taken into consideration. 3D contents contain surface models and solid models. The surface models include point clouds, meshes and textured models. Previous work mainly focuses on the encryption of solid models, point clouds and meshes. This work focuses on the most complicated case, the 3D textured model. We propose an encryption method for 3D textured models based on the 3D Lu chaotic mapping. We encrypt the vertices, the polygons and the textures of 3D models separately using the 3D Lu chaotic mapping. Then the encrypted vertices, edges and texture maps are composited together to form the final encrypted 3D textured model. The experimental results reveal that our method can encrypt and decrypt 3D textured models correctly. In addition, our method can resist several attacks, such as brute-force attacks and statistical attacks.
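
To give a feel for chaos-based encryption of texture data, the sketch below integrates the Lü system (dx/dt = a(y - x), dy/dt = -xz + cy, dz/dt = xy - bz, with the commonly used parameters a = 36, b = 3, c = 20), turns the trajectory into a byte keystream, and XORs it with a byte string standing in for a texture. Everything beyond the use of a Lu-type chaotic system is an assumption: the abstract does not say how the mapping is discretized or applied, so the RK4 integration, the byte-extraction rule, the XOR scheme, and all names here are hypothetical. It is an illustration only and should not be treated as a secure cipher.

```python
def lu_derivative(state, a=36.0, b=3.0, c=20.0):
    """Right-hand side of the Lu system with commonly used parameters."""
    x, y, z = state
    return (a * (y - x), -x * z + c * y, x * y - b * z)

def rk4_step(state, dt):
    """Advance the system by one fourth-order Runge-Kutta step."""
    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))
    k1 = lu_derivative(state)
    k2 = lu_derivative(shifted(state, k1, dt / 2))
    k3 = lu_derivative(shifted(state, k2, dt / 2))
    k4 = lu_derivative(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (p + 2 * q + 2 * r + w)
        for s, p, q, r, w in zip(state, k1, k2, k3, k4)
    )

def keystream(key, n_bytes, dt=0.005, burn_in=2000):
    """Derive n_bytes from the trajectory; the key is the initial state.

    The byte-extraction rule is an illustrative assumption.
    """
    state = key
    for _ in range(burn_in):  # discard the transient before sampling
        state = rk4_step(state, dt)
    out = bytearray()
    while len(out) < n_bytes:
        state = rk4_step(state, dt)
        for value in state:
            out.append(int(abs(value) * 1e6) % 256)
    return bytes(out[:n_bytes])

def xor_cipher(data, key):
    """XOR data with the chaotic keystream; applying it twice decrypts."""
    stream = keystream(key, len(data))
    return bytes(d ^ k for d, k in zip(data, stream))

# Stand-in for raw texture bytes (a real texture would be image data).
texture = bytes(range(48))
secret_key = (0.1, 0.2, 0.3)
encrypted = xor_cipher(texture, secret_key)
decrypted = xor_cipher(encrypted, secret_key)
wrong_key_result = xor_cipher(encrypted, (0.1001, 0.2, 0.3))
```

The same keystream idea could in principle drive a perturbation of vertex coordinates or a permutation of polygon indices, but the abstract does not describe those steps in enough detail to reproduce them here.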
