Bruno Brito

Lab2Car: A Versatile Wrapper for Deploying Experimental Planners in Complex Real-world Environments

Sep 14, 2024

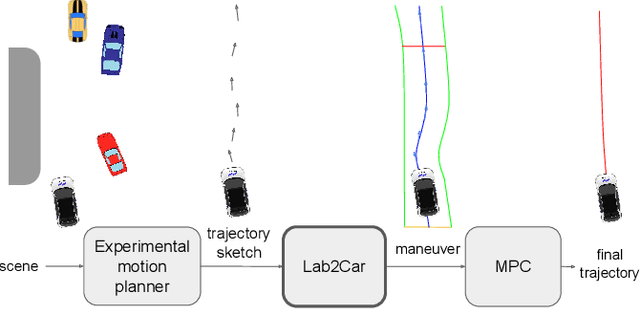

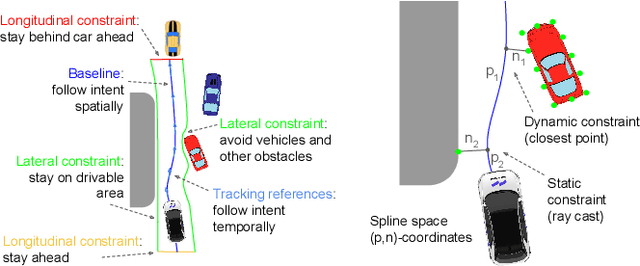

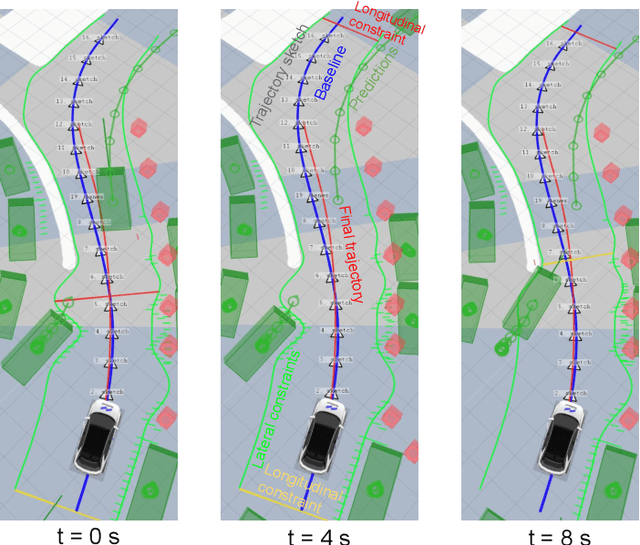

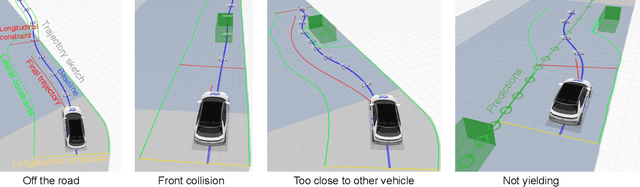

Abstract:Human-level autonomous driving is an ever-elusive goal, with planning and decision making -- the cognitive functions that determine driving behavior -- posing the greatest challenge. Despite a proliferation of promising approaches, progress is stifled by the difficulty of deploying experimental planners in naturalistic settings. In this work, we propose Lab2Car, an optimization-based wrapper that can take a trajectory sketch from an arbitrary motion planner and convert it to a safe, comfortable, dynamically feasible trajectory that the car can follow. This allows motion planners that do not provide such guarantees to be safely tested and optimized in real-world environments. We demonstrate the versatility of Lab2Car by using it to deploy a machine learning (ML) planner and a search-based planner on self-driving cars in Las Vegas. The resulting systems handle challenging scenarios, such as cut-ins, overtaking, and yielding, in complex urban environments like casino pick-up/drop-off areas. Our work paves the way for quickly deploying and evaluating candidate motion planners in realistic settings, ensuring rapid iteration and accelerating progress towards human-level autonomy.

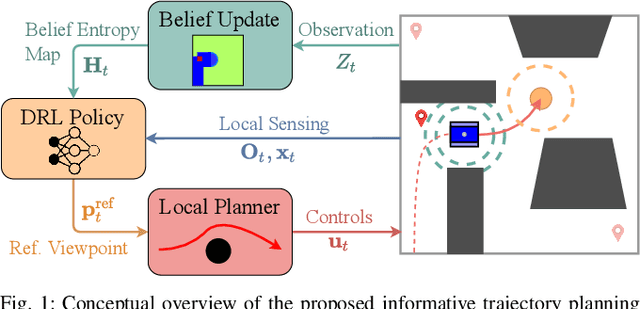

Where to Look Next: Learning Viewpoint Recommendations for Informative Trajectory Planning

Mar 04, 2022

Abstract:Search missions require motion planning and navigation methods for information gathering that continuously replan based on new observations of the robot's surroundings. Current methods for information gathering, such as Monte Carlo Tree Search, are capable of reasoning over long horizons, but they are computationally expensive. An alternative for fast online execution is to train, offline, an information gathering policy, which indirectly reasons about the information value of new observations. However, these policies lack safety guarantees and do not account for the robot dynamics. To overcome these limitations we train an information-aware policy via deep reinforcement learning, that guides a receding-horizon trajectory optimization planner. In particular, the policy continuously recommends a reference viewpoint to the local planner, such that the resulting dynamically feasible and collision-free trajectories lead to observations that maximize the information gain and reduce the uncertainty about the environment. In simulation tests in previously unseen environments, our method consistently outperforms greedy next-best-view policies and achieves competitive performance compared to Monte Carlo Tree Search, in terms of information gains and coverage time, with a reduction in execution time by three orders of magnitude.

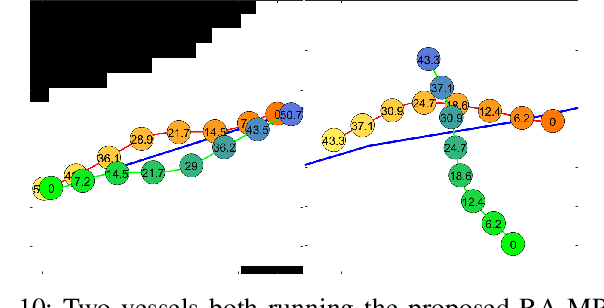

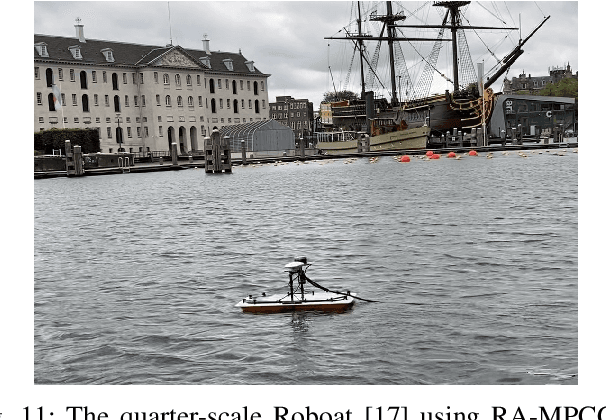

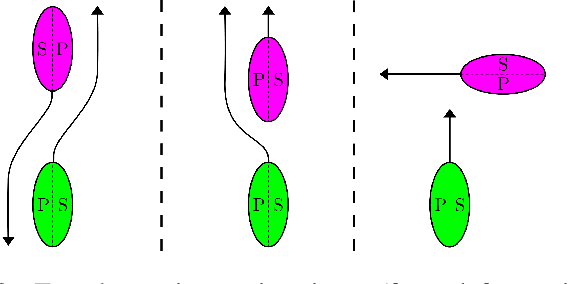

Regulations Aware Motion Planning for Autonomous Surface Vessels in Urban Canals

Feb 24, 2022

Abstract:In unstructured urban canals, regulation-aware interactions with other vessels are essential for collision avoidance and social compliance. In this paper, we propose a regulation-aware motion planning framework for Autonomous Surface Vessels (ASVs) that accounts for dynamic and static obstacles. Our method builds upon local model predictive contouring control (LMPCC) to generate motion plans satisfying kino-dynamic and collision constraints in real-time while including regulation awareness. To incorporate regulations in the planning stage, we propose a cost function encouraging compliance with rules describing interactions with other vessels similar to COLlision avoidance REGulations at sea (COLREGs). These regulations are essential to make an ASV behave in a predictable and socially compliant manner with regard to other vessels. We compare the framework against baseline methods and show that our motion planner avoids moving obstacles more effectively while complying with the regulations. Additionally, we present experimental results in an outdoor environment.

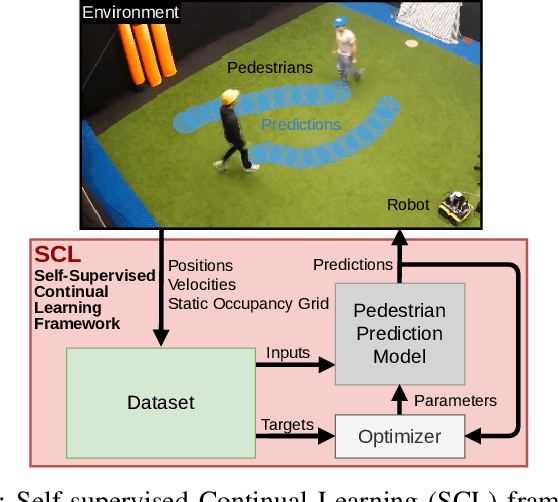

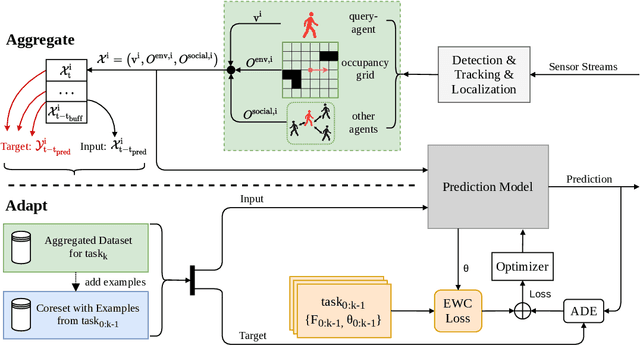

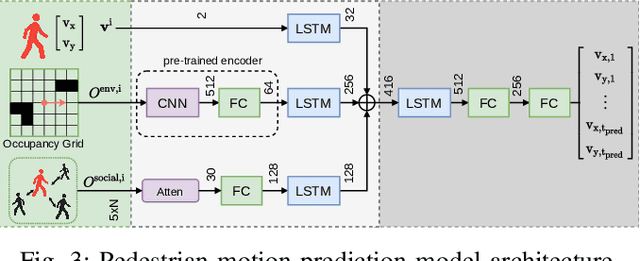

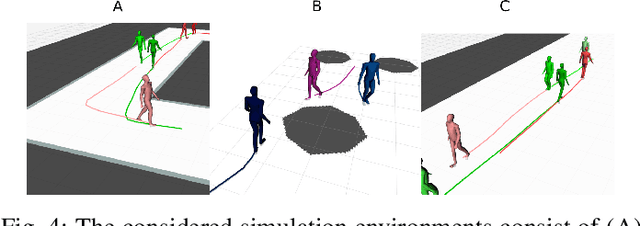

Improving Pedestrian Prediction Models with Self-Supervised Continual Learning

Feb 15, 2022

Abstract:Autonomous mobile robots require accurate human motion predictions to safely and efficiently navigate among pedestrians, whose behavior may adapt to environmental changes. This paper introduces a self-supervised continual learning framework to continuously improve data-driven pedestrian prediction models online across various scenarios. In particular, we exploit online streams of pedestrian data, commonly available from the robot's detection and tracking pipeline, to refine the prediction model and its performance in unseen scenarios. To avoid the forgetting of previously learned concepts, a problem known as catastrophic forgetting, our framework includes a regularization loss that penalizes changes to model parameters that are important for previous scenarios, and it retrains on a set of previous examples to retain past knowledge. Experimental results on real and simulation data show that our approach can improve prediction performance in unseen scenarios while retaining knowledge from seen scenarios when compared to naively training the prediction model online.
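The regularization idea in this abstract can be illustrated with a small sketch. The abstract does not say which regularizer is used, so the code below assumes a quadratic, importance-weighted penalty in the style of elastic weight consolidation; the function name `regularized_loss` and the parameters `importance` and `strength` are hypothetical and chosen only for illustration.

```python
import numpy as np

def regularized_loss(task_loss, params, anchor_params, importance, strength=1.0):
    """Add an importance-weighted quadratic penalty to a task loss.

    Assumption (not from the abstract): the penalty has the form
    0.5 * strength * sum_k importance_k * (params_k - anchor_k)^2,
    where anchor_params are the weights learned on earlier scenarios.
    """
    params = np.asarray(params, dtype=float)
    anchor_params = np.asarray(anchor_params, dtype=float)
    importance = np.asarray(importance, dtype=float)
    # Parameters with high importance are pulled back toward their old values.
    penalty = 0.5 * strength * np.sum(importance * (params - anchor_params) ** 2)
    return task_loss + penalty

# Moving an important parameter costs more than moving an unimportant one.
old = np.array([0.0, 0.0])
weights = np.array([4.0, 0.1])
loss_important = regularized_loss(0.0, [1.0, 0.0], old, weights)
loss_unimportant = regularized_loss(0.0, [0.0, 1.0], old, weights)
```

In a sketch like this, rehearsal on stored examples would enter through `task_loss`, which would be computed on a mix of new and previously seen samples.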

Decentralized Probabilistic Multi-Robot Collision Avoidance Using Buffered Uncertainty-Aware Voronoi Cells

Jan 11, 2022

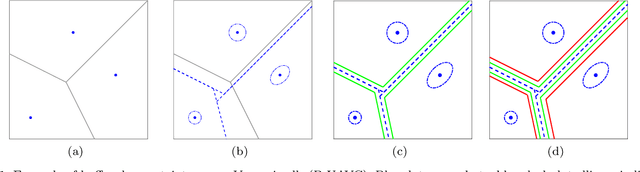

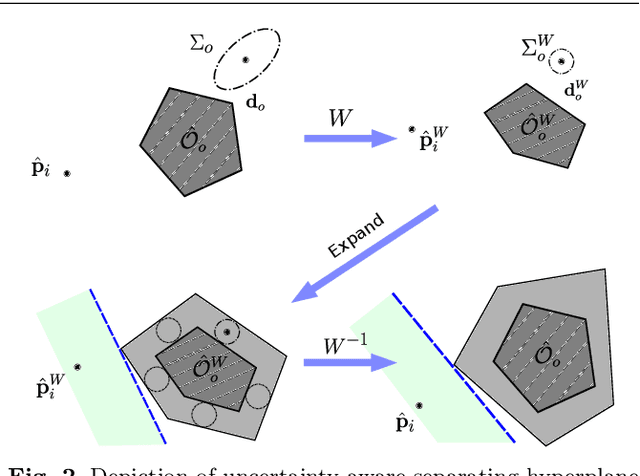

Abstract:In this paper, we present a decentralized and communication-free collision avoidance approach for multi-robot systems that accounts for both robot localization and sensing uncertainties. The approach relies on the computation of an uncertainty-aware safe region for each robot to navigate among other robots and static obstacles in the environment, under the assumption of Gaussian-distributed uncertainty. In particular, at each time step, we construct a chance-constrained buffered uncertainty-aware Voronoi cell (B-UAVC) for each robot given a specified collision probability threshold. Probabilistic collision avoidance is achieved by constraining the motion of each robot to be within its corresponding B-UAVC, i.e. the collision probability between the robots and obstacles remains below the specified threshold. The proposed approach is decentralized, communication-free, scalable with the number of robots and robust to robots' localization and sensing uncertainties. We applied the approach to single-integrator, double-integrator, differential-drive robots, and robots with general nonlinear dynamics. Extensive simulations and experiments with a team of ground vehicles, quadrotors, and heterogeneous robot teams are performed to analyze and validate the proposed approach.
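As a rough illustration of the safe-region idea, the sketch below builds a deterministic buffered Voronoi cell as a set of half-plane constraints. It is not the B-UAVC construction itself: the uncertainty-dependent part is stood in for by a hypothetical scalar `extra_buffer`, which in the actual method would depend on the Gaussian covariances and the collision probability threshold. All function and parameter names are assumptions.

```python
import numpy as np

def buffered_voronoi_halfspaces(p_i, neighbors, safety_radius, extra_buffer=0.0):
    """Return (A, b) so that A @ p <= b describes robot i's buffered cell.

    Each neighbor contributes the perpendicular bisector between p_i and p_j,
    shifted toward robot i by safety_radius + extra_buffer.
    """
    p_i = np.asarray(p_i, dtype=float)
    A, b = [], []
    for p_j in neighbors:
        p_j = np.asarray(p_j, dtype=float)
        diff = p_j - p_i
        normal = diff / np.linalg.norm(diff)  # unit vector pointing at neighbor j
        midpoint = (p_i + p_j) / 2.0          # point on the unbuffered bisector
        A.append(normal)
        b.append(normal @ midpoint - safety_radius - extra_buffer)
    return np.array(A), np.array(b)

def in_cell(p, A, b):
    """Check whether position p satisfies every half-plane constraint."""
    return bool(np.all(A @ np.asarray(p, dtype=float) <= b + 1e-12))

# Robot at the origin with one neighbor 2 m away along x and a 0.5 m radius.
A, b = buffered_voronoi_halfspaces([0.0, 0.0], [[2.0, 0.0]], safety_radius=0.5)
inside = in_cell([0.4, 0.0], A, b)
outside = in_cell([0.6, 0.0], A, b)
```

Because each robot only needs its own position and the observed positions of its neighbors, a construction of this kind can run without communication, which matches the decentralized setting described above.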

Learning Interaction-aware Guidance Policies for Motion Planning in Dense Traffic Scenarios

Jul 09, 2021

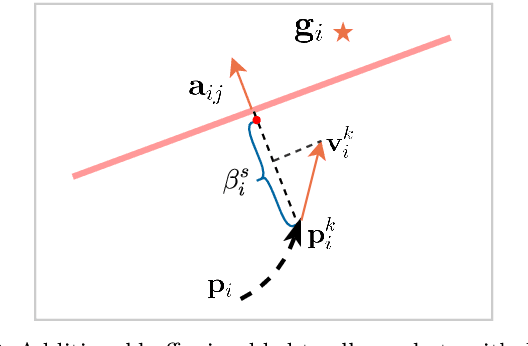

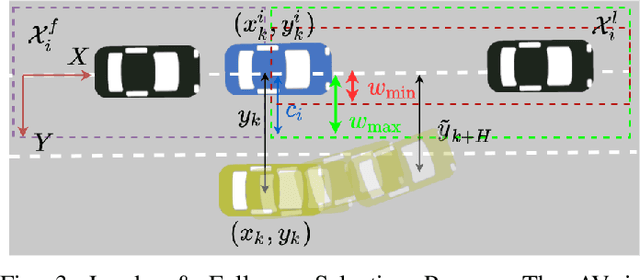

Abstract:Autonomous navigation in dense traffic scenarios remains challenging for autonomous vehicles (AVs) because the intentions of other drivers are not directly observable and AVs have to deal with a wide range of driving behaviors. To maneuver through dense traffic, AVs must be able to reason about how their actions affect others (interaction model) and exploit this reasoning to navigate through dense traffic safely. This paper presents a novel framework for interaction-aware motion planning in dense traffic scenarios. We explore the connection between human driving behavior and drivers' velocity changes when interacting. Hence, we propose to learn, via deep Reinforcement Learning (RL), an interaction-aware policy providing global guidance about the cooperativeness of other vehicles to an optimization-based planner ensuring safety and kinematic feasibility through constraint satisfaction. The learned policy can reason and guide the local optimization-based planner with interactive behavior to pro-actively merge in dense traffic while remaining safe in case the other vehicles do not yield. We present qualitative and quantitative results in highly interactive simulation environments (highway merging and unprotected left turns) against two baseline approaches, a learning-based and an optimization-based method. The presented results demonstrate that our method significantly reduces the number of collisions and increases the success rate with respect to both learning-based and optimization-based baselines.

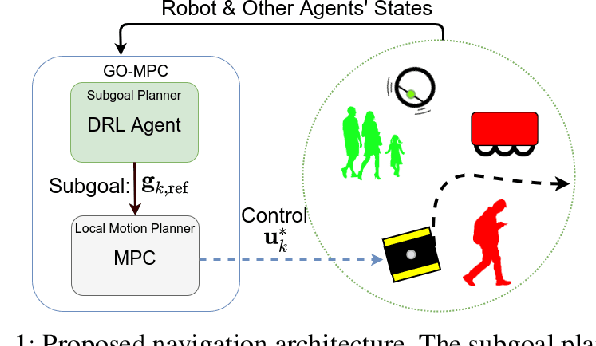

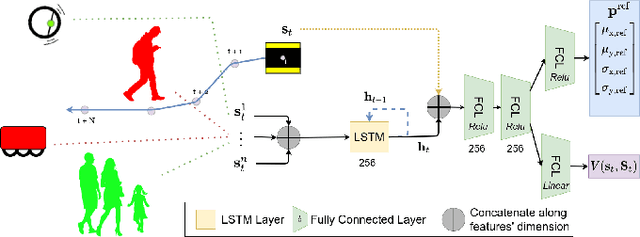

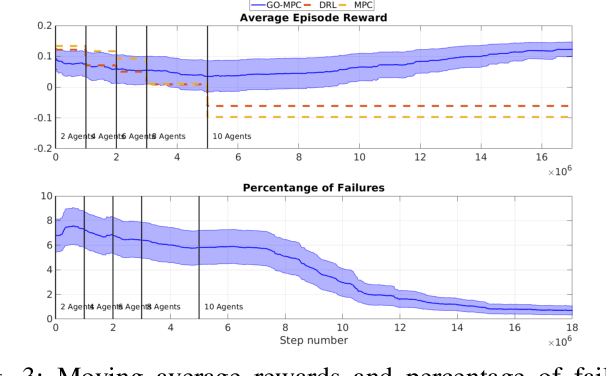

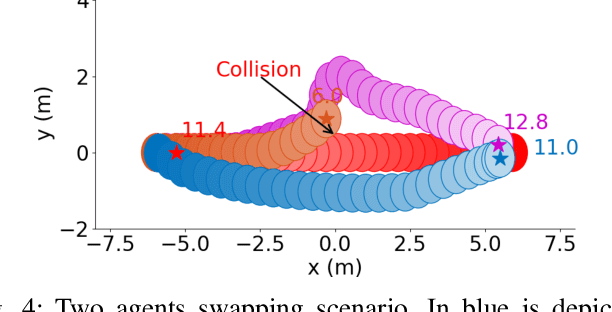

Where to go next: Learning a Subgoal Recommendation Policy for Navigation Among Pedestrians

Feb 26, 2021

Abstract:Robotic navigation in environments shared with other robots or humans remains challenging because the intentions of the surrounding agents are not directly observable and the environment conditions are continuously changing. Local trajectory optimization methods, such as model predictive control (MPC), can deal with those changes but require global guidance, which is not trivial to obtain in crowded scenarios. This paper proposes to learn, via deep Reinforcement Learning (RL), an interaction-aware policy that provides long-term guidance to the local planner. In particular, in simulations with cooperative and non-cooperative agents, we train a deep network to recommend a subgoal for the MPC planner. The recommended subgoal is expected to help the robot in making progress towards its goal and accounts for the expected interaction with other agents. Based on the recommended subgoal, the MPC planner then optimizes the inputs for the robot satisfying its kinodynamic and collision avoidance constraints. Our approach is shown to substantially improve the navigation performance in terms of number of collisions as compared to prior MPC frameworks, and in terms of both travel time and number of collisions compared to deep RL methods in cooperative, competitive and mixed multiagent scenarios.

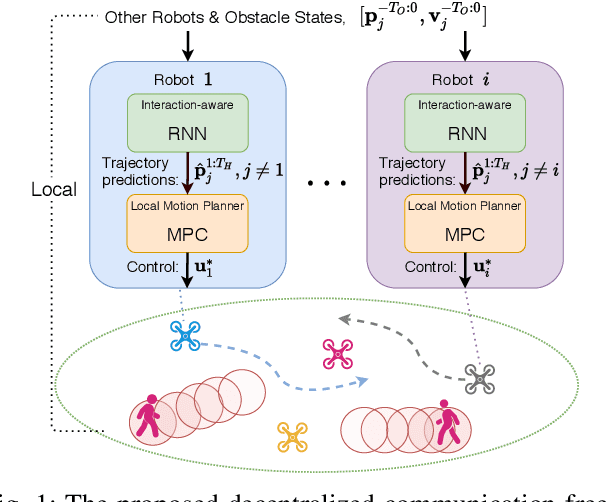

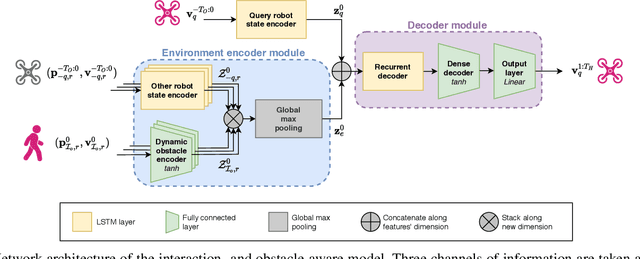

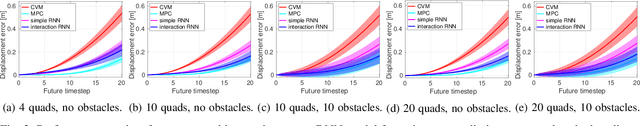

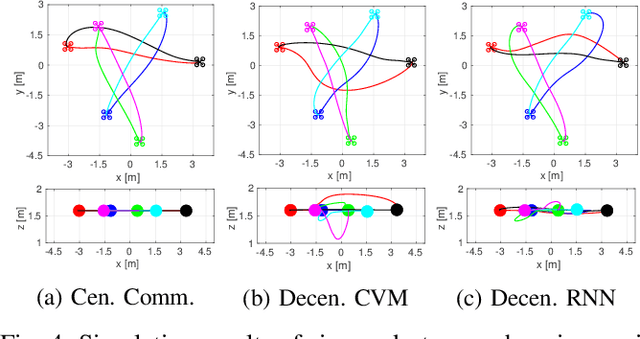

Learning Interaction-Aware Trajectory Predictions for Decentralized Multi-Robot Motion Planning in Dynamic Environments

Feb 23, 2021

Abstract:This paper presents a data-driven decentralized trajectory optimization approach for multi-robot motion planning in dynamic environments. When navigating in a shared space, each robot needs accurate motion predictions of neighboring robots to achieve predictive collision avoidance. These motion predictions can be obtained among robots by sharing their future planned trajectories with each other via communication. However, such communication may be neither available nor reliable in practice. In this paper, we introduce a novel trajectory prediction model based on recurrent neural networks (RNN) that can learn multi-robot motion behaviors from demonstrated trajectories generated using a centralized sequential planner. The learned model can run efficiently online for each robot and provide interaction-aware trajectory predictions of its neighbors based on observations of their history states. We then incorporate the trajectory prediction model into a decentralized model predictive control (MPC) framework for multi-robot collision avoidance. Simulation results show that our decentralized approach can achieve a comparable level of performance to a centralized planner while being communication-free and scalable to a large number of robots. We also validate our approach with a team of quadrotors in real-world experiments.

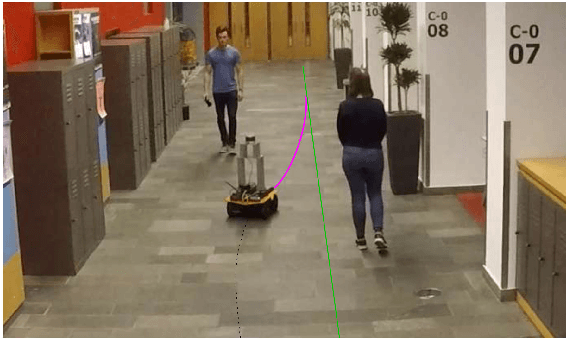

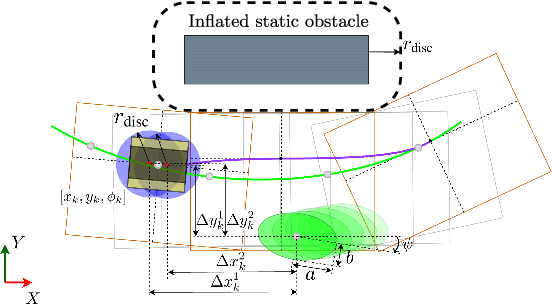

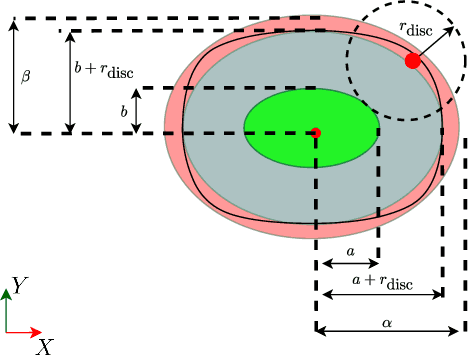

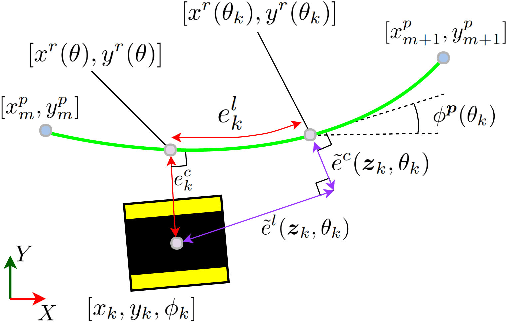

Model Predictive Contouring Control for Collision Avoidance in Unstructured Dynamic Environments

Oct 20, 2020

Abstract:This paper presents a method for local motion planning in unstructured environments with static and moving obstacles, such as humans. Given a reference path and speed, our optimization-based receding-horizon approach computes a local trajectory that minimizes the tracking error while avoiding obstacles. We build on nonlinear model-predictive contouring control (MPCC) and extend it to incorporate a static map by computing, online, a set of convex regions in free space. We model moving obstacles as ellipsoids and provide a correct bound to approximate the collision region, given by the Minkowski sum of an ellipse and a circle. Our framework is agnostic to the robot model. We present experimental results with a mobile robot navigating in indoor environments populated with humans. Our method is executed fully onboard without the need for external support and can be applied to other robot morphologies such as autonomous cars.

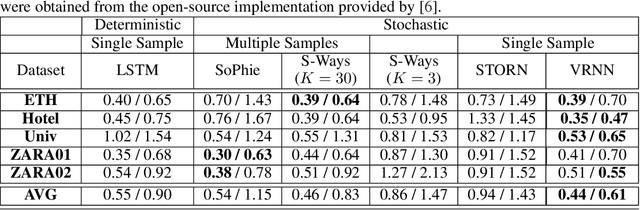

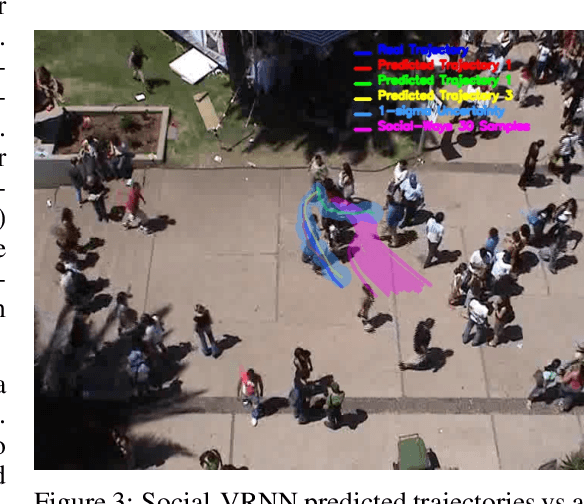

Social-VRNN: One-Shot Multi-modal Trajectory Prediction for Interacting Pedestrians

Oct 18, 2020

Abstract:Prediction of human motions is key for safe navigation of autonomous robots among humans. In cluttered environments, several motion hypotheses may exist for a pedestrian, due to their interactions with the environment and other pedestrians. Previous works for estimating multiple motion hypotheses require a large number of samples, which limits their applicability in real-time motion planning. In this paper, we present a variational learning approach for interaction-aware and multi-modal trajectory prediction based on deep generative neural networks. Our approach can achieve faster convergence and requires significantly fewer samples compared to state-of-the-art methods. Experimental results on real and simulation data show that our model can effectively learn to infer different trajectories. We compare our method with three baseline approaches and present performance results demonstrating that our generative model can achieve higher accuracy for trajectory prediction by producing diverse trajectories.

* Accepted, 12 pages, 4 figures
