Boris Naujoks

Benchmarking that Matters: Rethinking Benchmarking for Practical Impact

Nov 15, 2025

Abstract:Benchmarking has driven scientific progress in Evolutionary Computation, yet current practices fall short of real-world needs. Widely used synthetic suites such as BBOB and CEC isolate algorithmic phenomena but poorly reflect the structure, constraints, and information limitations of continuous and mixed-integer optimization problems in practice. This disconnect leads to the misuse of benchmarking suites for competitions, automated algorithm selection, and industrial decision-making, despite these suites being designed for different purposes. We identify key gaps in current benchmarking practices and tooling, including limited availability of real-world-inspired problems, missing high-level features, and challenges in multi-objective and noisy settings. We propose a vision centered on curated real-world-inspired benchmarks, practitioner-accessible feature spaces and community-maintained performance databases. Real progress requires coordinated effort: A living benchmarking ecosystem that evolves with real-world insights and supports both scientific understanding and industrial use.

Tools for Landscape Analysis of Optimisation Problems in Procedural Content Generation for Games

Feb 16, 2023

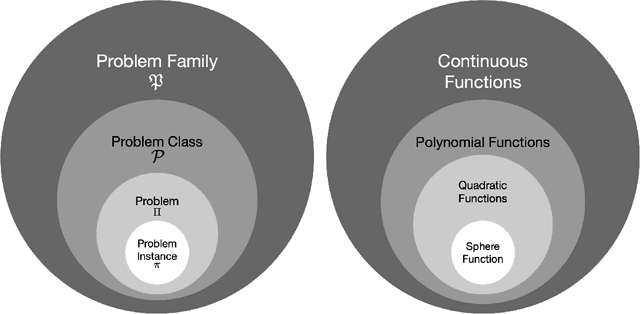

Abstract:The term Procedural Content Generation (PCG) refers to the (semi-)automatic generation of game content by algorithmic means, and its methods are becoming increasingly popular in game-oriented research and industry. A special class of these methods, commonly known as search-based PCG, treats the given task as an optimisation problem. Such problems are predominantly tackled by evolutionary algorithms. We demonstrate in this paper that obtaining more information about the defined optimisation problem can substantially improve our understanding of how to approach the generation of content. To do so, we present and discuss three efficient analysis tools, namely diagonal walks, the estimation of high-level properties, and problem similarity measures. We discuss the purpose of each of the considered methods in the context of PCG and provide guidelines for interpreting the results. In this way, we aim to provide methods for the comparison of PCG approaches and, eventually, to increase the quality and practicality of generated content in industry.

Optimally Weighted Ensembles of Regression Models: Exact Weight Optimization and Applications

Jun 22, 2022

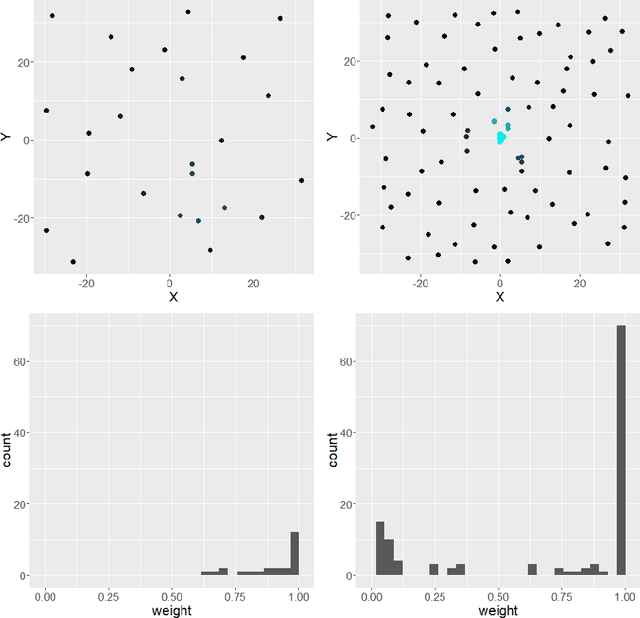

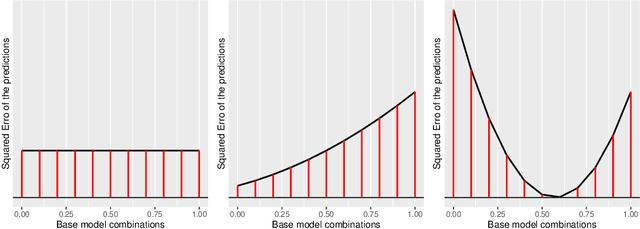

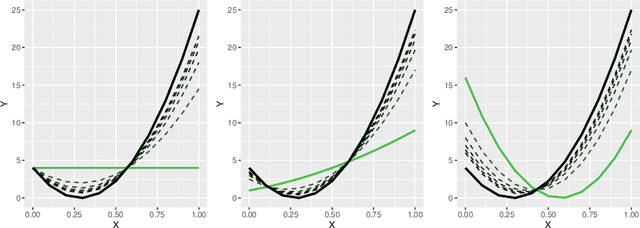

Abstract:Automated model selection is often proposed to users to choose which machine learning model (or method) to apply to a given regression task. In this paper, we show that combining different regression models can yield better results than selecting a single ('best') regression model, and outline an efficient method that obtains an optimally weighted convex linear combination from a heterogeneous set of regression models. More specifically, a heuristic weight optimization, used in a preceding conference paper, is replaced by an exact optimization algorithm using convex quadratic programming. We prove convexity of the quadratic programming formulation for the straightforward formulation and for a formulation with weighted data points. The novel weight optimization is not only (more) exact but also more efficient. The methods we develop in this paper are implemented and made available as open source on GitHub. They can be executed on commonly available hardware and offer a transparent and easy-to-interpret interface. The results indicate that the approach outperforms model selection methods on a range of data sets, including data sets with mixed variable types from drug discovery applications.
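To make the idea of a convex linear combination concrete, the following is a minimal sketch of fitting ensemble weights that are non-negative and sum to one by minimising squared error. It is not the authors' implementation: the abstract describes an exact convex quadratic programming solver, whereas this sketch swaps in a simple Frank-Wolfe iteration with exact line search on the same convex objective. The function names (`fit_convex_weights`, `combine`, `mse`) and the toy data are assumptions made for illustration.

```python
def mse(pred, y):
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(pred, y)) / len(y)


def combine(preds, w):
    """Weighted sum of model predictions; preds[m][i] is model m on sample i."""
    n = len(preds[0])
    return [sum(w[m] * preds[m][i] for m in range(len(preds))) for i in range(n)]


def fit_convex_weights(preds, y, iters=2000):
    """Frank-Wolfe with exact line search (illustrative, not the paper's QP solver) for
    min_w ||sum_m w[m] * preds[m] - y||^2  subject to  w >= 0, sum(w) = 1."""
    m = len(preds)
    # Start at the best single model, which is a vertex of the simplex.
    best = min(range(m), key=lambda k: mse(preds[k], y))
    w = [1.0 if k == best else 0.0 for k in range(m)]
    for _ in range(iters):
        fit = combine(preds, w)
        resid = [f - t for f, t in zip(fit, y)]
        # Gradient component k is proportional to <preds[k], resid>.
        grad = [sum(p * r for p, r in zip(preds[k], resid)) for k in range(m)]
        k = min(range(m), key=lambda j: grad[j])
        # Change in the ensemble prediction when moving toward vertex k.
        d = [p - f for p, f in zip(preds[k], fit)]
        dd = sum(v * v for v in d)
        if dd == 0.0:
            break
        # Exact minimiser of ||resid + step * d||^2, clipped to [0, 1].
        step = -sum(r * v for r, v in zip(resid, d)) / dd
        step = max(0.0, min(1.0, step))
        if step == 0.0:
            break  # no feasible descent direction left
        w = [(1.0 - step) * wj for wj in w]
        w[k] += step
    return w


# Toy data (hypothetical): one model overestimates, the other underestimates.
y = [1.0, 2.0, 3.0, 4.0]
preds = [[1.5, 2.5, 3.5, 4.5], [0.5, 1.5, 2.5, 3.5]]
weights = fit_convex_weights(preds, y)
ensemble_error = mse(combine(preds, weights), y)
```

Because the iteration starts at the best single model and every step can only reduce the squared error, the resulting ensemble is never worse on the fitting data than model selection would be. For an exact solution, a dedicated QP solver would be the natural replacement.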

Automating Speedrun Routing: Overview and Vision

Jun 02, 2021

Abstract:Speedrunning in general means to play a video game fast, i.e. using all means at one's disposal to achieve a given goal in the least amount of time possible. To do so, a speedrun must be planned in advance, or routed, as it is referred to by the community. This paper focuses on discovering challenges and defining models needed when trying to approach the problem of routing algorithmically. It provides an overview of relevant speedrunning literature, extracting vital information and formulating criticism. Important categorizations are pointed out and a nomenclature is built to support professional discussion. Different concepts of graph representations are presented and their potential is discussed with regard to solving the speedrun routing optimization problem. Visions both for problem modeling and for solving are presented and assessed regarding suitability and expected challenges. This results in a vision of potential solutions and what will be addressed in the future.
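As an illustration of what a graph representation of routing could look like, the sketch below treats game states as nodes and the time needed to get from one state to another as edge weights, then finds the fastest route with Dijkstra's algorithm. This is only one simple modelling assumption and not necessarily one of the representations discussed in the paper; the state names and timings are hypothetical.

```python
import heapq


def fastest_route(graph, start, goal):
    """Dijkstra's algorithm; graph[u] is a list of (v, seconds) with seconds >= 0."""
    dist = {start: 0.0}
    prev = {}
    queue = [(0.0, start)]
    while queue:
        d, u = heapq.heappop(queue)
        if u == goal:
            break  # the goal's distance is final once it is popped
        if d > dist.get(u, float("inf")):
            continue  # stale queue entry
        for v, seconds in graph.get(u, []):
            nd = d + seconds
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(queue, (nd, v))
    if goal not in dist:
        return None, float("inf")
    # Walk the predecessor links back from the goal to the start.
    route = [goal]
    while route[-1] != start:
        route.append(prev[route[-1]])
    return route[::-1], dist[goal]


# Hypothetical game: a risky skip bypasses the first level but makes the boss slower.
game = {
    "start": [("level_1", 60.0), ("glitch_skip", 25.0)],
    "level_1": [("boss", 20.0)],
    "glitch_skip": [("boss", 40.0)],
    "boss": [],
}
route, total_seconds = fastest_route(game, "start", "goal_missing")
route, total_seconds = fastest_route(game, "start", "boss")
```

A plain shortest-path model ignores aspects such as collected items or execution risk, which would require richer state descriptions than single node labels.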

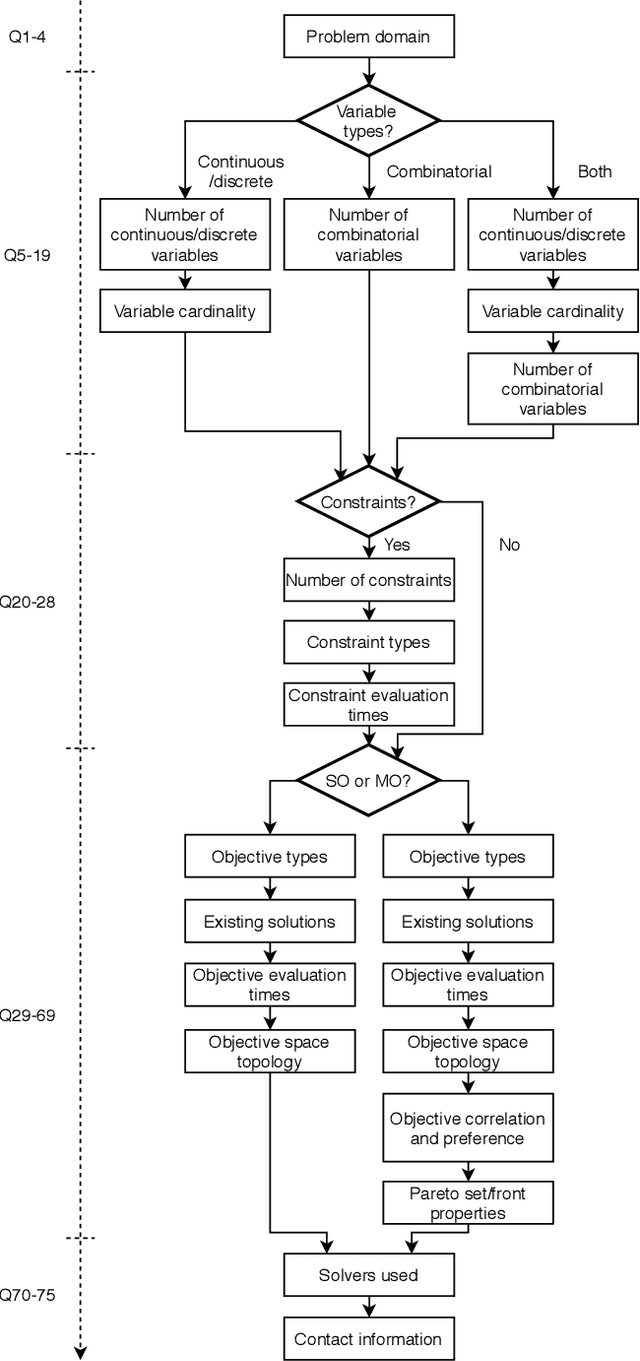

Identifying Properties of Real-World Optimisation Problems through a Questionnaire

Nov 11, 2020

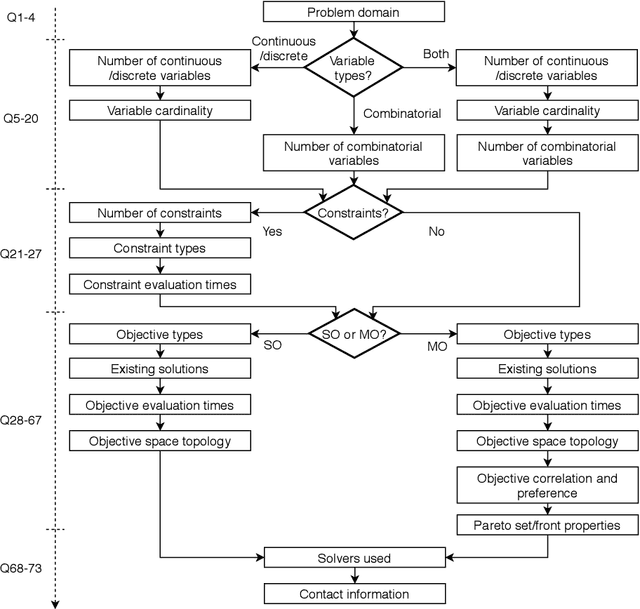

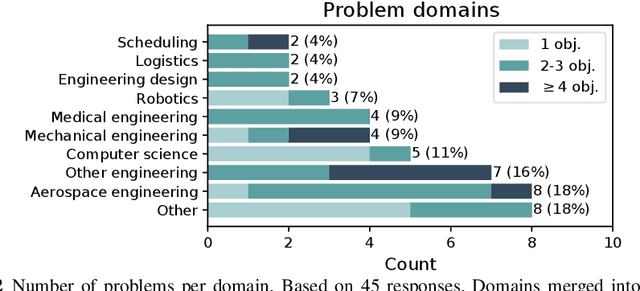

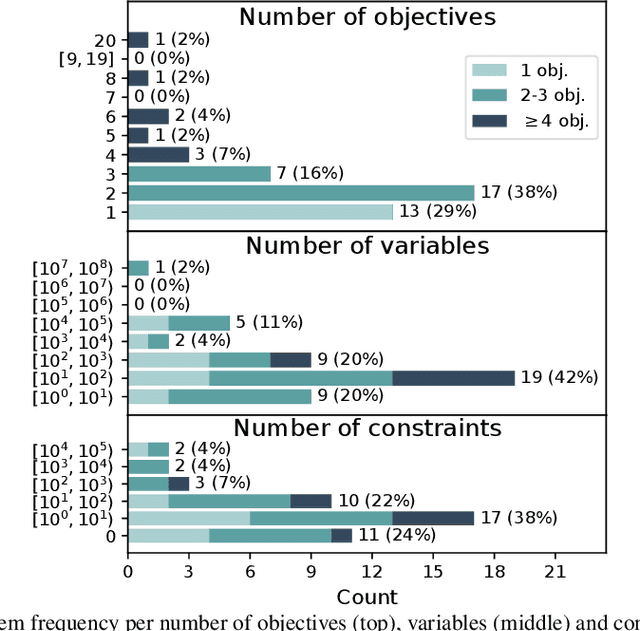

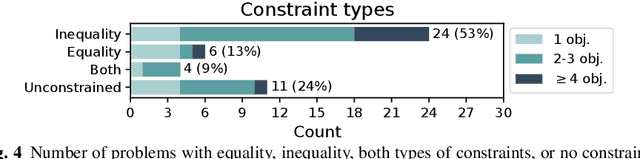

Abstract:Optimisation algorithms are commonly compared on benchmarks to get insight into performance differences. However, it is not clear how closely benchmarks match the properties of real-world problems because these properties are largely unknown. This work investigates the properties of real-world problems through a questionnaire to enable the design of future benchmark problems that more closely resemble those found in the real world. The results, while not representative, show that many problems possess at least one of the following properties: they are constrained, deterministic, have only continuous variables, require substantial computation times for both the objectives and the constraints, or allow a limited number of evaluations. Properties like known optimal solutions and analytical gradients are rarely available, limiting the options in guiding the optimisation process. These are all important aspects to consider when designing realistic benchmark problems. At the same time, objective functions are often reported to be black-box, and since many problem properties are unknown, the design of realistic benchmarks is difficult. To further improve the understanding of real-world problems, readers working on a real-world optimisation problem are encouraged to fill out the questionnaire: https://tinyurl.com/opt-survey

Benchmarking in Optimization: Best Practice and Open Issues

Jul 07, 2020

Abstract:This survey compiles ideas and recommendations from more than a dozen researchers with different backgrounds and from different institutes around the world. Promoting best practice in benchmarking is its main goal. The article discusses eight essential topics in benchmarking: clearly stated goals, well-specified problems, suitable algorithms, adequate performance measures, thoughtful analysis, effective and efficient designs, comprehensible presentations, and guaranteed reproducibility. The final goal is to provide well-accepted guidelines (rules) that might be useful for authors and reviewers. As benchmarking in optimization is an active and evolving field of research this manuscript is meant to co-evolve over time by means of periodic updates.

Towards Game-Playing AI Benchmarks via Performance Reporting Standards

Jul 06, 2020

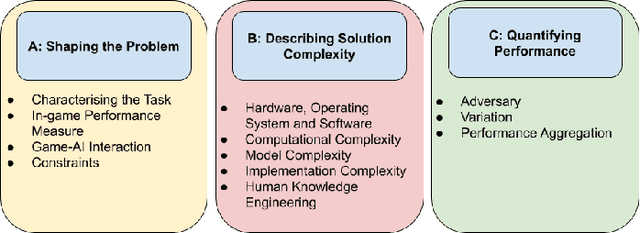

Abstract:While games have been used extensively as milestones to evaluate game-playing AI, there exists no standardised framework for reporting the obtained observations. As a result, it remains difficult to draw general conclusions about the strengths and weaknesses of different game-playing AI algorithms. In this paper, we propose reporting guidelines for AI game-playing performance that, if followed, provide information suitable for unbiased comparisons between different AI approaches. The vision we describe is to build benchmarks and competitions based on such guidelines in order to be able to draw more general conclusions about the behaviour of different AI algorithms, as well as the types of challenges different games pose.

Towards Realistic Optimization Benchmarks: A Questionnaire on the Properties of Real-World Problems

Apr 14, 2020

Abstract:Benchmarks are a useful tool for empirical performance comparisons. However, one of the main shortcomings of existing benchmarks is that it remains largely unclear how they relate to real-world problems. What does an algorithm's performance on a benchmark say about its potential on a specific real-world problem? This work aims to identify properties of real-world problems through a questionnaire on real-world single-, multi-, and many-objective optimization problems. Based on initial responses, a few challenges that have to be considered in the design of realistic benchmarks can already be identified. A key point for future work is to gather more responses to the questionnaire to allow an analysis of common combinations of properties. In turn, such common combinations can then be included in improved benchmark suites. To gather more data, the reader is invited to participate in the questionnaire at: https://tinyurl.com/opt-survey

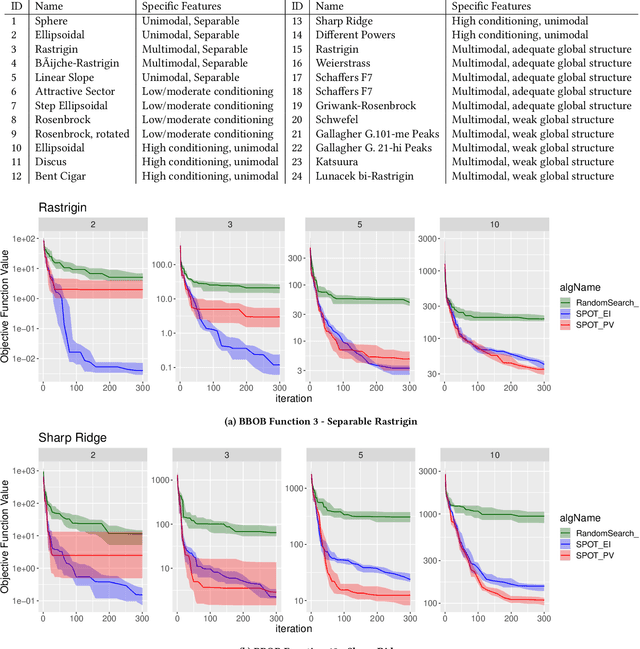

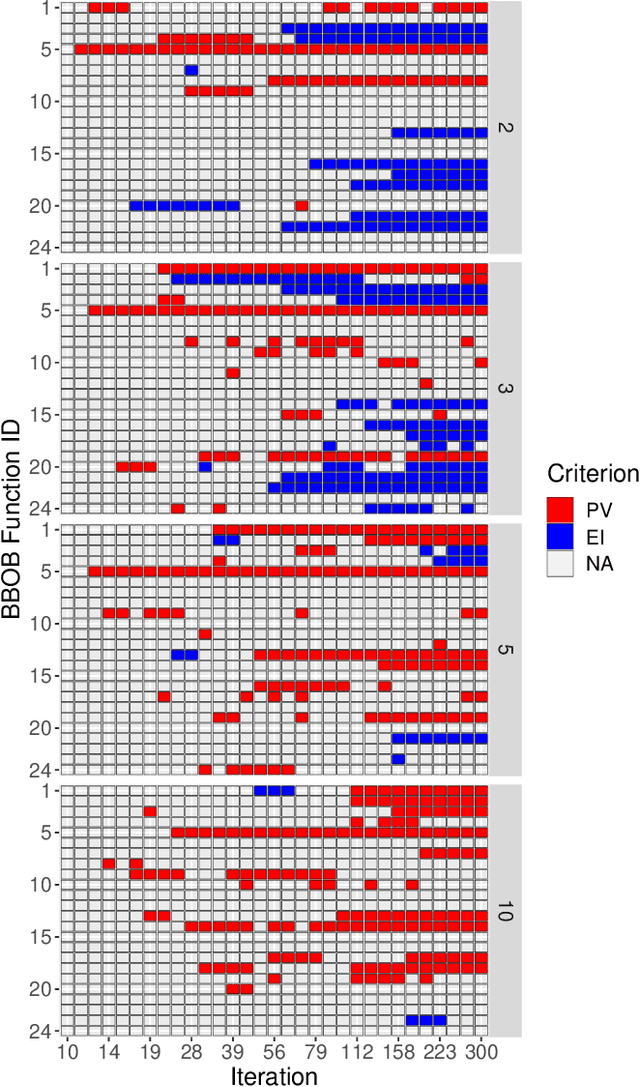

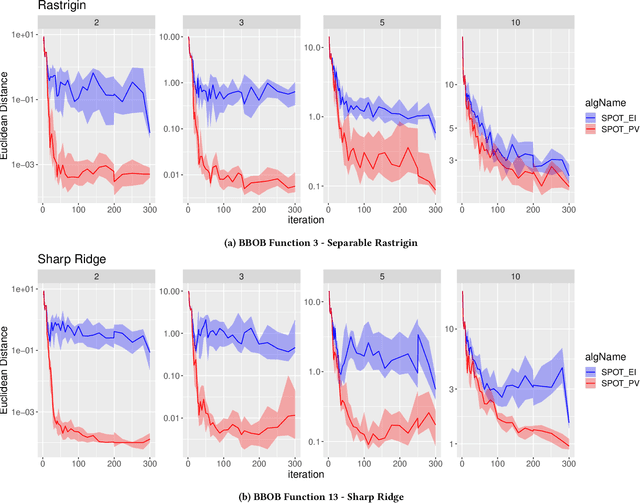

Expected Improvement versus Predicted Value in Surrogate-Based Optimization

Feb 17, 2020

Abstract:Surrogate-based optimization relies on so-called infill criteria (acquisition functions) to decide which point to evaluate next. When Kriging is used as the surrogate model of choice (also called Bayesian optimization), one of the most frequently chosen criteria is expected improvement. We argue that the popularity of expected improvement largely relies on its theoretical properties rather than empirically validated performance. A few results from the literature show evidence that, under certain conditions, expected improvement may perform worse than something as simple as the predicted value of the surrogate model. We benchmark both infill criteria in an extensive empirical study on the BBOB function set. This investigation includes a detailed study of the impact of problem dimensionality on algorithm performance. The results support the hypothesis that exploration loses importance with increasing problem dimensionality. A statistical analysis reveals that the purely exploitative search with the predicted value criterion performs better on most problems of five or higher dimensions. Possible reasons for these results are discussed. In addition, we give an in-depth guide for choosing the infill criteria based on prior knowledge about the problem at hand, its dimensionality, and the available budget.
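The difference between the two infill criteria can be sketched in a few lines. The snippet below uses the standard closed-form expected improvement for minimisation under a Gaussian prediction and compares it with simply picking the lowest predicted mean. The candidate values, the helper names, and the representation of a candidate as a `(mean, std)` pair are assumptions for illustration, not taken from the paper's experiments.

```python
import math


def expected_improvement(mu, sigma, f_best):
    """Expected improvement over f_best for minimisation, given N(mu, sigma^2)."""
    if sigma <= 0.0:
        return max(f_best - mu, 0.0)  # no uncertainty: improvement is deterministic
    z = (f_best - mu) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return (f_best - mu) * cdf + sigma * pdf


def pick_by_predicted_value(candidates):
    """Purely exploitative choice: index of the lowest predicted mean."""
    return min(range(len(candidates)), key=lambda i: candidates[i][0])


def pick_by_expected_improvement(candidates, f_best):
    """Index of the candidate with the largest expected improvement."""
    return max(
        range(len(candidates)),
        key=lambda i: expected_improvement(candidates[i][0], candidates[i][1], f_best),
    )


# Hypothetical surrogate predictions as (mean, std) pairs; best observed value is 1.0.
candidates = [(0.9, 0.01), (1.2, 1.0)]
f_best = 1.0
pv_choice = pick_by_predicted_value(candidates)
ei_choice = pick_by_expected_improvement(candidates, f_best)
```

In this toy case the predicted value criterion selects the first candidate, a small but near-certain gain, while expected improvement prefers the second, whose mean is worse but whose uncertainty is large. This is the exploration versus exploitation trade-off the abstract refers to.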

Surrogate-Assisted Partial Order-based Evolutionary Optimisation

Nov 01, 2016

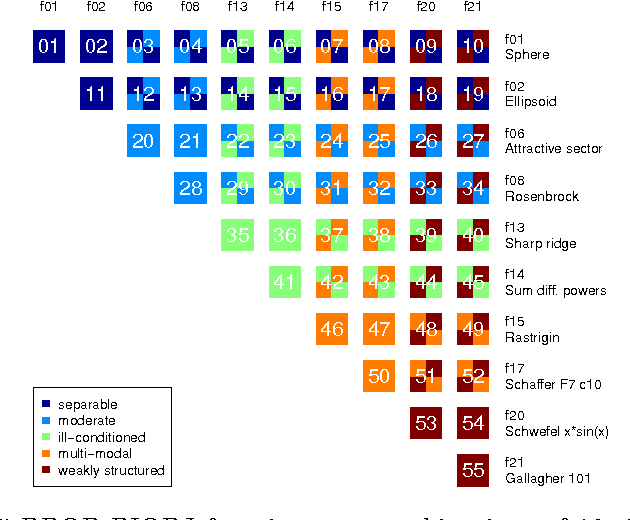

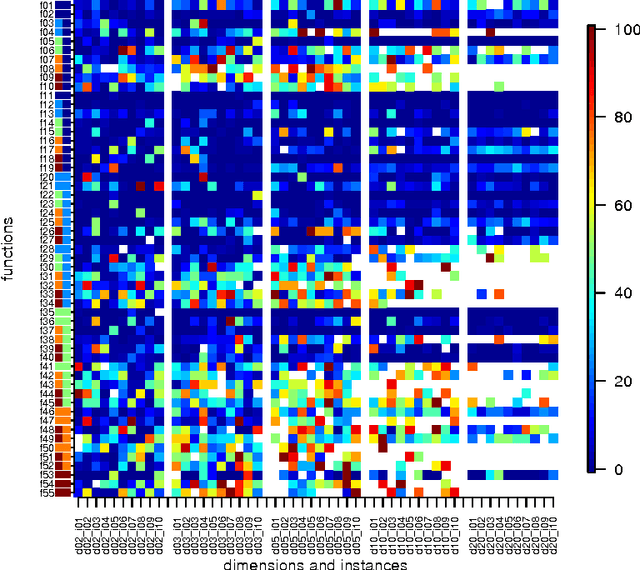

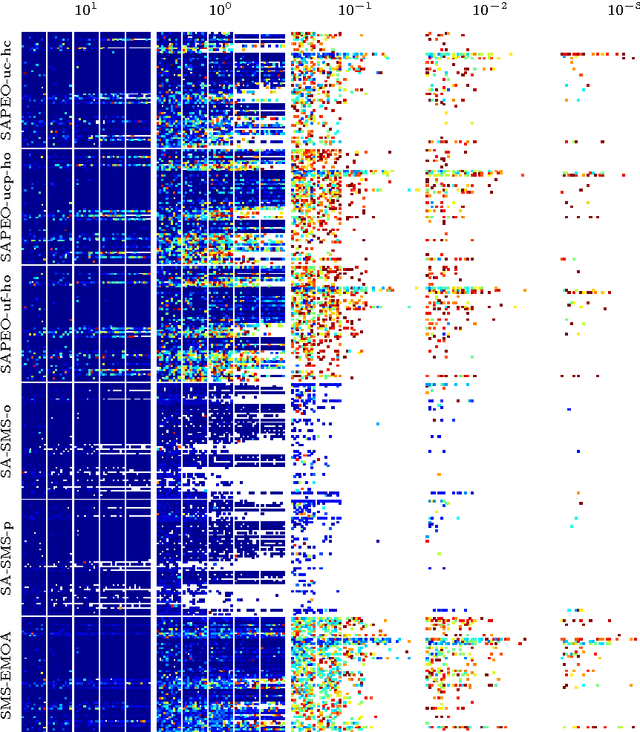

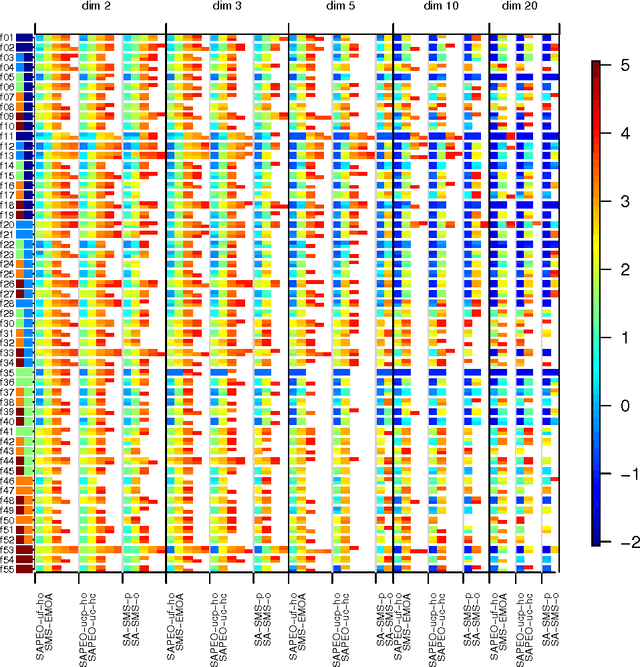

Abstract:In this paper, we propose a novel approach (SAPEO) to support the survival selection process in multi-objective evolutionary algorithms with surrogate models. It dynamically chooses individuals to evaluate exactly based on the model uncertainty and the distinctness of the population. We introduce variants that differ in terms of the risk they allow when doing survival selection. Here, the anytime performance of different SAPEO variants is evaluated in conjunction with an SMS-EMOA using the BBOB bi-objective benchmark. We compare the obtained results with the performance of the regular SMS-EMOA, as well as another surrogate-assisted approach. The results open up general questions about the applicability and required conditions for surrogate-assisted multi-objective evolutionary algorithms to be tackled in the future.
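The general idea of letting model uncertainty decide which individuals need an exact evaluation can be illustrated with a small sketch. This is not the SAPEO algorithm itself, whose details are not given in the abstract. It is a simplified stand-in that assumes each individual carries a predicted mean and standard deviation per objective (minimisation), builds confidence intervals with a hypothetical width factor `k`, and flags uncertain individuals whose Pareto comparison with another individual cannot be resolved from the intervals alone.

```python
def bounds(ind, k=2.0):
    """Per-objective interval [mean - k*std, mean + k*std]."""
    lo = [m - k * s for m, s in zip(ind["mean"], ind["std"])]
    hi = [m + k * s for m, s in zip(ind["mean"], ind["std"])]
    return lo, hi


def surely_dominates(a, b, k=2.0):
    """a's worst case is no worse than b's best case everywhere, better somewhere."""
    _, hi_a = bounds(a, k)
    lo_b, _ = bounds(b, k)
    return (all(x <= y for x, y in zip(hi_a, lo_b))
            and any(x < y for x, y in zip(hi_a, lo_b)))


def surely_incomparable(a, b, k=2.0):
    """Each individual is certainly better than the other in some objective."""
    lo_a, hi_a = bounds(a, k)
    lo_b, hi_b = bounds(b, k)
    a_wins = any(x < y for x, y in zip(hi_a, lo_b))
    b_wins = any(x < y for x, y in zip(hi_b, lo_a))
    return a_wins and b_wins


def needs_exact_evaluation(pop, k=2.0):
    """Indices of uncertain individuals involved in an unresolved comparison."""
    flagged = set()
    for i in range(len(pop)):
        for j in range(i + 1, len(pop)):
            a, b = pop[i], pop[j]
            resolved = (surely_dominates(a, b, k) or surely_dominates(b, a, k)
                        or surely_incomparable(a, b, k))
            if not resolved:
                # Only individuals with remaining uncertainty can benefit.
                flagged.update(
                    idx for idx in (i, j) if any(s > 0 for s in pop[idx]["std"])
                )
    return sorted(flagged)


# Hypothetical population: index 0 is exactly evaluated, 1 and 2 are predictions.
pop = [
    {"mean": [1.0, 1.0], "std": [0.0, 0.0]},
    {"mean": [3.0, 3.0], "std": [0.1, 0.1]},
    {"mean": [1.1, 0.9], "std": [0.2, 0.2]},
]
to_evaluate = needs_exact_evaluation(pop)
```

Here the second individual is clearly dominated despite its uncertainty, so only the third one, whose interval overlaps the exactly evaluated point, would be sent for an exact evaluation. A larger `k` corresponds to accepting less risk in the selection.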
