Tea Tušar

Characterization of Constrained Continuous Multiobjective Optimization Problems: A Performance Space Perspective

Feb 04, 2023

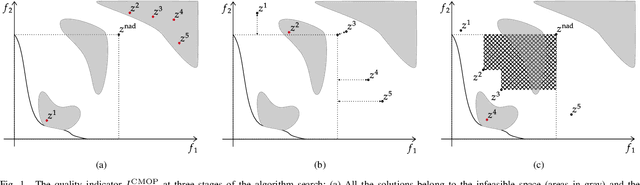

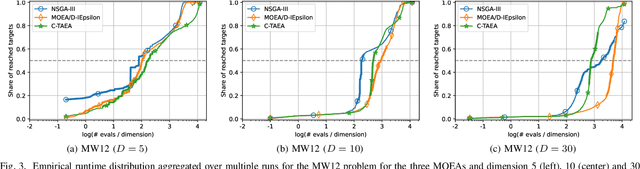

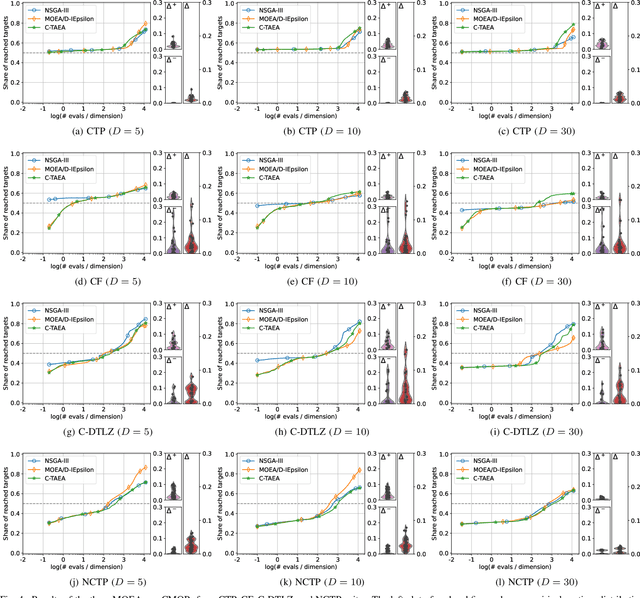

Abstract: Constrained multiobjective optimization has gained much interest in the past few years. However, constrained multiobjective optimization problems (CMOPs) are still unsatisfactorily understood. Consequently, the choice of adequate CMOPs for benchmarking is difficult and lacks a formal background. This paper addresses this issue by exploring CMOPs from a performance space perspective. First, it presents a novel performance assessment approach designed explicitly for constrained multiobjective optimization. This methodology offers a first attempt to simultaneously measure the performance in approximating the Pareto front and constraint satisfaction. Second, it proposes an approach to measure the capability of a given optimization problem to differentiate among algorithm performances. Finally, this approach is used to contrast eight frequently used artificial test suites of CMOPs. The experimental results reveal which suites are more efficient in discerning between three well-known multiobjective optimization algorithms. Benchmark designers can use these results to select the most appropriate CMOPs for their needs.

Characterization of Constrained Continuous Multiobjective Optimization Problems: A Feature Space Perspective

Sep 09, 2021

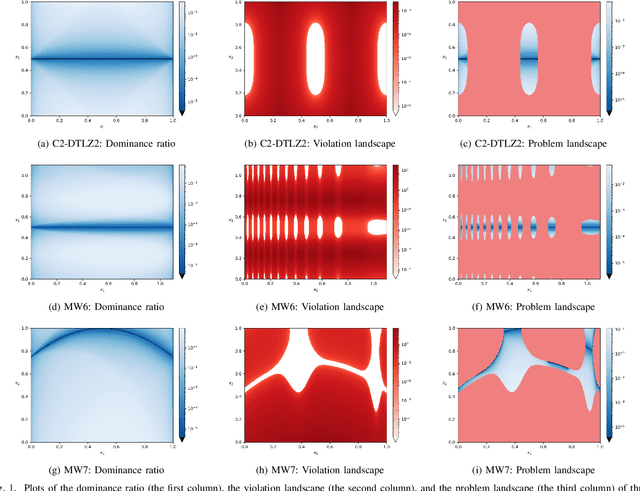

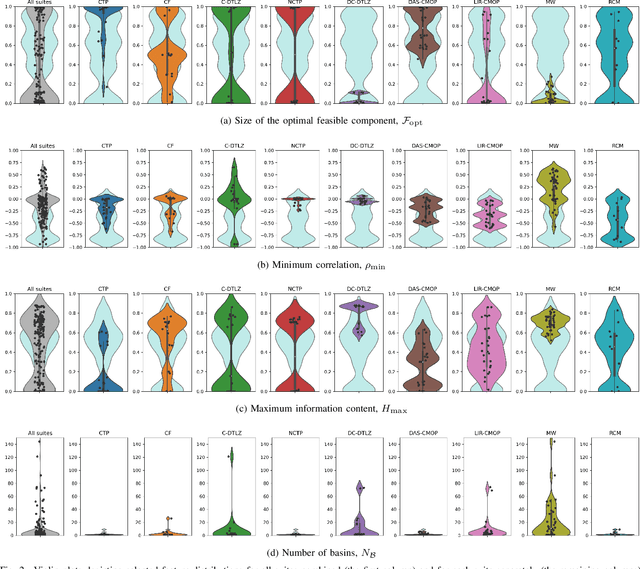

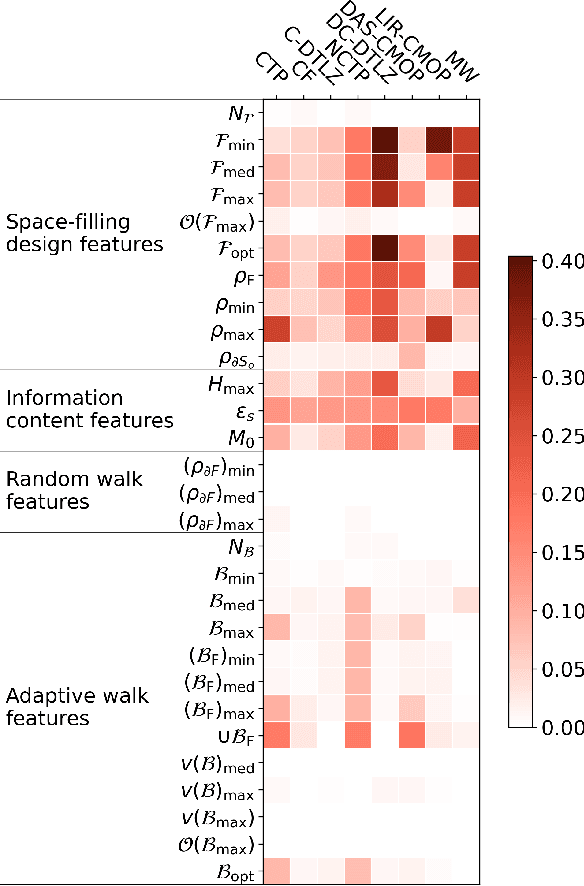

Abstract: Despite the increasing interest in constrained multiobjective optimization in recent years, constrained multiobjective optimization problems (CMOPs) are still unsatisfactorily understood and characterized. For this reason, the selection of appropriate CMOPs for benchmarking is difficult and lacks a formal background. We address this issue by extending landscape analysis to constrained multiobjective optimization. By employing four exploratory landscape analysis techniques, we propose 29 landscape features (of which 19 are novel) to characterize CMOPs. These landscape features are then used to compare eight frequently used artificial test suites against a recently proposed suite consisting of real-world problems based on physical models. The experimental results reveal that the artificial test problems fail to adequately represent some realistic characteristics, such as strong negative correlation between the objectives and constraints. Moreover, our findings show that all the studied artificial test suites have advantages and limitations, and that no "perfect" suite exists. Benchmark designers can use the obtained results to select or generate appropriate CMOP instances based on the characteristics they want to explore.

Identifying Properties of Real-World Optimisation Problems through a Questionnaire

Nov 11, 2020

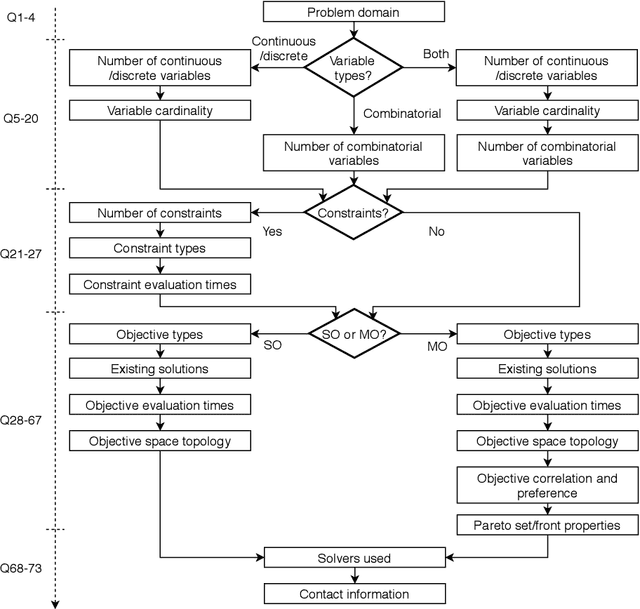

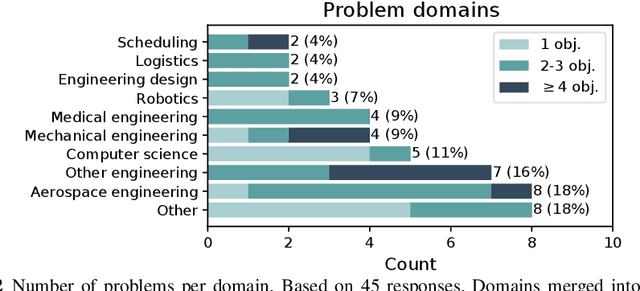

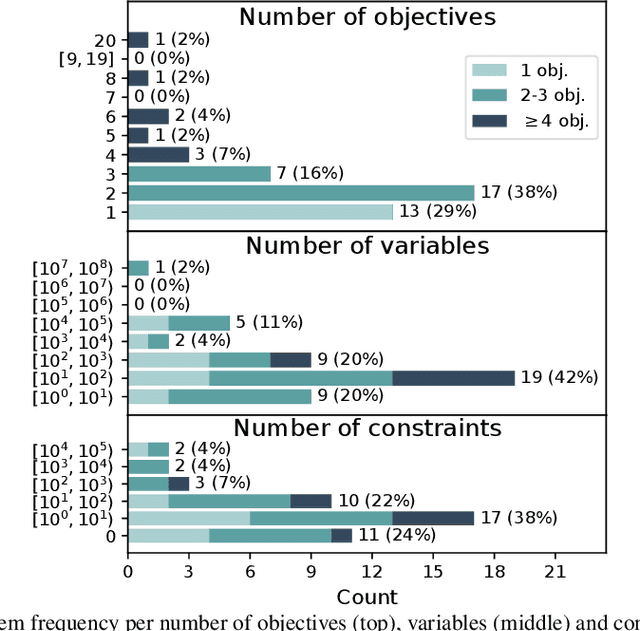

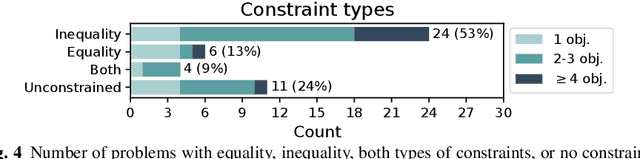

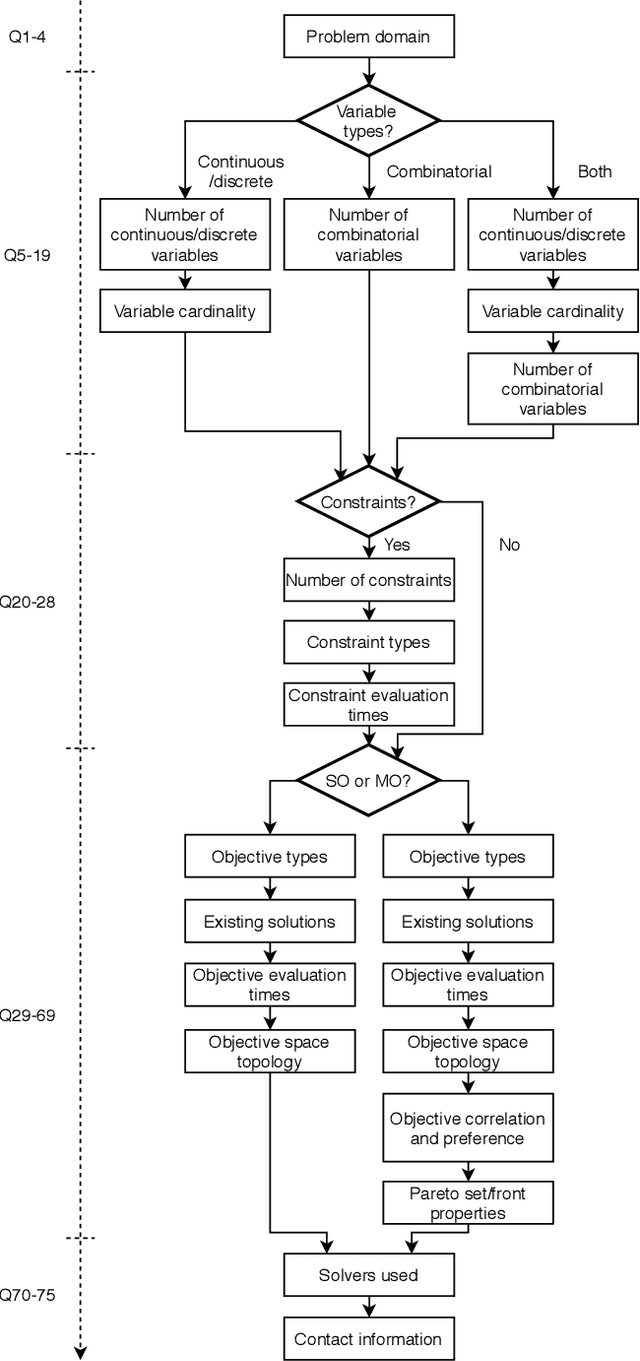

Abstract: Optimisation algorithms are commonly compared on benchmarks to get insight into performance differences. However, it is not clear how closely benchmarks match the properties of real-world problems because these properties are largely unknown. This work investigates the properties of real-world problems through a questionnaire to enable the design of future benchmark problems that more closely resemble those found in the real world. The results, while not representative, show that many problems possess at least one of the following properties: they are constrained, deterministic, have only continuous variables, require substantial computation times for both the objectives and the constraints, or allow a limited number of evaluations. Properties like known optimal solutions and analytical gradients are rarely available, limiting the options in guiding the optimisation process. These are all important aspects to consider when designing realistic benchmark problems. At the same time, objective functions are often reported to be black-box, and since many problem properties are unknown, the design of realistic benchmarks is difficult. To further improve the understanding of real-world problems, readers working on a real-world optimisation problem are encouraged to fill out the questionnaire: https://tinyurl.com/opt-survey

Towards Realistic Optimization Benchmarks: A Questionnaire on the Properties of Real-World Problems

Apr 14, 2020

Abstract: Benchmarks are a useful tool for empirical performance comparisons. However, one of the main shortcomings of existing benchmarks is that it remains largely unclear how they relate to real-world problems. What does an algorithm's performance on a benchmark say about its potential on a specific real-world problem? This work aims to identify properties of real-world problems through a questionnaire on real-world single-, multi-, and many-objective optimization problems. Based on initial responses, a few challenges that have to be considered in the design of realistic benchmarks can already be identified. A key point for future work is to gather more responses to the questionnaire to allow an analysis of common combinations of properties. In turn, such common combinations can then be included in improved benchmark suites. To gather more data, the reader is invited to participate in the questionnaire at: https://tinyurl.com/opt-survey

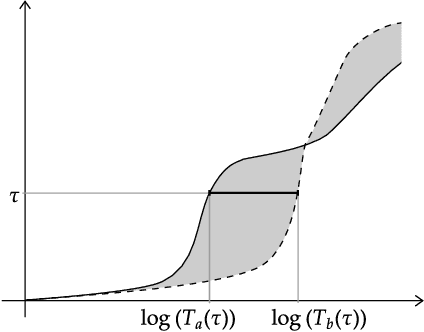

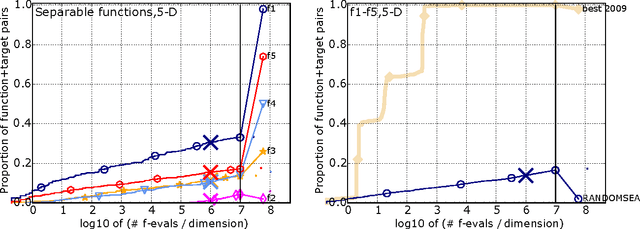

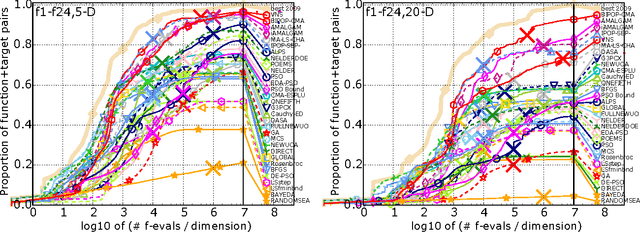

COCO: Performance Assessment

May 11, 2016

Abstract: We present an any-time performance assessment for benchmarking numerical optimization algorithms in a black-box scenario, applied within the COCO benchmarking platform. The performance assessment is based on runtimes measured in number of objective function evaluations to reach one or several quality indicator target values. We argue that runtime is the only available measure with a generic, meaningful, and quantitative interpretation. We discuss the choice of the target values, runlength-based targets, and the aggregation of results by using simulated restarts, averages, and empirical distribution functions.
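
The aggregation ideas named in this abstract (simulated restarts and empirical distribution functions of runtimes) can be illustrated with a minimal sketch. It is not the COCO platform's implementation: the function names `simulated_restart_runtime` and `ecdf`, the `(evaluations, success)` record format, and the example numbers are all assumptions made for illustration.

```python
import random

def simulated_restart_runtime(runs, rng):
    """Draw one simulated-restart runtime for a single target.

    `runs` is a list of (evaluations, success) tuples (an assumed format):
    the evaluations spent in a run, and whether that run reached the target.
    Runs are drawn with replacement until a successful one is drawn, and the
    evaluations of all drawn runs are summed.
    """
    if not any(success for _, success in runs):
        return float("inf")  # the target was never reached in any run
    total = 0
    while True:
        evals, success = rng.choice(runs)
        total += evals
        if success:
            return total

def ecdf(runtimes, budgets):
    """Fraction of runtimes that are at most each budget (in evaluations)."""
    n = len(runtimes)
    return [sum(rt <= b for rt in runtimes) / n for b in budgets]

if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical data: one successful run (100 evaluations) and one
    # unsuccessful run that used its full budget of 500 evaluations.
    runs = [(100, True), (500, False)]
    samples = [simulated_restart_runtime(runs, rng) for _ in range(1000)]
    print(ecdf(samples, budgets=[100, 600, 1100]))
```

Each simulated runtime here is 100 plus a multiple of 500 evaluations, so the empirical distribution function rises in steps at those budgets.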

Biobjective Performance Assessment with the COCO Platform

May 05, 2016

Abstract: This document details the rationales behind assessing the performance of numerical black-box optimizers on multi-objective problems within the COCO platform and in particular on the biobjective test suite bbob-biobj. The evaluation is based on the hypervolume of all non-dominated solutions in the archive of candidate solutions and measures the runtime until the hypervolume value reaches prescribed target values.
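
As a rough illustration of a hypervolume-based runtime for two objectives, the sketch below computes the hypervolume of the non-dominated points in an archive and counts evaluations until a target value is reached. It is a simplified sketch under stated assumptions, not the indicator used by the platform: both objectives are minimized, the reference point `ref` is supplied by the caller, and the names `nondominated`, `hypervolume_2d`, and `runtime_to_target` are hypothetical.

```python
def nondominated(points):
    """Return the non-dominated points (minimization), sorted by first objective."""
    front = [
        p for p in points
        if not any(q != p and q[0] <= p[0] and q[1] <= p[1] for q in points)
    ]
    return sorted(set(front))

def hypervolume_2d(points, ref):
    """Area dominated by `points` and bounded by the reference point `ref`."""
    front = [p for p in nondominated(points) if p[0] < ref[0] and p[1] < ref[1]]
    hv = 0.0
    prev_f2 = ref[1]
    # The front is sorted by f1 ascending, so f2 is strictly descending;
    # each point adds one horizontal slab of the dominated region.
    for f1, f2 in front:
        hv += (ref[0] - f1) * (prev_f2 - f2)
        prev_f2 = f2
    return hv

def runtime_to_target(evaluated, ref, target):
    """Number of evaluations until the archive hypervolume reaches `target`.

    `evaluated` lists objective vectors in evaluation order. Returns None if
    the target is never reached.
    """
    archive = []
    for n_evals, point in enumerate(evaluated, start=1):
        archive.append(point)
        if hypervolume_2d(archive, ref) >= target:
            return n_evals
    return None

if __name__ == "__main__":
    # Hypothetical evaluation history and reference point.
    history = [(3, 1), (2.5, 2.5), (1, 3), (2, 2)]
    print(runtime_to_target(history, ref=(4, 4), target=5.0))
```

Recomputing the hypervolume from scratch after every evaluation keeps the sketch short; an incremental archive update would be preferable for long runs.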
