Aljoša Vodopija

Characterization of Constrained Continuous Multiobjective Optimization Problems: A Performance Space Perspective

Feb 04, 2023

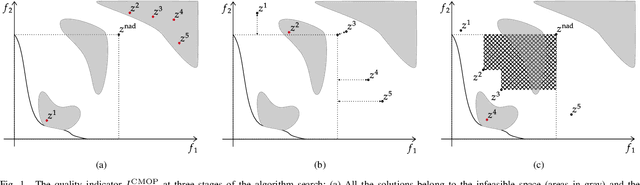

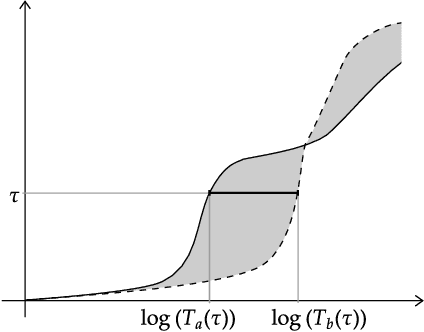

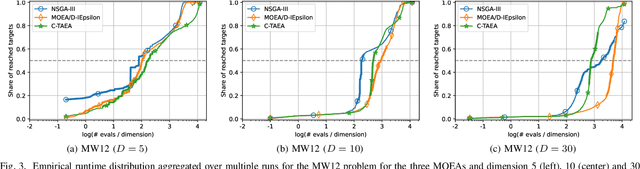

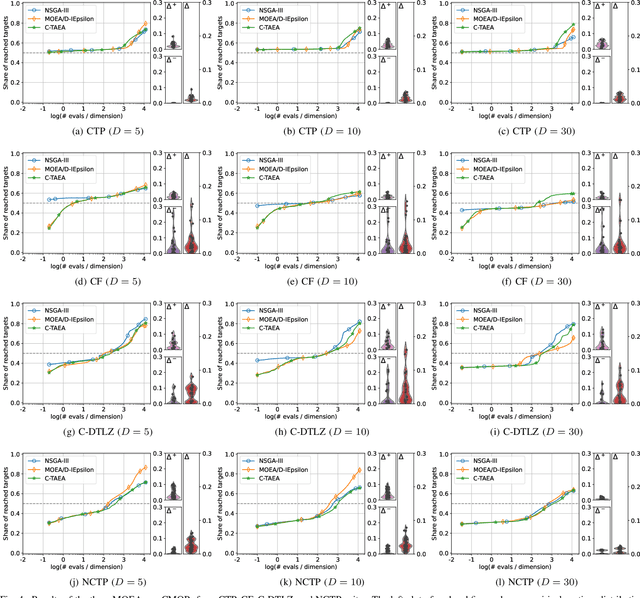

Abstract: Constrained multiobjective optimization has gained much interest in the past few years. However, constrained multiobjective optimization problems (CMOPs) are still not satisfactorily understood. Consequently, the choice of adequate CMOPs for benchmarking is difficult and lacks a formal background. This paper addresses this issue by exploring CMOPs from a performance space perspective. First, it presents a novel performance assessment approach designed explicitly for constrained multiobjective optimization. This methodology offers a first attempt to simultaneously measure the performance in approximating the Pareto front and constraint satisfaction. Second, it proposes an approach to measure the capability of a given optimization problem to differentiate among algorithm performances. Finally, this approach is used to contrast eight frequently used artificial test suites of CMOPs. The experimental results reveal which suites are more efficient in discerning between three well-known multiobjective optimization algorithms. Benchmark designers can use these results to select the most appropriate CMOPs for their needs.

Characterization of Constrained Continuous Multiobjective Optimization Problems: A Feature Space Perspective

Sep 09, 2021

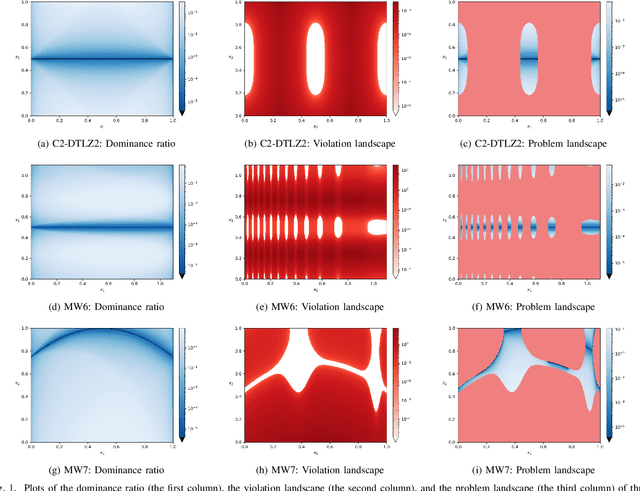

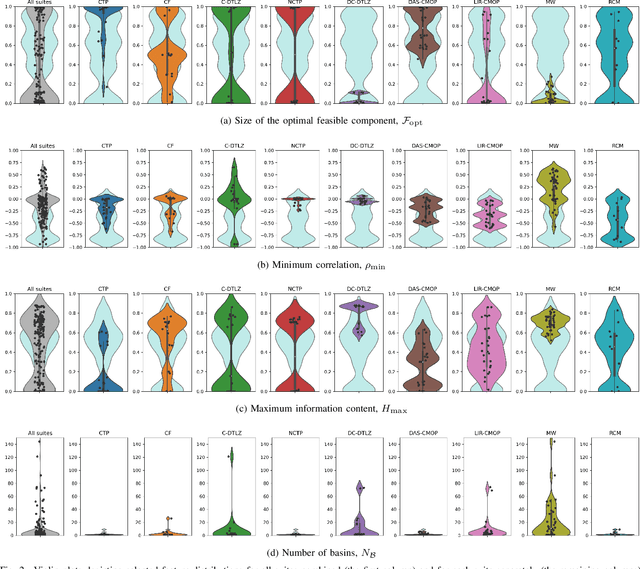

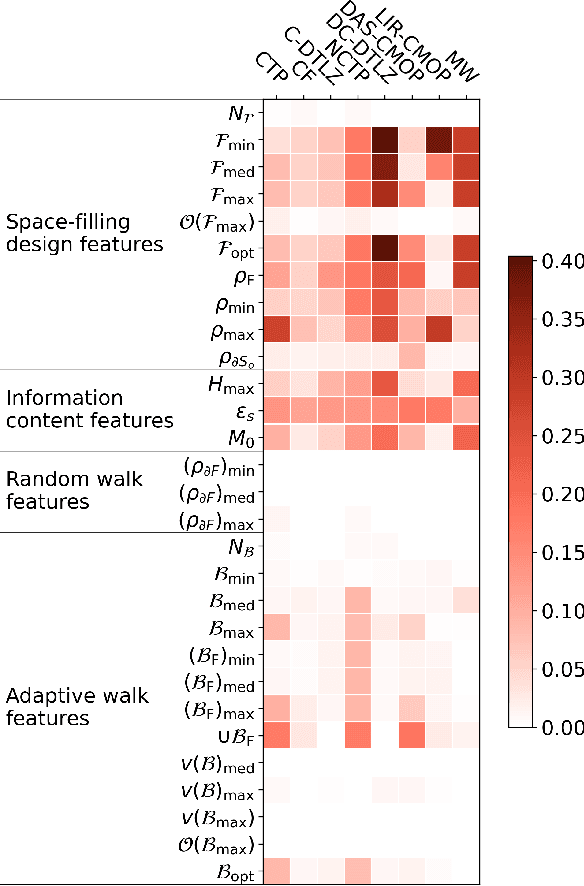

Abstract: Despite the increasing interest in constrained multiobjective optimization in recent years, constrained multiobjective optimization problems (CMOPs) are still unsatisfactorily understood and characterized. For this reason, the selection of appropriate CMOPs for benchmarking is difficult and lacks a formal background. We address this issue by extending landscape analysis to constrained multiobjective optimization. By employing four exploratory landscape analysis techniques, we propose 29 landscape features (of which 19 are novel) to characterize CMOPs. These landscape features are then used to compare eight frequently used artificial test suites against a recently proposed suite consisting of real-world problems based on physical models. The experimental results reveal that the artificial test problems fail to adequately represent some realistic characteristics, such as strong negative correlation between the objectives and constraints. Moreover, our findings show that all the studied artificial test suites have advantages and limitations, and that no "perfect" suite exists. Benchmark designers can use the obtained results to select or generate appropriate CMOP instances based on the characteristics they want to explore.
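To make the "negative correlation between the objectives and constraints" characteristic concrete, the following is a minimal sketch of how such a correlation could be estimated from a random sample of solutions. It is not the paper's feature definition: the toy problem (`objective`, `constraint_violation`), the use of the Pearson coefficient, and the sampling scheme are all assumptions chosen for illustration, and only one objective is shown (a multiobjective problem would repeat the computation per objective).

```python
import random


def objective(x):
    # Hypothetical objective to minimize: f(x) = x1 + x2 on [0, 1]^2.
    return x[0] + x[1]


def constraint_violation(x):
    # Hypothetical constraint x1 + x2 >= 1; the violation is zero when feasible.
    return max(0.0, 1.0 - x[0] - x[1])


def pearson(a, b):
    # Plain Pearson correlation coefficient of two equally long sequences.
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    var_a = sum((p - mean_a) ** 2 for p in a)
    var_b = sum((q - mean_b) ** 2 for q in b)
    return cov / (var_a * var_b) ** 0.5


def objective_violation_correlation(n_samples=1000, seed=0):
    # Sample the decision space uniformly at random, evaluate the objective and
    # the constraint violation at each point, and correlate the two.
    rng = random.Random(seed)
    sample = [(rng.random(), rng.random()) for _ in range(n_samples)]
    f_values = [objective(x) for x in sample]
    violations = [constraint_violation(x) for x in sample]
    return pearson(f_values, violations)


if __name__ == "__main__":
    print(objective_violation_correlation())
```

In this toy problem, improving the objective pushes solutions toward the infeasible region, so the estimated coefficient comes out clearly negative; this is the kind of objective-constraint conflict the abstract reports as underrepresented in artificial suites.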
