Bing Zhu

Multipath Extended Target Tracking with Labeled Random Finite Sets

Feb 03, 2026

Abstract:High-resolution radar sensors are critical for autonomous systems but pose significant challenges to traditional tracking algorithms due to the generation of multiple measurements per object and the presence of multipath effects. Existing solutions often rely on the point target assumption or treat multipath measurements as clutter, whereas current extended target trackers often lack the capability to maintain trajectory continuity in complex multipath environments. To address these limitations, this paper proposes the multipath extended target generalized labeled multi-Bernoulli (MPET-GLMB) filter. A unified Bayesian framework based on labeled random finite set theory is derived to jointly model target existence, measurement partitioning, and the association between measurements, targets, and propagation paths. This formulation enables simultaneous trajectory estimation for both targets and reflectors without requiring heuristic post-processing. To enhance computational efficiency, a joint prediction and update implementation based on Gibbs sampling is developed. Furthermore, a measurement-driven adaptive birth model is introduced to initialize tracks without prior knowledge of target positions. Experimental results from simulated scenarios and real-world automotive radar data demonstrate that the proposed filter outperforms state-of-the-art methods, achieving superior state estimation accuracy and robust trajectory maintenance in dynamic multipath environments.

CC-OR-Net: A Unified Framework for LTV Prediction through Structural Decoupling

Jan 15, 2026

Abstract:Customer Lifetime Value (LTV) prediction, a central problem in modern marketing, is characterized by a unique zero-inflated and long-tail data distribution. This distribution presents two fundamental challenges: (1) the vast majority of low-to-medium value users numerically overwhelm the small but critically important segment of high-value "whale" users, and (2) significant value heterogeneity exists even within the low-to-medium value user base. Common approaches either rely on rigid statistical assumptions or attempt to decouple ranking and regression using ordered buckets; however, they often enforce ordinality through loss-based constraints rather than inherent architectural design, failing to balance global accuracy with high-value precision. To address this gap, we propose Conditional Cascaded Ordinal-Residual Networks (CC-OR-Net), a novel unified framework that achieves a more robust decoupling through structural decomposition, where ranking is architecturally guaranteed. CC-OR-Net integrates three specialized components: a structural ordinal decomposition module for robust ranking, an intra-bucket residual module for fine-grained regression, and a targeted high-value augmentation module for precision on top-tier users. Evaluated on real-world datasets with over 300M users, CC-OR-Net achieves a superior trade-off across all key business metrics, outperforming state-of-the-art methods in creating a holistic and commercially valuable LTV prediction solution.
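To make the idea of ordered buckets with structurally guaranteed ranking more concrete, here is a minimal Python sketch. It is not the CC-OR-Net architecture, which the abstract does not specify. It only illustrates one generic way to obtain ordinality by construction: encode a value as cumulative "exceeds threshold" targets, and chain conditional probabilities so that the survival probabilities can never increase. The thresholds and all function names are hypothetical.

```python
from bisect import bisect_left

# Hypothetical bucket boundaries, chosen only for illustration.
THRESHOLDS = [0.0, 10.0, 100.0, 1000.0]


def bucket_index(value, thresholds=THRESHOLDS):
    """Count how many thresholds `value` strictly exceeds (0 = lowest bucket)."""
    return bisect_left(thresholds, value)


def cumulative_targets(value, thresholds=THRESHOLDS):
    """Ordinal encoding: target j is 1 if value > thresholds[j], else 0."""
    k = bucket_index(value, thresholds)
    return [1 if j < k else 0 for j in range(len(thresholds))]


def cascaded_survival(cond_probs):
    """Chain conditional probabilities p_j = P(y > t_j | y > t_{j-1}).

    The running product gives P(y > t_j). Because every factor lies in
    [0, 1], the sequence is non-increasing by construction, so no extra
    loss term is needed to keep the buckets ordered.
    """
    survival, running = [], 1.0
    for p in cond_probs:
        running *= p
        survival.append(running)
    return survival


def bucket_distribution(cond_probs):
    """Convert the survival curve into a probability for each bucket."""
    surv = cascaded_survival(cond_probs)
    probs = [1.0 - surv[0]]
    probs += [surv[j - 1] - surv[j] for j in range(1, len(surv))]
    probs.append(surv[-1])
    return probs


if __name__ == "__main__":
    print(cumulative_targets(150.0))
    print(bucket_distribution([0.4, 0.5, 0.5, 0.1]))
```

In a learned model the conditional probabilities would come from sigmoid heads; here they are supplied by hand to show that any inputs in [0, 1] yield a valid, ordered bucket distribution.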

VP-AutoTest: A Virtual-Physical Fusion Autonomous Driving Testing Platform

Dec 08, 2025

Abstract:The rapid development of autonomous vehicles has led to a surge in testing demand. Traditional testing methods, such as virtual simulation, closed-course, and public road testing, face several challenges, including unrealistic vehicle states, limited testing capabilities, and high costs. These issues have prompted increasing interest in virtual-physical fusion testing. However, despite its potential, virtual-physical fusion testing still faces challenges, such as limited element types, narrow testing scope, and fixed evaluation metrics. To address these challenges, we propose the Virtual-Physical Testing Platform for Autonomous Vehicles (VP-AutoTest), which integrates over ten types of virtual and physical elements, including vehicles, pedestrians, and roadside infrastructure, to replicate the diversity of real-world traffic participants. The platform also supports both single-vehicle interaction and multi-vehicle cooperation testing, employing adversarial testing and parallel deduction to accelerate fault detection and explore algorithmic limits, while OBU and Redis communication enable seamless vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) cooperation across all levels of cooperative automation. Furthermore, VP-AutoTest incorporates a multidimensional evaluation framework and AI-driven expert systems to conduct comprehensive performance assessment and defect diagnosis. Finally, by comparing virtual-physical fusion test results with real-world experiments, the platform performs credibility self-evaluation to ensure both the fidelity and efficiency of autonomous driving testing. Please refer to the website for the full testing functionalities on the autonomous driving public service platform OnSite: https://www.onsite.com.cn.

RadarGaussianDet3D: An Efficient and Effective Gaussian-based 3D Detector with 4D Automotive Radars

Sep 19, 2025

Abstract:4D automotive radars have gained increasing attention for autonomous driving due to their low cost, robustness, and inherent velocity measurement capability. However, existing 4D radar-based 3D detectors rely heavily on pillar encoders for BEV feature extraction, where each point contributes to only a single BEV grid, resulting in sparse feature maps and degraded representation quality. They also optimize bounding box attributes independently, leading to sub-optimal detection accuracy. Moreover, their inference speed, while sufficient on high-end GPUs, may fail to meet the real-time requirement on vehicle-mounted embedded devices. To overcome these limitations, an efficient and effective Gaussian-based 3D detector, namely RadarGaussianDet3D, is introduced, leveraging Gaussian primitives and distributions as intermediate representations for radar points and bounding boxes. In RadarGaussianDet3D, a novel Point Gaussian Encoder (PGE) is designed to transform each point into a Gaussian primitive after feature aggregation and to employ the 3D Gaussian Splatting (3DGS) technique for BEV rasterization, yielding denser feature maps. PGE exhibits exceptionally low latency, owing to the optimized algorithm for point feature aggregation and the fast rendering of 3DGS. In addition, a new Box Gaussian Loss (BGL) is proposed, which converts bounding boxes into 3D Gaussian distributions and measures their distance to enable more comprehensive and consistent optimization. Extensive experiments on TJ4DRadSet and View-of-Delft demonstrate that RadarGaussianDet3D achieves state-of-the-art detection accuracy while delivering substantially faster inference, highlighting its potential for real-time deployment in autonomous driving.
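The abstract says that bounding boxes are converted into 3D Gaussian distributions and that a distance between them is measured, but it gives neither the mapping nor the distance. The Python sketch below shows one plausible instance under stated assumptions: the box center becomes the mean, the covariance is a yaw-rotated diagonal built from the half-extents, and the distance is the Kullback-Leibler divergence. These choices and all names are illustrative and should not be read as the paper's Box Gaussian Loss.

```python
import math


def box_to_gaussian(box):
    """Map (cx, cy, cz, length, width, height, yaw) to a Gaussian.

    Assumption: covariance = R diag((l/2)^2, (w/2)^2, (h/2)^2) R^T with R a
    rotation about the z axis, so it is block-diagonal (xy block plus z).
    Returns the mean and the entries (sxx, sxy, syy, szz).
    """
    cx, cy, cz, length, width, height, yaw = box
    c, s = math.cos(yaw), math.sin(yaw)
    a, b = (length / 2.0) ** 2, (width / 2.0) ** 2
    sxx = c * c * a + s * s * b
    sxy = c * s * (a - b)
    syy = s * s * a + c * c * b
    szz = (height / 2.0) ** 2
    return (cx, cy, cz), (sxx, sxy, syy, szz)


def kl_divergence(g0, g1):
    """KL(N0 || N1) for two block-diagonal 3D Gaussians from box_to_gaussian."""
    (m0, (a0, b0, d0, z0)), (m1, (a1, b1, d1, z1)) = g0, g1
    det0 = a0 * d0 - b0 * b0
    det1 = a1 * d1 - b1 * b1
    # trace(Sigma1^-1 Sigma0), using the closed-form 2x2 inverse for the xy block
    trace = (d1 * a0 - 2.0 * b1 * b0 + a1 * d0) / det1 + z0 / z1
    dx, dy, dz = (m1[i] - m0[i] for i in range(3))
    # Mahalanobis term (mu1 - mu0)^T Sigma1^-1 (mu1 - mu0)
    maha = (d1 * dx * dx - 2.0 * b1 * dx * dy + a1 * dy * dy) / det1 + dz * dz / z1
    log_det = math.log((det1 * z1) / (det0 * z0))
    return 0.5 * (trace + maha - 3.0 + log_det)


if __name__ == "__main__":
    predicted = box_to_gaussian((10.0, 2.0, 0.5, 4.2, 1.8, 1.5, 0.10))
    target = box_to_gaussian((10.3, 2.1, 0.5, 4.5, 1.9, 1.6, 0.05))
    print(kl_divergence(predicted, target))
```

Because position, size, and orientation all enter through a single mean and covariance, one scalar couples every box attribute, which is the intuition behind optimizing them jointly rather than independently. Note that this particular mapping cannot distinguish a heading from its opposite, since a rotation by π leaves the covariance unchanged.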

LXLv2: Enhanced LiDAR Excluded Lean 3D Object Detection with Fusion of 4D Radar and Camera

Feb 20, 2025

Abstract:As the previous state-of-the-art 4D radar-camera fusion-based 3D object detection method, LXL utilizes predicted image depth distribution maps and radar 3D occupancy grids to assist the sampling-based image view transformation. However, the depth prediction lacks accuracy and consistency, and the concatenation-based fusion in LXL impedes model robustness. In this work, we propose LXLv2, in which modifications are made to overcome these limitations and improve performance. Specifically, considering the position error in radar measurements, we devise a one-to-many depth supervision strategy via radar points, where the radar cross section (RCS) value is further exploited to adjust the supervision area for object-level depth consistency. Additionally, a channel and spatial attention-based fusion module named CSAFusion is introduced to improve feature adaptiveness. Experimental results on the View-of-Delft and TJ4DRadSet datasets show that the proposed LXLv2 outperforms LXL in detection accuracy, inference speed and robustness, demonstrating the effectiveness of the model.

Vision-Language Models Meet Meteorology: Developing Models for Extreme Weather Events Detection with Heatmaps

Jun 14, 2024

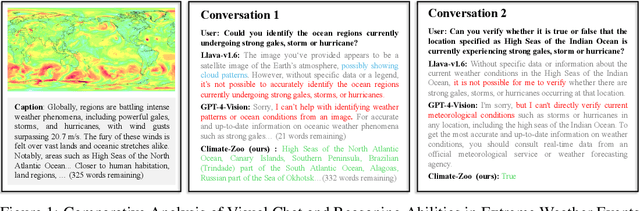

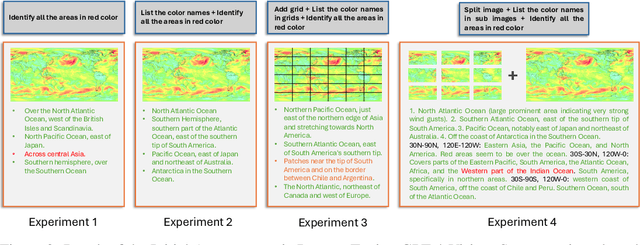

Abstract:Real-time detection and prediction of extreme weather protect human lives and infrastructure. Traditional methods rely on numerical threshold setting and manual interpretation of weather heatmaps with Geographic Information Systems (GIS), which can be slow and error-prone. Our research redefines Extreme Weather Events Detection (EWED) by framing it as a Visual Question Answering (VQA) problem, thereby introducing a more precise and automated solution. Leveraging Vision-Language Models (VLM) to simultaneously process visual and textual data, we offer an effective aid to enhance the analysis process of weather heatmaps. Our initial assessment of general-purpose VLMs (e.g., GPT-4-Vision) on EWED revealed poor performance, characterized by low accuracy and frequent hallucinations due to inadequate color differentiation and insufficient meteorological knowledge. To address these challenges, we introduce ClimateIQA, the first meteorological VQA dataset, which includes 8,760 wind gust heatmaps and 254,040 question-answer pairs covering four question types, both generated from the latest climate reanalysis data. We also propose Sparse Position and Outline Tracking (SPOT), an innovative technique that leverages OpenCV and K-Means clustering to capture and depict color contours in heatmaps, providing ClimateIQA with more accurate color spatial location information. Finally, we present Climate-Zoo, the first meteorological VLM collection, which adapts VLMs to meteorological applications using the ClimateIQA dataset. Experiment results demonstrate that models from Climate-Zoo substantially outperform state-of-the-art general VLMs, achieving an accuracy increase from 0% to over 90% in EWED verification. The datasets and models in this study are publicly available for future climate science research: https://github.com/AlexJJJChen/Climate-Zoo.

FinTextQA: A Dataset for Long-form Financial Question Answering

May 16, 2024

Abstract:Accurate evaluation of financial question answering (QA) systems necessitates a comprehensive dataset encompassing diverse question types and contexts. However, current financial QA datasets lack scope diversity and question complexity. This work introduces FinTextQA, a novel dataset for long-form question answering (LFQA) in finance. FinTextQA comprises 1,262 high-quality, source-attributed QA pairs extracted and selected from finance textbooks and government agency websites. Moreover, we developed a Retrieval-Augmented Generation (RAG)-based LFQA system, comprising an embedder, retriever, reranker, and generator. A multi-faceted evaluation approach, including human ranking, automatic metrics, and GPT-4 scoring, was employed to benchmark the performance of different LFQA system configurations under heightened noisy conditions. The results indicate that: (1) among all compared generators, Baichuan2-7B competes closely with GPT-3.5-turbo in accuracy score; (2) the most effective system configuration on our dataset involved setting the embedder, retriever, reranker, and generator as Ada2, Automated Merged Retrieval, Bge-Reranker-Base, and Baichuan2-7B, respectively; (3) models are less susceptible to noise once the context length reaches a specific threshold.
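The four-stage pipeline named in the abstract (embedder, retriever, reranker, generator) can be sketched with toy stand-ins in pure Python. Nothing below reproduces the FinTextQA system: the bag-of-words embedder, the cosine retriever, the term-coverage reranker, and the template "generator" are placeholder assumptions chosen so the example runs without any model or external service.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedder: bag-of-words term counts (stand-in for a learned model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, docs, k=2):
    """Retriever: keep the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def rerank(query, candidates):
    """Toy reranker: order candidates by the fraction of query terms covered."""
    q_terms = set(query.lower().split())

    def coverage(doc):
        return len(q_terms & set(doc.lower().split())) / len(q_terms)

    return sorted(candidates, key=coverage, reverse=True)


def generate(query, contexts):
    """Placeholder generator: a real system would prompt an LLM here."""
    return f"Q: {query}\nContext: " + " ".join(contexts)


def answer(query, docs, k=2):
    """Run the stages in order: retrieve, rerank, then generate."""
    return generate(query, rerank(query, retrieve(query, docs, k)))


if __name__ == "__main__":
    corpus = [
        "a bond pays a fixed coupon to investors",
        "equity represents ownership in a company",
        "the central bank sets the policy interest rate",
    ]
    print(answer("what is a bond coupon", corpus))
```

Keeping each stage behind its own function mirrors the way the abstract benchmarks different configurations: any one component can be swapped without touching the others.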

ProbRadarM3F: mmWave Radar based Human Skeletal Pose Estimation with Probability Map Guided Multi-Format Feature Fusion

May 08, 2024

Abstract:Millimetre wave (mmWave) radar is a non-intrusive, privacy-preserving, and relatively convenient and inexpensive device that has been demonstrated to be applicable in place of RGB cameras in indoor human pose estimation tasks. However, mmWave radar relies on collecting reflected signals from the target, and the information contained in the radar signals is difficult to exploit fully. This has been a long-standing hindrance to the improvement of pose estimation accuracy. To address this major challenge, this paper introduces a probability map guided multi-format feature fusion model, ProbRadarM3F. This is a novel radar feature extraction framework that uses a traditional FFT method in parallel with a probability map based positional encoding method. ProbRadarM3F fuses the traditional heatmap features and the positional features, then estimates 14 keypoints of the human body. Experimental evaluation on the HuPR dataset demonstrates the effectiveness of the proposed model, which outperforms other methods evaluated on this dataset with an AP of 69.9%. Our study emphasizes position information that has not previously been exploited in radar signals. This provides direction for investigating other potential non-redundant information from mmWave radar.

LiDAR Point Cloud-based Multiple Vehicle Tracking with Probabilistic Measurement-Region Association

Mar 12, 2024

Abstract:Multiple extended target tracking (ETT) has gained increasing attention due to the development of high-precision LiDAR and radar sensors in automotive applications. For LiDAR point cloud-based vehicle tracking, this paper presents a probabilistic measurement-region association (PMRA) ETT model, which can describe the complex measurement distribution by partitioning the target extent into different regions. The PMRA model overcomes the drawbacks of previous data-region association (DRA) models by eliminating the approximation error of constrained estimation and using continuous integrals to more reliably calculate the association probabilities. Furthermore, the PMRA model is integrated with the Poisson multi-Bernoulli mixture (PMBM) filter for tracking multiple vehicles. Simulation results illustrate the superior estimation accuracy of the proposed PMRA-PMBM filter in terms of both positions and extents of the vehicles compared with PMBM filters using the gamma Gaussian inverse Wishart and DRA implementations.

Which Framework is Suitable for Online 3D Multi-Object Tracking for Autonomous Driving with Automotive 4D Imaging Radar?

Sep 13, 2023

Abstract:Online 3D multi-object tracking (MOT) has recently received significant research interest due to the expanding demand for 3D perception in advanced driver assistance systems (ADAS) and autonomous driving (AD). Among the existing 3D MOT frameworks for ADAS and AD, the conventional point object tracking (POT) framework using the tracking-by-detection (TBD) strategy has been well studied and accepted for LiDAR and 4D imaging radar point clouds. In contrast, extended object tracking (EOT), another important framework which adopts the joint-detection-and-tracking (JDT) strategy, has rarely been explored for online 3D MOT applications. This paper provides the first systematic investigation of the EOT framework for online 3D MOT in real-world ADAS and AD scenarios. Specifically, the widely accepted TBD-POT framework, the recently investigated JDT-EOT framework, and our proposed TBD-EOT framework are compared via extensive evaluations on two open source 4D imaging radar datasets: View-of-Delft and TJ4DRadSet. Experimental results demonstrate that the conventional TBD-POT framework remains preferable for online 3D MOT, with high tracking performance and low computational complexity, while the proposed TBD-EOT framework has the potential to outperform it in certain situations. However, the results also show that the JDT-EOT framework encounters multiple problems and performs inadequately in the evaluation scenarios. After analyzing the causes of these phenomena based on various evaluation metrics and visualizations, we provide possible guidelines to improve the performance of these MOT frameworks on real-world data. These provide the first benchmark and important insights for the future development of 4D imaging radar-based online 3D MOT.
