Bhawna Paliwal

CROSS-JEM: Accurate and Efficient Cross-encoders for Short-text Ranking Tasks

Sep 15, 2024Abstract:Ranking a set of items based on their relevance to a given query is a core problem in search and recommendation. Transformer-based ranking models are the state-of-the-art approaches for such tasks, but they score each query-item independently, ignoring the joint context of other relevant items. This leads to sub-optimal ranking accuracy and high computational costs. In response, we propose Cross-encoders with Joint Efficient Modeling (CROSS-JEM), a novel ranking approach that enables transformer-based models to jointly score multiple items for a query, maximizing parameter utilization. CROSS-JEM leverages (a) redundancies and token overlaps to jointly score multiple items, that are typically short-text phrases arising in search and recommendations, and (b) a novel training objective that models ranking probabilities. CROSS-JEM achieves state-of-the-art accuracy and over 4x lower ranking latency over standard cross-encoders. Our contributions are threefold: (i) we highlight the gap between the ranking application's need for scoring thousands of items per query and the limited capabilities of current cross-encoders; (ii) we introduce CROSS-JEM for joint efficient scoring of multiple items per query; and (iii) we demonstrate state-of-the-art accuracy on standard public datasets and a proprietary dataset. CROSS-JEM opens up new directions for designing tailored early-attention-based ranking models that incorporate strict production constraints such as item multiplicity and latency.

Visual Representations of Physiological Signals for Fake Video Detection

Jul 18, 2022

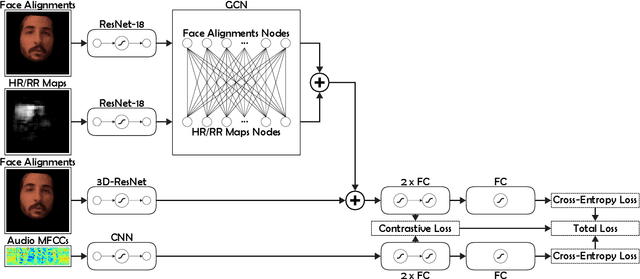

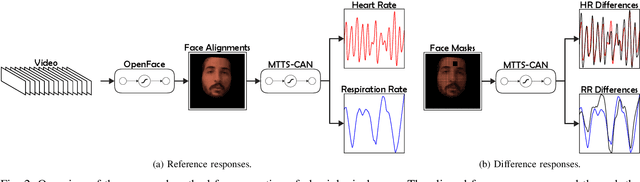

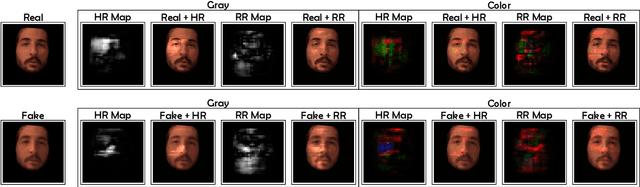

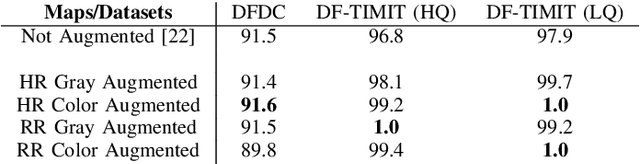

Abstract:Realistic fake videos are a potential tool for spreading harmful misinformation given our increasing online presence and information intake. This paper presents a multimodal learning-based method for detection of real and fake videos. The method combines information from three modalities - audio, video, and physiology. We investigate two strategies for combining the video and physiology modalities, either by augmenting the video with information from the physiology or by novelly learning the fusion of those two modalities with a proposed Graph Convolutional Network architecture. Both strategies for combining the two modalities rely on a novel method for generation of visual representations of physiological signals. The detection of real and fake videos is then based on the dissimilarity between the audio and modified video modalities. The proposed method is evaluated on two benchmark datasets and the results show significant increase in detection performance compared to previous methods.

NGAME: Negative Mining-aware Mini-batching for Extreme Classification

Jul 10, 2022

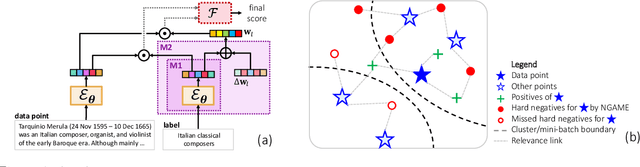

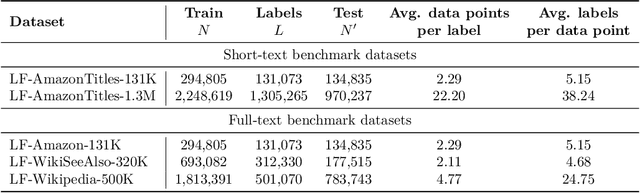

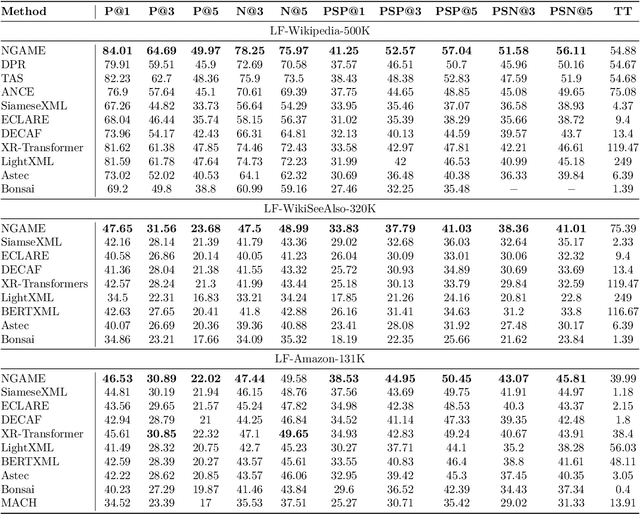

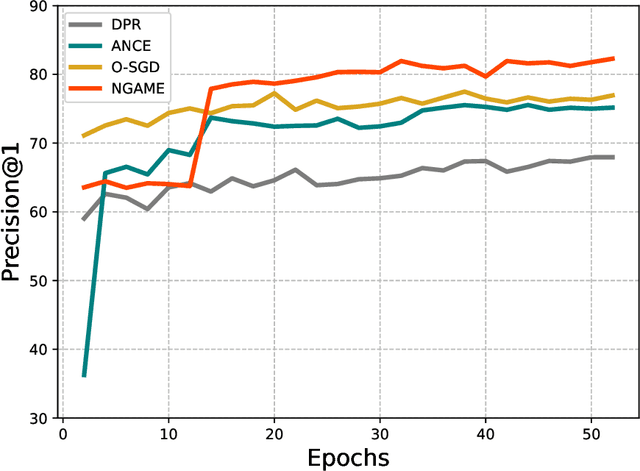

Abstract:Extreme Classification (XC) seeks to tag data points with the most relevant subset of labels from an extremely large label set. Performing deep XC with dense, learnt representations for data points and labels has attracted much attention due to its superiority over earlier XC methods that used sparse, hand-crafted features. Negative mining techniques have emerged as a critical component of all deep XC methods that allow them to scale to millions of labels. However, despite recent advances, training deep XC models with large encoder architectures such as transformers remains challenging. This paper identifies that memory overheads of popular negative mining techniques often force mini-batch sizes to remain small and slow training down. In response, this paper introduces NGAME, a light-weight mini-batch creation technique that offers provably accurate in-batch negative samples. This allows training with larger mini-batches offering significantly faster convergence and higher accuracies than existing negative sampling techniques. NGAME was found to be up to 16% more accurate than state-of-the-art methods on a wide array of benchmark datasets for extreme classification, as well as 3% more accurate at retrieving search engine queries in response to a user webpage visit to show personalized ads. In live A/B tests on a popular search engine, NGAME yielded up to 23% gains in click-through-rates.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge