Baojin Huang

ProJudge: A Multi-Modal Multi-Discipline Benchmark and Instruction-Tuning Dataset for MLLM-based Process Judges

Mar 09, 2025

Abstract: As multi-modal large language models (MLLMs) frequently exhibit errors when solving scientific problems, evaluating the validity of their reasoning processes is critical for ensuring reliability and uncovering fine-grained model weaknesses. Since human evaluation is laborious and costly, prompting MLLMs as automated process judges has become a common practice. However, the reliability of these model-based judges remains uncertain. To address this, we introduce ProJudgeBench, the first comprehensive benchmark specifically designed for evaluating abilities of MLLM-based process judges. ProJudgeBench comprises 2,400 test cases and 50,118 step-level labels, spanning four scientific disciplines with diverse difficulty levels and multi-modal content. In ProJudgeBench, each step is meticulously annotated by human experts for correctness, error type, and explanation, enabling a systematic evaluation of judges' capabilities to detect, classify and diagnose errors. Evaluation on ProJudgeBench reveals a significant performance gap between open-source and proprietary models. To bridge this gap, we further propose ProJudge-173k, a large-scale instruction-tuning dataset, and a Dynamic Dual-Phase fine-tuning strategy that encourages models to explicitly reason through problem-solving before assessing solutions. Both contributions significantly enhance the process evaluation capabilities of open-source models. All the resources will be released to foster future research of reliable multi-modal process evaluation.

Deepfake Face Traceability with Disentangling Reversing Network

Jul 08, 2022

Abstract: Deepfake faces not only violate the privacy of personal identity, but also confuse the public and cause huge social harm. Current deepfake detection stops at distinguishing real from fake and cannot trace the original genuine face corresponding to a fake face; that is, it cannot trace the source of evidence. Deepfake countermeasure technology for judicial forensics urgently calls for deepfake traceability. This paper pioneers an interesting question about face deepfakes: active forensics that "know it and how it happened". Given that deepfake faces do not completely discard the features of the original faces, especially facial expressions and poses, we argue that original faces can be approximately inferred from their deepfake counterparts. Correspondingly, we design a disentangling reversing network that decouples the latent space features of deepfake faces under the supervision of fake-original face pair samples to infer the original faces in reverse.

A Spatio-Temporal Identity Verification Method for Person-Action Instance Search in Movies

Oct 30, 2021

Abstract: As one of the challenging problems in video search, Person-Action Instance Search (INS) aims to retrieve, from massive video collections, shots in which a specific person carries out a specific action. Existing methods mainly include two steps: first, two individual INS branches, i.e., person INS and action INS, are conducted separately to compute the initial person and action ranking scores; second, both scores are directly fused to generate the final ranking list. However, direct aggregation of two individual INS scores cannot guarantee identity consistency between person and action. For example, a shot with "Pat is standing" and "Ian is sitting on couch" may be erroneously understood as "Pat is sitting on couch" or "Ian is standing". To address this identity inconsistency problem (IIP), we study a spatio-temporal identity verification method. Specifically, in the spatial dimension, we propose an identity consistency verification scheme to optimize the direct fusion score of person INS and action INS. The motivation originates from the observation that face detection results are usually located within the identity-consistent action bounding boxes. Moreover, in the temporal dimension, considering the complex filming conditions, we propose an inter-frame detection extension operation to interpolate missing face/action detection results in successive video frames. The proposed method is evaluated on the large-scale TRECVID INS dataset, and the experimental results show that our method effectively mitigates the IIP and surpasses the existing second-place results in both the TRECVID 2019 and 2020 INS tasks.
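
The two ideas in this abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the containment threshold `min_ratio`, the product-based score fusion, and the linear interpolation of boxes are all assumptions made for illustration. The spatial check keeps a fused score only when the face box lies mostly inside the action box, and the temporal step fills frames with missing detections from their detected neighbours.

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), hypothetical layout


def face_inside_ratio(face: Box, action: Box) -> float:
    """Fraction of the face box area that lies inside the action box."""
    inter_w = max(0.0, min(face[2], action[2]) - max(face[0], action[0]))
    inter_h = max(0.0, min(face[3], action[3]) - max(face[1], action[1]))
    face_area = (face[2] - face[0]) * (face[3] - face[1])
    return (inter_w * inter_h) / face_area if face_area > 0 else 0.0


def verified_score(person_score: float, action_score: float,
                   face: Box, action: Box, min_ratio: float = 0.8) -> float:
    """Fuse scores only if the face is spatially consistent with the action.

    Product fusion and the 0.8 threshold are assumptions, not from the paper.
    """
    if face_inside_ratio(face, action) >= min_ratio:
        return person_score * action_score
    return 0.0


def interpolate_boxes(track: List[Optional[Box]]) -> List[Optional[Box]]:
    """Linearly fill missing detections (None) between two detected frames.

    Leading and trailing gaps are left as None.
    """
    filled = list(track)
    known = [i for i, box in enumerate(track) if box is not None]
    for a, b in zip(known, known[1:]):
        for t in range(a + 1, b):
            w = (t - a) / (b - a)
            filled[t] = tuple((1 - w) * p + w * q
                              for p, q in zip(track[a], track[b]))
    return filled
```

With this sketch, a shot where Pat's face falls outside the "sitting on couch" box would receive a fused score of zero for the query "Pat is sitting on couch", which is the kind of mismatch the abstract describes.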

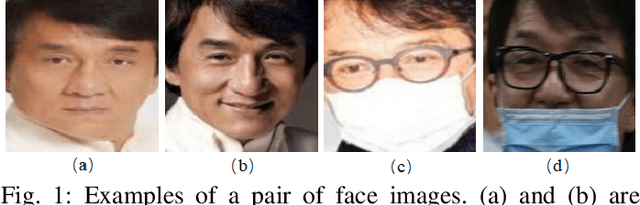

When Face Recognition Meets Occlusion: A New Benchmark

Mar 04, 2021

Abstract: Existing face recognition datasets usually lack occlusion samples, which hinders the development of face recognition. Especially during the COVID-19 epidemic, wearing a mask has become an effective means of preventing the spread of the virus. Traditional CNN-based face recognition models trained on existing datasets are almost ineffective under heavy occlusion. To this end, we pioneer a simulated occlusion face recognition dataset. In particular, we first collect a variety of glasses and masks as occlusions, and randomly combine the occlusion attributes (occlusion objects, textures, and colors) to obtain a large number of more realistic occlusion types. We then place them at the proper position on the face image, following normal occlusion habits. Furthermore, we reasonably combine original normal face images and occluded face images to form our final dataset, termed Webface-OCC. It covers 804,704 face images of 10,575 subjects, with diverse occlusion types to ensure its diversity and stability. Extensive experiments on public datasets show that ArcFace retrained on our dataset significantly outperforms state-of-the-art methods. Webface-OCC is available at https://github.com/Baojin-Huang/Webface-OCC.

Multi-Scale Progressive Fusion Network for Single Image Deraining

Mar 28, 2020

Abstract: Rain streaks in the air appear with various degrees of blur and at various resolutions due to their different distances from the camera. Similar rain patterns are visible in a rain image as well as its multi-scale (or multi-resolution) versions, which makes it possible to exploit such complementary information for rain streak representation. In this work, we explore the multi-scale collaborative representation for rain streaks from the perspective of input image scales and hierarchical deep features in a unified framework, termed multi-scale progressive fusion network (MSPFN), for single image rain streak removal. For similar rain streaks at different positions, we employ recurrent calculation to capture the global texture, which allows us to explore the complementary and redundant information in the spatial dimension to characterize target rain streaks. Besides, we construct a multi-scale pyramid structure, and further introduce the attention mechanism to guide the fine fusion of this correlated information from different scales. This multi-scale progressive fusion strategy not only promotes the cooperative representation, but also boosts the end-to-end training. Our proposed method is extensively evaluated on several benchmark datasets and achieves state-of-the-art results. Moreover, we conduct experiments on joint deraining, detection, and segmentation tasks, and inspire a new research direction of vision task-driven image deraining. The source code is available at https://github.com/kuihua/MSPFN.

Masked Face Recognition Dataset and Application

Mar 23, 2020

Abstract: In order to effectively prevent the spread of the COVID-19 virus, almost everyone wears a mask during the coronavirus epidemic. This makes conventional facial recognition technology nearly ineffective in many cases, such as community access control, face access control, facial attendance, facial security checks at train stations, etc. Therefore, it is urgent to improve the recognition performance of existing face recognition technology on masked faces. Most current advanced face recognition approaches are based on deep learning, which depends on a large number of face samples. However, at present, there are no publicly available masked face recognition datasets. To this end, this work proposes three types of masked face datasets, including Masked Face Detection Dataset (MFDD), Real-world Masked Face Recognition Dataset (RMFRD) and Simulated Masked Face Recognition Dataset (SMFRD). Among them, to the best of our knowledge, RMFRD is currently the world's largest real-world masked face dataset. These datasets are freely available to industry and academia, based on which various applications on masked faces can be developed. The multi-granularity masked face recognition model we developed achieves 95% accuracy, exceeding the results reported by the industry. Our datasets are available at: https://github.com/X-zhangyang/Real-World-Masked-Face-Dataset.
