Ashish Sharma

FiMI: A Domain-Specific Language Model for Indian Finance Ecosystem

Feb 05, 2026

Abstract:We present FiMI (Finance Model for India), a domain-specialized financial language model developed for Indian digital payment systems. We develop two model variants: FiMI Base and FiMI Instruct. FiMI adapts the Mistral Small 24B architecture through a multi-stage training pipeline, beginning with continuous pre-training on 68 billion tokens of curated financial, multilingual (English, Hindi, Hinglish), and synthetic data. This is followed by instruction fine-tuning and domain-specific supervised fine-tuning focused on multi-turn, tool-driven conversations that model real-world workflows, such as transaction disputes and mandate lifecycle management. Evaluations reveal that FiMI Base achieves a 20% improvement over the Mistral Small 24B Base model on a finance reasoning benchmark, while FiMI Instruct outperforms the Mistral Small 24B Instruct model by 87% on domain-specific tool-calling. Moreover, FiMI achieves these significant domain gains while maintaining comparable performance to models of similar size on general benchmarks.

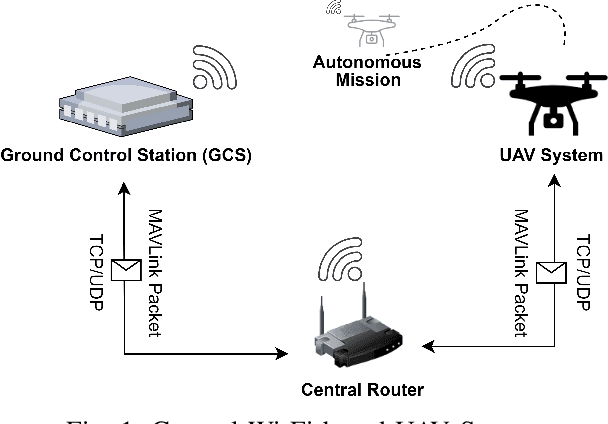

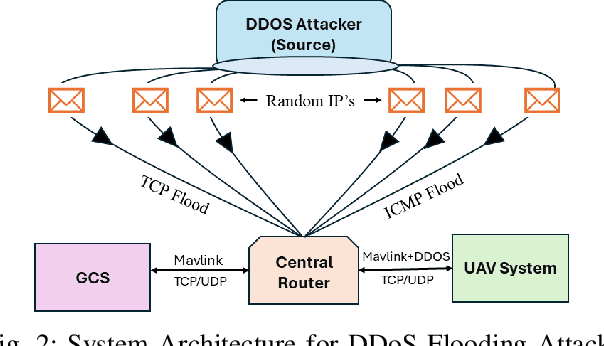

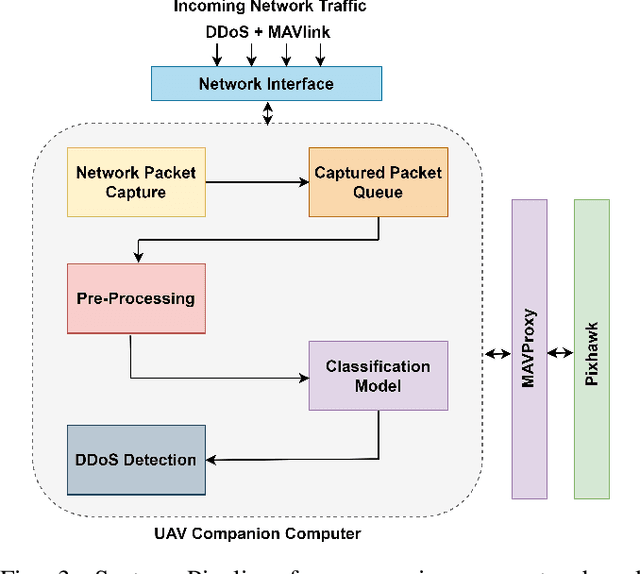

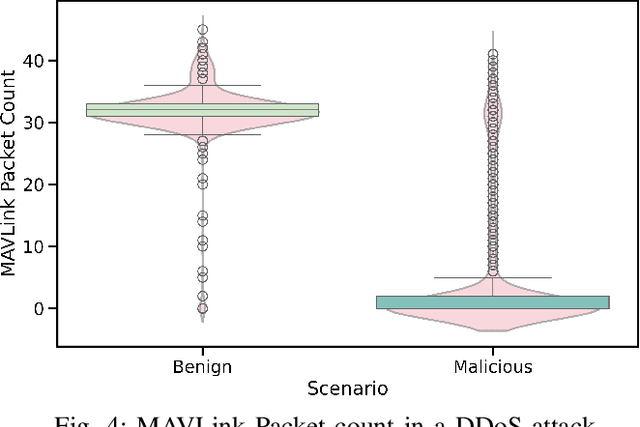

Attention Meets UAVs: A Comprehensive Evaluation of DDoS Detection in Low-Cost UAVs

Jun 28, 2024

Abstract:This paper explores the critical issue of enhancing cybersecurity measures for low-cost, Wi-Fi-based Unmanned Aerial Vehicles (UAVs) against Distributed Denial of Service (DDoS) attacks. We explore three variants of DDoS attacks, namely Transmission Control Protocol (TCP), Internet Control Message Protocol (ICMP), and TCP + ICMP flooding attacks, and develop a detection mechanism that runs on the companion computer of the UAV system. As part of the detection mechanism, we evaluate various machine learning and deep learning algorithms, such as XGBoost, Isolation Forest, Long Short-Term Memory (LSTM), Bidirectional-LSTM (Bi-LSTM), LSTM with attention, Bi-LSTM with attention, and Time Series Transformer (TST), in terms of various classification metrics. Our evaluation reveals that algorithms with attention mechanisms generally outperform their counterparts, and TST stands out as the most efficient model with a run time of 0.1 seconds. TST achieves F1 scores of 0.999, 0.997, and 0.943 for TCP, ICMP, and TCP + ICMP flooding attacks, respectively. We present the steps required to build an on-board DDoS detection mechanism. We also present an ablation study to identify the best TST hyperparameters for DDoS detection, and we highlight the advantage of adopting learnable positional embeddings in TST, which improve the F1 score from 0.94 to 0.99.

Correcting misinformation on social media with a large language model

Mar 17, 2024

Abstract:Misinformation undermines public trust in science and democracy, particularly on social media where inaccuracies can spread rapidly. Experts and laypeople have been shown to be effective in correcting misinformation by manually identifying and explaining inaccuracies. Nevertheless, this approach is difficult to scale, a concern as technologies like large language models (LLMs) make misinformation easier to produce. LLMs also have versatile capabilities that could accelerate misinformation correction; however, they struggle due to a lack of recent information, a tendency to produce plausible but false content and references, and limitations in addressing multimodal information. To address these issues, we propose MUSE, an LLM augmented with access to and credibility evaluation of up-to-date information. By retrieving contextual evidence and refutations, MUSE can provide accurate and trustworthy explanations and references. It also describes visuals and conducts multimodal searches for correcting multimodal misinformation. We recruit fact-checking and journalism experts to evaluate corrections to real social media posts across 13 dimensions, ranging from the factuality of explanation to the relevance of references. The results demonstrate MUSE's ability to correct misinformation promptly after it appears on social media; overall, MUSE outperforms GPT-4 by 37% and even high-quality corrections from laypeople by 29%. This work underscores the potential of LLMs to combat real-world misinformation effectively and efficiently.

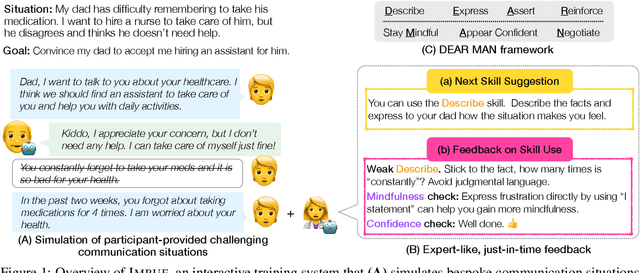

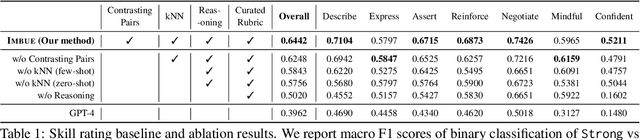

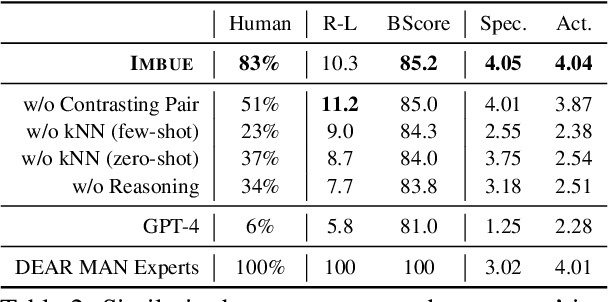

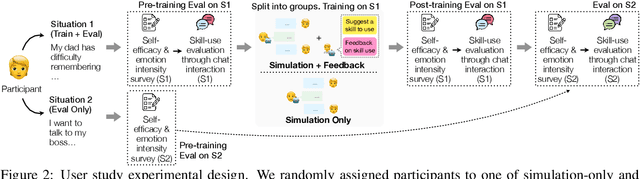

IMBUE: Improving Interpersonal Effectiveness through Simulation and Just-in-time Feedback with Human-Language Model Interaction

Feb 19, 2024

Abstract:Navigating certain communication situations can be challenging due to individuals' lack of skills and the interference of strong emotions. However, effective learning opportunities are rarely accessible. In this work, we conduct a human-centered study that uses language models to simulate bespoke communication training and provide just-in-time feedback to support the practice and learning of interpersonal effectiveness skills. We apply the interpersonal effectiveness framework from Dialectical Behavioral Therapy (DBT), DEAR MAN, which focuses on both conversational and emotional skills. We present IMBUE, an interactive training system that provides feedback 25% more similar to experts' feedback, compared to that generated by GPT-4. IMBUE is the first to focus on communication skills and emotion management simultaneously, incorporate experts' domain knowledge in providing feedback, and be grounded in psychology theory. Through a randomized trial of 86 participants, we find that IMBUE's simulation-only variant significantly improves participants' self-efficacy (up to 17%) and reduces negative emotions (up to 25%). With IMBUE's additional just-in-time feedback, participants demonstrate 17% improvement in skill mastery, along with greater enhancements in self-efficacy (27% more) and reduction of negative emotions (16% more) compared to simulation-only. The improvement in skill mastery is the only measure that transfers to new and more difficult situations; situation-specific training is necessary for improving self-efficacy and emotion reduction.

A Computational Framework for Behavioral Assessment of LLM Therapists

Jan 01, 2024

Abstract:The emergence of ChatGPT and other large language models (LLMs) has greatly increased interest in utilizing LLMs as therapists to support individuals struggling with mental health challenges. However, due to the lack of systematic studies, our understanding of how LLM therapists behave, i.e., ways in which they respond to clients, is significantly limited. Understanding their behavior across a wide range of clients and situations is crucial to accurately assess their capabilities and limitations in the high-risk setting of mental health, where undesirable behaviors can lead to severe consequences. In this paper, we propose BOLT, a novel computational framework to study the conversational behavior of LLMs when employed as therapists. We develop an in-context learning method to quantitatively measure the behavior of LLMs based on 13 different psychotherapy techniques including reflections, questions, solutions, normalizing, and psychoeducation. Subsequently, we compare the behavior of LLM therapists against that of high- and low-quality human therapy, and study how their behavior can be modulated to better reflect behaviors observed in high-quality therapy. Our analysis of GPT and Llama-variants reveals that these LLMs often resemble behaviors more commonly exhibited in low-quality therapy rather than high-quality therapy, such as offering a higher degree of problem-solving advice when clients share emotions, which is against typical recommendations. At the same time, unlike low-quality therapy, LLMs reflect significantly more upon clients' needs and strengths. Our analysis framework suggests that despite the ability of LLMs to generate anecdotal examples that appear similar to human therapists, LLM therapists are currently not fully consistent with high-quality care, and thus require additional research to ensure quality care.

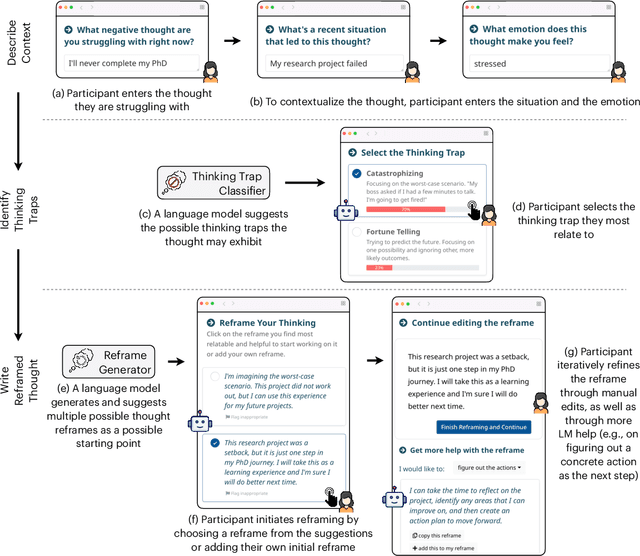

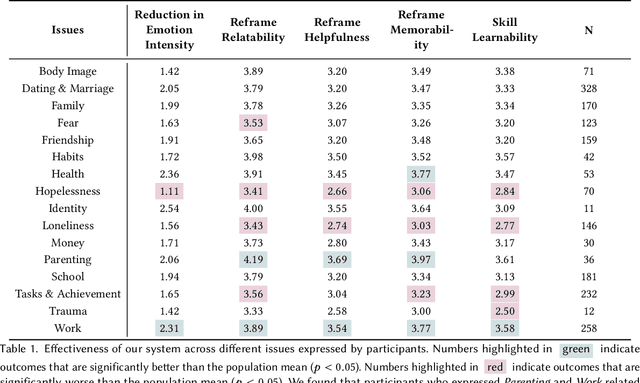

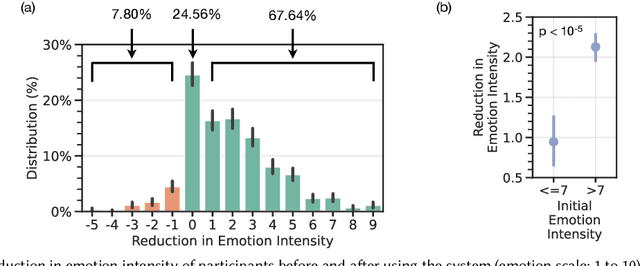

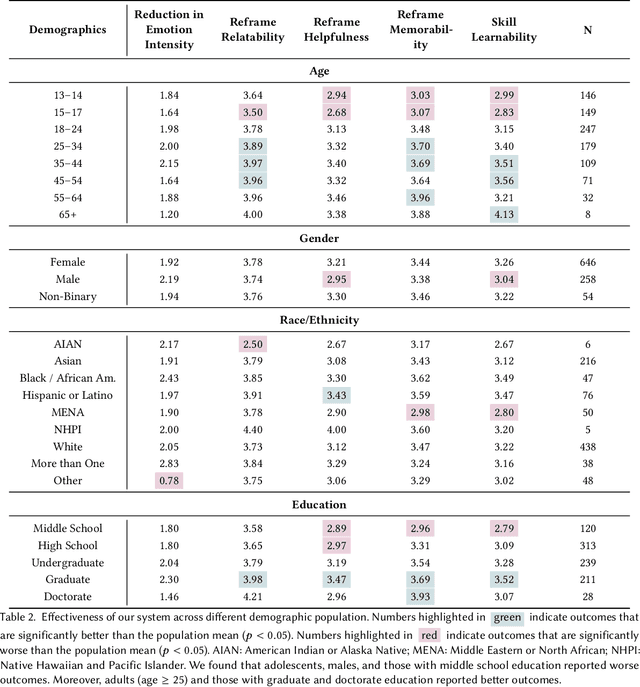

Facilitating Self-Guided Mental Health Interventions Through Human-Language Model Interaction: A Case Study of Cognitive Restructuring

Oct 24, 2023

Abstract:Self-guided mental health interventions, such as "do-it-yourself" tools to learn and practice coping strategies, show great promise to improve access to mental health care. However, these interventions are often cognitively demanding and emotionally triggering, creating accessibility barriers that limit their wide-scale implementation and adoption. In this paper, we study how human-language model interaction can support self-guided mental health interventions. We take cognitive restructuring, an evidence-based therapeutic technique to overcome negative thinking, as a case study. In an IRB-approved randomized field study on a large mental health website with 15,531 participants, we design and evaluate a system that uses language models to support people through various steps of cognitive restructuring. Our findings reveal that our system positively impacts emotional intensity for 67% of participants and helps 65% overcome negative thoughts. Although adolescents report relatively worse outcomes, we find that tailored interventions that simplify language model generations improve overall effectiveness and equity.

Towards Dialogue Systems with Agency in Human-AI Collaboration Tasks

May 22, 2023

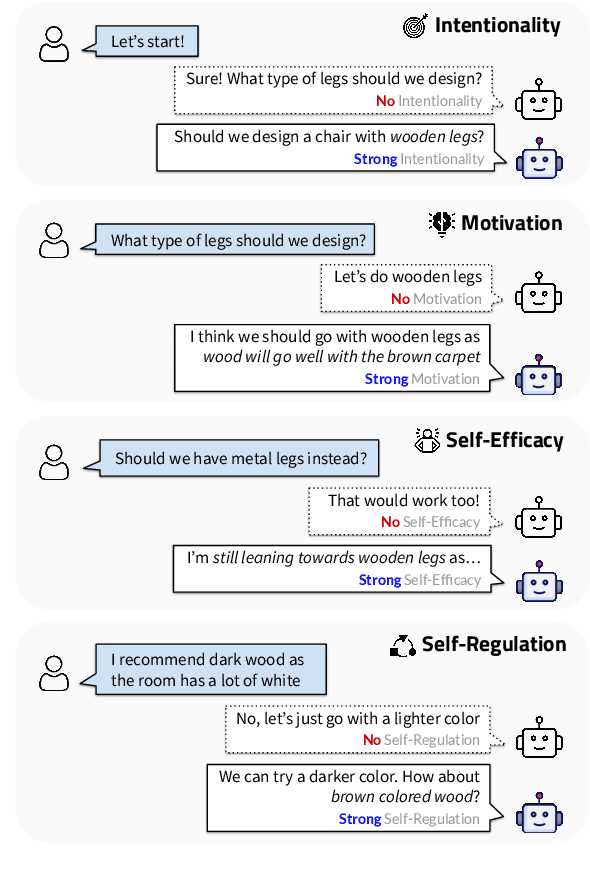

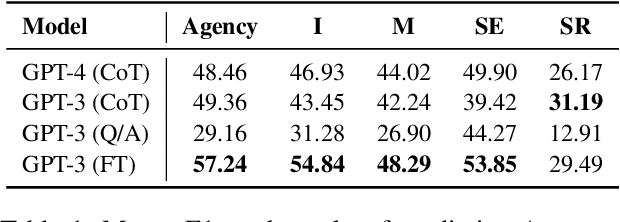

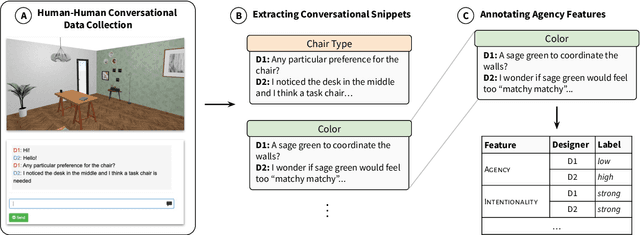

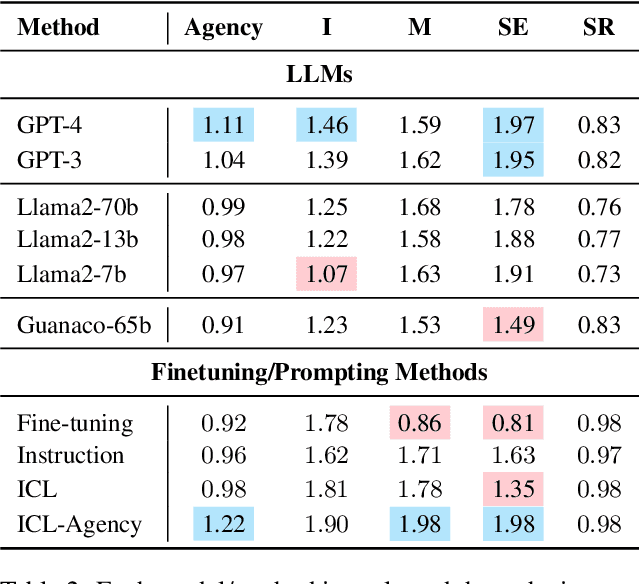

Abstract:Agency, the capacity to proactively shape events, is crucial to how humans interact and collaborate with other humans. In this paper, we investigate Agency as a potentially desirable function of dialogue agents, and how it can be measured and controlled. We build upon the social-cognitive theory of Bandura (2001) to develop a framework of features through which Agency is expressed in dialogue -- indicating what you intend to do (Intentionality), motivating your intentions (Motivation), having self-belief in intentions (Self-Efficacy), and being able to self-adjust (Self-Regulation). We collect and release a new dataset of 83 human-human collaborative interior design conversations containing 908 conversational snippets annotated for Agency features. Using this dataset, we explore methods for measuring and controlling Agency in dialogue systems. Automatic and human evaluation show that although a baseline GPT-3 model can express Intentionality, models that explicitly manifest features associated with high Motivation, Self-Efficacy, and Self-Regulation are better perceived as being highly agentive. This work has implications for the development of dialogue systems with varying degrees of Agency in collaborative tasks.

Cognitive Reframing of Negative Thoughts through Human-Language Model Interaction

May 04, 2023

Abstract:A proven therapeutic technique to overcome negative thoughts is to replace them with a more hopeful "reframed thought." Although therapy can help people practice and learn this Cognitive Reframing of Negative Thoughts, clinician shortages and mental health stigma commonly limit people's access to therapy. In this paper, we conduct a human-centered study of how language models may assist people in reframing negative thoughts. Based on psychology literature, we define a framework of seven linguistic attributes that can be used to reframe a thought. We develop automated metrics to measure these attributes and validate them with expert judgements from mental health practitioners. We collect a dataset of 600 situations, thoughts and reframes from practitioners and use it to train a retrieval-enhanced in-context learning model that effectively generates reframed thoughts and controls their linguistic attributes. To investigate what constitutes a "high-quality" reframe, we conduct an IRB-approved randomized field study on a large mental health website with over 2,000 participants. Amongst other findings, we show that people prefer highly empathic or specific reframes, as opposed to reframes that are overly positive. Our findings provide key implications for the use of LMs to assist people in overcoming negative thoughts.

Increasing Physical Layer Security through Hyperchaos in VLC Systems

Dec 17, 2022

Abstract:Visible Light Communication (VLC) systems offer relatively higher security than traditional Radio Frequency (RF) channels due to line-of-sight (LOS) propagation. However, they are still susceptible to eavesdropping. The proposed solution builds on existing work on hyperchaos-based security measures to increase physical layer security against eavesdroppers. A fourth-order Henon map is used to scramble the constellation diagrams of the transmitted signals. The scramblers change the constellation symbols of the system using a key, and the same key de-scrambles the received data on the receiver side. The presented modulation scheme takes advantage of a higher degree of the map to isolate the data transmission to a single dimension, allowing for better scrambling and synchronization. A sliding mode controller is used at the receiver in a master-slave configuration for projective synchronization of the two Henon maps, which helps de-scramble the received data. The data can be isolated only by users who know the key for synchronization, providing security against eavesdroppers. The proposed VLC system is compared against several existing approaches on a range of metrics. An improved Bit Error Rate and lower information leakage are achieved for a variety of modulation schemes at an acceptable Signal-to-Noise Ratio.
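
The key-based scrambling and de-scrambling described in this abstract can be illustrated with a minimal sketch. It is not the paper's scheme: it assumes the classical two-dimensional Henon map (a = 1.4, b = 0.3) in place of the fourth-order hyperchaotic map, treats the map's initial conditions as the shared key, and skips the sliding mode synchronization step by giving both sides the key directly. The function names `henon_sequence`, `scramble`, and `descramble` are hypothetical.

```python
import cmath
import math


def henon_sequence(key, n, a=1.4, b=0.3, burn_in=100):
    """Return n x-values of the classical 2-D Henon map.

    `key` is the (x0, y0) initial condition, used here as the shared secret.
    The first `burn_in` iterations are discarded so that nearby keys diverge.
    """
    x, y = key
    values = []
    for i in range(burn_in + n):
        x, y = 1.0 - a * x * x + y, b * x
        if i >= burn_in:
            values.append(x)
    return values


def rotation_angles(key, n):
    """Map chaotic values (roughly within [-1.5, 1.5]) to phase angles."""
    return [2.0 * math.pi * (v + 1.5) / 3.0 for v in henon_sequence(key, n)]


def scramble(symbols, key):
    """Rotate each constellation symbol by a key-dependent chaotic phase."""
    angles = rotation_angles(key, len(symbols))
    return [s * cmath.rect(1.0, t) for s, t in zip(symbols, angles)]


def descramble(received, key):
    """Undo the rotation; this only works with the same key."""
    angles = rotation_angles(key, len(received))
    return [r * cmath.rect(1.0, -t) for r, t in zip(received, angles)]


if __name__ == "__main__":
    # Unnormalized QPSK symbols, repeated to form a short frame.
    qpsk = [complex(1, 1), complex(-1, 1), complex(-1, -1), complex(1, -1)] * 4
    key = (0.1, 0.2)
    tx = scramble(qpsk, key)
    rx = descramble(tx, key)
    print(max(abs(a - b) for a, b in zip(qpsk, rx)))  # near zero with the right key
```

Because each rotation has unit magnitude, symbol energy is unchanged and only the phase is scrambled. A receiver with a slightly different key produces a different chaotic sequence after the burn-in and so cannot restore the constellation.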

Gendered Mental Health Stigma in Masked Language Models

Oct 27, 2022

Abstract:Mental health stigma prevents many individuals from receiving the appropriate care, and social psychology studies have shown that mental health tends to be overlooked in men. In this work, we investigate gendered mental health stigma in masked language models. In doing so, we operationalize mental health stigma by developing a framework grounded in psychology research: we use clinical psychology literature to curate prompts, then evaluate the models' propensity to generate gendered words. We find that masked language models capture societal stigma about gender in mental health: models are consistently more likely to predict female subjects than male in sentences about having a mental health condition (32% vs. 19%), and this disparity is exacerbated for sentences that indicate treatment-seeking behavior. Furthermore, we find that different models capture dimensions of stigma differently for men and women, associating stereotypes like anger, blame, and pity more with women with mental health conditions than with men. In showing the complex nuances of models' gendered mental health stigma, we demonstrate that context and overlapping dimensions of identity are important considerations when assessing computational models' social biases.
