Artem Lukoianov

Locality in Image Diffusion Models Emerges from Data Statistics

Sep 11, 2025

Abstract:Among generative models, diffusion models are uniquely intriguing due to the existence of a closed-form optimal minimizer of their training objective, often referred to as the optimal denoiser. However, diffusion using this optimal denoiser merely reproduces images in the training set and hence fails to capture the behavior of deep diffusion models. Recent work has attempted to characterize this gap between the optimal denoiser and deep diffusion models, proposing analytical, training-free models that can generate images that resemble those generated by a trained UNet. The best-performing method hypothesizes that shift equivariance and locality inductive biases of convolutional neural networks are the cause of the performance gap, hence incorporating these assumptions into its analytical model. In this work, we present evidence that the locality in deep diffusion models emerges as a statistical property of the image dataset, not due to the inductive bias of convolutional neural networks. Specifically, we demonstrate that an optimal parametric linear denoiser exhibits similar locality properties to the deep neural denoisers. We further show, both theoretically and experimentally, that this locality arises directly from the pixel correlations present in natural image datasets. Finally, we use these insights to craft an analytical denoiser that better matches scores predicted by a deep diffusion model than the prior expert-crafted alternative.
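The claim that locality can come from pixel correlations alone can be illustrated with a toy linear denoiser. For zero-mean data with covariance Σ observed under additive Gaussian noise of standard deviation σ, the minimum mean-squared-error linear denoiser is W = Σ(Σ + σ²I)⁻¹. The sketch below is not the paper's analytical model: the 1D "image", the covariance ρ^|i-j|, and all function names are assumptions chosen for illustration.

```python
def covariance(n, rho):
    # Hypothetical stand-in for dataset statistics:
    # correlation between pixels i and j decays as rho ** |i - j|.
    return [[rho ** abs(i - j) for j in range(n)] for i in range(n)]


def solve(a, b):
    """Solve a @ x = b (matrix right-hand side) by Gauss-Jordan elimination."""
    n = len(a)
    m = [row_a[:] + row_b[:] for row_a, row_b in zip(a, b)]
    for col in range(n):
        # Partial pivoting for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [row[n:] for row in m]


def linear_denoiser(cov, sigma):
    """Return W = cov @ inv(cov + sigma^2 I) for zero-mean data."""
    n = len(cov)
    noisy = [
        [cov[i][j] + (sigma ** 2 if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]
    # cov and (cov + sigma^2 I) commute, so W = inv(noisy) @ cov.
    return solve(noisy, cov)


if __name__ == "__main__":
    n, center = 15, 7
    for sigma in (0.2, 0.5, 2.0):
        row = linear_denoiser(covariance(n, 0.9), sigma)[center]
        # Each row is the filter applied to produce one output pixel.
        print(sigma, [round(w, 3) for w in row])
```

In this toy setting the weights in each row are largest at the output pixel and shrink with distance, even though no convolutional structure was imposed, and the filter widens as the noise level grows.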

Scalable Message Passing Neural Networks: No Need for Attention in Large Graph Representation Learning

Oct 29, 2024

Abstract:We propose Scalable Message Passing Neural Networks (SMPNNs) and demonstrate that, by integrating standard convolutional message passing into a Pre-Layer Normalization Transformer-style block instead of attention, we can produce high-performing deep message-passing-based Graph Neural Networks (GNNs). This modification yields results competitive with the state-of-the-art in large graph transductive learning, particularly outperforming the best Graph Transformers in the literature, without requiring the otherwise computationally and memory-expensive attention mechanism. Our architecture not only scales to large graphs but also makes it possible to construct deep message-passing networks, unlike simple GNNs, which have traditionally been constrained to shallow architectures due to oversmoothing. Moreover, we provide a new theoretical analysis of oversmoothing based on universal approximation which we use to motivate SMPNNs. We show that in the context of graph convolutions, residual connections are necessary for maintaining the universal approximation properties of downstream learners and that removing them can lead to a loss of universality.
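A rough sketch of the block structure described above: layer normalization first, a graph convolution where a Transformer would place attention, then a residual connection. The exact SMPNN block is not given in the abstract, so the function names, the `alpha` scaling, the GCN-style normalization, and the omission of nonlinearities and a feed-forward sublayer are all assumptions made for brevity.

```python
import math


def layer_norm(row, eps=1e-5):
    # Normalize one node's feature vector to zero mean and unit variance.
    mean = sum(row) / len(row)
    var = sum((v - mean) ** 2 for v in row) / len(row)
    return [(v - mean) / math.sqrt(var + eps) for v in row]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def normalized_adjacency(edges, n):
    """Symmetric normalization D^-1/2 (A + I) D^-1/2 of an undirected graph."""
    adj = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for i, j in edges:
        adj[i][j] = adj[j][i] = 1.0
    deg = [sum(row) for row in adj]
    return [[adj[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def block(x, a_hat, weight, alpha=1.0):
    """Pre-LN residual block: x + alpha * A_hat @ LN(x) @ W."""
    normed = [layer_norm(r) for r in x]
    conv = matmul(a_hat, matmul(normed, weight))
    # The residual path keeps the input features available to later layers.
    return [[xv + alpha * cv for xv, cv in zip(xr, cr)] for xr, cr in zip(x, conv)]


if __name__ == "__main__":
    a_hat = normalized_adjacency([(0, 1), (1, 2)], 3)
    x = [[1.0, 2.0], [0.0, -1.0], [3.0, 0.5]]
    w = [[0.5, 0.0], [0.0, 0.5]]
    for _ in range(4):  # stacking several blocks is just repeated application
        x = block(x, a_hat, w)
    print(x)
```

Because aggregation costs one sparse product with the adjacency rather than all-pairs attention, the per-layer cost in a real implementation scales with the number of edges; the dense lists here are only for readability.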

Score Distillation via Reparametrized DDIM

May 24, 2024

Abstract:While 2D diffusion models generate realistic, high-detail images, 3D shape generation methods like Score Distillation Sampling (SDS) built on these 2D diffusion models produce cartoon-like, over-smoothed shapes. To help explain this discrepancy, we show that the image guidance used in Score Distillation can be understood as the velocity field of a 2D denoising generative process, up to the choice of a noise term. In particular, after a change of variables, SDS resembles a high-variance version of Denoising Diffusion Implicit Models (DDIM) with a differently-sampled noise term: SDS introduces noise i.i.d. randomly at each step, while DDIM infers it from the previous noise predictions. This excessive variance can lead to over-smoothing and unrealistic outputs. We show that a better noise approximation can be recovered by inverting DDIM in each SDS update step. This modification makes SDS's generative process for 2D images almost identical to DDIM. In 3D, it removes over-smoothing, preserves higher-frequency detail, and brings the generation quality closer to that of 2D samplers. Experimentally, our method achieves better or similar 3D generation quality compared to other state-of-the-art Score Distillation methods, all without training additional neural networks or requiring multi-view supervision, while providing useful insights into the relationship between 2D and 3D asset generation with diffusion models.
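The contrast between the two noise choices can be sketched on scalar data. The step below is the standard deterministic DDIM update, which re-noises the clean estimate with the network's own predicted noise; passing `noise` instead draws a fresh i.i.d. sample, which mimics the SDS-style choice described in the abstract. This is a simplified analogy, not the paper's algorithm: the toy `predict_noise` (exact for data drawn from N(0, 1)), the schedule values, and the function names are all assumptions.

```python
import math
import random


def predict_noise(x_t, alpha_bar):
    # Toy stand-in for a trained network: for x0 ~ N(0, 1),
    # E[eps | x_t] = sqrt(1 - alpha_bar) * x_t.
    return math.sqrt(1.0 - alpha_bar) * x_t


def step(x_t, a_t, a_next, noise=None):
    """Move from noise level a_t to a_next (cumulative alphas)."""
    eps_hat = predict_noise(x_t, a_t)
    # Clean-sample estimate implied by the noise prediction.
    x0_hat = (x_t - math.sqrt(1.0 - a_t) * eps_hat) / math.sqrt(a_t)
    # DDIM reuses eps_hat; the SDS-like variant substitutes fresh noise.
    eps = eps_hat if noise is None else noise
    return math.sqrt(a_next) * x0_hat + math.sqrt(1.0 - a_next) * eps


def run(x, alpha_bars, rng=None):
    for a_t, a_next in zip(alpha_bars, alpha_bars[1:]):
        noise = rng.gauss(0.0, 1.0) if rng is not None else None
        x = step(x, a_t, a_next, noise)
    return x


if __name__ == "__main__":
    schedule = [0.05, 0.3, 0.6, 0.9, 0.999]
    print("DDIM:", run(1.3, schedule))
    for seed in range(3):
        print("fresh noise:", run(1.3, schedule, random.Random(seed)))
```

With the same starting point, the DDIM path is fully determined, while the fresh-noise path changes with every seed; that extra randomness is the variance the abstract refers to.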

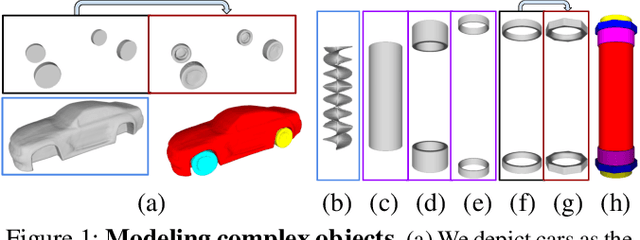

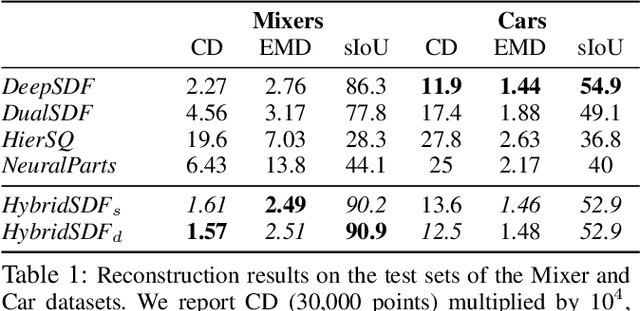

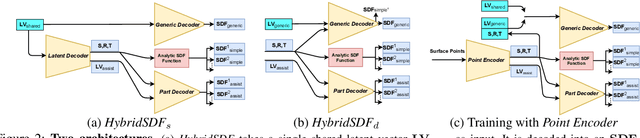

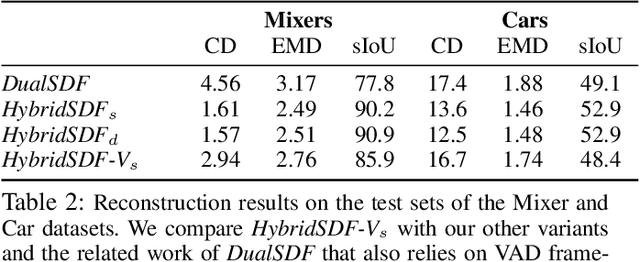

HybridSDF: Combining Free Form Shapes and Geometric Primitives for effective Shape Manipulation

Sep 24, 2021

Abstract:CAD modeling typically involves the use of simple geometric primitives whereas recent advances in deep-learning based 3D surface modeling have opened new shape design avenues. Unfortunately, these advances have not yet been accepted by the CAD community because they cannot be integrated into engineering workflows. To remedy this, we propose a novel approach to effectively combining geometric primitives and free-form surfaces represented by implicit surfaces for accurate modeling that preserves interpretability, enforces consistency, and enables easy manipulation.

DeepMesh: Differentiable Iso-Surface Extraction

Jun 20, 2021

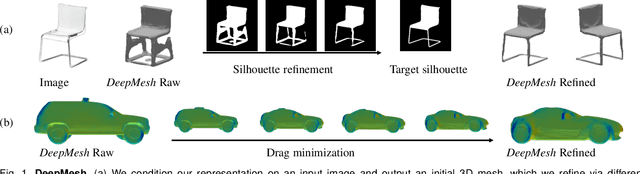

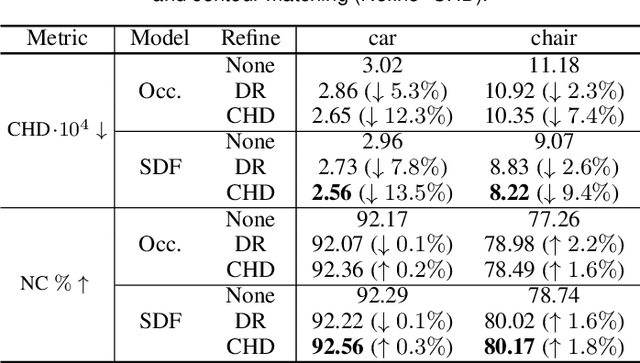

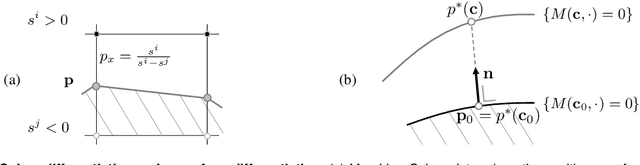

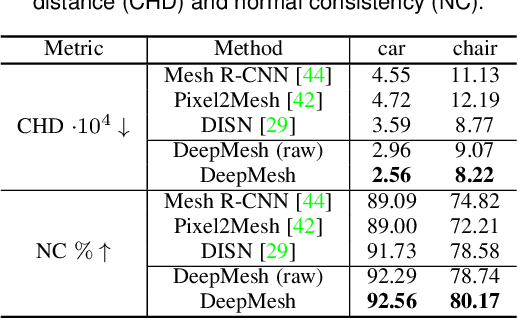

Abstract:Geometric Deep Learning has recently made striking progress with the advent of continuous Deep Implicit Fields. They allow for detailed modeling of watertight surfaces of arbitrary topology while not relying on a 3D Euclidean grid, resulting in a learnable parameterization that is unlimited in resolution. Unfortunately, these methods are often unsuitable for applications that require an explicit mesh-based surface representation because converting an implicit field to such a representation relies on the Marching Cubes algorithm, which cannot be differentiated with respect to the underlying implicit field. In this work, we remove this limitation and introduce a differentiable way to produce explicit surface mesh representations from Deep Implicit Fields. Our key insight is that by reasoning on how implicit field perturbations impact local surface geometry, one can ultimately differentiate the 3D location of surface samples with respect to the underlying deep implicit field. We exploit this to define DeepMesh, an end-to-end differentiable mesh representation that can vary its topology. We use two different applications to validate our theoretical insight: Single-View 3D Reconstruction via Differentiable Rendering and Physically-Driven Shape Optimization. In both cases our end-to-end differentiable parameterization gives us an edge over state-of-the-art algorithms.

MeshSDF: Differentiable Iso-Surface Extraction

Jun 06, 2020

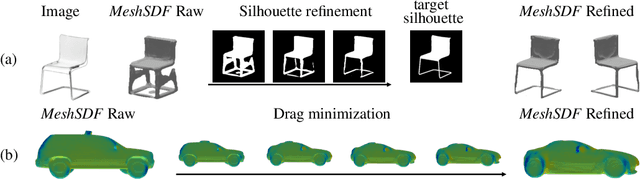

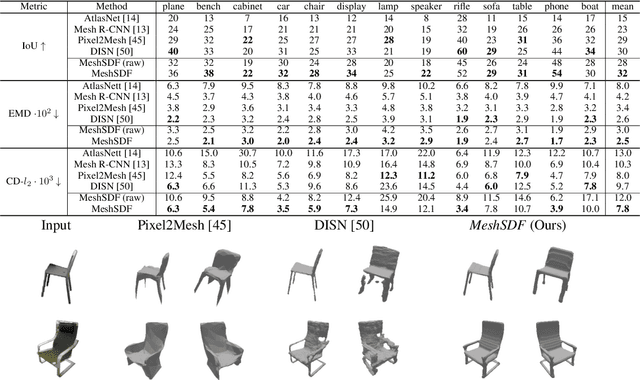

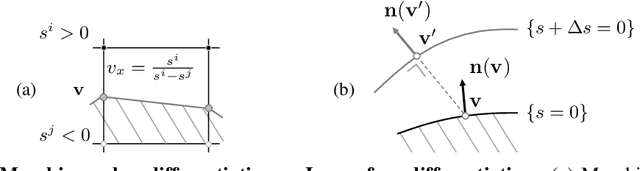

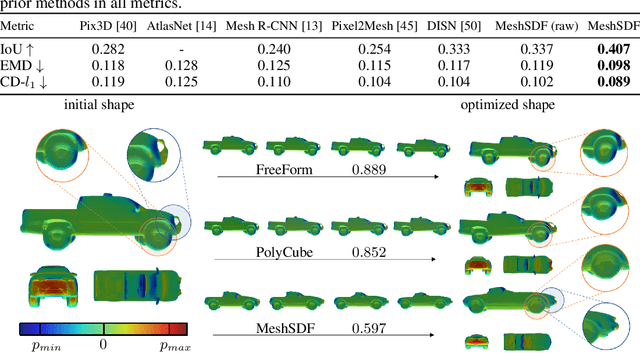

Abstract:Geometric Deep Learning has recently made striking progress with the advent of continuous Deep Implicit Fields. They allow for detailed modeling of watertight surfaces of arbitrary topology while not relying on a 3D Euclidean grid, resulting in a learnable parameterization that is not limited in resolution. Unfortunately, these methods are often not suitable for applications that require an explicit mesh-based surface representation because converting an implicit field to such a representation relies on the Marching Cubes algorithm, which cannot be differentiated with respect to the underlying implicit field. In this work, we remove this limitation and introduce a differentiable way to produce explicit surface mesh representations from Deep Signed Distance Functions. Our key insight is that by reasoning on how implicit field perturbations impact local surface geometry, one can ultimately differentiate the 3D location of surface samples with respect to the underlying deep implicit field. We exploit this to define MeshSDF, an end-to-end differentiable mesh representation which can vary its topology. We use two different applications to validate our theoretical insight: Single-View Reconstruction via Differentiable Rendering and Physically-Driven Shape Optimization. In both cases our differentiable parameterization gives us an edge over state-of-the-art algorithms.
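The key insight can be checked numerically on an analytic shape. For a signed distance field s with unit-length gradient, raising the field value at a surface point v by a small δ moves the zero level set by −δ along the outward normal n = ∇s(v), that is, ∂v/∂s = −n. The sketch below uses a sphere in place of a learned network; the function names and the finite-difference gradient are assumptions made for illustration.

```python
import math


def sphere_sdf(p, radius=1.0):
    # Signed distance to a sphere centered at the origin.
    return math.sqrt(sum(c * c for c in p)) - radius


def gradient(f, p, h=1e-5):
    """Central finite-difference gradient of the scalar field f at p."""
    g = []
    for i in range(len(p)):
        hi, lo = list(p), list(p)
        hi[i] += h
        lo[i] -= h
        g.append((f(hi) - f(lo)) / (2.0 * h))
    return g


def moved_sample(f, v, delta):
    """First-order new position of surface sample v when f(v) rises by delta."""
    n = gradient(f, v)
    norm = math.sqrt(sum(c * c for c in n))
    # dv/ds = -n: the sample slides against the outward normal.
    return [vc - delta * nc / norm for vc, nc in zip(v, n)]


if __name__ == "__main__":
    v = [0.6, 0.0, 0.8]  # a point on the unit sphere
    delta = 0.05
    v_new = moved_sample(sphere_sdf, v, delta)
    # The perturbed field is sphere_sdf + delta; v_new should lie on its zero set.
    print(v_new, sphere_sdf(v_new) + delta)
```

For a uniform offset of a sphere the prediction is exact up to finite-difference error; for a general learned field it is a first-order approximation, which is what a gradient-based pipeline needs in order to pass mesh-level losses back to the field.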
