Andriy Sarabakha

Modelling of Underwater Vehicles using Physics-Informed Neural Networks with Control

Apr 28, 2025

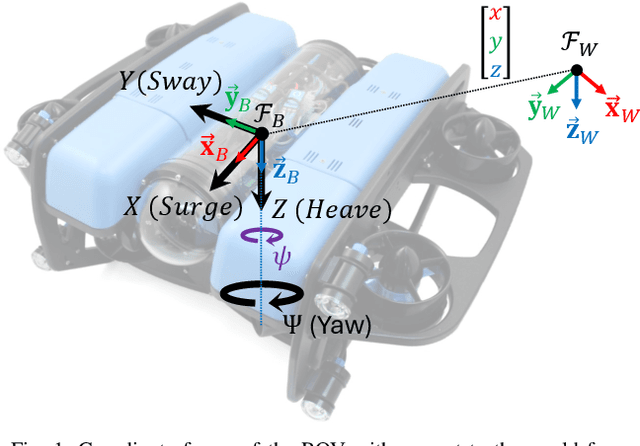

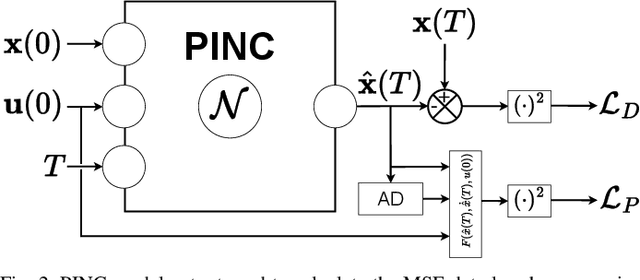

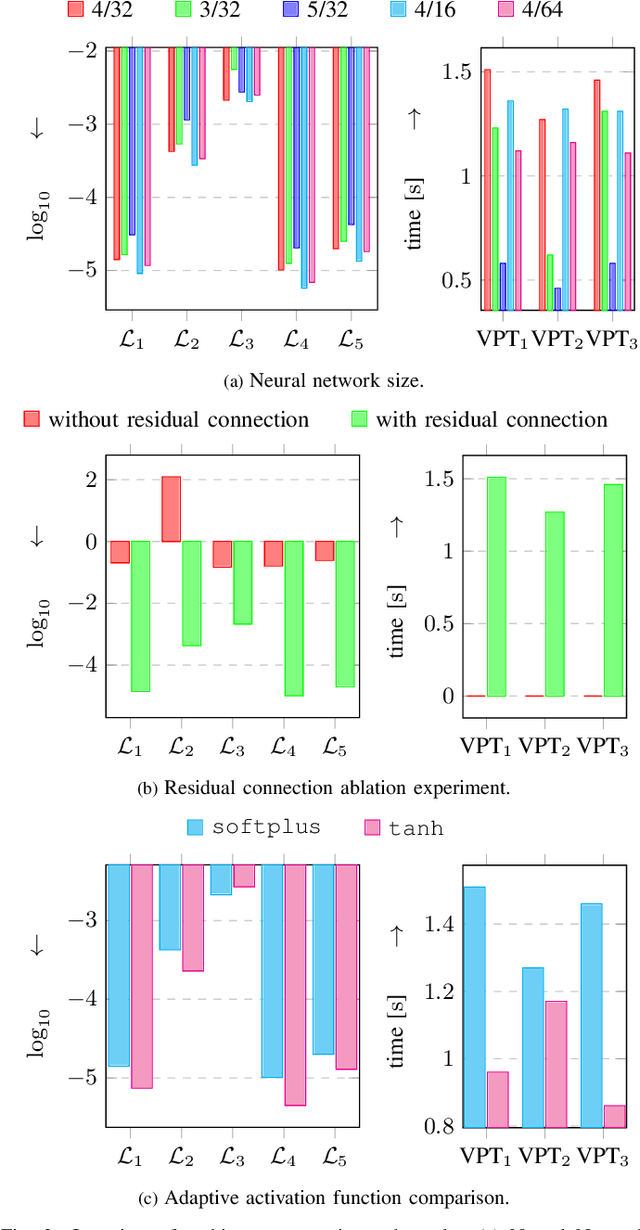

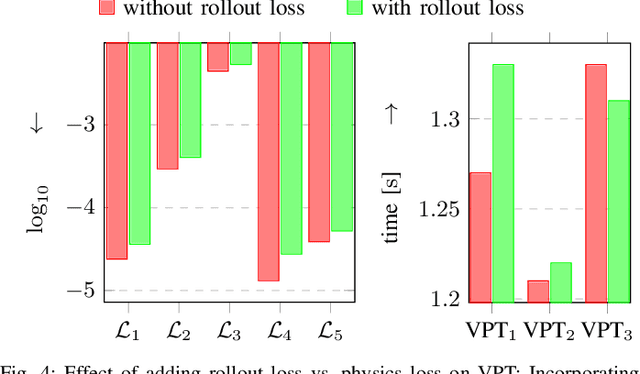

Abstract: Physics-informed neural networks (PINNs) integrate physical laws with data-driven models to improve generalization and sample efficiency. This work introduces an open-source implementation of the Physics-Informed Neural Network with Control (PINC) framework, designed to model the dynamics of an underwater vehicle. Using initial states, control actions, and time inputs, PINC extends PINNs to enable physically consistent transitions beyond the training domain. Various PINC configurations are tested, including differing loss functions, gradient-weighting schemes, and hyperparameters. Validation on a simulated underwater vehicle demonstrates more accurate long-horizon predictions than a non-physics-informed baseline.
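As a rough illustration of how a model that takes an initial state, a control action, and a time input can be chained for long-horizon prediction, the sketch below feeds each predicted state back in as the next initial state. Everything in it is an assumption made for illustration: `surrogate_step` is a hypothetical stand-in for a trained network (here the closed-form solution of a toy 1-D surge model, dv/dt = u - drag * v), and `rollout`, `drag`, and `interval` are hypothetical names, not part of the paper's released code.

```python
import math


def surrogate_step(v0, u, t, drag=0.5):
    """Hypothetical stand-in for a trained PINC network.

    Maps (initial state v0, control u, time t) to the state at time t.
    Assumption: toy surge model dv/dt = u - drag * v, solved in closed form.
    """
    v_inf = u / drag  # steady-state velocity under constant control u
    return v_inf + (v0 - v_inf) * math.exp(-drag * t)


def rollout(v0, controls, interval):
    """Chain short-horizon predictions: each output is the next initial state."""
    states = [v0]
    for u in controls:
        states.append(surrogate_step(states[-1], u, interval))
    return states


# Three control intervals of 0.5 s each, constant control input of 1.0.
trajectory = rollout(0.0, [1.0, 1.0, 1.0], 0.5)
```

With constant control, chaining three 0.5 s steps of this toy model matches a single 1.5 s prediction, which is the kind of consistency a physics-informed model is meant to preserve across transitions.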

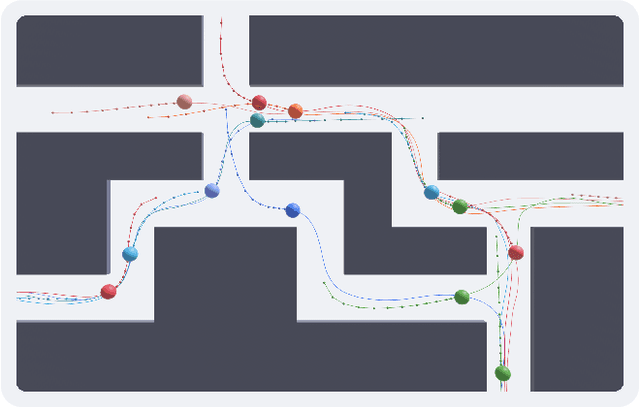

Multi-Agent Path Planning in Complex Environments using Gaussian Belief Propagation with Global Path Finding

Feb 27, 2025

Abstract: Multi-agent path planning is a critical challenge in robotics, requiring agents to navigate complex environments while avoiding collisions and optimizing travel efficiency. This work addresses the limitations of existing approaches by combining Gaussian belief propagation with path integration and introducing a novel tracking factor to ensure strict adherence to global paths. The proposed method is tested with two different global path-planning approaches: rapidly exploring random trees and a structured planner, which leverages predefined lane structures to improve coordination. A simulation environment was developed to validate the proposed method across diverse scenarios, each posing unique challenges in navigation and communication. Simulation results demonstrate that the tracking factor reduces path deviation by 28% in single-agent and 16% in multi-agent scenarios, highlighting its effectiveness in improving multi-agent coordination, especially when combined with structured global planning.
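The abstract does not say how path deviation is measured. One plausible reading, sketched below purely as an assumption, is the mean distance from each recorded agent position to the nearest point on the global path polyline, with the reported percentages being relative reductions of that quantity. The function names and the sample coordinates are hypothetical and are not data from the paper.

```python
import math


def point_to_segment(p, a, b):
    """Euclidean distance from point p to the segment a-b (2-D tuples)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)  # degenerate segment
    # Projection parameter, clamped so the closest point stays on the segment.
    s = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + s * dx), py - (ay + s * dy))


def mean_path_deviation(trajectory, path):
    """Mean distance from each trajectory point to the nearest path segment."""
    segments = list(zip(path, path[1:]))
    distances = [min(point_to_segment(p, a, b) for a, b in segments)
                 for p in trajectory]
    return sum(distances) / len(distances)


def percent_reduction(baseline, improved):
    """Relative reduction of `improved` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - improved) / baseline


# Illustrative numbers only (not results from the paper).
global_path = [(0.0, 0.0), (10.0, 0.0)]
without_tracking = [(1.0, 1.0), (5.0, 1.0), (9.0, 1.0)]
with_tracking = [(1.0, 0.5), (5.0, 0.5), (9.0, 0.5)]
reduction = percent_reduction(
    mean_path_deviation(without_tracking, global_path),
    mean_path_deviation(with_tracking, global_path),
)
```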

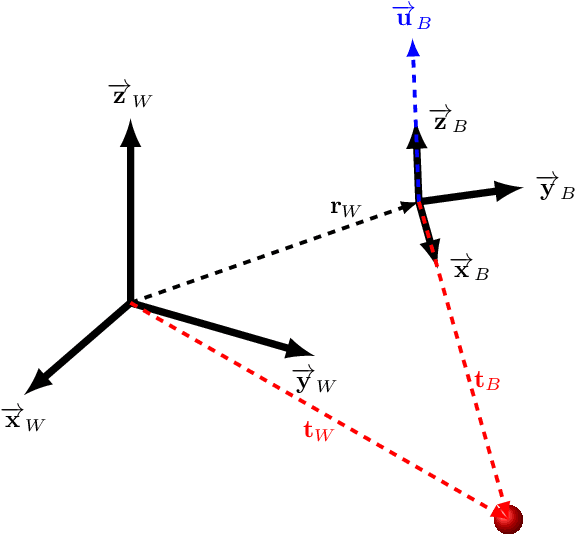

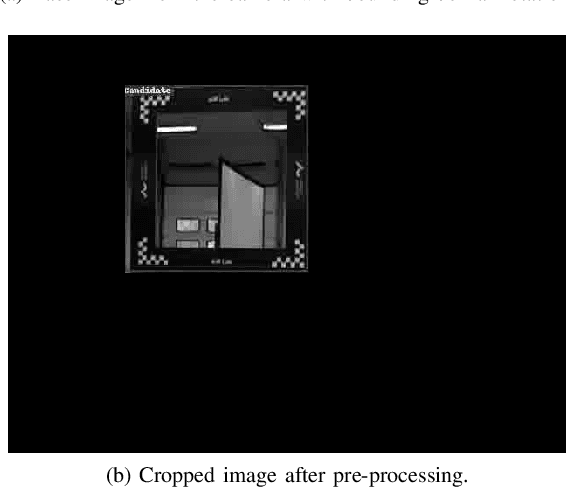

Continual Learning for Robust Gate Detection under Dynamic Lighting in Autonomous Drone Racing

May 02, 2024

Abstract: In autonomous and mobile robotics, a principal challenge is resilient real-time environmental perception, particularly in unknown and dynamic settings such as autonomous drone racing. This study introduces a perception technique for detecting drone racing gates under illumination variations, which are common during high-speed drone flights. The proposed technique relies on a lightweight neural network backbone augmented with continual learning capabilities. The approach combines predictions of the gates' positional coordinates, distance, and orientation into a single pose tuple. An extensive set of tests demonstrates the efficacy of this approach in diverse and challenging scenarios, specifically those involving variable lighting conditions, and shows that the proposed method is robust to illumination variations.

anafi_ros: from Off-the-Shelf Drones to Research Platforms

Mar 03, 2023

Abstract: Off-the-shelf drones are simple-to-operate and easy-to-maintain aerial systems. However, due to proprietary flight software, these drones usually do not provide an open-source interface that would enable autonomous flight in research or teaching. This work introduces a package for ROS1 and ROS2 for straightforward interfacing with off-the-shelf drones from the Parrot ANAFI family. The developed ROS package is hardware agnostic and connects seamlessly to all four supported drone models. The framework can connect with the same ease to a single drone or to a team of drones from the same ground station. The developed package was intensively tested at the limits of the drones' capabilities and thoughtfully documented to facilitate its use by other research groups worldwide.

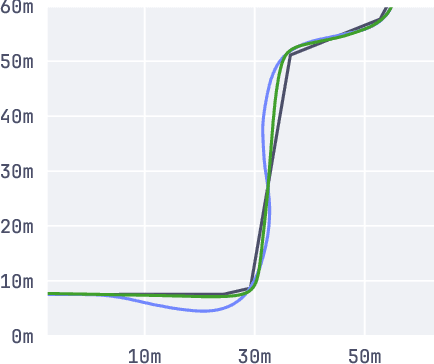

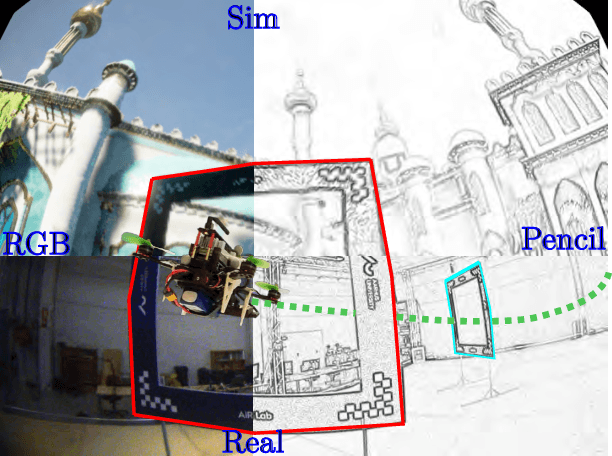

PencilNet: Zero-Shot Sim-to-Real Transfer Learning for Robust Gate Perception in Autonomous Drone Racing

Jul 28, 2022

Abstract: In autonomous and mobile robotics, one of the main challenges is the robust on-the-fly perception of the environment, which is often unknown and dynamic, as in autonomous drone racing. In this work, we propose a novel deep neural network-based perception method for racing gate detection, PencilNet, which relies on a lightweight neural network backbone on top of a pencil filter. This approach unifies predictions of the gates' 2D position, distance, and orientation in a single pose tuple. We show that our method is effective for zero-shot sim-to-real transfer learning that does not need any real-world training samples. Moreover, our framework is highly robust to the illumination changes commonly seen under rapid flight compared to state-of-the-art methods. A thorough set of experiments demonstrates the effectiveness of this approach in multiple challenging scenarios, where the drone completes various tracks under different lighting conditions.

Towards Remote Robotic Competitions: An Internet-Connected Task Board and Dashboard

Jan 24, 2022

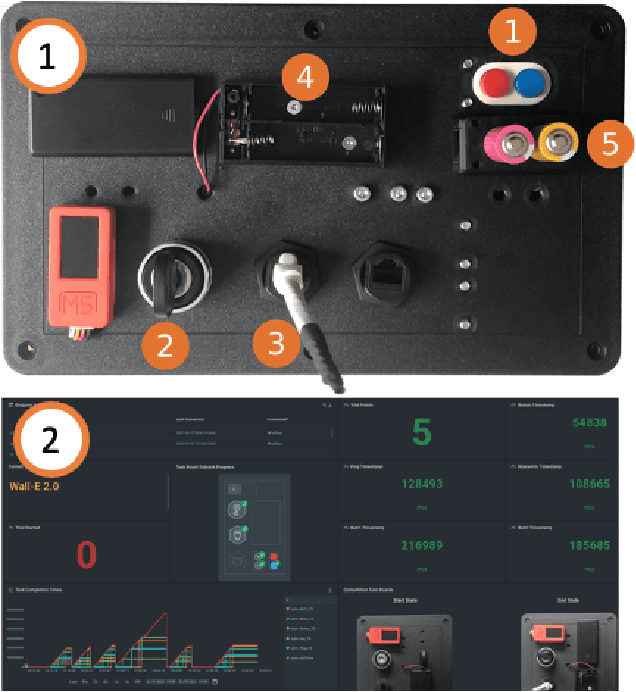

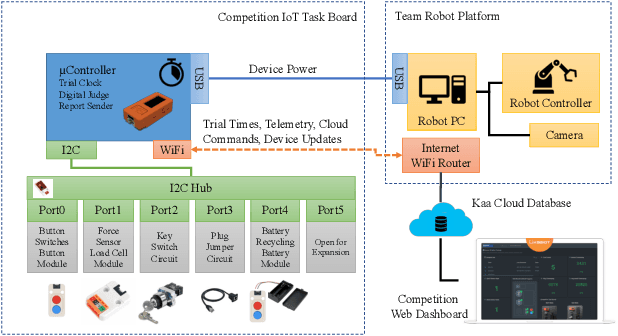

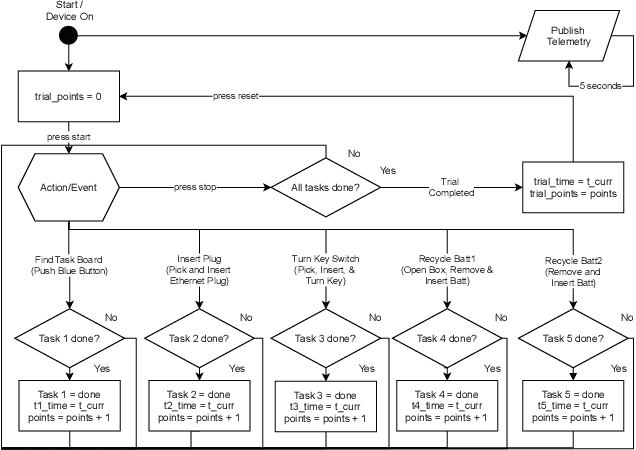

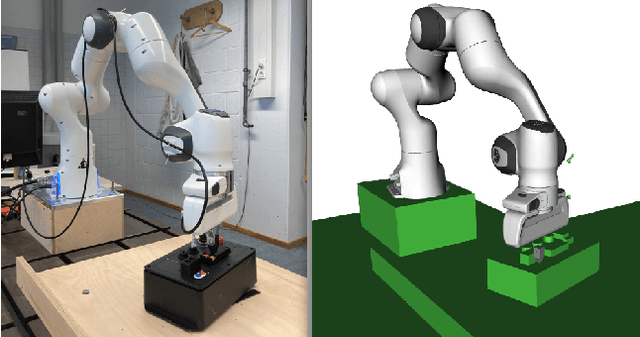

Abstract: In this work, we present a platform to assess robot platform skills using an internet-of-things (IoT) task board device to aggregate performances across remote sites. We demonstrate a concept for a modular, scalable device and web dashboard enabling remote competitions as an alternative to in-person robot competitions. We share data from nine robot platforms located across four continents in three manipulation task categories (object localization, object insertion, and component disassembly) collected through an organized international robot competition, the Robothon Grand Challenge. This paper discusses the design of an electronic task board and the strategies implemented by the top-performing teams, and compares their results with a benchmark solution to the presented task board. Through this platform, we demonstrate that fully remote, online competitions can generate innovative robotic solutions, and we test a tool for measuring remote performances. Using the open-sourced task board code and design files, the reader can reproduce the benchmark solution or configure the platform for their own use case and share their results transparently without transporting their robot platform.

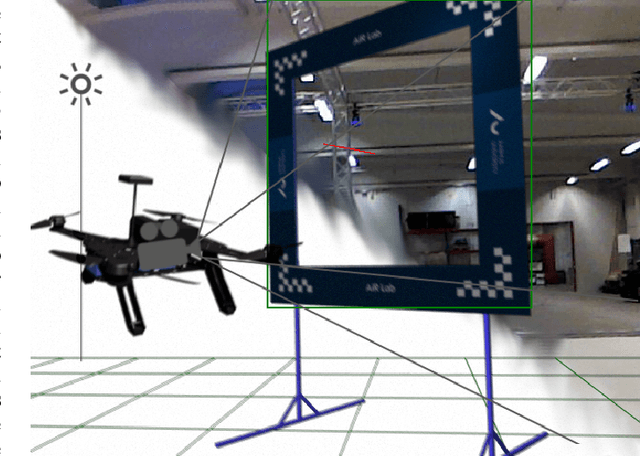

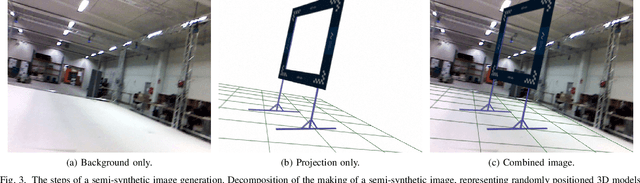

Image Generation for Efficient Neural Network Training in Autonomous Drone Racing

Aug 06, 2020

Abstract: Drone racing is a recreational sport in which the goal is to pass through a sequence of gates in a minimum amount of time while avoiding collisions. In autonomous drone racing, one must accomplish this task by flying fully autonomously in an unknown environment, relying only on computer vision methods to detect the target gates. Due to challenges such as background objects and varying lighting conditions, traditional object detection algorithms based on colour or geometry tend to fail. Convolutional neural networks offer impressive advances in computer vision but require an immense amount of data to learn. Collecting this data is a tedious process because the drone has to be flown manually, and the data collected can suffer from sensor failures. In this work, a semi-synthetic dataset generation method is proposed, using a combination of real background images and randomised 3D renders of the gates, to provide a limitless amount of training samples that do not suffer from those drawbacks. Using the detection results, a line-of-sight guidance algorithm is used to cross the gates. In several experimental real-time tests, the proposed framework successfully demonstrates fast and reliable detection and navigation.
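The abstract names a line-of-sight guidance algorithm but gives no details. As a generic sketch under stated assumptions (a pinhole camera, a detected gate centre in pixel coordinates, and simple proportional gains), the bearing to the gate can be turned into yaw and climb commands as below. The function name, its parameters, and the gains are hypothetical and do not describe the paper's actual guidance law.

```python
import math


def line_of_sight_command(gate_center_px, image_size_px, focal_length_px,
                          yaw_gain=1.0, climb_gain=1.0):
    """Proportional line-of-sight steering from a detected gate centre.

    Assumptions: pinhole camera aligned with the drone's forward axis,
    image origin at the top-left corner, pixel v increasing downward.
    Returns (yaw_rate_cmd, climb_rate_cmd) in gain-scaled radians.
    """
    u, v = gate_center_px
    width, height = image_size_px
    dx = u - width / 2.0   # horizontal offset from the optical axis
    dy = v - height / 2.0  # vertical offset from the optical axis
    yaw_error = math.atan2(dx, focal_length_px)     # positive: gate to the right
    pitch_error = math.atan2(-dy, focal_length_px)  # positive: gate above centre
    return yaw_gain * yaw_error, climb_gain * pitch_error


# Gate detected at the right edge of a 640x480 image, focal length 320 px.
yaw_cmd, climb_cmd = line_of_sight_command((640.0, 240.0), (640, 480), 320.0)
```

A gate centred in the image yields zero commands, so the drone keeps flying straight; any offset steers the camera axis back toward the gate.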

Online Deep Learning for Improved Trajectory Tracking of Unmanned Aerial Vehicles Using Expert Knowledge

May 26, 2019

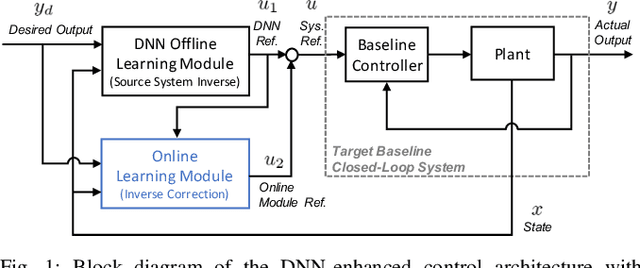

Abstract: This work presents an online learning-based control method for improved trajectory tracking of unmanned aerial vehicles using both deep learning and expert knowledge. The proposed method does not require an exact model of the system to be controlled, and it is robust against variations in system dynamics as well as operational uncertainties. The learning is divided into two phases: offline (pre-)training and online (post-)training. In the former, a conventional controller performs a set of trajectories and, based on the resulting input-output dataset, the deep neural network (DNN)-based controller is trained. In the latter, the trained DNN, which mimics the conventional controller, controls the system. Unlike existing approaches in the literature, the network continues to be trained online on sets of trajectories that were not used in the offline training phase. Thanks to the rule base, which contains the expert knowledge, the proposed framework learns the system dynamics and operational uncertainties in real time. The experimental results show that the proposed online learning-based approach achieves better trajectory tracking performance than the network trained only offline.

Knowledge Transfer Between Robots with Similar Dynamics for High-Accuracy Impromptu Trajectory Tracking

Mar 30, 2019

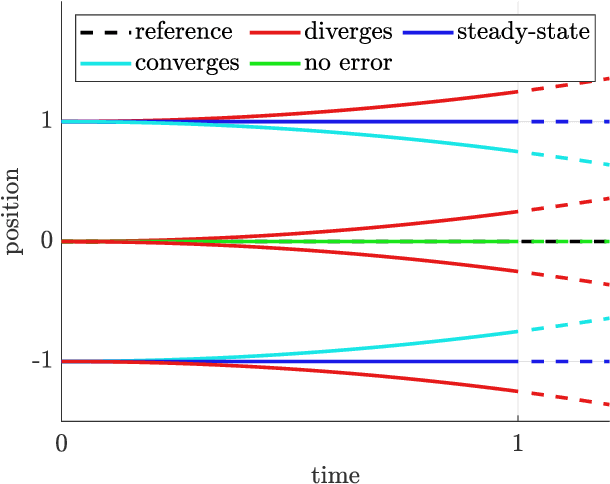

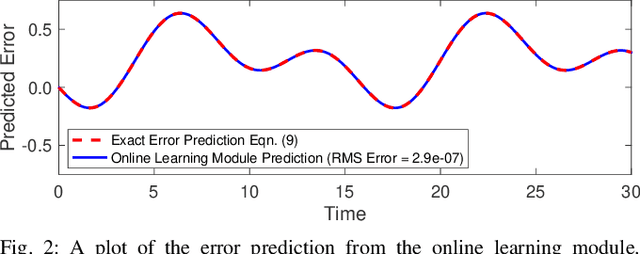

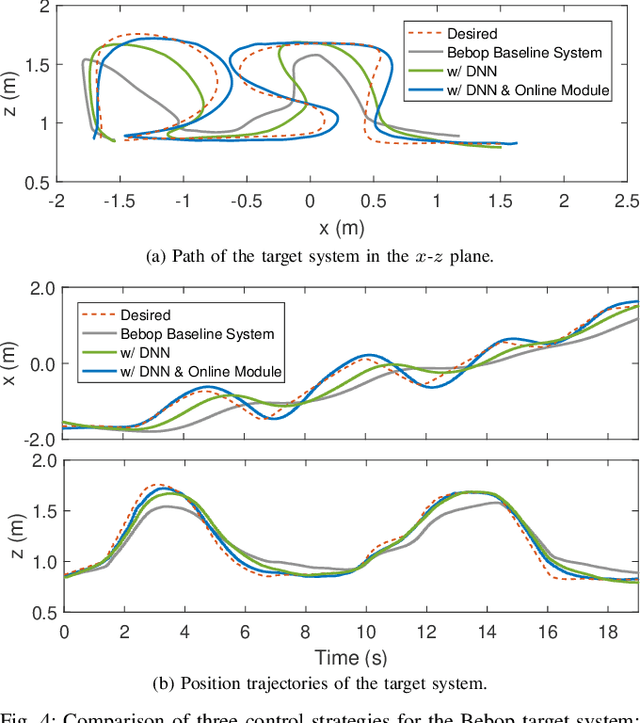

Abstract: In this paper, we propose an online learning approach that enables the inverse dynamics model learned for a source robot to be transferred to a target robot (e.g., from one quadrotor to another quadrotor with different mass or aerodynamic properties). The goal is to leverage knowledge from the source robot such that the target robot achieves high-accuracy trajectory tracking on arbitrary trajectories from the first attempt with minimal data recollection and training. Most existing approaches for multi-robot knowledge transfer are based on post-analysis of datasets collected from both robots. In this work, we study the feasibility of impromptu transfer of models across robots by learning an error prediction module online. In particular, we analytically derive the form of the mapping to be learned by the online module for exact tracking, propose an approach for characterizing similarity between robots, and use these results to analyze the stability of the overall system. The proposed approach is illustrated in simulation and verified experimentally on two different quadrotors performing impromptu trajectory tracking tasks, where the quadrotors are required to accurately track arbitrary hand-drawn trajectories from the first attempt.
