Huy Xuan Pham

Visual Tracking Nonlinear Model Predictive Control Method for Autonomous Wind Turbine Inspection

Oct 21, 2023

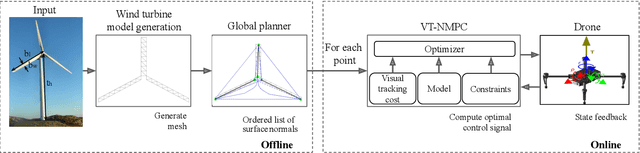

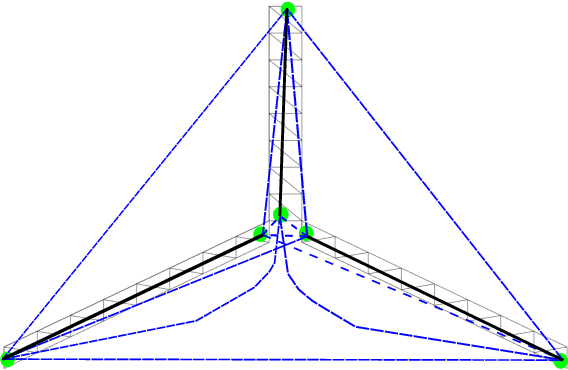

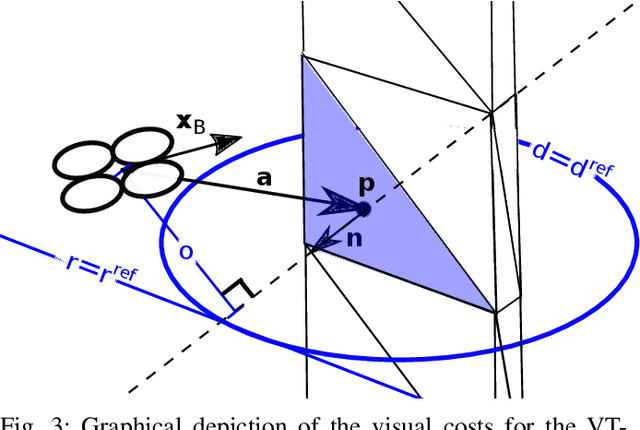

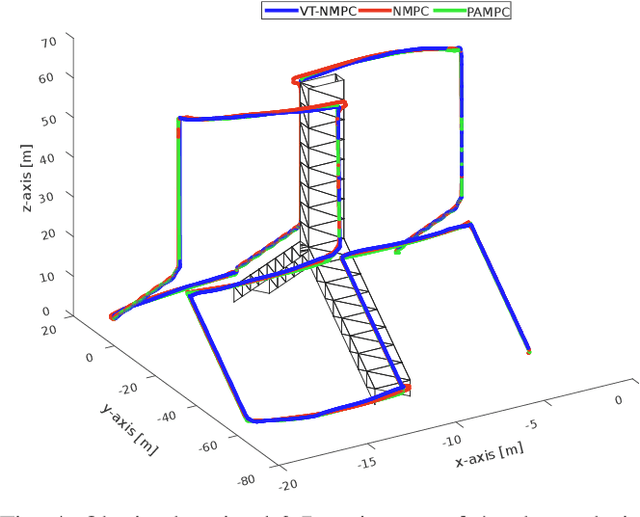

Abstract: Automated visual inspection of on- and offshore wind turbines using aerial robots provides several benefits: a safe working environment that removes the need for workers to be suspended high above the ground, reduced inspection time, preventive maintenance, and access to hard-to-reach areas. A novel nonlinear model predictive control (NMPC) framework, alongside a global wind turbine path planner, is proposed to achieve distance-optimal coverage for wind turbine inspection. Unlike traditional MPC formulations, visual tracking NMPC (VT-NMPC) is designed to track an inspection surface instead of a position and heading trajectory, thereby circumventing the need to provide an accurate predefined trajectory for the drone. Because the proposed VT-NMPC method incorporates the inspection requirements as visual tracking costs to be minimized, it accomplishes the inspection task while respecting the physical constraints of the drone. Multiple simulation runs and real-world tests demonstrate the efficiency and efficacy of the proposed automated inspection framework, which outperforms traditional MPC designs by providing full coverage of the target wind turbine blades and by remaining robust to changing wind conditions. The implementation code is open source.

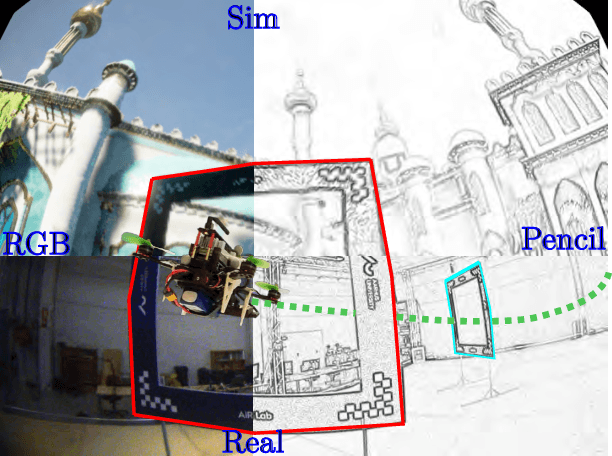

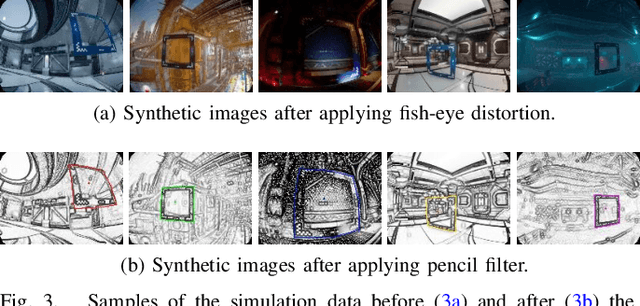

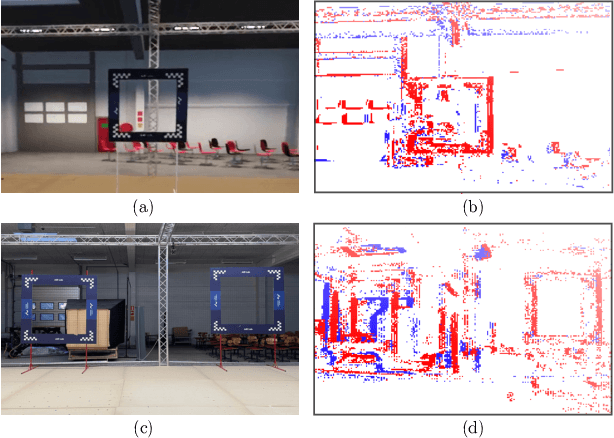

PencilNet: Zero-Shot Sim-to-Real Transfer Learning for Robust Gate Perception in Autonomous Drone Racing

Jul 28, 2022

Abstract: In autonomous and mobile robotics, one of the main challenges is robust on-the-fly perception of the environment, which is often unknown and dynamic, as in autonomous drone racing. In this work, we propose PencilNet, a novel deep neural network-based perception method for racing gate detection that relies on a lightweight neural network backbone on top of a pencil filter. This approach unifies predictions of the gates' 2D position, distance, and orientation in a single pose tuple. We show that our method is effective for zero-shot sim-to-real transfer learning that does not need any real-world training samples. Moreover, compared to state-of-the-art methods, our framework is highly robust to the illumination changes commonly seen during rapid flight. A thorough set of experiments demonstrates the effectiveness of this approach in multiple challenging scenarios, where the drone completes various tracks under different lighting conditions.

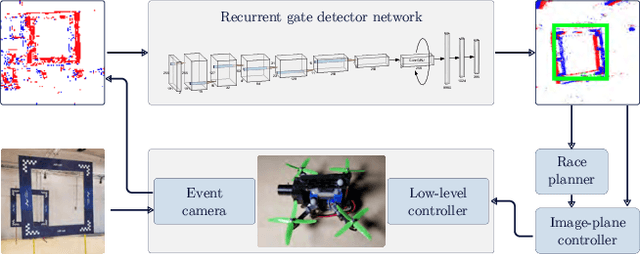

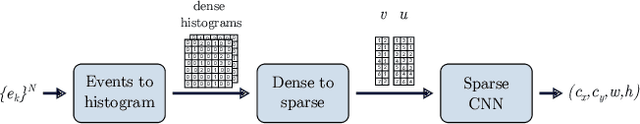

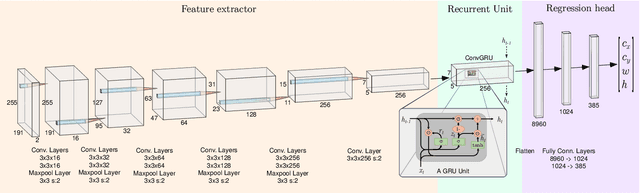

Event-based Navigation for Autonomous Drone Racing with Sparse Gated Recurrent Network

Apr 05, 2022

Abstract: Event-based vision has already revolutionized the perception task for robots by promising faster response, lower energy consumption, and lower bandwidth without introducing motion blur. In this work, a novel deep learning method for the autonomous drone racing problem is proposed: it detects the gates of a race track from event-based vision using gated recurrent units with sparse convolutions. We demonstrate the efficiency and efficacy of the perception pipeline on a real robot platform that can safely navigate a typical autonomous drone racing track in real time. Throughout the experiments, we show that event-based vision, combined with the proposed gated recurrent unit and models pretrained on simulated event data, significantly improves gate detection precision. Furthermore, an event-based drone racing dataset consisting of both simulated and real data sequences is publicly released.

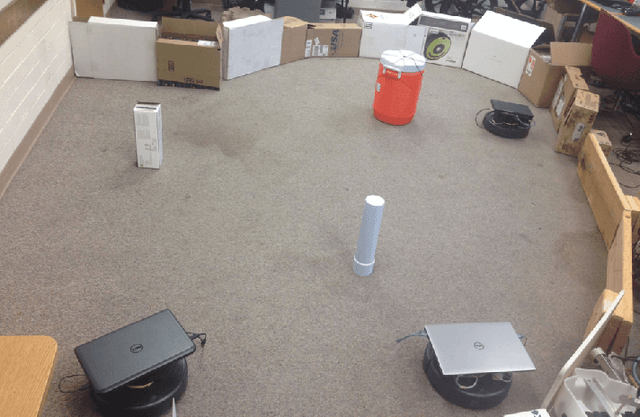

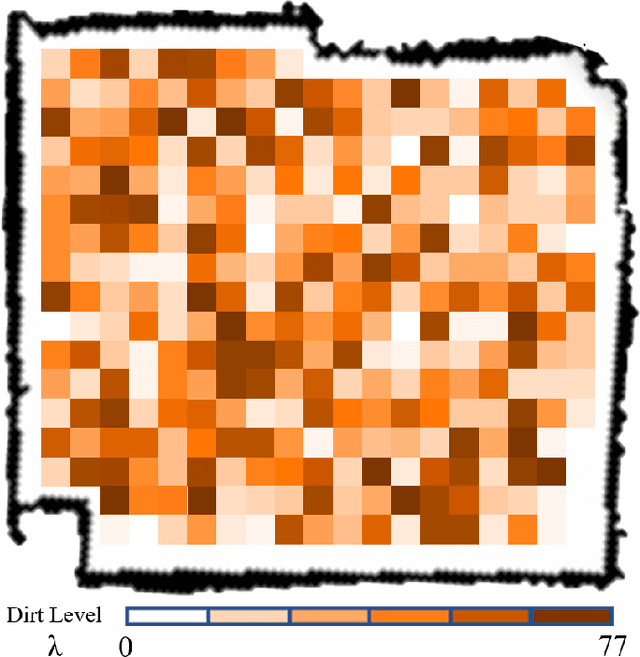

A Multi-Robotic System for Environmental Cleaning

Nov 02, 2018

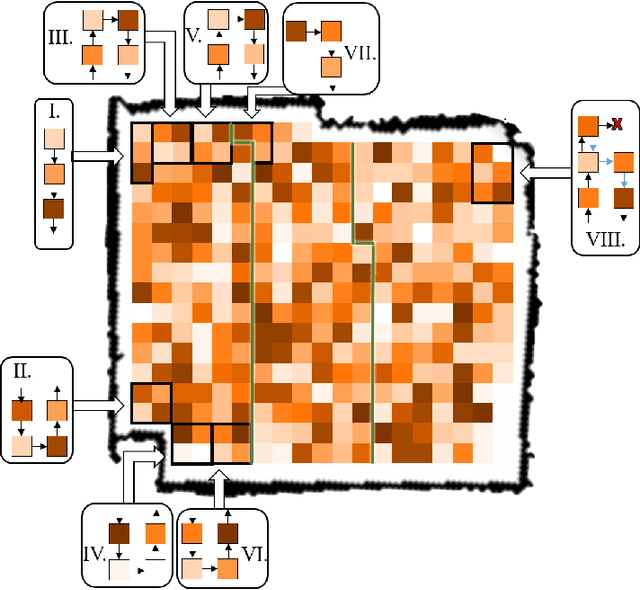

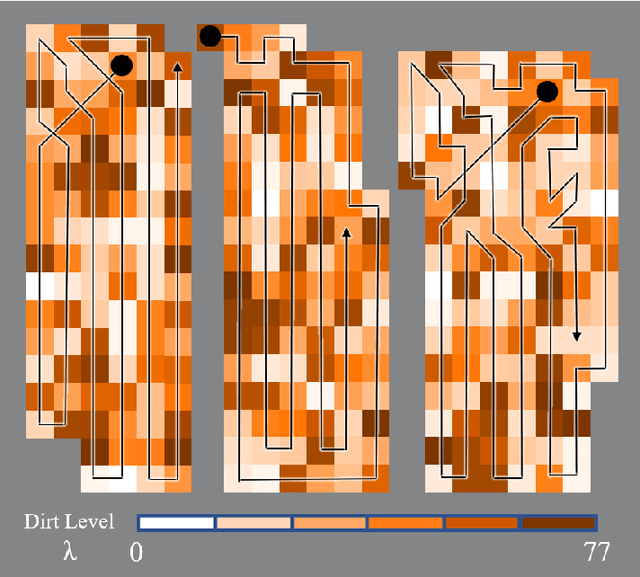

Abstract: Industrial environments contain a lot of waste that can harm both products and workers, resulting in product defects, itchy eyes, chronic obstructive pulmonary disease, and other problems. While automated cleaning robots could be used, the environment is often too big for one robot to clean alone, and a single robot does not have adequate dirt storage capacity. We present a multi-robotic dirt cleaning algorithm that lets a team of multiple automatic iRobot Creates clean an environment efficiently. Moreover, since some spaces in the environment are clean while others are dirty, our multi-robotic system includes a path planning algorithm that allows the robot team to clean efficiently by spending more time on areas with a higher dirt level. Overall, our multi-robotic system outperforms the single-robot system in time efficiency while having almost the same total battery usage and cleaning efficiency.
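
The idea of spending more time on dirtier areas can be illustrated with a minimal sketch. This is not the paper's path planning algorithm; it only assumes, for illustration, that each area has a known numeric dirt level and that a fixed time budget is split in proportion to it. The function name `allocate_cleaning_time` and the area labels are hypothetical.

```python
def allocate_cleaning_time(dirt_levels, total_time):
    """Split a time budget across areas in proportion to their dirt level.

    dirt_levels: dict mapping an area label to a non-negative dirt level
                 (hypothetical input format, assumed for this sketch).
    total_time:  total cleaning time available, in any consistent unit.
    """
    total_dirt = sum(dirt_levels.values())
    if total_dirt == 0:
        # Nothing is dirty, so no cleaning time is assigned anywhere.
        return {area: 0.0 for area in dirt_levels}
    return {
        area: total_time * level / total_dirt
        for area, level in dirt_levels.items()
    }


if __name__ == "__main__":
    # An area that is three times dirtier receives three times the time.
    print(allocate_cleaning_time({"hall": 1, "workshop": 3}, total_time=40))
```

A proportional split is only one possible weighting; a real planner would also have to account for travel time between areas and for each robot's dirt capacity.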

Cooperative and Distributed Reinforcement Learning of Drones for Field Coverage

Sep 16, 2018

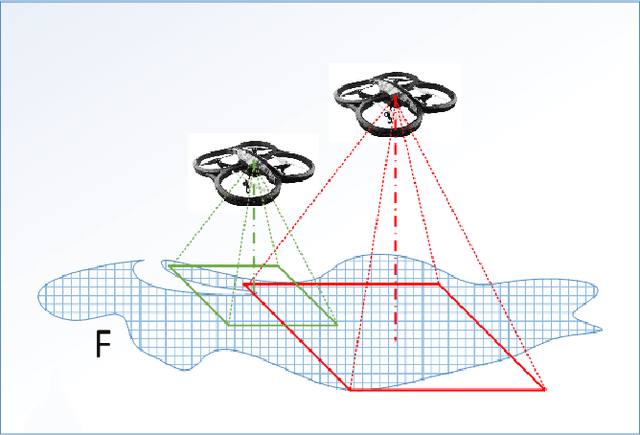

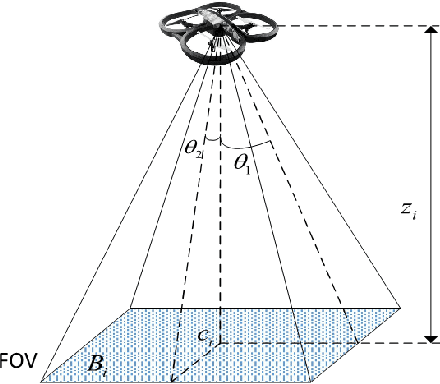

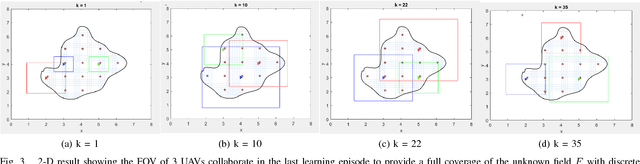

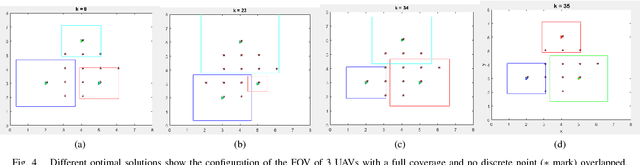

Abstract: This paper proposes a distributed Multi-Agent Reinforcement Learning (MARL) algorithm for a team of Unmanned Aerial Vehicles (UAVs). The proposed MARL algorithm allows the UAVs to learn cooperatively to provide full coverage of an unknown field of interest while minimizing the overlap among their fields of view. Two challenges in MARL for such a system are discussed in the paper: first, the complex dynamics of the joint actions of the UAV team, which are handled using a game-theoretic correlated equilibrium; and second, the very high-dimensional state space representation, which is tackled with efficient function approximation techniques. We also report detailed experimental results from both simulation and physical implementation, showing that the UAV team can successfully learn to accomplish the task.
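
The coverage objective described above, covering as much of the field as possible while penalizing overlapping fields of view, can be sketched as a simple team score on a grid. This is an illustrative assumption, not the reward used in the paper: the square footprint model, the `(row, col, half_width)` tuple format, and the `overlap_penalty` weight are all hypothetical.

```python
def coverage_and_overlap(rows, cols, footprints):
    """Count grid cells seen by at least one UAV and by more than one UAV.

    footprints: list of (row, col, half_width) tuples, one per UAV, each
                describing a square field of view clipped to the grid
                (hypothetical model, assumed for this sketch).
    """
    counts = [[0] * cols for _ in range(rows)]
    for r, c, h in footprints:
        for i in range(max(r - h, 0), min(r + h + 1, rows)):
            for j in range(max(c - h, 0), min(c + h + 1, cols)):
                counts[i][j] += 1
    covered = sum(1 for row in counts for n in row if n >= 1)
    overlapped = sum(1 for row in counts for n in row if n >= 2)
    return covered, overlapped


def team_reward(rows, cols, footprints, overlap_penalty=1.0):
    """Reward covered cells and penalize cells seen by several UAVs."""
    covered, overlapped = coverage_and_overlap(rows, cols, footprints)
    return covered - overlap_penalty * overlapped


if __name__ == "__main__":
    # Two UAVs with 3x3 footprints whose views share a 2x2 block.
    print(team_reward(4, 4, [(1, 1, 1), (2, 2, 1)]))
```

In a learning setting, a score of this kind would be evaluated on the joint action of the whole team, which is why the abstract treats joint-action dynamics as a central difficulty.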

A Distributed Control Framework of Multiple Unmanned Aerial Vehicles for Dynamic Wildfire Tracking

Mar 20, 2018

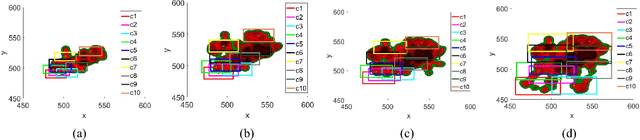

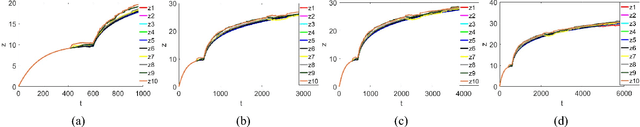

Abstract: Wildland firefighting is a hazardous job. A key task for firefighters is to observe the "fire front" to chart the progress of the fire and the areas to which it will likely spread next. Lack of information about the fire front causes many accidents. Using Unmanned Aerial Vehicles (UAVs) to cover a wildfire is promising because they can replace humans in hazardous fire tracking and significantly reduce operating costs. In this paper, we propose a distributed control framework for a team of UAVs that can closely monitor a wildfire in open space and precisely track its development. The UAV team, designed for flexible deployment, can effectively avoid in-flight collisions and cooperate well with neighbors. The UAVs can also maintain a certain height above the ground for safe flight over the fire. Experiments are conducted to demonstrate the capabilities of the UAV team in covering a spreading wildfire.
