David Feil-Seifer

SAR4SLPs: An Asynchronous Survey of Speech-Language Pathologists' Perspectives on Socially Assistive Robots

Apr 22, 2025

Abstract: Socially Assistive Robots (SARs) offer unique opportunities within speech-language pathology (SLP) education and practice by supporting interactive interventions for children with communication disorders. This paper explores the implementation of SAR4SLPs (Socially Assistive Robots for Speech-Language Pathologists) to investigate aspects such as engagement, therapeutic strategy discipline, and consistent intervention support. We assessed the current application of technology to clinical and educational settings, especially with respect to how SLPs might use SARs in their therapeutic work. An asynchronous remote community (ARC) collaborated with a cohort of practicing SLPs to consider the feasibility, potential effectiveness, and anticipated challenges of implementing SARs in day-to-day interventions and as practice facilitators. We focus in particular on the expressive functionality of SARs, modeling a foundational strategy that SLPs employ across various intervention targets. This paper highlights clinician-driven insights and design implications for developing SARs that support specific treatment goals through collaborative and iterative design.

Design Activity for Robot Faces: Evaluating Child Responses To Expressive Faces

Apr 10, 2025

Abstract: Facial expressiveness plays a crucial role in a robot's ability to engage and interact with children. Prior research has shown that expressive robots can enhance child engagement during human-robot interactions. However, many robots used in therapy settings feature non-personalized, static faces designed with traditional facial feature considerations, which can limit the depth of interactions and emotional connections. Digital faces offer opportunities for personalization, yet the current landscape of robot face design lacks a dynamic, user-centered approach. Specifically, there is a significant research gap in designing robot faces based on child preferences. Instead, most robots in child-focused therapy spaces are developed from an adult-centric perspective. We present a novel study investigating the influence of child-drawn digital faces in child-robot interactions. This approach focuses on a design activity with children instructed to draw their own custom robot faces. We compare the perceptions of social intelligence (PSI) of two implementations: a generic digital face and a robot face personalized using the user's drawn robot faces. The results of this study show that the perceived social intelligence of a child-drawn robot face was significantly higher than that of a generic face.

Through the Clutter: Exploring the Impact of Complex Environments on the Legibility of Robot Motion

May 31, 2024

Abstract: The environments in which the collaboration of a robot would be the most helpful to a person are frequently uncontrolled and cluttered with many objects present. Legible robot arm motion is crucial in tasks like these in order to avoid possible collisions, improve the workflow and help ensure the safety of the person. Prior work in this area, however, focuses on solutions that are tested only in uncluttered environments and there are not many results taken from cluttered environments. In this research we present a measure for clutteredness based on an entropic measure of the environment, and a novel motion planner based on potential fields. Both our measures and the planner were tested in a cluttered environment meant to represent a more typical tool sorting task for which the person would collaborate with a robot. The in-person validation study with Baxter robots shows a significant improvement in legibility of our proposed legible motion planner compared to the current state-of-the-art legible motion planner in cluttered environments. Further, the results show a significant difference in the performance of the planners in cluttered and uncluttered environments, and the need to further explore legible motion in cluttered environments. We argue that the inconsistency of our results in cluttered environments with those obtained from uncluttered environments points out several important issues with the current research performed in the area of legible motion planners.

WIP: A Unit Testing Framework for Self-Guided Personalized Online Robotics Learning

May 18, 2024

Abstract: Our ongoing development and deployment of an online robotics education platform highlighted a gap in providing an interactive, feedback-rich learning environment essential for mastering programming concepts in robotics, which students were not getting with the traditional code-simulate-turn-in workflow. Since teaching resources are limited, students would benefit from feedback in real-time to find and fix their mistakes in the programming assignments. To address these concerns, this paper will focus on creating a system for unit testing while integrating it into the course workflow. We facilitate this real-time feedback by including unit testing in the design of programming assignments so students can understand and fix their errors on their own, without instructors/TAs serving as a bottleneck. In line with the framework's personalized student-centered approach, this method makes it easier for students to revise and debug their programming work, encouraging hands-on learning. The course workflow updated to include unit tests will strengthen the learning environment and make it more interactive so that students can learn how to program robots in a self-guided fashion.

Cognitive Approach to Hierarchical Task Selection for Human-Robot Interaction in Dynamic Environments

Sep 22, 2023

Abstract: In an efficient and flexible human-robot collaborative work environment, a robot team member must be able to recognize both explicit requests and implied actions from human users. Identifying "what to do" in such cases requires an agent to have the ability to construct associations between objects, their actions, and the effect of actions on the environment. In this regard, semantic memory is being introduced to understand the explicit cues and their relationships with available objects and required skills to make "tea" and "sandwich". We have extended our previous hierarchical robot control architecture to add the capability to execute the most appropriate task based on both feedback from the user and the environmental context. To validate this system, two types of skills were implemented in the hierarchical task tree: 1) Tea making skills and 2) Sandwich making skills. During the conversation between the robot and the human, the robot was able to determine the hidden context using ontology and began to act accordingly. For instance, if the person says "I am thirsty" or "It is cold outside" the robot will start to perform the tea-making skill. In contrast, if the person says, "I am hungry" or "I need something to eat", the robot will make the sandwich. A humanoid robot Baxter was used for this experiment. We tested three scenarios with objects at different positions on the table for each skill. We observed that in all cases, the robot used only objects that were relevant to the skill.

A Schedule of Duties in the Cloud Space Using a Modified Salp Swarm Algorithm

Sep 18, 2023

Abstract: Cloud computing is a concept introduced in the information technology era, with the main components being the grid, distributed, and valuable computing. The cloud is being developed continuously and, naturally, comes up with many challenges, one of which is scheduling. A schedule or timeline is a mechanism used to optimize the time for performing a duty or set of duties. A scheduling process is accountable for choosing the best resources for performing a duty. The main goal of a scheduling algorithm is to improve the efficiency and quality of the service while at the same time ensuring the acceptability and effectiveness of the targets. The task scheduling problem is one of the most important NP-hard issues in the cloud domain and, so far, many techniques have been proposed as solutions, including genetic algorithms (GAs), particle swarm optimization (PSO), and ant colony optimization (ACO). To address this problem, in this paper, one of the collective intelligence algorithms, called the Salp Swarm Algorithm (SSA), has been expanded, improved, and applied. The performance of the proposed algorithm has been compared with that of GAs, PSO, continuous ACO, and the basic SSA. The results show that our algorithm has generally higher performance than the other algorithms. For example, compared to the basic SSA, the proposed method has an average reduction of approximately 21% in makespan.
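
For readers unfamiliar with the metric reported above, makespan is the time at which the most heavily loaded machine finishes its assigned tasks. The sketch below only illustrates how makespan and a percentage reduction could be computed for two task-to-VM assignments. It does not implement the modified SSA, and the function names, task lengths, and VM speeds are hypothetical values chosen for illustration, not data from the paper.

```python
from collections import defaultdict


def makespan(assignment, task_lengths, vm_speeds):
    """Finish time of the busiest VM; assignment[i] is the VM index of task i."""
    load = defaultdict(float)
    for task, vm in enumerate(assignment):
        # Execution time of a task = its length divided by the VM's speed.
        load[vm] += task_lengths[task] / vm_speeds[vm]
    return max(load.values(), default=0.0)


def percent_reduction(baseline, improved):
    """Relative makespan reduction of `improved` over `baseline`, in percent."""
    return 100.0 * (baseline - improved) / baseline


# Hypothetical workload: four tasks (lengths) and two VMs (speeds).
task_lengths = [400.0, 200.0, 600.0, 300.0]
vm_speeds = [100.0, 200.0]

baseline = makespan([0, 0, 1, 1], task_lengths, vm_speeds)  # two tasks per VM
improved = makespan([0, 1, 1, 1], task_lengths, vm_speeds)  # shift work to the faster VM

print(f"baseline makespan: {baseline}")
print(f"improved makespan: {improved}")
print(f"reduction: {percent_reduction(baseline, improved):.1f}%")
```

A scheduler such as SSA would search over the `assignment` vector to minimize this value; the comparison here uses two hand-picked assignments only to show the arithmetic.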

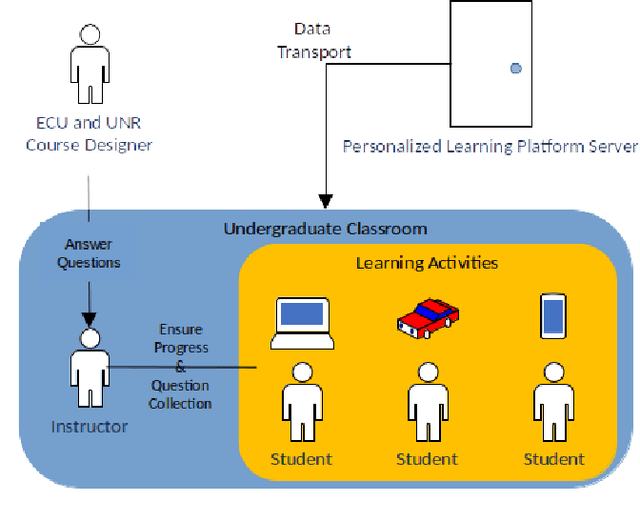

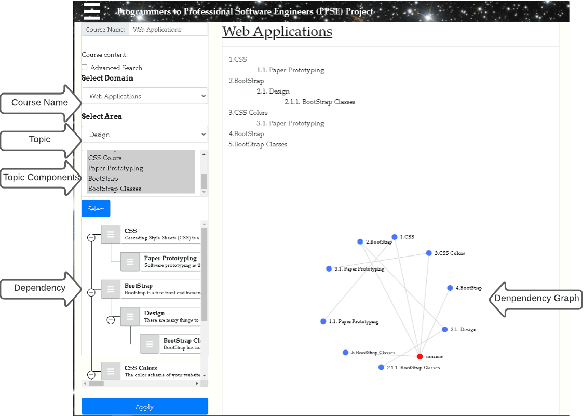

WIP: Development of a Student-Centered Personalized Learning Framework to Advance Undergraduate Robotics Education

Sep 10, 2023

Abstract: This paper presents a work-in-progress on a learning system that will provide robotics students with a personalized learning environment. This addresses both the scarcity of skilled robotics instructors, particularly in community colleges, and the high cost of training equipment. The study of robotics at the college level represents a wide range of interests, experiences, and aims. This project works to provide students the flexibility to adapt their learning to their own goals and prior experience. We are developing a system to enable robotics instruction through a web-based interface that is compatible with less expensive hardware. Therefore, the free distribution of teaching materials will empower educators. This project has the potential to increase the number of robotics courses offered at both two- and four-year schools and universities. The course materials are being designed with small units and a hierarchical dependency tree in mind; students will be able to customize their course of study based on the robotics skills they have already mastered. We present an evaluation of a five-module mini-course in robotics. Students indicated that they had a positive experience with the online content. They also scored the experience highly on relatedness, mastery, and autonomy perspectives, demonstrating strong motivation potential for this approach.

* 5 pages, 2 figures, conference

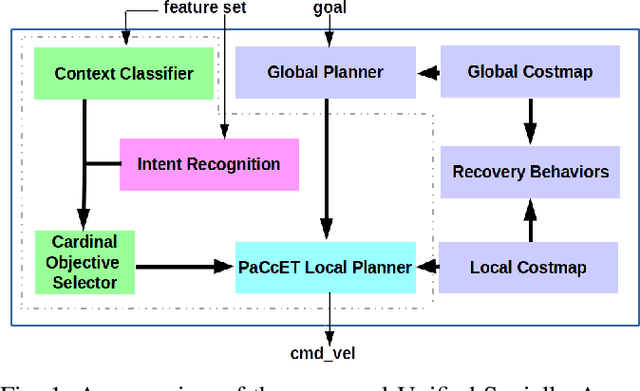

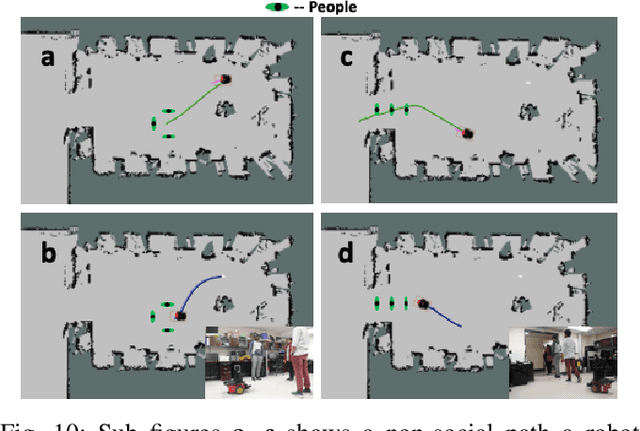

A Deep Learning Approach To Multi-Context Socially-Aware Navigation

Apr 20, 2021

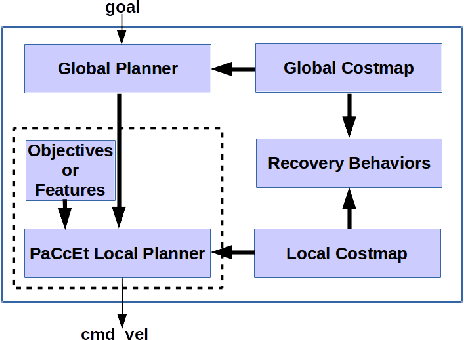

Abstract: We present a context classification pipeline to allow a robot to change its navigation strategy based on the observed social scenario. Socially-Aware Navigation considers social behavior in order to improve navigation around people. Most of the existing research uses different techniques to incorporate social norms into robot path planning for a single context. Methods that work for hallway behavior might not work for approaching people, and so on. We developed a high-level decision-making subsystem, a model-based context classifier, and a multi-objective optimization-based local planner to achieve socially-aware trajectories for autonomously sensed contexts. Using a context classification system, the robot can select social objectives that are later used by a Pareto Concavity Elimination Transformation (PaCcET)-based local planner to generate safe, comfortable, and socially-appropriate trajectories for its environment. This was tested and validated in multiple environments on a Pioneer mobile robot platform; results show that the robot was able to select and account for social objectives related to navigation autonomously.

Using Machine Learning Approach for Computational Substructure in Real-Time Hybrid Simulation

Apr 04, 2020

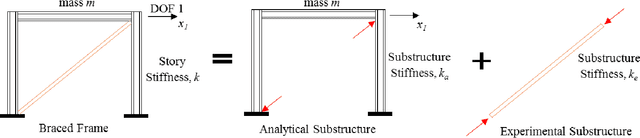

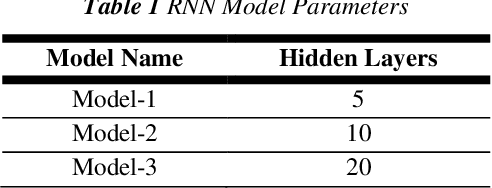

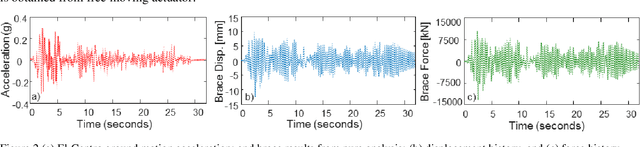

Abstract: Hybrid simulation (HS) is a widely used structural testing method that combines a computational substructure with a numerical model for well-understood components and an experimental substructure for other parts of the structure that are physically tested. One challenge for fast HS or real-time HS (RTHS) is associated with the analytical substructures of relatively complex structures, which could have a large number of degrees of freedom (DOFs), for instance. Computations with this many DOFs could be hard to perform in real time, even with current hardware capacities. In this study, a metamodeling technique is proposed to represent the structural dynamic behavior of the analytical substructure. A preliminary study is conducted where a one-bay one-story concentrically braced frame (CBF) is tested under earthquake loading by using a compact HS setup at the University of Nevada, Reno. The experimental setup allows for using a small-scale brace as the experimental substructure combined with a steel frame at the prototype full-scale for the analytical substructure. Two different machine learning algorithms are evaluated to provide a valid and useful metamodeling solution for the analytical substructure. The metamodels are trained with the available data obtained from the pure analytical solution of the prototype steel frame. The two algorithms used for developing the metamodels are: (1) a linear regression (LR) model, and (2) a basic recurrent neural network (RNN). The metamodels are first validated against the pure analytical response of the structure. Next, RTHS experiments are conducted by using the metamodels. RTHS test results using both LR and RNN models are evaluated, and the advantages and disadvantages of these models are discussed.

Socially-Aware Navigation: A Non-linear Multi-Objective Optimization Approach

Nov 11, 2019

Abstract: Mobile robots are increasingly populating homes, hospitals, shopping malls, factory floors, and other human environments. Human society has social norms that people mutually accept; obeying these norms is an essential signal that someone is participating socially with respect to the rest of the population. For robots to be socially compatible with humans, it is crucial for robots to obey these social norms. In prior work, we demonstrated a Socially-Aware Navigation (SAN) planner, based on Pareto Concavity Elimination Transformation (PaCcET), in a hallway scenario, optimizing two objectives so that the robot does not invade the personal space of people. In this paper, we extend our PaCcET-based SAN planner to multiple scenarios with more than two objectives. We modified the Robot Operating System's (ROS) navigation stack to include PaCcET in the local planning task. We show that our approach can accommodate multiple Human-Robot Interaction (HRI) scenarios. Using the proposed approach, we were able to achieve successful HRI in multiple scenarios like hallway interactions, an art gallery, waiting in a queue, and interacting with a group. We implemented our method on a simulated PR2 robot in a 2D simulator (Stage) and a Pioneer 3-DX mobile robot in the real world to validate all the scenarios. A comprehensive set of experiments shows that our approach can handle multiple interaction scenarios on both holonomic and non-holonomic robots; hence, it can be a viable option for a Unified Socially-Aware Navigation (USAN).
