Monica Nicolescu

Design Activity for Robot Faces: Evaluating Child Responses To Expressive Faces

Apr 10, 2025

Abstract:Facial expressiveness plays a crucial role in a robot's ability to engage and interact with children. Prior research has shown that expressive robots can enhance child engagement during human-robot interactions. However, many robots used in therapy settings feature non-personalized, static faces designed with traditional facial feature considerations, which can limit the depth of interactions and emotional connections. Digital faces offer opportunities for personalization, yet the current landscape of robot face design lacks a dynamic, user-centered approach. Specifically, there is a significant research gap in designing robot faces based on child preferences. Instead, most robots in child-focused therapy spaces are developed from an adult-centric perspective. We present a novel study investigating the influence of child-drawn digital faces in child-robot interactions. This approach focuses on a design activity with children instructed to draw their own custom robot faces. We compare the perceptions of social intelligence (PSI) of two implementations: a generic digital face and a robot face, personalized using the user's drawn robot faces. The results of this study show the perceived social intelligence of a child-drawn robot was significantly higher compared to a generic face.

Through the Clutter: Exploring the Impact of Complex Environments on the Legibility of Robot Motion

May 31, 2024

Abstract:The environments in which the collaboration of a robot would be the most helpful to a person are frequently uncontrolled and cluttered with many objects present. Legible robot arm motion is crucial in tasks like these in order to avoid possible collisions, improve the workflow and help ensure the safety of the person. Prior work in this area, however, focuses on solutions that are tested only in uncluttered environments and there are not many results taken from cluttered environments. In this research we present a measure for clutteredness based on an entropic measure of the environment, and a novel motion planner based on potential fields. Both our measures and the planner were tested in a cluttered environment meant to represent a more typical tool sorting task for which the person would collaborate with a robot. The in-person validation study with Baxter robots shows a significant improvement in legibility of our proposed legible motion planner compared to the current state-of-the-art legible motion planner in cluttered environments. Further, the results show a significant difference in the performance of the planners in cluttered and uncluttered environments, and the need to further explore legible motion in cluttered environments. We argue that the inconsistency of our results in cluttered environments with those obtained from uncluttered environments points out several important issues with the current research performed in the area of legible motion planners.
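The abstract does not define its entropic clutter measure, so the following is only an illustrative sketch of one way such a measure could look: the Shannon entropy of how objects are distributed over workspace grid cells. The function name `clutter_entropy`, the grid-cell representation, and the example layouts are assumptions made for illustration, not the paper's definition.

```python
import math
from collections import Counter


def clutter_entropy(object_cells):
    """Shannon entropy (in bits) of object positions over grid cells.

    object_cells: list of hashable cell ids, one per object (hypothetical
    representation; the paper's actual measure may differ).
    """
    counts = Counter(object_cells)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    # Higher entropy means objects are spread more evenly over more cells.
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())


# Four tools stacked in one cell versus four tools in four different cells.
tidy = [(0, 0)] * 4
scattered = [(0, 0), (0, 1), (1, 0), (1, 1)]

print(clutter_entropy(tidy))       # 0.0
print(clutter_entropy(scattered))  # 2.0
```

Under this assumed definition, a workspace with all objects in one place scores zero, and the score grows as objects spread across more cells.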

Performance Analysis of Keypoint Detectors and Binary Descriptors Under Varying Degrees of Photometric and Geometric Transformations

Dec 08, 2020

Abstract:Detecting image correspondences by feature matching forms the basis of numerous computer vision applications. Several detectors and descriptors have been presented in the past, addressing the efficient generation of features from interest points (keypoints) in an image. In this paper, we investigate eight binary descriptors (AKAZE, BoostDesc, BRIEF, BRISK, FREAK, LATCH, LUCID, and ORB) and eight interest point detectors (AGAST, AKAZE, BRISK, FAST, HarrisLaplace, KAZE, ORB, and StarDetector). We have decoupled the detection and description phases to analyze the interest point detectors and then evaluate the performance of the pairwise combination of different detectors and descriptors. We conducted experiments on a standard dataset and analyzed the comparative performance of each method under different image transformations. We observed that: (1) the FAST, AGAST, and ORB detectors were faster and detected more keypoints, (2) the AKAZE and KAZE detectors performed better under photometric changes while ORB was more robust against geometric changes, (3) in general, descriptors performed better when paired with the KAZE and AKAZE detectors, (4) the BRIEF, LUCID, and ORB descriptors were relatively faster, and (5) none of the descriptors did particularly well under geometric transformations; only BRISK, FREAK, and AKAZE showed reasonable resiliency.
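Binary descriptors are bit strings, and they are typically compared with the Hamming distance (the number of differing bits). As a minimal sketch of the matching step that such an evaluation relies on, the following brute-force matcher pairs each query descriptor with its nearest train descriptor. The 8-bit toy descriptors, the function names, and the `max_distance` threshold are illustrative assumptions; real descriptors such as BRIEF or ORB are hundreds of bits long, and the paper's exact matching protocol is not stated in the abstract.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(query, train, max_distance=2):
    """Brute-force nearest-neighbour matching under Hamming distance.

    Returns (query_index, train_index, distance) for every query descriptor
    whose nearest train descriptor is within max_distance bits.
    """
    if not train:
        return []
    matches = []
    for qi, q in enumerate(query):
        ti, dist = min(
            ((ti, hamming(q, t)) for ti, t in enumerate(train)),
            key=lambda pair: pair[1],
        )
        if dist <= max_distance:
            matches.append((qi, ti, dist))
    return matches


# Toy 8-bit descriptors (hypothetical values, for illustration only).
query = [0b10110010, 0b01001101, 0b11110000]
train = [0b01001111, 0b10110011, 0b00001111]

print(match_descriptors(query, train))  # [(0, 1, 1), (1, 0, 1)]
```

The third query descriptor is left unmatched because its nearest neighbour differs in 3 bits, which exceeds the assumed threshold.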

Socially-Aware Navigation: A Non-linear Multi-Objective Optimization Approach

Nov 11, 2019

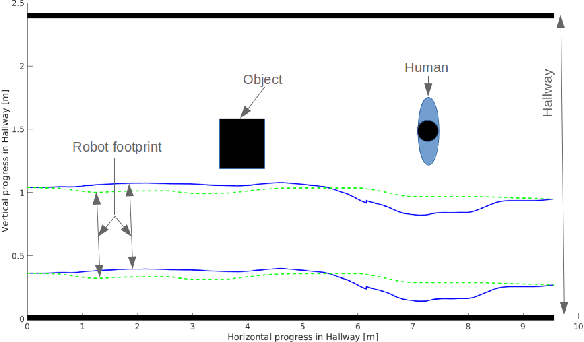

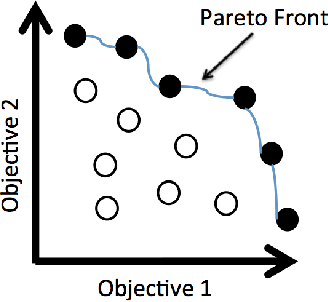

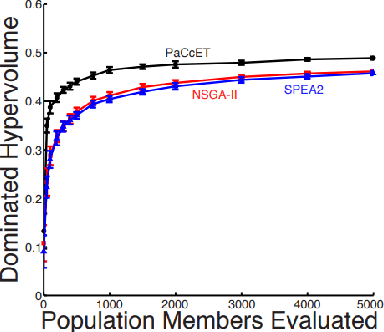

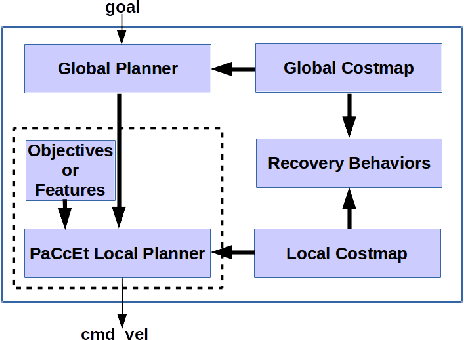

Abstract:Mobile robots are increasingly populating homes, hospitals, shopping malls, factory floors, and other human environments. Human society has social norms that people mutually accept; obeying these norms is an essential signal that someone is participating socially with respect to the rest of the population. For robots to be socially compatible with humans, it is crucial for robots to obey these social norms. In prior work, we demonstrated a Socially-Aware Navigation (SAN) planner, based on Pareto Concavity Elimination Transformation (PaCcET), in a hallway scenario, optimizing two objectives so that the robot does not invade the personal space of people. In this paper, we extend our PaCcET-based SAN planner to multiple scenarios with more than two objectives. We modified the Robot Operating System's (ROS) navigation stack to include PaCcET in the local planning task. We show that our approach can accommodate multiple Human-Robot Interaction (HRI) scenarios. Using the proposed approach, we were able to achieve successful HRI in multiple scenarios such as hallway interactions, an art gallery, waiting in a queue, and interacting with a group. We implemented our method on a simulated PR2 robot in a 2D simulator (Stage) and a Pioneer-3DX mobile robot in the real world to validate all the scenarios. A comprehensive set of experiments shows that our approach can handle multiple interaction scenarios on both holonomic and non-holonomic robots; hence, it can be a viable option for a Unified Socially-Aware Navigation (USAN).
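To illustrate the multi-objective idea behind the planner, the sketch below filters candidate local trajectories down to the Pareto-optimal (non-dominated) set. This is not PaCcET itself, which transforms the objective space and is not described in the abstract; it only shows the Pareto-dominance concept that multi-objective selection builds on. The trajectory names, the three cost objectives, and all cost values are hypothetical.

```python
def dominates(a, b):
    """True if cost vector a is no worse than b everywhere and better somewhere.

    All objectives are treated as costs to minimize.
    """
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(candidates):
    """Names of candidates whose cost vector no other candidate dominates."""
    return [
        name
        for name, cost in candidates.items()
        if not any(
            dominates(other_cost, cost)
            for other_name, other_cost in candidates.items()
            if other_name != name
        )
    ]


# Hypothetical costs: (distance to goal, personal-space intrusion, heading change).
trajectories = {
    "hug_wall": (2.0, 0.1, 0.6),
    "straight": (1.0, 0.9, 0.0),
    "slow_detour": (2.5, 0.3, 0.8),  # worse than hug_wall on every objective
    "veer": (1.5, 0.4, 0.3),
}

print(pareto_front(trajectories))  # ['hug_wall', 'straight', 'veer']
```

A planner still has to pick one trajectory from the non-dominated set; how that choice is made is exactly where a method such as PaCcET would come in.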
