Jonas le Fevre Sejersen

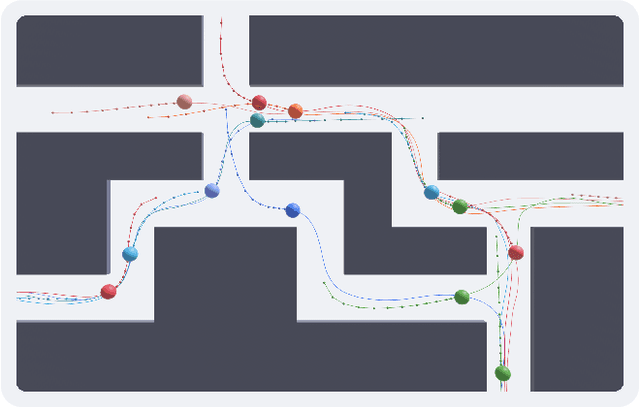

Multi-Agent Path Planning in Complex Environments using Gaussian Belief Propagation with Global Path Finding

Feb 27, 2025

Abstract: Multi-agent path planning is a critical challenge in robotics, requiring agents to navigate complex environments while avoiding collisions and optimizing travel efficiency. This work addresses the limitations of existing approaches by combining Gaussian belief propagation with path integration and introducing a novel tracking factor to ensure strict adherence to global paths. The proposed method is tested with two different global path-planning approaches: rapidly exploring random trees and a structured planner, which leverages predefined lane structures to improve coordination. A simulation environment was developed to validate the proposed method across diverse scenarios, each posing unique challenges in navigation and communication. Simulation results demonstrate that the tracking factor reduces path deviation by 28% in single-agent and 16% in multi-agent scenarios, highlighting its effectiveness in improving multi-agent coordination, especially when combined with structured global planning.

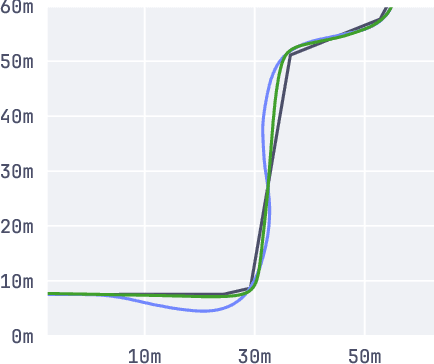

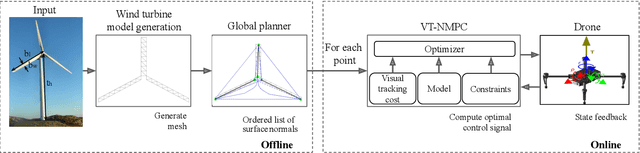

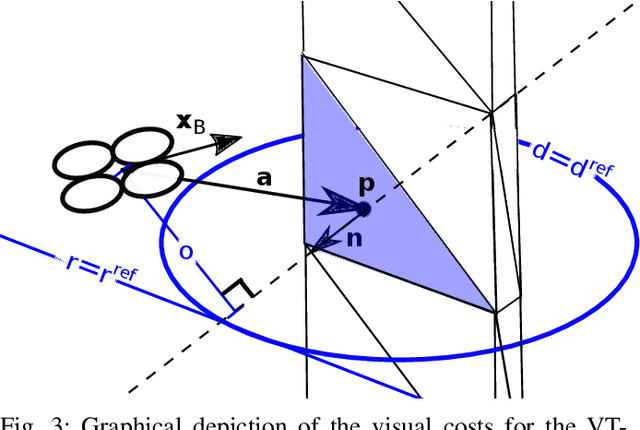

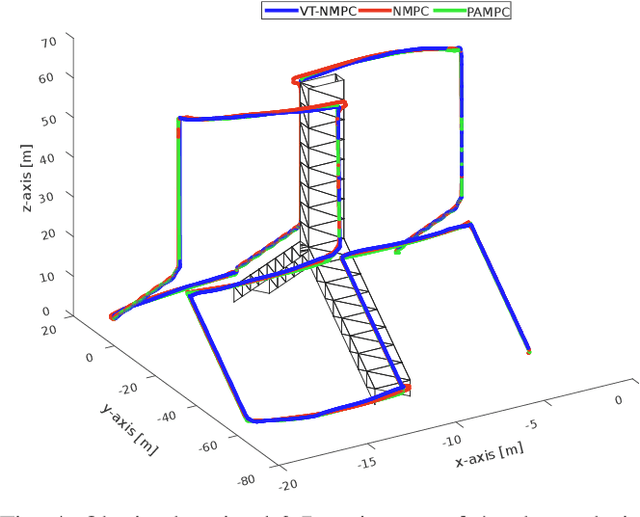

Visual Tracking Nonlinear Model Predictive Control Method for Autonomous Wind Turbine Inspection

Oct 21, 2023

Abstract: Automated visual inspection of on- and offshore wind turbines using aerial robots provides several benefits, namely, a safe working environment by circumventing the need for workers to be suspended high above the ground, reduced inspection time, preventive maintenance, and access to hard-to-reach areas. A novel nonlinear model predictive control (NMPC) framework alongside a global wind turbine path planner is proposed to achieve distance-optimal coverage for wind turbine inspection. Unlike traditional MPC formulations, visual tracking NMPC (VT-NMPC) is designed to track an inspection surface, instead of a position and heading trajectory, thereby circumventing the need to provide an accurate predefined trajectory for the drone. An additional capability of the proposed VT-NMPC method is that, by incorporating inspection requirements as visual tracking costs to minimize, it naturally achieves the inspection task while respecting the physical constraints of the drone. Multiple simulation runs and real-world tests demonstrate the efficiency and efficacy of the proposed automated inspection framework, which outperforms traditional MPC designs by providing full coverage of the target wind turbine blades and by remaining robust to changing wind conditions. The implementation code is open source.

UNav-Sim: A Visually Realistic Underwater Robotics Simulator and Synthetic Data-generation Framework

Oct 18, 2023

Abstract: Underwater robotic surveys can be costly due to the complex working environment and the need for various sensor modalities. While underwater simulators are essential, many existing simulators lack sufficient rendering quality, restricting their ability to transfer algorithms from simulation to real-world applications. To address this limitation, we introduce UNav-Sim, which, to the best of our knowledge, is the first simulator to incorporate the efficient, high-detail rendering of Unreal Engine 5 (UE5). UNav-Sim is open-source and includes an autonomous vision-based navigation stack. By supporting standard robotics tools like ROS, UNav-Sim enables researchers to develop and test algorithms for underwater environments efficiently.

Real-Time Volumetric-Semantic Exploration and Mapping: An Uncertainty-Aware Approach

Sep 03, 2021

Abstract: In this work, we propose a holistic framework for autonomous aerial inspection tasks, using semantically aware yet computationally efficient planning and mapping algorithms. The system leverages state-of-the-art receding horizon exploration techniques for next-best-view (NBV) planning with geometric and semantic segmentation information provided by state-of-the-art deep convolutional neural networks (DCNNs), with the goal of enriching environment representations. The contributions of this article are threefold. First, we propose an efficient sensor observation model and a reward function that encodes the expected information gains from the observations taken from specific viewpoints. Second, we extend the reward function to incorporate not only geometric but also semantic probabilistic information, provided by a DCNN for semantic segmentation that operates in real time. The incorporation of semantic information in the environment representation allows biasing exploration towards specific objects, while ignoring task-irrelevant ones during planning. Finally, we employ our approaches in an autonomous drone shipyard inspection task. A set of simulations in realistic scenarios demonstrates the efficacy and efficiency of the proposed framework when compared with the state of the art.
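
The abstract does not spell out the reward function, so the following is only a minimal sketch of one common way to score a candidate viewpoint: sum the binary entropy of the occupancy probabilities of the voxels the view would observe, optionally weighted per voxel by semantic relevance. The function names, the entropy-based gain, and the weighting scheme are illustrative assumptions, not the paper's formulation.

```python
import math


def binary_entropy(p: float) -> float:
    """Shannon entropy (in bits) of a Bernoulli variable with parameter p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a fully known voxel carries no remaining uncertainty
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def view_reward(occupancy_probs, semantic_weights=None):
    """Hypothetical viewpoint reward: weighted sum of voxel entropies.

    occupancy_probs  -- occupancy probability of each voxel visible from the view
    semantic_weights -- assumed per-voxel relevance in [0, 1]; defaults to 1.0,
                        which reduces the reward to a purely geometric one
    """
    if semantic_weights is None:
        semantic_weights = [1.0] * len(occupancy_probs)
    return sum(w * binary_entropy(p)
               for p, w in zip(occupancy_probs, semantic_weights))


if __name__ == "__main__":
    unknown_view = [0.5, 0.5, 0.5]      # three completely unknown voxels
    mapped_view = [0.0, 1.0, 1.0]       # three already-resolved voxels
    print(view_reward(unknown_view))
    print(view_reward(mapped_view))
    # Down-weighting task-irrelevant voxels biases exploration elsewhere.
    print(view_reward(unknown_view, [1.0, 0.0, 0.0]))
```

Under these assumptions, a view over unexplored space scores higher than a view over mapped space, and setting a voxel's weight to zero removes it from consideration, which mirrors the idea of ignoring task-irrelevant objects during planning.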

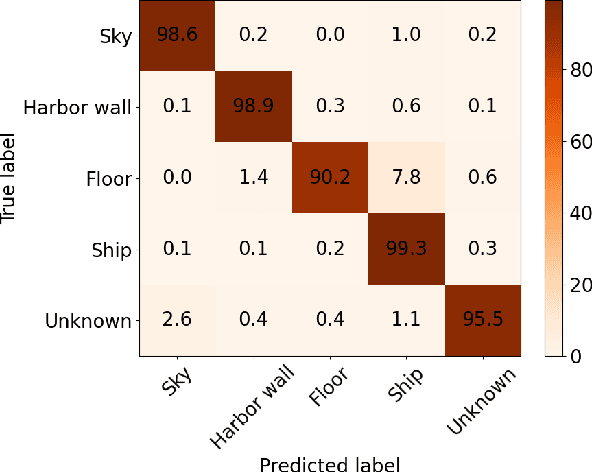

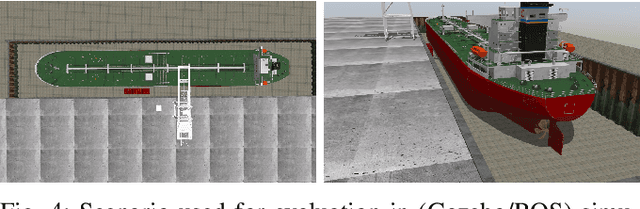

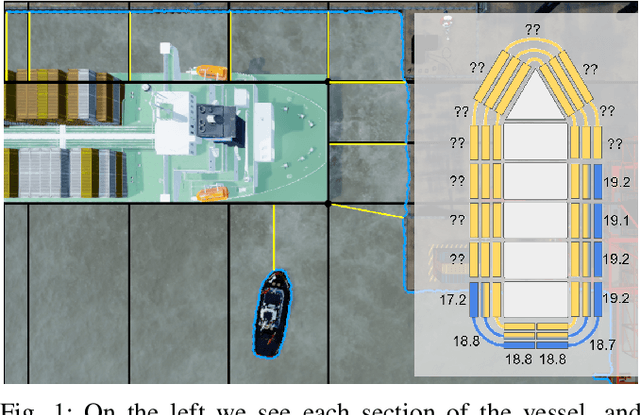

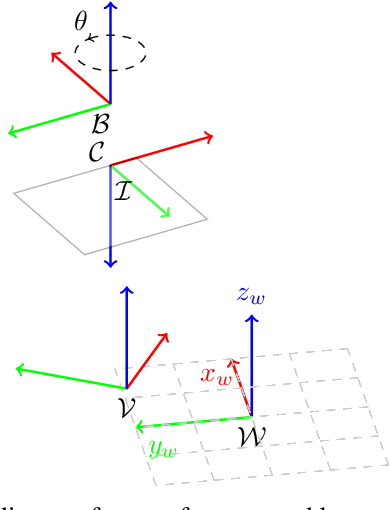

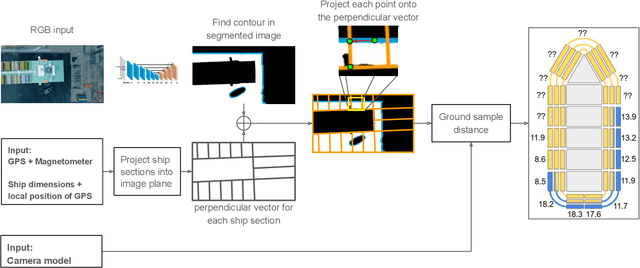

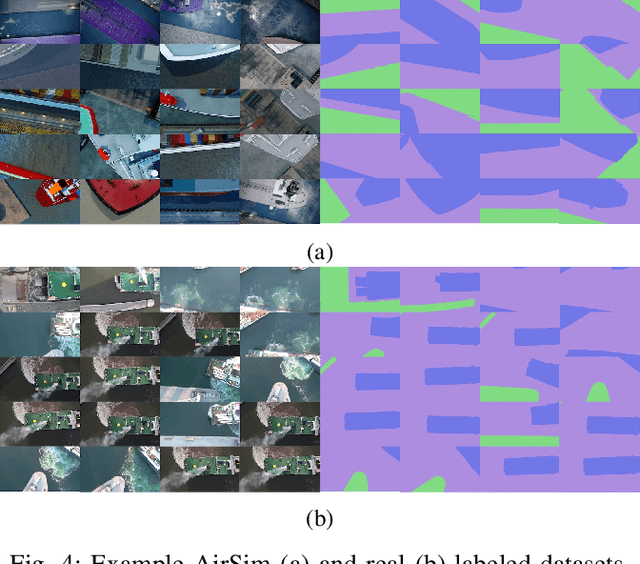

Safe Vessel Navigation Visually Aided by Autonomous Unmanned Aerial Vehicles in Congested Harbors and Waterways

Aug 09, 2021

Abstract: In the maritime sector, safe vessel navigation is of great importance, particularly in congested harbors and waterways. The focus of this work is to estimate the distance between an object of interest and potential obstacles using a companion UAV. The proposed approach fuses GPS data with long-range aerial images. First, we employ a semantic segmentation DNN to discriminate the vessel of interest, water, and potential solid objects using raw image data. The network is trained with both real images and images generated and automatically labeled in a realistic AirSim simulation environment. Then, the distances between the extracted vessel and non-water obstacle blobs are computed using a novel GSD estimation algorithm. To the best of our knowledge, this work is the first attempt to detect and estimate distances to unknown objects from long-range visual data captured with conventional RGB cameras and auxiliary absolute positioning systems (e.g., GPS). The simulation results illustrate the accuracy and efficacy of the proposed method for UAV-assisted, visually aided vessel navigation.
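
To make the distance step concrete, here is a minimal sketch that assumes GSD refers to ground sampling distance and uses the textbook formula for a downward-pointing (nadir) camera over a flat surface. The paper's own GSD estimation algorithm targets long-range imagery and is not described in the abstract, so the formula, function names, and camera parameters below are illustrative assumptions only.

```python
import math


def ground_sampling_distance(altitude_m: float,
                             sensor_width_mm: float,
                             focal_length_mm: float,
                             image_width_px: int) -> float:
    """Metres of ground covered by one pixel (nadir view, flat surface assumed)."""
    return (altitude_m * sensor_width_mm) / (focal_length_mm * image_width_px)


def pixel_distance_to_metres(p1, p2, gsd_m_per_px: float) -> float:
    """Convert the pixel distance between two image points to metres."""
    return math.dist(p1, p2) * gsd_m_per_px


if __name__ == "__main__":
    # Hypothetical camera: 10 mm sensor width, 10 mm focal length,
    # 1000 px image width, flying at 100 m altitude.
    gsd = ground_sampling_distance(100.0, 10.0, 10.0, 1000)
    # Hypothetical pixel coordinates of a vessel blob and an obstacle blob.
    vessel_px, obstacle_px = (0, 0), (30, 40)
    print(f"GSD: {gsd:.3f} m/px")
    print(f"Distance: {pixel_distance_to_metres(vessel_px, obstacle_px, gsd):.2f} m")
```

In this simplified setting, the UAV altitude (for example, from GPS) fixes the metres-per-pixel scale, and blob-to-blob distances in the segmentation mask are scaled by it. An oblique, long-range view would need a per-pixel scale rather than a single constant.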
