Rui Pimentel de Figueiredo

A Complete System for Automated 3D Semantic-Geometric Mapping of Corrosion in Industrial Environments

Apr 21, 2024

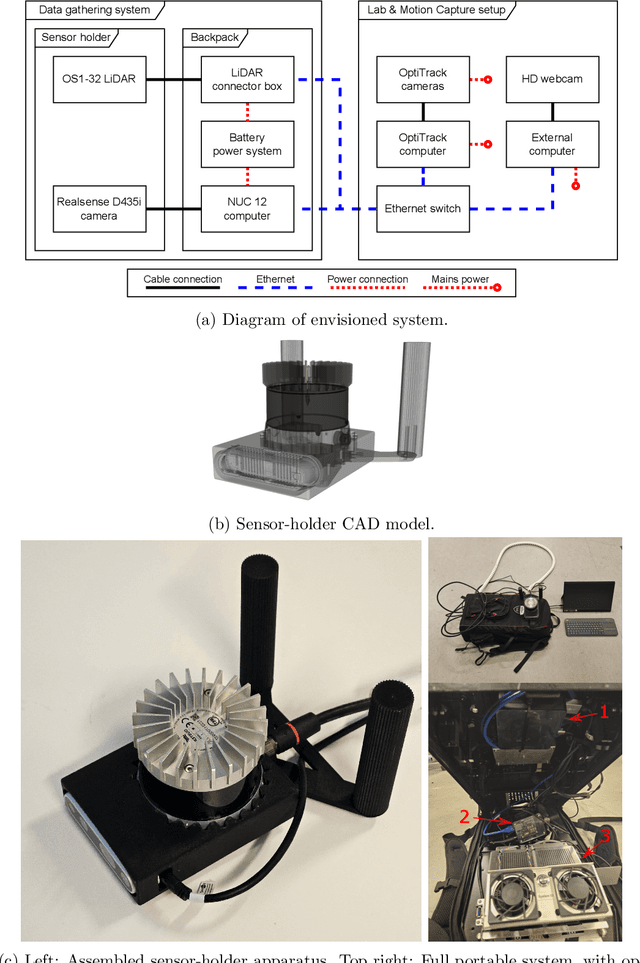

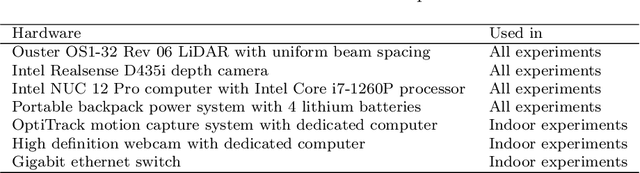

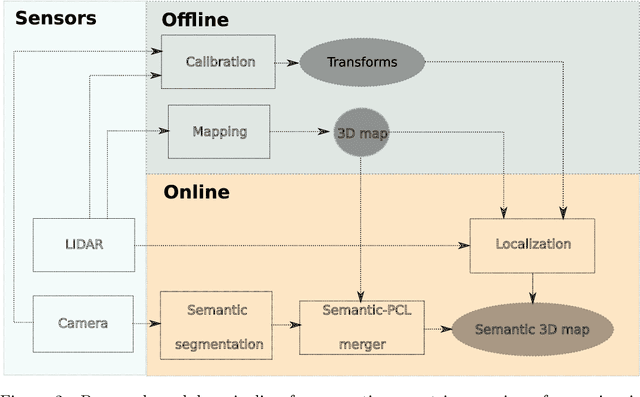

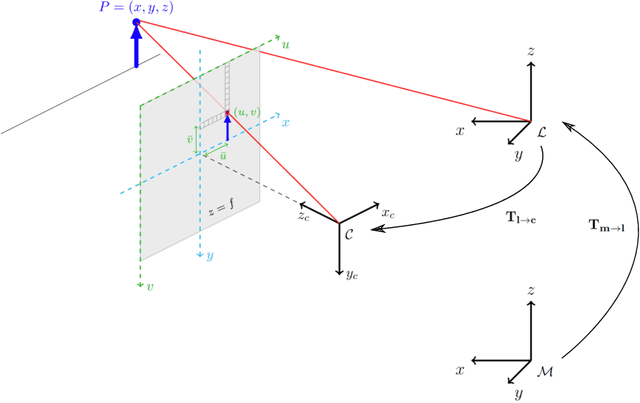

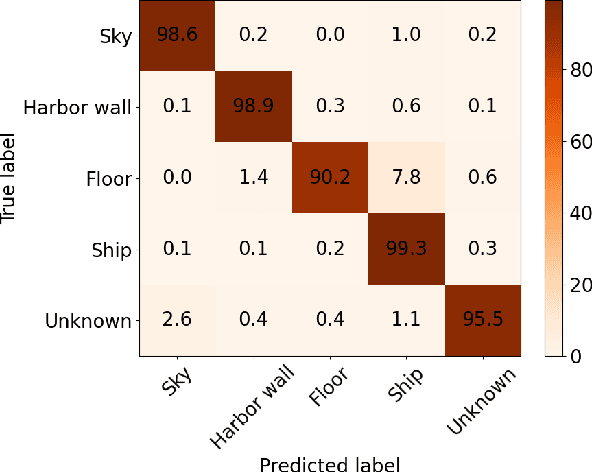

Abstract: Corrosion, a naturally occurring process that causes metallic materials to deteriorate, must be diligently detected for quality control and for the preservation of metal-based objects, especially in industrial contexts. Traditional techniques for corrosion identification, including ultrasonic testing, radiographic testing, and magnetic flux leakage, require expensive and bulky equipment to be deployed on-site for effective data acquisition. An unexplored alternative is to identify corrosion using lightweight, conventional camera systems and state-of-the-art computer vision methods. In this work, we propose a complete system for semi-automated corrosion identification and mapping in industrial environments. We combine recent advances in LiDAR-based localization and mapping with vision-based deep learning techniques for semantic segmentation in order to build semantic-geometric maps of industrial environments. Unlike previous corrosion identification systems in the literature, our multi-modal system is low-cost, portable, and semi-autonomous, and it allows large datasets to be collected by untrained personnel. A set of experiments in an indoor laboratory environment quantitatively demonstrates the high accuracy of the LiDAR-based 3D mapping and localization system, with average absolute and relative pose errors below 0.05 m and 0.02 m, respectively. In addition, our data-driven semantic segmentation model achieves around 70% precision when trained with our manually annotated pixel-wise dataset.
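The absolute and relative pose errors and the pixel-wise precision quoted above are standard evaluation metrics. As a rough illustration only, the Python sketch below computes translation-only versions of both pose errors, plus precision for a binary corrosion mask. It assumes the estimated and ground-truth positions are already time-associated and expressed in the same frame; full trajectory evaluations typically use SE(3) poses and an alignment step, both omitted here. The function names are hypothetical and not taken from the paper.

```python
import numpy as np


def absolute_pose_error(gt_xyz: np.ndarray, est_xyz: np.ndarray) -> float:
    """Mean Euclidean distance between matched ground-truth and estimated positions.

    Assumes (N, 3) arrays that are already time-associated and in the same frame;
    no trajectory alignment is performed.
    """
    return float(np.mean(np.linalg.norm(gt_xyz - est_xyz, axis=1)))


def relative_pose_error(gt_xyz: np.ndarray, est_xyz: np.ndarray, delta: int = 1) -> float:
    """Mean error between ground-truth and estimated displacements over `delta` steps.

    Translation only; a constant offset between the trajectories yields zero error.
    """
    gt_step = gt_xyz[delta:] - gt_xyz[:-delta]
    est_step = est_xyz[delta:] - est_xyz[:-delta]
    return float(np.mean(np.linalg.norm(gt_step - est_step, axis=1)))


def pixel_precision(pred_mask: np.ndarray, gt_mask: np.ndarray) -> float:
    """Precision = TP / (TP + FP) over pixels predicted as corrosion (0.0 if none)."""
    pred = pred_mask.astype(bool)
    gt = gt_mask.astype(bool)
    predicted = np.count_nonzero(pred)
    if predicted == 0:
        return 0.0
    return np.count_nonzero(pred & gt) / predicted


if __name__ == "__main__":
    gt = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
    est = gt + np.array([0.03, 0.0, 0.0])  # constant 3 cm offset
    print(absolute_pose_error(gt, est))   # about 0.03
    print(relative_pose_error(gt, est))   # about 0.0
```

The constant-offset example shows why the two metrics differ: a rigid drift inflates the absolute error but leaves the frame-to-frame relative error near zero.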

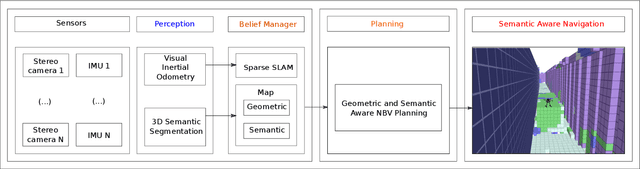

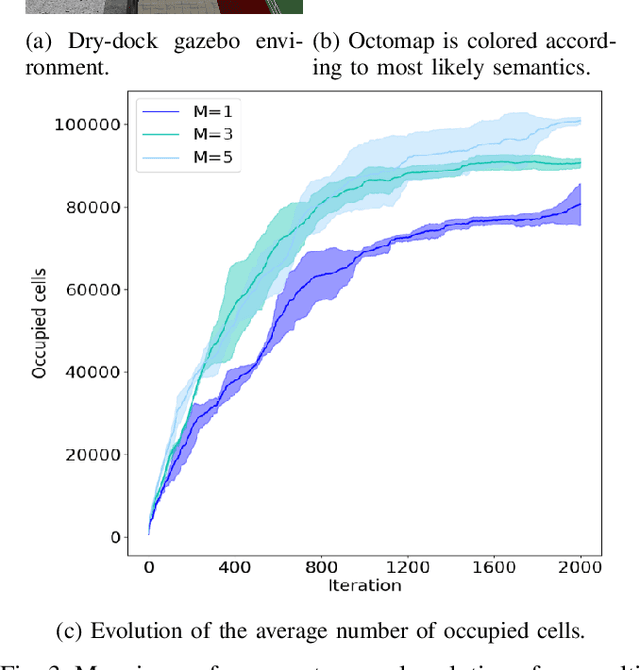

Real-Time Volumetric-Semantic Exploration and Mapping: An Uncertainty-Aware Approach

Sep 03, 2021

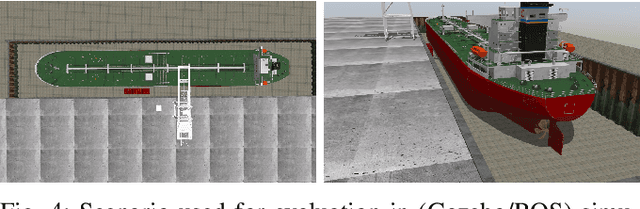

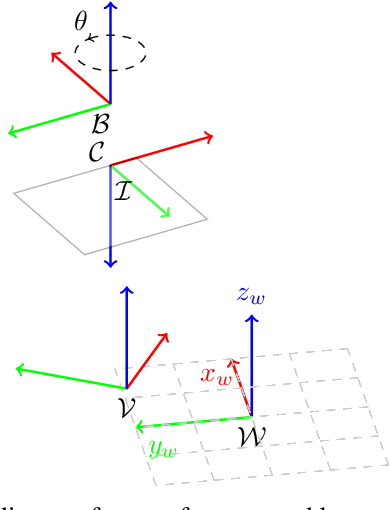

Abstract: In this work, we propose a holistic framework for autonomous aerial inspection tasks that uses semantically aware yet computationally efficient planning and mapping algorithms. The system leverages state-of-the-art receding horizon exploration techniques for next-best-view (NBV) planning with geometric and semantic segmentation information provided by state-of-the-art deep convolutional neural networks (DCNNs), with the goal of enriching environment representations. The contributions of this article are threefold. First, we propose an efficient sensor observation model and a reward function that encodes the expected information gain of observations taken from specific viewpoints. Second, we extend the reward function to incorporate not only geometric but also semantic probabilistic information, provided by a DCNN for semantic segmentation that operates in real time. Incorporating semantic information into the environment representation allows exploration to be biased towards specific objects, while task-irrelevant ones are ignored during planning. Finally, we apply our approaches to an autonomous drone-based shipyard inspection task. A set of simulations in realistic scenarios demonstrates the efficacy and efficiency of the proposed framework compared with the state of the art.
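The reward function described above scores candidate viewpoints by the information they are expected to yield. The Python sketch below shows one common way to do this, assuming a voxel map with per-voxel occupancy probabilities and per-voxel probabilities of belonging to a task-relevant semantic class. It approximates the gain of a view by the summed binary entropy of the voxels it would observe, and multiplies each voxel's entropy by a hypothetical semantic weighting term. The visibility lists stand in for the ray casting a real sensor observation model would perform; none of these names or weights come from the paper.

```python
import numpy as np


def binary_entropy(p):
    """Entropy (bits) of Bernoulli occupancy probabilities; clipped to avoid log(0)."""
    p = np.clip(np.asarray(p, dtype=float), 1e-9, 1.0 - 1e-9)
    return -(p * np.log2(p) + (1.0 - p) * np.log2(1.0 - p))


def view_gain(visible, occ_prob, target_prob, semantic_weight=1.0):
    """Summed entropy of visible voxels, boosted for voxels likely to be task-relevant.

    `visible` is a list of voxel indices assumed to be observable from the view.
    """
    h = binary_entropy(occ_prob[visible])
    return float(np.sum(h * (1.0 + semantic_weight * target_prob[visible])))


def best_view(candidates, occ_prob, target_prob, semantic_weight=1.0):
    """Return the candidate name with the highest gain, plus all gains."""
    gains = {name: view_gain(vis, occ_prob, target_prob, semantic_weight)
             for name, vis in candidates.items()}
    return max(gains, key=gains.get), gains


if __name__ == "__main__":
    occ = np.array([0.5, 0.5, 0.9, 0.1])      # occupancy probabilities
    target = np.array([0.0, 0.9, 0.0, 0.0])   # P(voxel belongs to target class)
    views = {"A": [0, 2], "B": [1, 3]}        # hypothetical visibility per view
    name, gains = best_view(views, occ, target)
    print(name, gains)
```

With `semantic_weight=0` both views observe the same amount of geometric uncertainty and score (almost) equally; with the semantic term enabled, view `B` wins because it covers a voxel likely to belong to the target class. In a receding horizon planner, a score like this would be evaluated over sampled candidates, only the first step towards the best one executed, and the process repeated.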

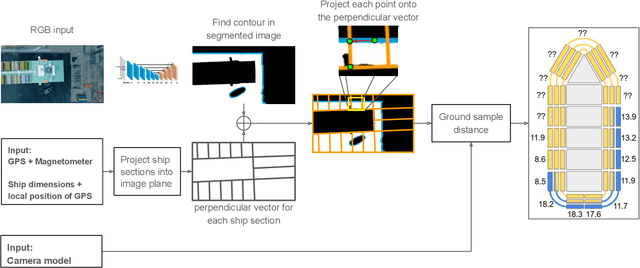

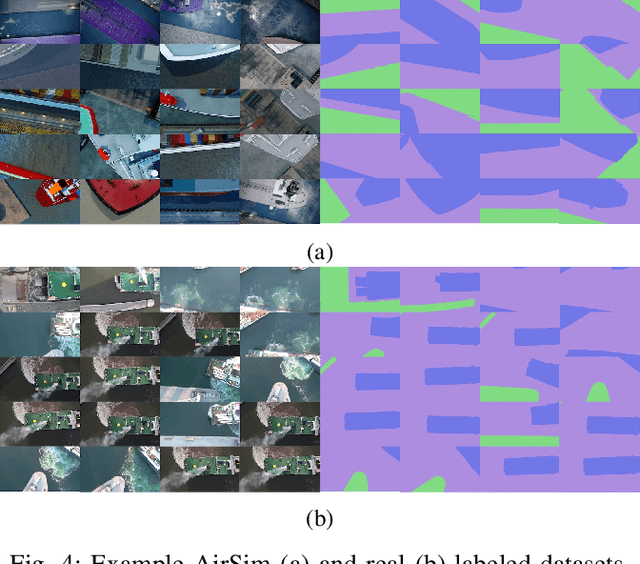

Safe Vessel Navigation Visually Aided by Autonomous Unmanned Aerial Vehicles in Congested Harbors and Waterways

Aug 09, 2021

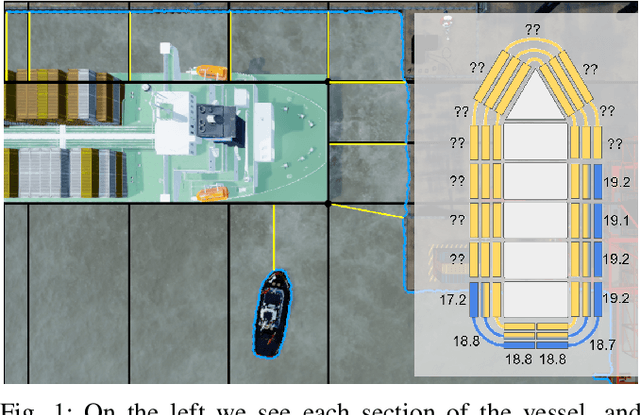

Abstract: In the maritime sector, safe vessel navigation is of great importance, particularly in congested harbors and waterways. The focus of this work is to estimate the distance between an object of interest and potential obstacles using a companion UAV. The proposed approach fuses GPS data with long-range aerial images. First, we employ a semantic segmentation DNN to discriminate between the vessel of interest, water, and potential solid objects in raw image data. The network is trained with both real images and images generated and automatically labeled in a realistic AirSim simulation environment. Then, the distances between the extracted vessel and the non-water obstacle blobs are computed using a novel ground sampling distance (GSD) estimation algorithm. To the best of our knowledge, this work is the first attempt to detect and estimate distances to unknown objects from long-range visual data captured with conventional RGB cameras and auxiliary absolute positioning systems (e.g., GPS). The simulation results illustrate the accuracy and efficacy of the proposed method for the visually aided navigation of vessels assisted by UAVs.
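The GSD links image pixels to metric distances on the ground, here the water surface. The abstract does not describe the paper's GSD estimation algorithm, so the Python sketch below instead uses the textbook formula for a nadir-pointing pinhole camera over flat terrain, with the altitude assumed to come from GPS. It ignores camera tilt, which would make the GSD vary across the image, so treat it as a simplification. The blob distance is the minimum pixel-to-pixel Euclidean distance between the two segmentation masks, scaled by the GSD. Function names and camera parameters are hypothetical.

```python
import numpy as np


def nadir_gsd(altitude_m, focal_length_mm, sensor_width_mm, image_width_px):
    """Ground sampling distance in metres per pixel for a nadir pinhole camera.

    Assumes a camera pointing straight down over flat ground (no tilt).
    """
    return (altitude_m * sensor_width_mm) / (focal_length_mm * image_width_px)


def min_blob_distance_px(mask_a, mask_b):
    """Minimum Euclidean distance in pixels between two binary masks.

    Brute force over all pixel pairs: fine for small blobs, O(|A|*|B|) memory.
    """
    a = np.argwhere(mask_a).astype(float)
    b = np.argwhere(mask_b).astype(float)
    if len(a) == 0 or len(b) == 0:
        raise ValueError("both masks must contain at least one pixel")
    diffs = a[:, None, :] - b[None, :, :]
    return float(np.linalg.norm(diffs, axis=2).min())


def blob_distance_m(mask_vessel, mask_obstacle, gsd_m_per_px):
    """Metric distance between blobs, assuming a single GSD for the whole image."""
    return min_blob_distance_px(mask_vessel, mask_obstacle) * gsd_m_per_px


if __name__ == "__main__":
    vessel = np.zeros((10, 10), dtype=bool)
    obstacle = np.zeros((10, 10), dtype=bool)
    vessel[0, 0] = True
    obstacle[0, 5] = True
    gsd = nadir_gsd(100.0, 10.0, 10.0, 1000)  # 0.1 m per pixel
    print(blob_distance_m(vessel, obstacle, gsd))  # 0.5
```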

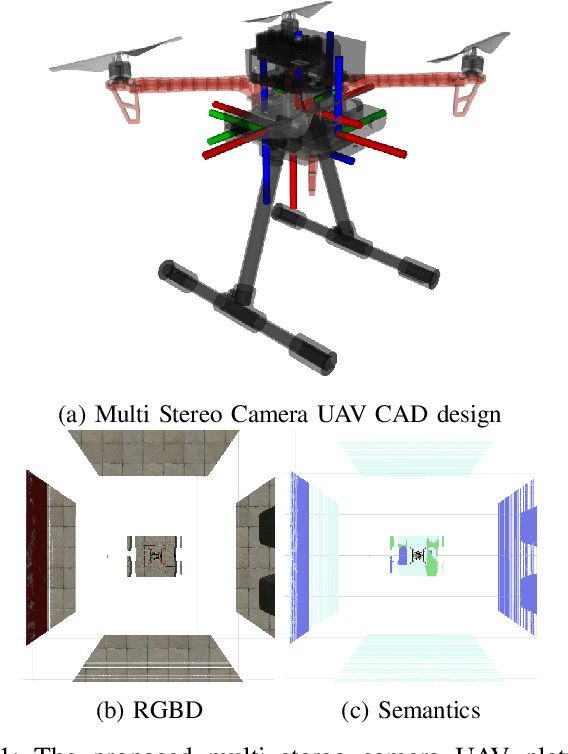

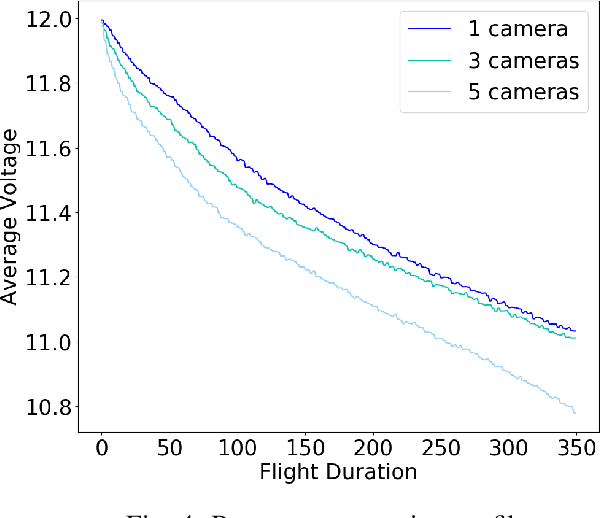

On the Advantages of Multiple Stereo Vision Camera Designs for Autonomous Drone Navigation

May 26, 2021

Abstract: In this work, we present the design and performance assessment of a multi-camera UAV coupled with state-of-the-art planning and mapping algorithms for autonomous navigation. The system leverages state-of-the-art receding horizon exploration techniques for next-best-view (NBV) planning with 3D and semantic information provided by a reconfigurable multi-stereo-camera system. We apply our approaches to an autonomous drone-based inspection task and evaluate them in an autonomous exploration and mapping scenario. We discuss the advantages and limitations of flying systems equipped with multiple stereo cameras, and the trade-off between the number of cameras and mapping performance.
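One geometric intuition behind the trade-off between the number of cameras and mapping performance is that an extra camera adds coverage only where its field of view does not overlap the others. The Python sketch below computes the fraction of the 360° horizontal azimuth covered by a rig of cameras with given yaw angles and a common horizontal field of view. It is a purely illustrative sketch under assumed parameters: it ignores stereo depth range, vertical field of view, and payload constraints, and does not reproduce the camera configurations evaluated in the paper.

```python
def azimuth_coverage(yaws_deg, hfov_deg):
    """Fraction of the 360-degree horizontal azimuth seen by at least one camera.

    Each camera covers [yaw - hfov/2, yaw + hfov/2]; intervals that wrap past
    360 degrees are split, then all intervals are merged to remove overlap.
    """
    if not yaws_deg:
        return 0.0
    if hfov_deg >= 360.0:
        return 1.0
    intervals = []
    for yaw in yaws_deg:
        start = (yaw - hfov_deg / 2.0) % 360.0
        end = start + hfov_deg
        if end <= 360.0:
            intervals.append((start, end))
        else:  # wraps around 0 degrees
            intervals.append((start, 360.0))
            intervals.append((0.0, end - 360.0))
    intervals.sort()
    covered = 0.0
    cur_start, cur_end = intervals[0]
    for s, e in intervals[1:]:
        if s <= cur_end:          # overlapping or touching: extend current interval
            cur_end = max(cur_end, e)
        else:                     # gap: close the current interval
            covered += cur_end - cur_start
            cur_start, cur_end = s, e
    covered += cur_end - cur_start
    return covered / 360.0


if __name__ == "__main__":
    hfov = 90.0  # assumed horizontal field of view per camera, in degrees
    for yaws in ([0.0], [0.0, 180.0], [0.0, 90.0, 180.0, 270.0], [0.0, 10.0]):
        print(len(yaws), yaws, azimuth_coverage(yaws, hfov))
```

Four evenly spaced 90° cameras close the full circle, whereas two cameras only 10° apart add just 10° over a single camera, which is the kind of diminishing return that makes camera placement matter as much as camera count.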

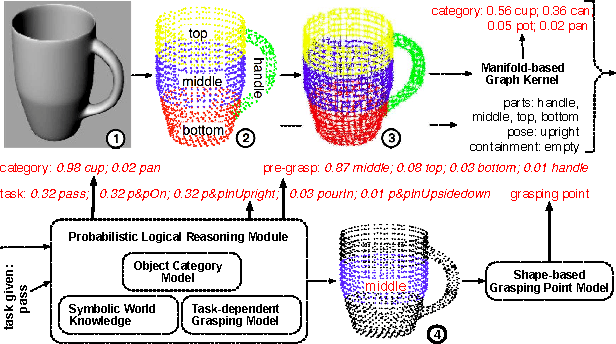

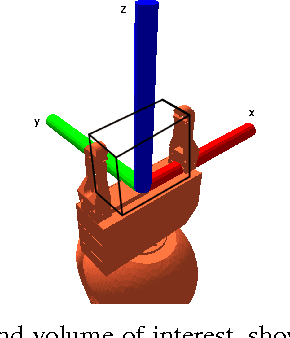

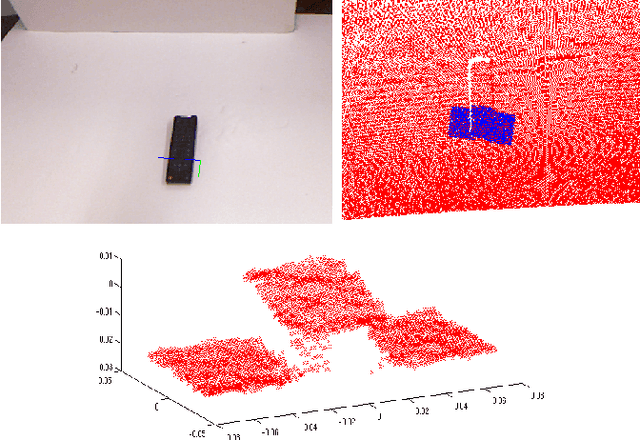

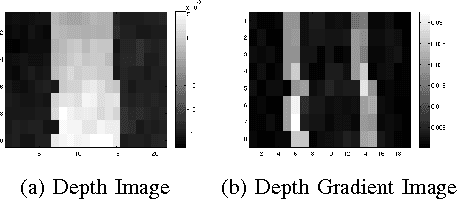

High-level Reasoning and Low-level Learning for Grasping: A Probabilistic Logic Pipeline

Nov 04, 2014

Abstract: While grasps must satisfy grasp stability criteria, good grasps depend on the specific manipulation scenario: the object, its properties and functionalities, as well as the task and grasp constraints. In this paper, we take such information into account for robot grasping by leveraging manifolds and symbolic object parts. Specifically, we introduce a new probabilistic logic module that first reasons semantically about pre-grasp configurations with respect to the intended tasks. Further, a mapping from part-related visual features to good grasping points is learned. The probabilistic logic module uses object-task affordances and object/task ontologies to encode rules that generalize over similar object parts and object/task categories. The use of probabilistic logic for task-dependent grasping contrasts with current approaches, which usually learn direct mappings from visual perceptions to task-dependent grasping points. We show the benefits of the full probabilistic logic pipeline experimentally and on a real robot.
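The abstract describes the probabilistic logic module only at a high level. As a toy illustration of how rules attached to ontology categories can generalize to related objects, the Python sketch below evaluates the probability that grasping a given part suits a task. It is not the paper's module: the ontology, rules, and probabilities are invented for illustration, and a probabilistic logic language such as ProbLog would derive such queries from declarative rules rather than hand-written code. The sketch assumes applicable rules are independent, so they combine by noisy-or, while the shared part-detection probability multiplies the result once.

```python
# Hypothetical object ontology: child category -> parent category.
ONTOLOGY = {
    "mug": "container",
    "cup": "container",
    "container": "object",
    "knife": "tool",
    "tool": "object",
}

# Hypothetical probabilistic rules: (category, task) -> {part: P(part affords task)}.
RULES = {
    ("container", "pour"): {"handle": 0.8, "body": 0.3},
    ("mug", "pour"): {"handle": 0.9},
    ("container", "pass"): {"body": 0.7},
}


def ancestors(category):
    """Category followed by its ontology ancestors, most specific first."""
    chain = [category]
    while category in ONTOLOGY:
        category = ONTOLOGY[category]
        chain.append(category)
    return chain


def grasp_probability(category, task, part, p_part_detected):
    """P(grasping `part` is suitable for `task`) under independence assumptions.

    Every rule that applies to the category or one of its ancestors is treated
    as an independent proof, so the rules combine by noisy-or. The shared
    part-detection fact multiplies the result once.
    """
    p_no_rule_fires = 1.0
    for cat in ancestors(category):
        p_rule = RULES.get((cat, task), {}).get(part)
        if p_rule is not None:
            p_no_rule_fires *= 1.0 - p_rule
    return p_part_detected * (1.0 - p_no_rule_fires)


if __name__ == "__main__":
    print(grasp_probability("mug", "pour", "handle", 0.95))    # mug and container rules
    print(grasp_probability("cup", "pour", "handle", 0.95))    # generalizes via container
    print(grasp_probability("knife", "pour", "handle", 0.95))  # no applicable rule: 0.0
```

The `cup` query has no rule of its own but still receives a probability through its `container` parent, which is the kind of generalization over object categories the abstract attributes to ontology-based rules.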
