Simon Bøgh

Kinematic Analysis and Integration of Vision Algorithms for a Mobile Manipulator Employed Inside a Self-Driving Laboratory

Oct 21, 2025

Abstract:Recent advances in robotics and autonomous systems have broadened the use of robots in laboratory settings, including automated synthesis, scalable reaction workflows, and collaborative tasks in self-driving laboratories (SDLs). This paper presents a comprehensive development of a mobile manipulator designed to assist human operators in such autonomous lab environments. Kinematic modeling of the manipulator is carried out based on the Denavit-Hartenberg (DH) convention, and an inverse kinematics solution is determined to enable precise and adaptive manipulation. A key focus of this research is enhancing the manipulator's ability to reliably grasp textured objects, a critical component of autonomous handling tasks. Advanced vision-based algorithms are implemented to perform real-time object detection and pose estimation, guiding the manipulator in dynamic grasping and following tasks. In this work, we integrate a vision method that combines feature-based detection with homography-driven pose estimation, leveraging depth information to represent an object's pose as a 2D planar projection within 3D space. This adaptive capability enables the system to accommodate variations in object orientation and supports robust autonomous manipulation across diverse environments. By enabling autonomous experimentation and human-robot collaboration, this work contributes to the scalability and reproducibility of next-generation chemical laboratories.

* International Journal of Intelligent Robotics and Applications 2025
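
The Denavit-Hartenberg modeling mentioned in the abstract above can be illustrated with a minimal forward-kinematics sketch. It assumes the standard (distal) DH convention and a hypothetical planar two-link arm; the link lengths, joint angles, and function names are illustrative and are not taken from the paper.

```python
import math

def dh_transform(theta, d, a, alpha):
    """4x4 homogeneous transform of one joint (standard DH convention)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca,  st * sa, a * ct],
        [st,  ct * ca, -ct * sa, a * st],
        [0.0,      sa,       ca,      d],
        [0.0,     0.0,      0.0,    1.0],
    ]

def matmul4(A, B):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def forward_kinematics(dh_rows):
    """Chain the per-joint transforms; returns the base-to-tool transform."""
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for theta, d, a, alpha in dh_rows:
        T = matmul4(T, dh_transform(theta, d, a, alpha))
    return T

# Hypothetical planar two-link arm: rows are (theta, d, a, alpha).
planar_arm = [
    (math.pi / 2, 0.0, 1.0, 0.0),   # link 1: length 1.0, rotated +90 degrees
    (-math.pi / 2, 0.0, 0.5, 0.0),  # link 2: length 0.5, rotated -90 degrees
]
T = forward_kinematics(planar_arm)
x, y, z = T[0][3], T[1][3], T[2][3]  # tool position in the base frame
```

For this arm the first link points along the base y-axis and the second link along the base x-axis, so the tool ends up at roughly (0.5, 1.0, 0.0). An inverse kinematics solver would search for joint angles that reproduce a desired `T`.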

A Complete System for Automated 3D Semantic-Geometric Mapping of Corrosion in Industrial Environments

Apr 21, 2024

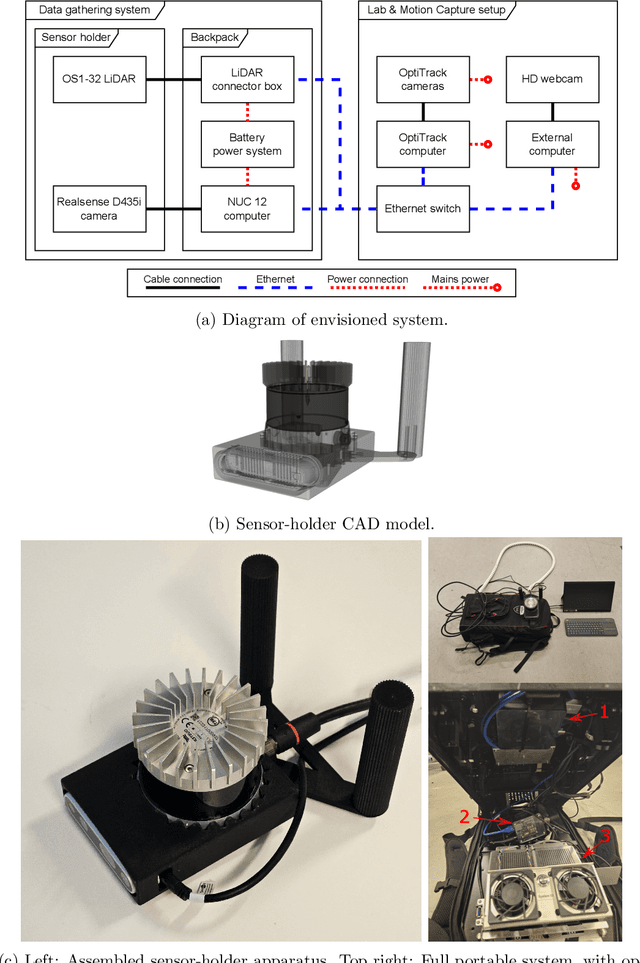

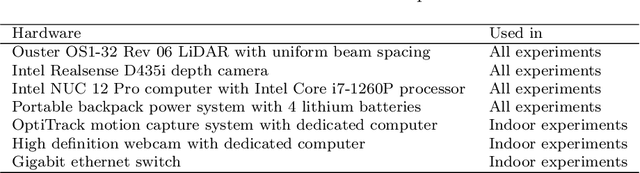

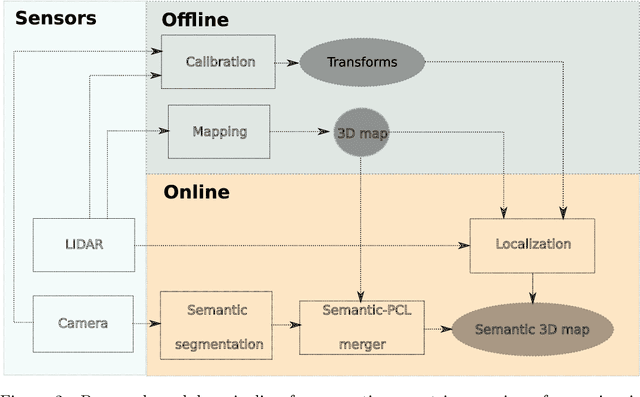

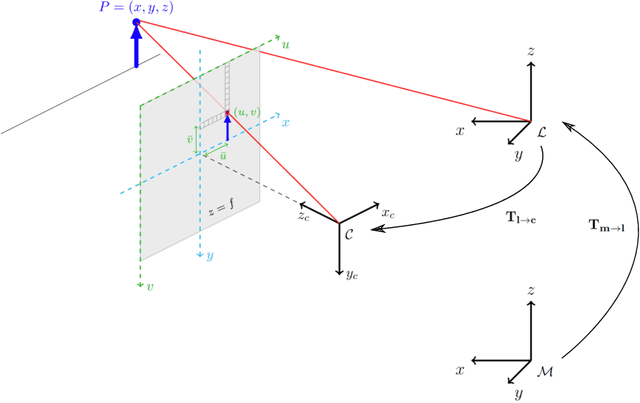

Abstract:Corrosion, a naturally occurring process leading to the deterioration of metallic materials, demands diligent detection for quality control and the preservation of metal-based objects, especially within industrial contexts. Traditional techniques for corrosion identification, including ultrasonic testing, radiographic testing, and magnetic flux leakage, necessitate the deployment of expensive and bulky equipment on-site for effective data acquisition. An unexplored alternative involves employing lightweight, conventional camera systems and state-of-the-art computer vision methods for its identification. In this work, we propose a complete system for semi-automated corrosion identification and mapping in industrial environments. We combine recent advances in LiDAR-based methods for localization and mapping with vision-based semantic segmentation deep learning techniques in order to build semantic-geometric maps of industrial environments. Unlike previous corrosion identification systems available in the literature, our multi-modal system is low-cost, portable, and semi-autonomous, and allows large datasets to be collected by untrained personnel. A set of experiments in an indoor laboratory environment quantitatively demonstrates the high accuracy of the employed LiDAR-based 3D mapping and localization system, with average absolute and relative pose errors of less than 0.05 m and 0.02 m, respectively. Also, our data-driven semantic segmentation model achieves around 70% precision when trained with our pixel-wise manually annotated dataset.
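
To make the reported segmentation metric concrete, the sketch below computes pixel-wise precision, TP / (TP + FP), for a binary corrosion mask. The function name and the tiny masks are illustrative assumptions; the abstract does not describe the paper's evaluation code.

```python
def pixel_precision(pred_mask, true_mask):
    """Pixel-wise precision for binary masks given as nested lists of 0/1."""
    tp = fp = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p:              # pixel predicted as corrosion
                if t:
                    tp += 1    # correctly predicted corrosion
                else:
                    fp += 1    # predicted corrosion on a clean pixel
    # Assumption: return 0.0 when nothing was predicted as corrosion.
    return tp / (tp + fp) if (tp + fp) else 0.0

# Hypothetical 2x3 masks (1 = corrosion, 0 = background).
pred = [[1, 1, 0],
        [0, 1, 0]]
true = [[1, 0, 0],
        [0, 1, 1]]
precision = pixel_precision(pred, true)  # 2 true positives, 1 false positive
```

Here two of the three pixels predicted as corrosion are correct, so the precision is 2/3. The missed corrosion pixel in the bottom-right corner lowers recall but does not affect precision.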

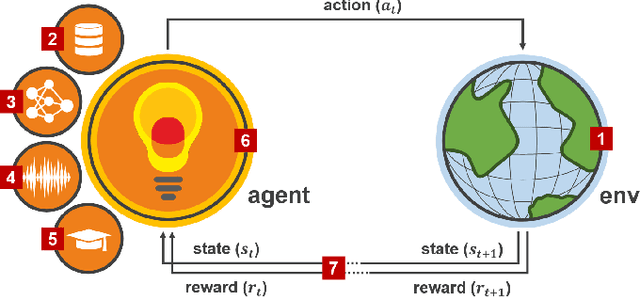

Teacher-Student Reinforcement Learning for Mapless Navigation using a Planetary Space Rover

Sep 22, 2023

Abstract:We address the challenge of enhancing navigation autonomy for planetary space rovers using reinforcement learning (RL). The ambition of future space missions necessitates advanced autonomous navigation capabilities for rovers to meet mission objectives. RL's potential in robotic autonomy is evident, but its reliance on simulations poses a challenge. Transferring policies to real-world scenarios often encounters the "reality gap", disrupting the transition from virtual to physical environments. The reality gap is exacerbated in the context of mapless navigation on Mars and Moon-like terrains, where unpredictable terrains and environmental factors play a significant role. Effective navigation requires a method attuned to these complexities and real-world data noise. We introduce a novel two-stage RL approach using offline noisy data. Our approach employs a teacher-student policy learning paradigm, inspired by the "learning by cheating" method. The teacher policy is trained in simulation. Subsequently, the student policy is trained on noisy data, aiming to mimic the teacher's behaviors while being more robust to real-world uncertainties. Our policies are transferred to a custom-designed rover for real-world testing. Comparative analyses between the teacher and student policies reveal that our approach offers improved behavioral performance, heightened noise resilience, and more effective sim-to-real transfer.
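
The teacher-student idea can be sketched in a heavily simplified form: a fixed teacher acts on clean states, and a student is fitted to reproduce the teacher's actions from noisy observations of those states. This toy uses a one-dimensional linear policy and plain supervised regression instead of RL; the teacher gain, noise level, and all names are assumptions made for illustration, not the paper's setup.

```python
import random

def teacher_policy(state):
    """Hypothetical teacher: steering command proportional to heading error."""
    return -0.8 * state

def train_student(n_samples=2000, noise_std=0.1, lr=0.5, epochs=50, seed=0):
    """Fit a linear student w so that w * noisy_obs mimics the teacher."""
    rng = random.Random(seed)
    states = [rng.uniform(-1.0, 1.0) for _ in range(n_samples)]
    # The student only sees noisy observations of the privileged states.
    noisy_obs = [s + rng.gauss(0.0, noise_std) for s in states]
    targets = [teacher_policy(s) for s in states]

    w = 0.0
    for _ in range(epochs):
        # Gradient of the mean squared imitation error with respect to w.
        grad = sum(2.0 * (w * o - t) * o
                   for o, t in zip(noisy_obs, targets)) / n_samples
        w -= lr * grad
    return w

student_gain = train_student()
```

The fitted gain ends up slightly smaller in magnitude than the teacher's -0.8, because a least-squares student discounts observations it knows to be noisy. That is one simple way to see how training on noisy data changes the learned behavior.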

A data-driven modular architecture with denoising autoencoders for health indicator construction in a manufacturing process

Aug 10, 2022

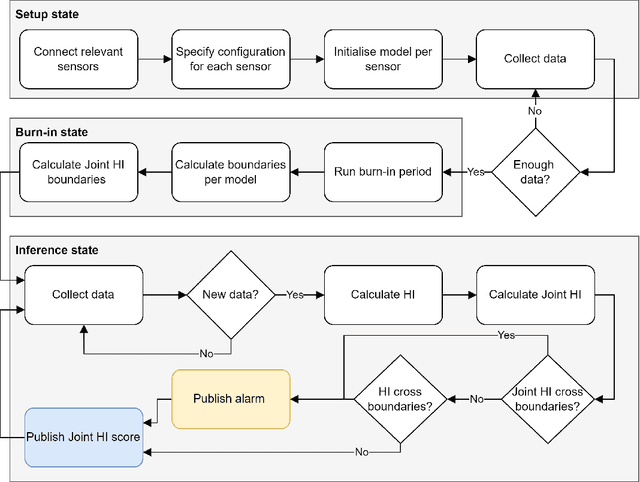

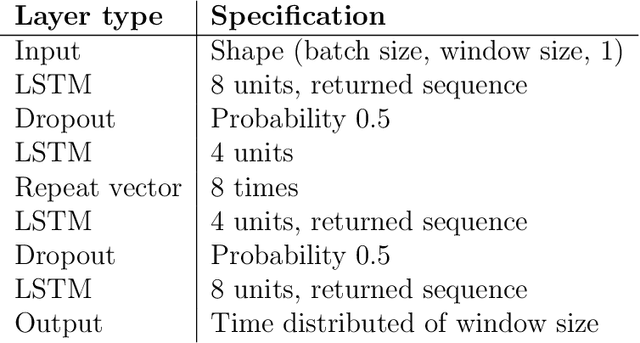

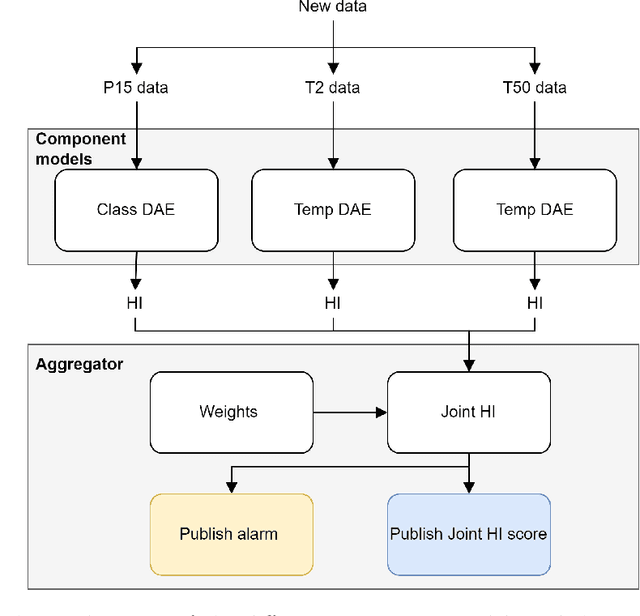

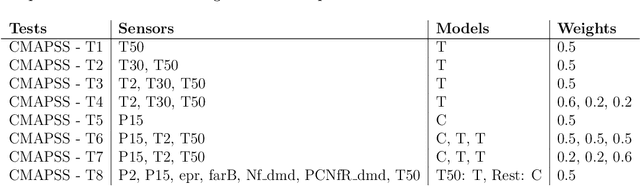

Abstract:Within the field of prognostics and health management (PHM), health indicators (HIs) can be used to aid production, e.g., to schedule maintenance and avoid failures. However, an HI is often engineered for a specific process and typically requires large amounts of historical data for set-up. This is especially a challenge for SMEs, which often lack sufficient resources and knowledge to benefit from PHM. In this paper, we propose ModularHI, a modular approach to the construction of an HI for a system without historical data. With ModularHI, the operator chooses which sensor inputs are available, and ModularHI then computes a baseline model based on data collected during a burn-in state. This baseline model is then used to detect whether the system starts to degrade over time. We test ModularHI on two open datasets, CMAPSS and N-CMAPSS. Results from the former dataset showcase our system's ability to detect degradation, while results from the latter point to directions for further research within the area. The results show that our novel approach is able to detect system degradation without historical data.
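
The burn-in idea can be illustrated with a deliberately simple stand-in. The paper's title refers to denoising autoencoders; the sketch below swaps in a per-sensor mean and standard deviation as the baseline model and uses the average absolute z-score as the health indicator. The sensor values and function names are hypothetical.

```python
import statistics

def fit_baseline(burn_in):
    """Per-sensor mean/std from burn-in samples (one list of readings each)."""
    columns = list(zip(*burn_in))
    means = [statistics.fmean(col) for col in columns]
    # Fall back to 1.0 for constant sensors to avoid division by zero.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return means, stds

def health_indicator(sample, baseline):
    """Mean absolute z-score of a sample; larger means further from baseline."""
    means, stds = baseline
    z_scores = [abs(x - m) / s for x, m, s in zip(sample, means, stds)]
    return sum(z_scores) / len(z_scores)

# Hypothetical burn-in data from two sensors (e.g. temperature, vibration).
burn_in = [[1.0, 10.0], [1.2, 10.5], [0.8, 9.5], [1.0, 10.0]]
baseline = fit_baseline(burn_in)

hi_healthy = health_indicator([1.1, 10.2], baseline)
hi_degraded = health_indicator([2.0, 13.0], baseline)
```

A reading close to the burn-in distribution yields an indicator below 1, while the drifted reading scores several standard deviations away. A learned model such as an autoencoder would play the role of `fit_baseline`, with reconstruction error in place of the z-score.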

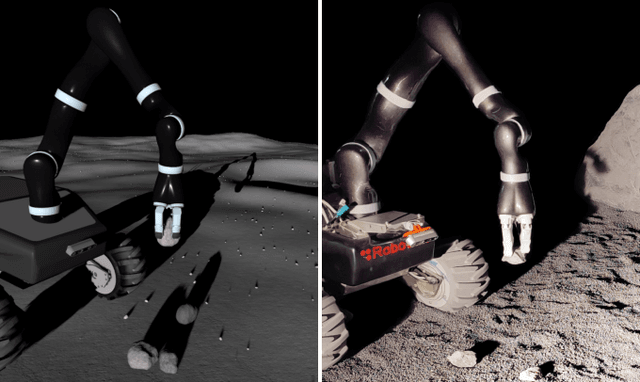

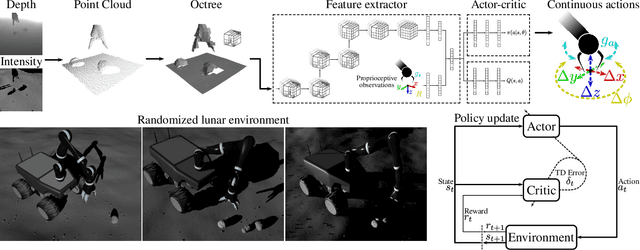

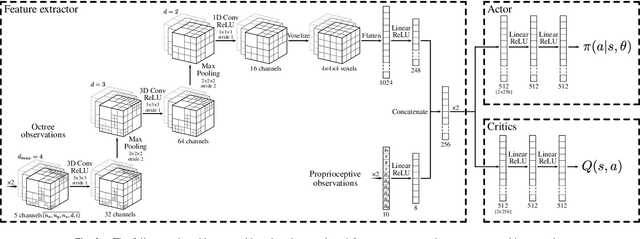

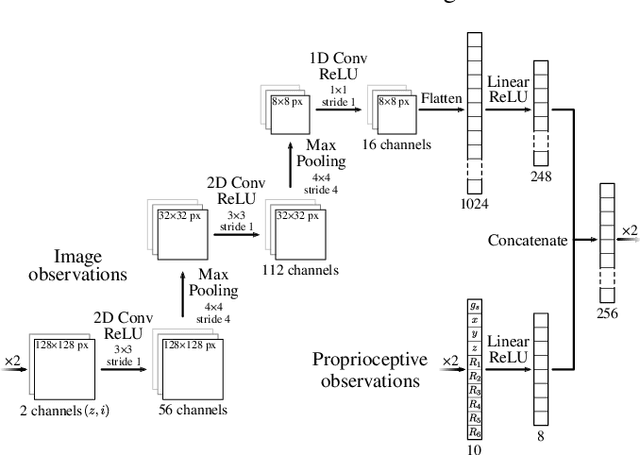

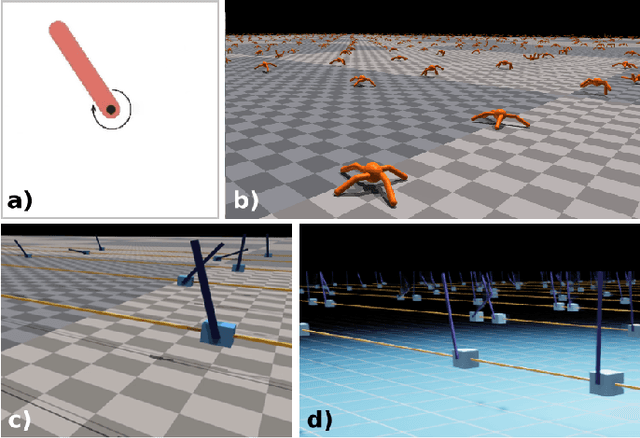

Learning to Grasp on the Moon from 3D Octree Observations with Deep Reinforcement Learning

Aug 01, 2022

Abstract:Extraterrestrial rovers with a general-purpose robotic arm have many potential applications in lunar and planetary exploration. Introducing autonomy into such systems is desirable for increasing the time that rovers can spend gathering scientific data and collecting samples. This work investigates the applicability of deep reinforcement learning for vision-based robotic grasping of objects on the Moon. A novel simulation environment with procedurally-generated datasets is created to train agents under challenging conditions in unstructured scenes with uneven terrain and harsh illumination. A model-free off-policy actor-critic algorithm is then employed for end-to-end learning of a policy that directly maps compact octree observations to continuous actions in Cartesian space. Experimental evaluation indicates that 3D data representations enable more effective learning of manipulation skills when compared to traditionally used image-based observations. Domain randomization improves the generalization of learned policies to novel scenes with previously unseen objects and different illumination conditions. To this end, we demonstrate zero-shot sim-to-real transfer by evaluating trained agents on a real robot in a Moon-analogue facility.

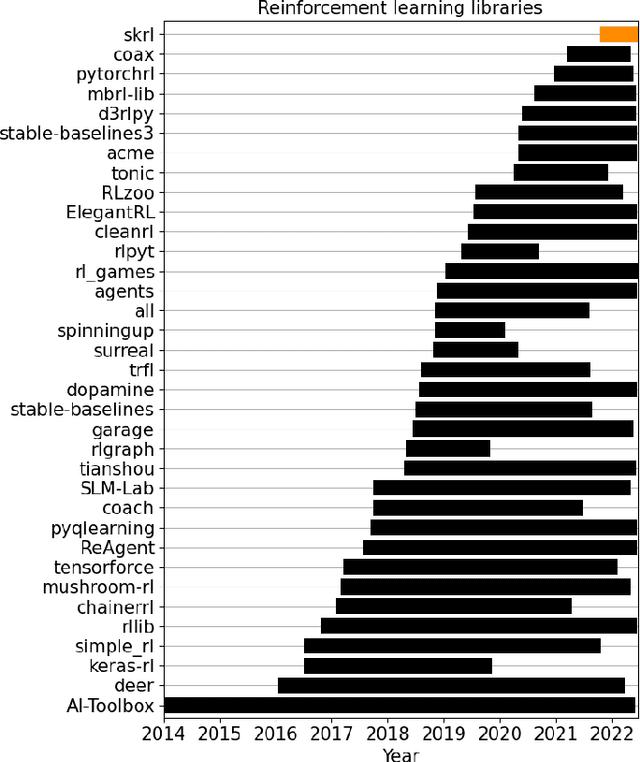

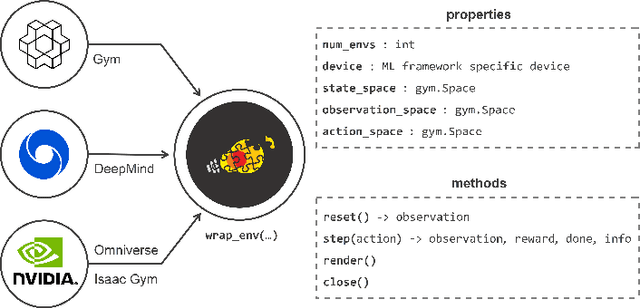

skrl: Modular and Flexible Library for Reinforcement Learning

Feb 08, 2022

Abstract:skrl is an open-source modular library for reinforcement learning written in Python and designed with a focus on readability, simplicity, and transparency of algorithm implementations. Apart from supporting environments that use the traditional OpenAI Gym interface, it allows loading, configuring, and operating NVIDIA Isaac Gym environments, enabling the parallel training of several agents with adjustable scopes, which may or may not share resources, in the same execution. The library's documentation can be found at https://skrl.readthedocs.io, and its source code is available on GitHub at https://github.com/Toni-SM/skrl.
