Erdal Kayacan

Monocular visual simultaneous localization and mapping: (r)evolution from geometry to deep learning-based pipelines

Mar 04, 2025

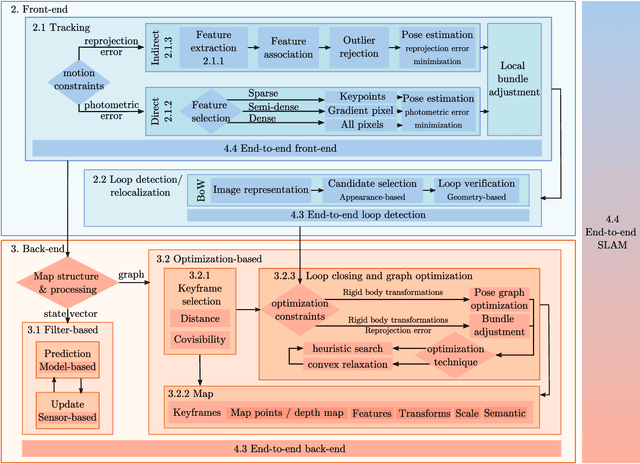

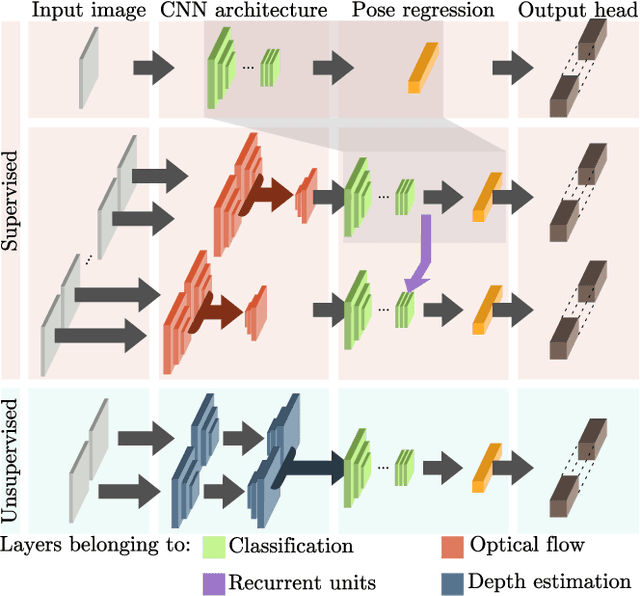

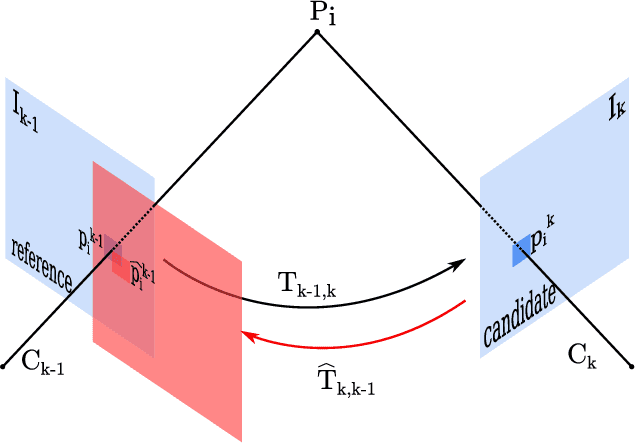

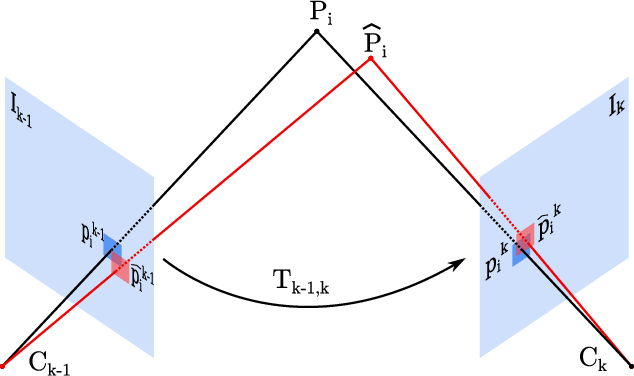

Abstract: With the rise of deep learning, there is a fundamental change in visual SLAM algorithms toward developing different modules trained as end-to-end pipelines. However, regardless of the implementation domain, visual SLAM's performance is subject to diverse environmental challenges, such as dynamic elements in outdoor environments, harsh imaging conditions in underwater environments, or blurriness in high-speed setups. These environmental challenges need to be identified to study the real-world viability of SLAM implementations. Motivated by the aforementioned challenges, this paper surveys the current state of visual SLAM algorithms according to the two main frameworks: geometry-based and learning-based SLAM. First, we introduce a general formulation of the SLAM pipeline that includes most of the implementations in the literature. Second, those implementations are classified and surveyed for geometry and learning-based SLAM. After that, environment-specific challenges are formulated to enable experimental evaluation of the resilience of different visual SLAM classes to varying imaging conditions. We address two significant issues in surveying visual SLAM, providing (1) a consistent classification of visual SLAM pipelines and (2) a robust evaluation of their performance under different deployment conditions. Finally, we give our take on future opportunities for visual SLAM implementations.

Continual Learning for Robust Gate Detection under Dynamic Lighting in Autonomous Drone Racing

May 02, 2024

Abstract: In autonomous and mobile robotics, a principal challenge is resilient real-time environmental perception, particularly in situations characterized by unknown and dynamic elements, as exemplified by autonomous drone racing. This study introduces a perception technique for detecting drone racing gates under illumination variations, which are common during high-speed drone flights. The proposed technique relies on a lightweight neural network backbone augmented with continual learning capabilities. The approach combines predictions of the gates' positional coordinates, distance, and orientation into a single pose tuple. A comprehensive set of tests underscores the efficacy of this approach in diverse and challenging scenarios, specifically those involving variable lighting conditions. The proposed methodology exhibits notable robustness to illumination variations, thereby substantiating its effectiveness.

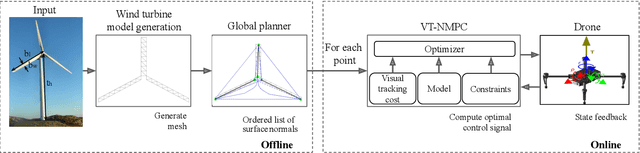

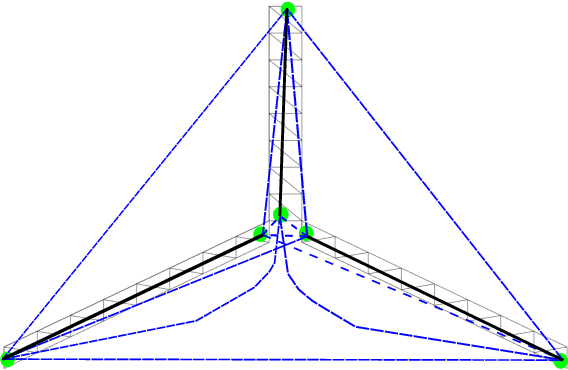

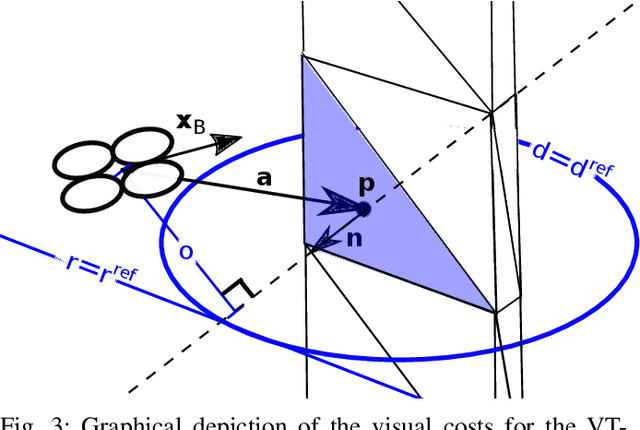

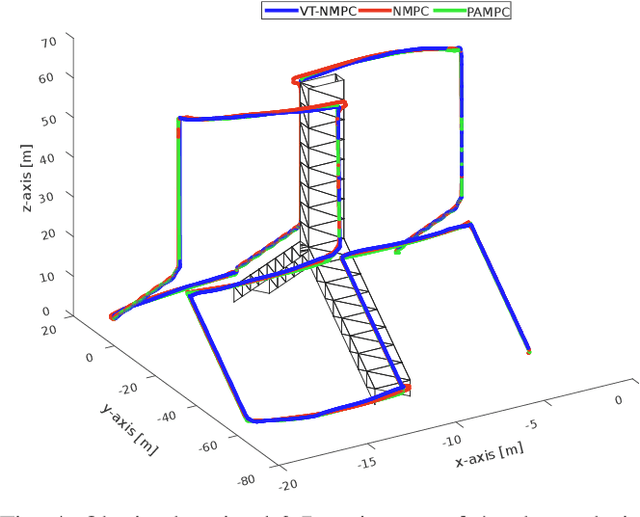

Visual Tracking Nonlinear Model Predictive Control Method for Autonomous Wind Turbine Inspection

Oct 21, 2023

Abstract: Automated visual inspection of on- and offshore wind turbines using aerial robots provides several benefits, namely, a safe working environment by circumventing the need for workers to be suspended high above the ground, reduced inspection time, preventive maintenance, and access to hard-to-reach areas. A novel nonlinear model predictive control (NMPC) framework alongside a global wind turbine path planner is proposed to achieve distance-optimal coverage for wind turbine inspection. Unlike traditional MPC formulations, visual tracking NMPC (VT-NMPC) is designed to track an inspection surface, instead of a position and heading trajectory, thereby circumventing the need to provide an accurate predefined trajectory for the drone. An additional capability of the proposed VT-NMPC method is that, by incorporating inspection requirements as visual tracking costs to minimize, it naturally achieves the inspection task while respecting the physical constraints of the drone. Multiple simulation runs and real-world tests demonstrate the efficiency and efficacy of the proposed automated inspection framework, which outperforms traditional MPC designs by providing full coverage of the target wind turbine blades and remaining robust to changing wind conditions. The implementation codes are open-sourced.

UNav-Sim: A Visually Realistic Underwater Robotics Simulator and Synthetic Data-generation Framework

Oct 18, 2023

Abstract: Underwater robotic surveys can be costly due to the complex working environment and the need for various sensor modalities. While underwater simulators are essential, many existing simulators lack sufficient rendering quality, restricting their ability to transfer algorithms from simulation to real-world applications. To address this limitation, we introduce UNav-Sim, which, to the best of our knowledge, is the first simulator to incorporate the efficient, high-detail rendering of Unreal Engine 5 (UE5). UNav-Sim is open-source and includes an autonomous vision-based navigation stack. By supporting standard robotics tools like ROS, UNav-Sim enables researchers to develop and test algorithms for underwater environments efficiently.

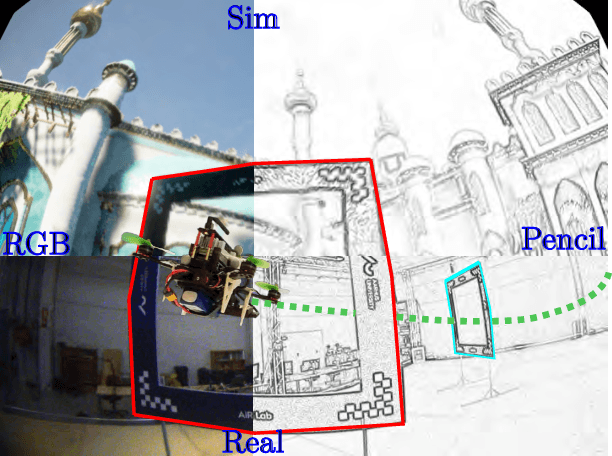

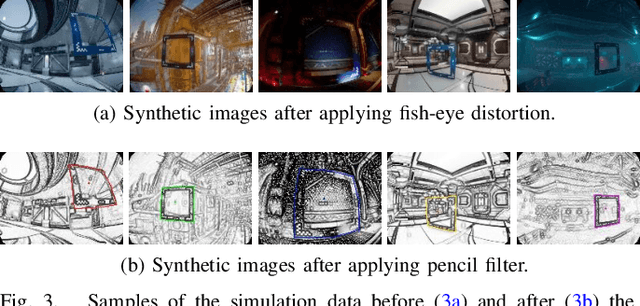

PencilNet: Zero-Shot Sim-to-Real Transfer Learning for Robust Gate Perception in Autonomous Drone Racing

Jul 28, 2022

Abstract: In autonomous and mobile robotics, one of the main challenges is the robust on-the-fly perception of the environment, which is often unknown and dynamic, as in autonomous drone racing. In this work, we propose a novel deep neural network-based perception method for racing gate detection, PencilNet, which relies on a lightweight neural network backbone on top of a pencil filter. This approach unifies predictions of the gates' 2D position, distance, and orientation in a single pose tuple. We show that our method is effective for zero-shot sim-to-real transfer learning that does not need any real-world training samples. Moreover, our framework is highly robust to illumination changes commonly seen under rapid flight compared to state-of-the-art methods. A thorough set of experiments demonstrates the effectiveness of this approach in multiple challenging scenarios, where the drone completes various tracks under different lighting conditions.

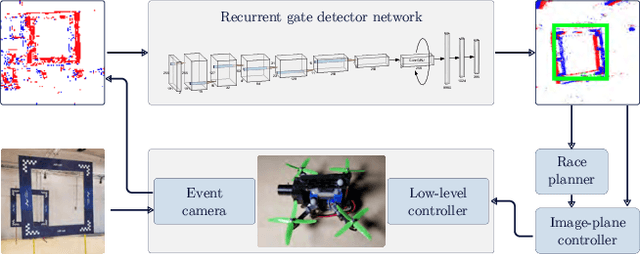

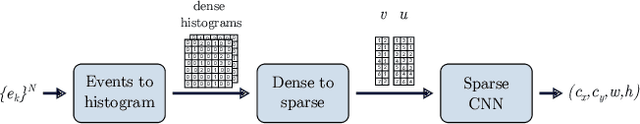

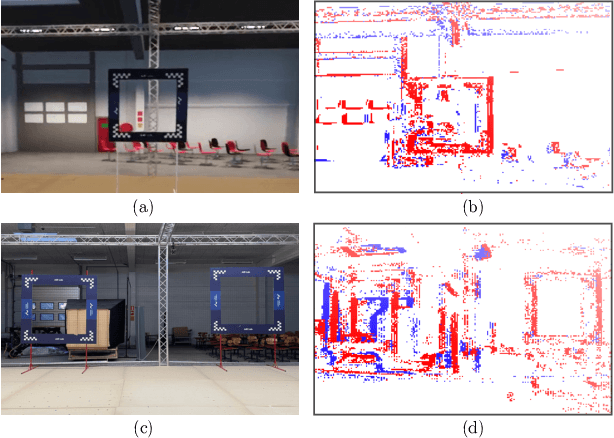

Event-based Navigation for Autonomous Drone Racing with Sparse Gated Recurrent Network

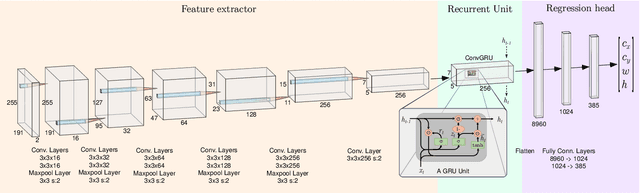

Apr 05, 2022

Abstract: Event-based vision has already revolutionized the perception task for robots by promising faster response, lower energy consumption, and lower bandwidth without introducing motion blur. In this work, a novel deep learning method based on gated recurrent units utilizing sparse convolutions is proposed for detecting gates in a race track using event-based vision for the autonomous drone racing problem. We demonstrate the efficiency and efficacy of the perception pipeline on a real robot platform that can safely navigate a typical autonomous drone racing track in real time. Throughout the experiments, we show that event-based vision with the proposed gated recurrent unit and models pretrained on simulated event data significantly improve the gate detection precision. Furthermore, an event-based drone racing dataset consisting of both simulated and real data sequences is publicly released.

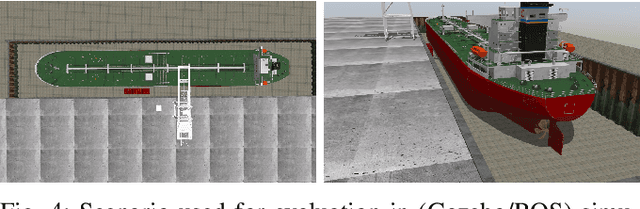

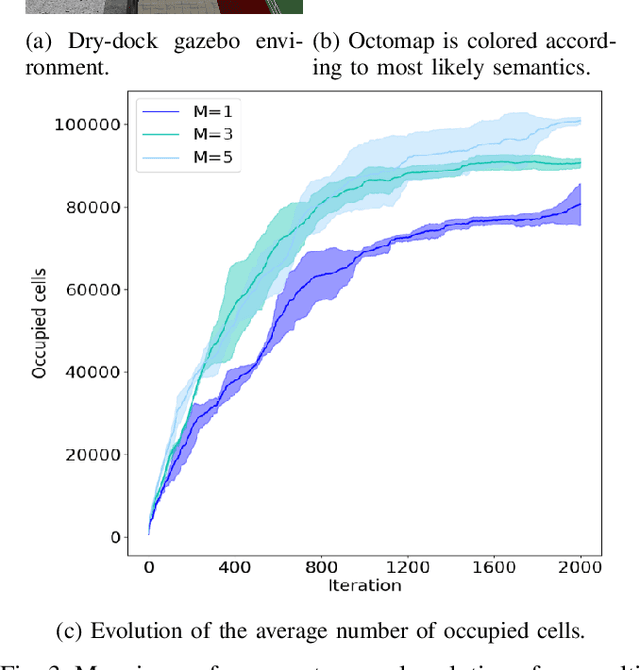

Real-Time Volumetric-Semantic Exploration and Mapping: An Uncertainty-Aware Approach

Sep 03, 2021

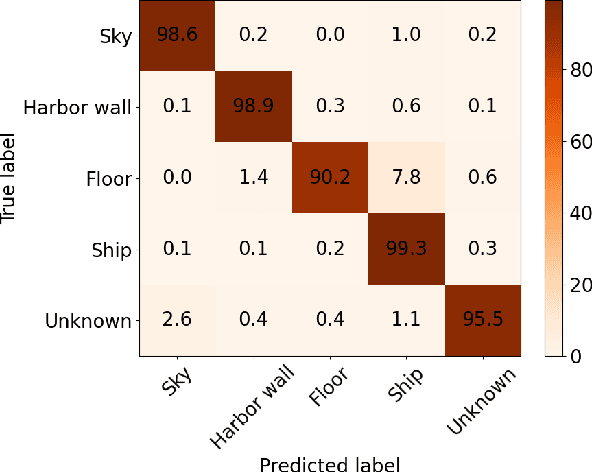

Abstract: In this work, we propose a holistic framework for autonomous aerial inspection tasks, using semantically aware yet computationally efficient planning and mapping algorithms. The system leverages state-of-the-art receding horizon exploration techniques for next-best-view (NBV) planning with geometric and semantic segmentation information provided by state-of-the-art deep convolutional neural networks (DCNNs), with the goal of enriching environment representations. The contributions of this article are threefold. First, we propose an efficient sensor observation model and a reward function that encodes the expected information gains from the observations taken from specific viewpoints. Second, we extend the reward function to incorporate not only geometric but also semantic probabilistic information, provided by a DCNN for semantic segmentation that operates in real time. The incorporation of semantic information in the environment representation allows biasing exploration towards specific objects, while ignoring task-irrelevant ones during planning. Finally, we employ our approaches in an autonomous drone shipyard inspection task. A set of simulations in realistic scenarios demonstrates the efficacy and efficiency of the proposed framework when compared with the state-of-the-art.
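As a rough illustration of how a view reward can combine geometric and semantic uncertainty, the sketch below scores a candidate viewpoint by summing Shannon entropies over the voxels it would observe. This is not the reward function from the paper, whose exact form the abstract does not give. The voxel representation (an occupancy probability paired with a class distribution), the function names, and the `semantic_weight` parameter are all assumptions made for illustration.

```python
import math


def bernoulli_entropy(p):
    """Shannon entropy (bits) of a binary occupancy probability."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a fully known voxel carries no remaining uncertainty
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def categorical_entropy(probs):
    """Shannon entropy (bits) of a semantic class distribution."""
    return -sum(q * math.log2(q) for q in probs if q > 0.0)


def view_reward(voxels, semantic_weight=1.0):
    """Hypothetical reward: total uncertainty a view could resolve.

    `voxels` is an iterable of (occupancy_probability, class_probabilities)
    pairs for the voxels assumed visible from the candidate viewpoint.
    """
    total = 0.0
    for occupancy_p, class_probs in voxels:
        total += bernoulli_entropy(occupancy_p)
        total += semantic_weight * categorical_entropy(class_probs)
    return total


# One maximally uncertain voxel and one fully known voxel.
candidate = [(0.5, [0.5, 0.5]), (1.0, [1.0])]
reward = view_reward(candidate)
```

Under these assumptions, a planner would evaluate `view_reward` for each candidate viewpoint and prefer the one with the highest score. Weighting individual classes differently is one way exploration could be biased towards task-relevant objects.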

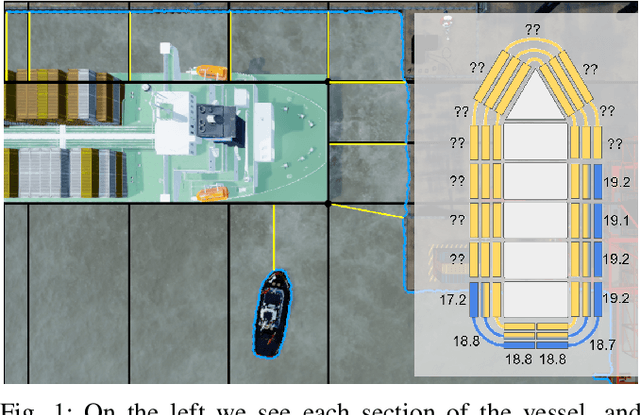

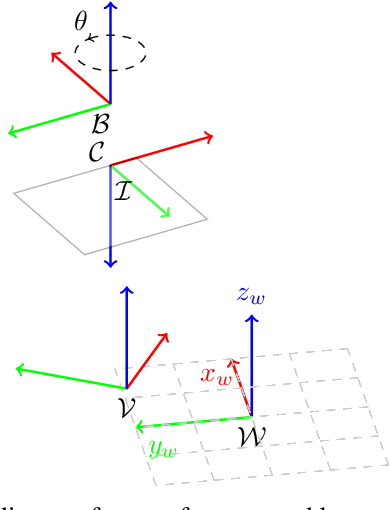

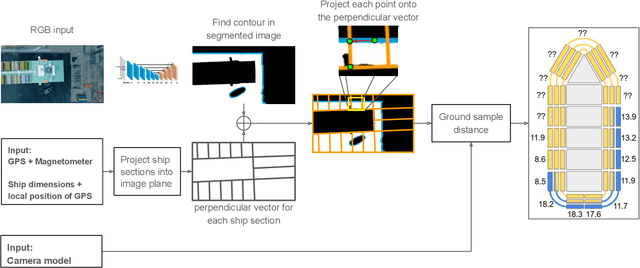

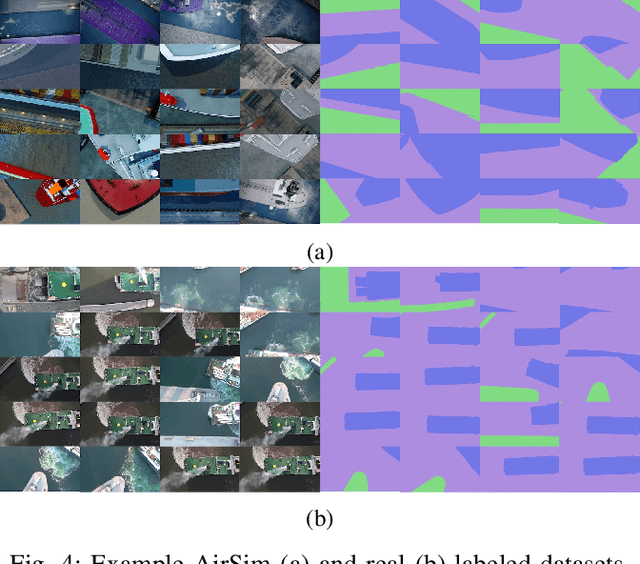

Safe Vessel Navigation Visually Aided by Autonomous Unmanned Aerial Vehicles in Congested Harbors and Waterways

Aug 09, 2021

Abstract: In the maritime sector, safe vessel navigation is of great importance, particularly in congested harbors and waterways. The focus of this work is to estimate the distance between an object of interest and potential obstacles using a companion UAV. The proposed approach fuses GPS data with long-range aerial images. First, we employ a semantic segmentation DNN for discriminating the vessel of interest, water, and potential solid objects using raw image data. The network is trained with both real images and images generated and automatically labeled from a realistic AirSim simulation environment. Then, the distances between the extracted vessel and non-water obstacle blobs are computed using a novel GSD estimation algorithm. To the best of our knowledge, this work is the first attempt to detect and estimate distances to unknown objects from long-range visual data captured with conventional RGB cameras and auxiliary absolute positioning systems (e.g. GPS). The simulation results illustrate the accuracy and efficacy of the proposed method for visually aided navigation of vessels assisted by a UAV.
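For context on how a ground sampling distance (GSD) turns pixel offsets into metric distances, the sketch below uses the standard pinhole relation for a downward-facing (nadir) camera. It is not the novel GSD estimation algorithm from the paper, which the abstract does not detail. The function names, the nadir-view assumption, and the example camera parameters are all hypothetical.

```python
import math


def ground_sampling_distance(altitude_m, focal_length_mm, sensor_width_mm, image_width_px):
    """Metres of ground covered by one pixel, assuming a nadir-pointing pinhole camera."""
    return (altitude_m * sensor_width_mm) / (focal_length_mm * image_width_px)


def metric_distance(pixel_a, pixel_b, gsd_m_per_px):
    """Approximate ground distance between two pixel coordinates given a GSD."""
    dx = pixel_b[0] - pixel_a[0]
    dy = pixel_b[1] - pixel_a[1]
    return math.hypot(dx, dy) * gsd_m_per_px


# Hypothetical camera: 100 m altitude, 10 mm focal length,
# 10 mm sensor width, 1000 px image width.
gsd = ground_sampling_distance(100.0, 10.0, 10.0, 1000)

# Hypothetical centroids of a vessel blob and an obstacle blob, in pixels.
distance_m = metric_distance((0, 0), (30, 40), gsd)
```

In this simplified setting the altitude would come from the UAV's positioning data and the pixel coordinates from the segmentation output. Oblique, long-range views like those the abstract describes would need additional geometry that this sketch does not cover.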

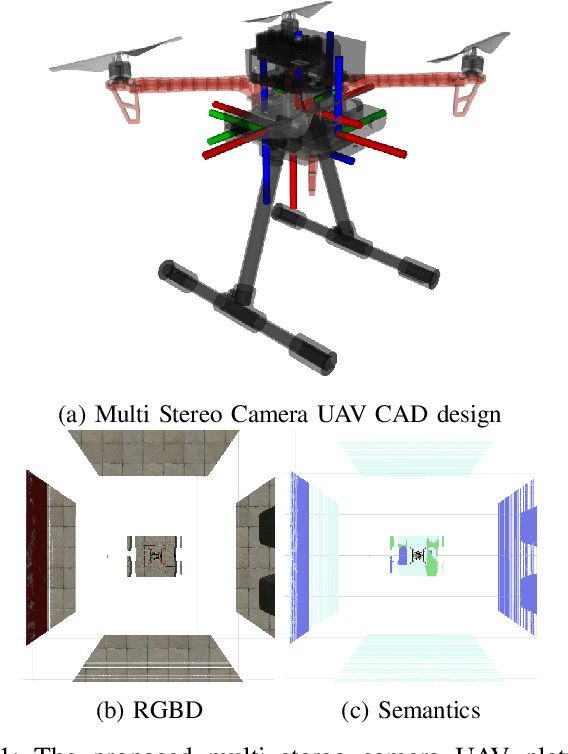

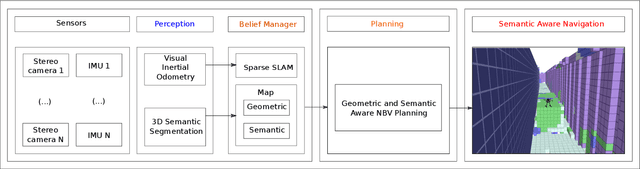

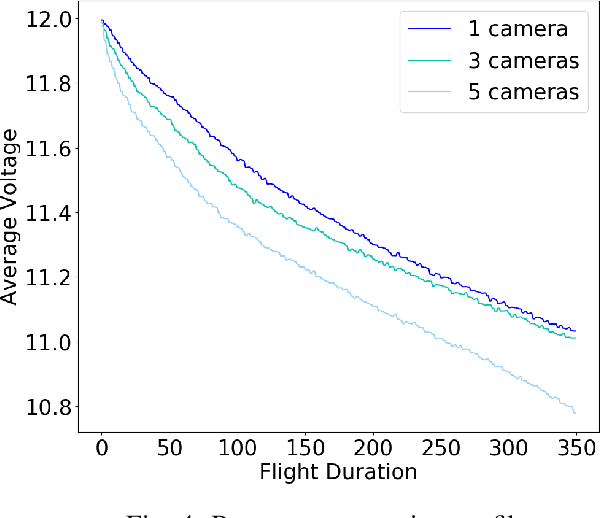

On the Advantages of Multiple Stereo Vision Camera Designs for Autonomous Drone Navigation

May 26, 2021

Abstract: In this work, we showcase the design and performance assessment of a multi-camera UAV coupled with state-of-the-art planning and mapping algorithms for autonomous navigation. The system leverages state-of-the-art receding horizon exploration techniques for Next-Best-View (NBV) planning with 3D and semantic information, provided by a reconfigurable multi-stereo camera system. We employ our approaches in an autonomous drone-based inspection task and evaluate them in an autonomous exploration and mapping scenario. We discuss the advantages and limitations of using multi-stereo camera flying systems, and the trade-off between the number of cameras and mapping performance.

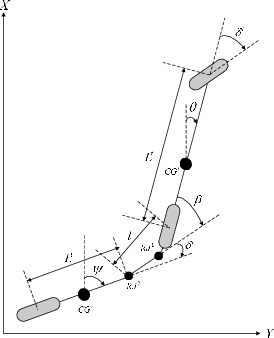

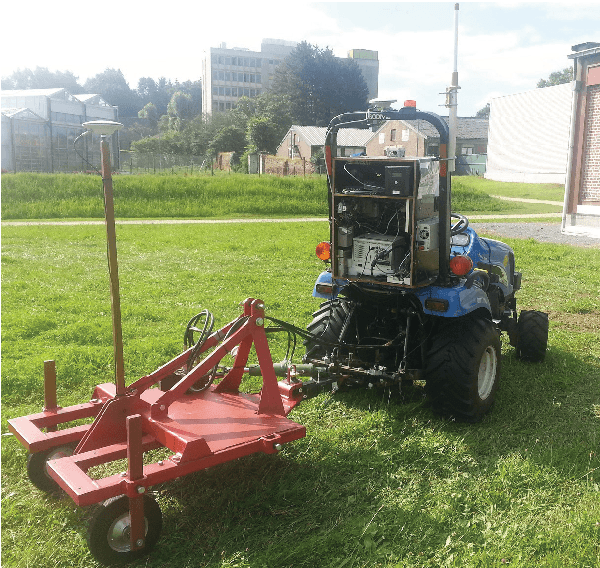

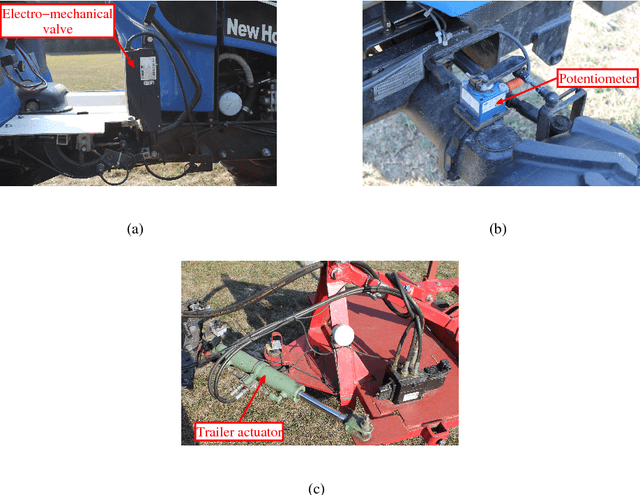

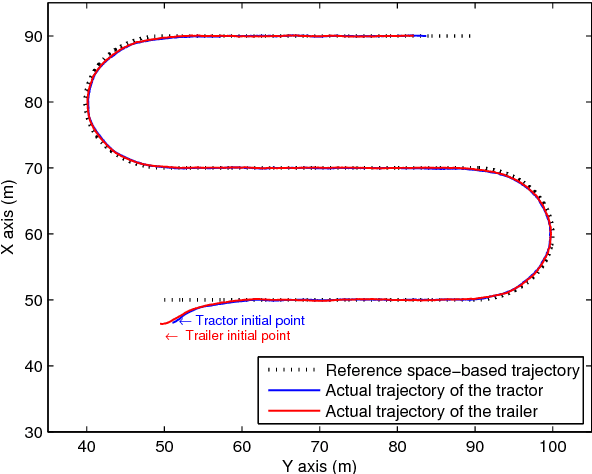

Distributed nonlinear model predictive control of an autonomous tractor-trailer system

Apr 20, 2021

Abstract: This paper addresses the trajectory tracking problem of an autonomous tractor-trailer system using a fast distributed nonlinear model predictive control algorithm combined with nonlinear moving horizon estimation for state and parameter estimation, in which constraints on the inputs and the states can be incorporated. The proposed control algorithm is capable of driving the tractor-trailer system to any desired trajectory, ensuring high control accuracy and robustness against environmental disturbances.

* arXiv admin note: substantial text overlap with arXiv:2104.02063, arXiv:2104.01728
