Andrew P. Bradley

Automatic lesion detection, segmentation and characterization via 3D multiscale morphological sifting in breast MRI

Jul 07, 2020

Abstract: Previous studies on computer-aided detection/diagnosis (CAD) in 4D breast magnetic resonance imaging (MRI) regard lesion detection, segmentation and characterization as separate tasks, and typically require users to manually select 2D MRI slices or regions of interest as the input. In this work, we present a breast MRI CAD system that can handle 4D multimodal breast MRI data, and integrate lesion detection, segmentation and characterization with no user intervention. The proposed CAD system consists of three major stages: region candidate generation, feature extraction and region candidate classification. Breast lesions are first extracted as region candidates using the novel 3D multiscale morphological sifting (MMS). The 3D MMS, which uses linear structuring elements to extract lesion-like patterns, can segment lesions from breast images accurately and efficiently. Analytical features are then extracted from all available 4D multimodal breast MRI sequences, including T1-, T2-weighted and DCE sequences, to represent the signal intensity, texture, morphological and enhancement kinetic characteristics of the region candidates. The region candidates are finally classified as lesion or normal tissue by the random under-sampling boost (RUSboost), and as malignant or benign lesion by the random forest. Evaluated on a breast MRI dataset which contains a total of 117 cases with 95 malignant and 46 benign lesions, the proposed system achieves a true positive rate (TPR) of 0.90 at 3.19 false positives per patient (FPP) for lesion detection and a TPR of 0.91 at an FPP of 2.95 for identifying malignant lesions without any user intervention. The average Dice similarity index (DSI) is 0.72 for lesion segmentation. Compared with previously proposed systems evaluated on the same breast MRI dataset, the proposed CAD system achieves a favourable performance in breast lesion detection and characterization.

Pre and Post-hoc Diagnosis and Interpretation of Malignancy from Breast DCE-MRI

Sep 25, 2018

Abstract: We propose a new method for breast cancer screening from DCE-MRI based on a post-hoc approach that is trained using weakly annotated data (i.e., labels are available only at the image level without any lesion delineation). Our proposed post-hoc method automatically diagnoses the whole volume and, for positive cases, it localizes the malignant lesions that led to such diagnosis. Conversely, traditional approaches follow a pre-hoc approach that initially localises suspicious areas that are subsequently classified to establish the breast malignancy; this approach is trained using strongly annotated data (i.e., it needs a delineation and classification of all lesions in an image). Another goal of this paper is to establish the advantages and disadvantages of both approaches when applied to breast screening from DCE-MRI. Relying on experiments on a breast DCE-MRI dataset that contains scans of 117 patients, our results show that the post-hoc method is more accurate for diagnosing the whole volume per patient, achieving an AUC of 0.91, while the pre-hoc method achieves an AUC of 0.81. However, localising the malignant lesions remains challenging for the post-hoc method due to the weakly labelled dataset employed during training.
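
For readers unfamiliar with the AUC figures quoted above, the following is a minimal sketch of how an area under the ROC curve can be computed from per-patient scores. It is not the authors' evaluation code: the function name `roc_auc` and the toy scores are hypothetical, and the sketch uses the standard pairwise (Mann-Whitney) formulation, in which the AUC is the fraction of positive/negative pairs that the classifier ranks correctly.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via pairwise comparison.

    scores: classifier outputs, higher means "more likely malignant".
    labels: truthy for positive (malignant) cases, falsy for negative ones.
    """
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))


# Toy example (made-up scores, not data from the paper).
print(roc_auc([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0]))
```

The quadratic loop is fine for a dataset of about a hundred patients; a rank-based implementation would be preferable for large cohorts.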

Model Agnostic Saliency for Weakly Supervised Lesion Detection from Breast DCE-MRI

Jul 23, 2018

Abstract: There is a heated debate on how to interpret the decisions provided by deep learning models (DLM), where the main approaches rely on the visualization of salient regions to interpret the DLM classification process. However, these approaches generally fail to satisfy three conditions for the problem of lesion detection from medical images: 1) for images with lesions, all salient regions should represent lesions, 2) for images containing no lesions, no salient region should be produced, and 3) lesions are generally small with relatively smooth borders. We propose a new model-agnostic paradigm to interpret DLM classification decisions supported by a novel definition of saliency that incorporates the conditions above. Our model-agnostic 1-class saliency detector (MASD) is tested on weakly supervised breast lesion detection from DCE-MRI, achieving state-of-the-art detection accuracy when compared to current visualization methods.

Training Medical Image Analysis Systems like Radiologists

Jun 12, 2018

Abstract: The training of medical image analysis systems using machine learning approaches follows a common script: collect and annotate a large dataset, train the classifier on the training set, and test it on a hold-out test set. This process bears no direct resemblance to radiologist training, which is based on solving a series of tasks of increasing difficulty, where each task involves the use of significantly smaller datasets than those used in machine learning. In this paper, we propose a novel training approach inspired by how radiologists are trained. In particular, we explore the use of meta-training that models a classifier based on a series of tasks. Tasks are selected using teacher-student curriculum learning, where each task consists of simple classification problems containing small training sets. We hypothesize that our proposed meta-training approach can be used to pre-train medical image analysis models. This hypothesis is tested on the automatic breast screening classification from DCE-MRI trained with weakly labeled datasets. The classification performance achieved by our approach is shown to be the best in the field for that application, compared to state-of-the-art baseline approaches: DenseNet, multiple instance learning and multi-task learning.

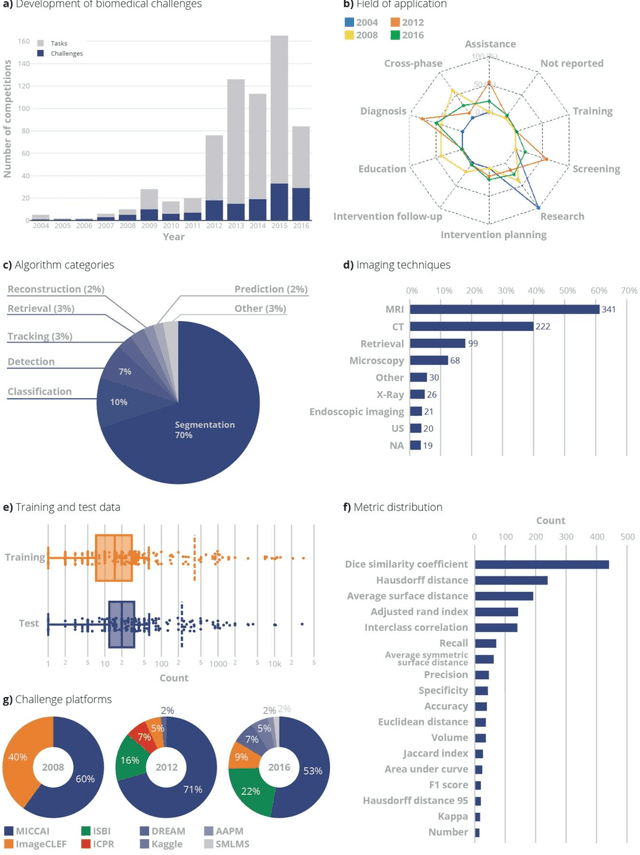

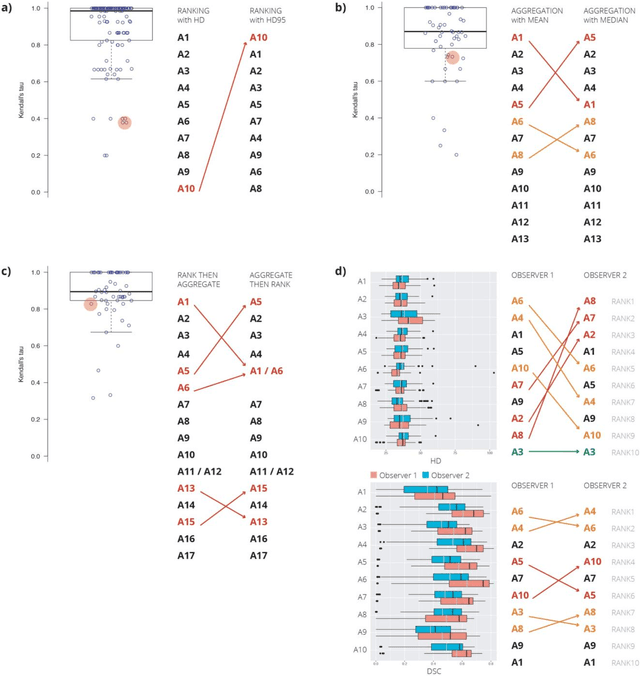

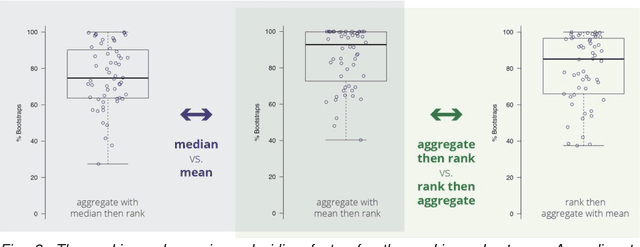

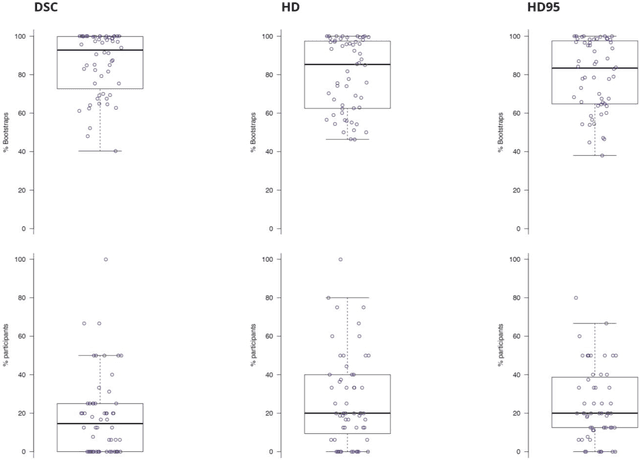

Is the winner really the best? A critical analysis of common research practice in biomedical image analysis competitions

Jun 06, 2018

Abstract: International challenges have become the standard for validation of biomedical image analysis methods. Given their scientific impact, it is surprising that a critical analysis of common practices related to the organization of challenges has not yet been performed. In this paper, we present a comprehensive analysis of biomedical image analysis challenges conducted up to now. We demonstrate the importance of challenges and show that the lack of quality control has critical consequences. First, reproducibility and interpretation of the results are often hampered as only a fraction of relevant information is typically provided. Second, the rank of an algorithm is generally not robust to a number of variables such as the test data used for validation, the ranking scheme applied and the observers that make the reference annotations. To overcome these problems, we recommend best practice guidelines and define open research questions to be addressed in the future.

Detecting hip fractures with radiologist-level performance using deep neural networks

Nov 17, 2017

Abstract: We developed an automated deep learning system to detect hip fractures from frontal pelvic x-rays, an important and common radiological task. Our system was trained on a decade of clinical x-rays (~53,000 studies) and can be applied to clinical data, automatically excluding inappropriate and technically unsatisfactory studies. We demonstrate diagnostic performance equivalent to a human radiologist and an area under the ROC curve of 0.994. Translated to clinical practice, such a system has the potential to increase the efficiency of diagnosis, reduce the need for expensive additional testing, expand access to expert-level medical image interpretation, and improve overall patient outcomes.

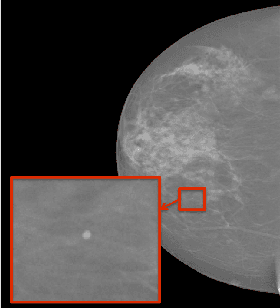

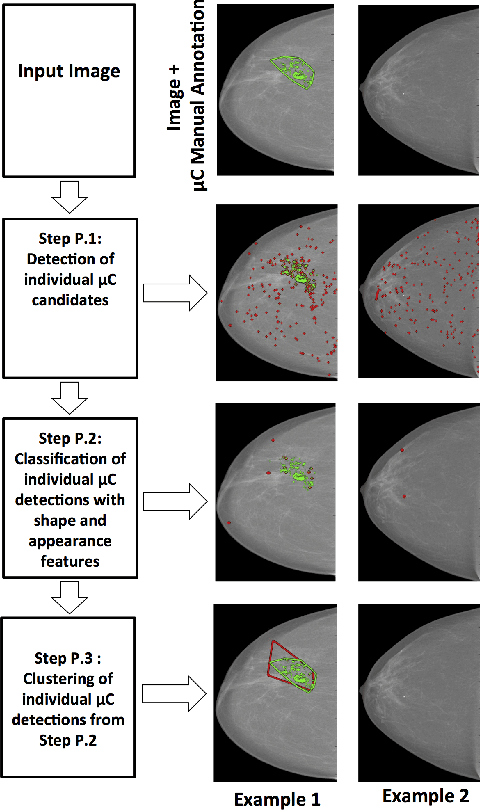

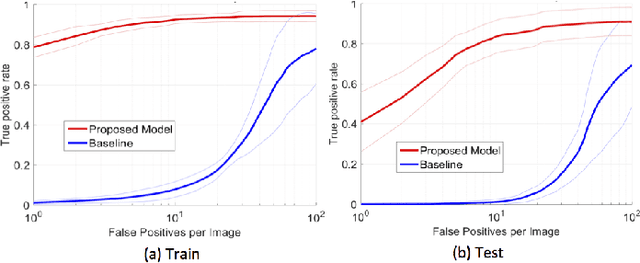

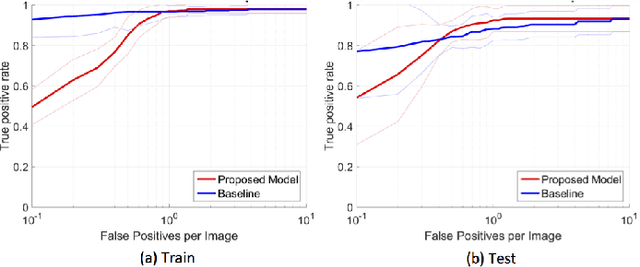

Automated Detection of Individual Micro-calcifications from Mammograms using a Multi-stage Cascade Approach

Oct 07, 2016

Abstract: In mammography, the efficacy of computer-aided detection methods depends, in part, on the robust localisation of micro-calcifications ($\mu$C). Currently, the most effective methods are based on three steps: 1) detection of individual $\mu$C candidates, 2) clustering of individual $\mu$C candidates, and 3) classification of $\mu$C clusters. The second step is motivated both by the need to reduce the number of false positive detections from the first step and by the evidence that malignancy depends on a relatively large number of $\mu$C detections within a certain area. In this paper, we propose a novel approach to $\mu$C detection, consisting of the detection *and* classification of individual $\mu$C candidates, based on shape and appearance features, using a cascade of boosting classifiers. The final step in our approach then clusters the remaining individual $\mu$C candidates. The main advantage of this approach lies in its ability to reject a significant number of false positive $\mu$C candidates compared to previously proposed methods. Specifically, on the INbreast dataset, we show that our approach has a true positive rate (TPR) for individual $\mu$Cs of 40% at one false positive per image (FPI) and a TPR of 80% at 10 FPI. These results are significantly more accurate than the current state of the art, which has a TPR of less than 1% at one FPI and a TPR of 10% at 10 FPI. Our results are competitive with the state of the art at the subsequent stage of detecting clusters of $\mu$Cs.
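
As an illustration of what "TPR at N false positives per image" means, here is a minimal sketch of reading one operating point off a ranked list of detections. It is not the paper's evaluation protocol: the function name `tpr_at_fp_rate` and the toy data are hypothetical, and the sketch assumes that detection scores are distinct and that each true $\mu$C is matched by at most one detection.

```python
def tpr_at_fp_rate(detections, n_lesions, n_images, max_fp_per_image):
    """Best true positive rate reachable without exceeding a false positive budget.

    detections: iterable of (score, is_hit) pairs pooled over all images,
                where is_hit is True if the detection matches a true lesion.
    n_lesions:  total number of true lesions in the dataset.
    n_images:   number of images the detections were pooled from.
    """
    # Lowering the score threshold admits detections in descending score order.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)

    tp = fp = 0
    best_tpr = 0.0
    for _score, is_hit in ranked:
        if is_hit:
            tp += 1
        else:
            fp += 1
        if fp / n_images > max_fp_per_image:
            break  # budget exceeded; any lower threshold is worse
        best_tpr = max(best_tpr, tp / n_lesions)
    return best_tpr


# Toy example (made-up detections): 4 true lesions spread over 2 images.
toy = [(0.9, True), (0.8, False), (0.7, True),
       (0.6, False), (0.5, False), (0.4, True)]
print(tpr_at_fp_rate(toy, n_lesions=4, n_images=2, max_fp_per_image=1.0))
```

Sweeping `max_fp_per_image` over a range of values traces out the FROC-style curve from which figures such as "TPR at 1 FPI" and "TPR at 10 FPI" are read.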

Automated 5-year Mortality Prediction using Deep Learning and Radiomics Features from Chest Computed Tomography

Jul 01, 2016

Abstract: We propose new methods for the prediction of 5-year mortality in elderly individuals using chest computed tomography (CT). The methods consist of a classifier that performs this prediction using a set of features extracted from the CT image and segmentation maps of multiple anatomic structures. We explore two approaches: 1) a unified framework based on deep learning, where features and classifier are automatically learned in a single optimisation process; and 2) a multi-stage framework based on the design and selection/extraction of hand-crafted radiomics features, followed by the classifier learning process. Experimental results, based on a dataset of 48 annotated chest CTs, show that the deep learning model produces a mean 5-year mortality prediction accuracy of 68.5%, while radiomics produces a mean accuracy that varies between 56% and 66% (depending on the feature selection/extraction method and classifier). The successful development of the proposed models has the potential to make a profound impact in preventive and personalised healthcare.

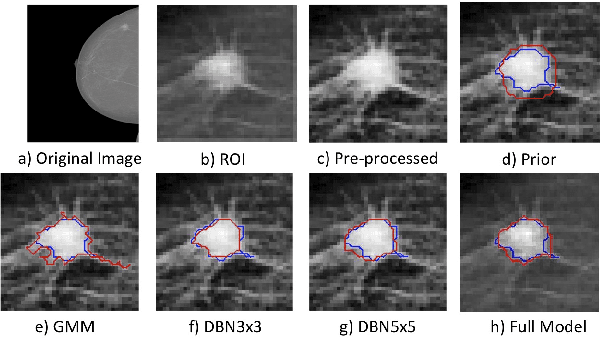

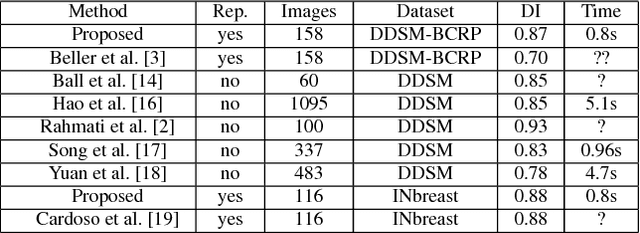

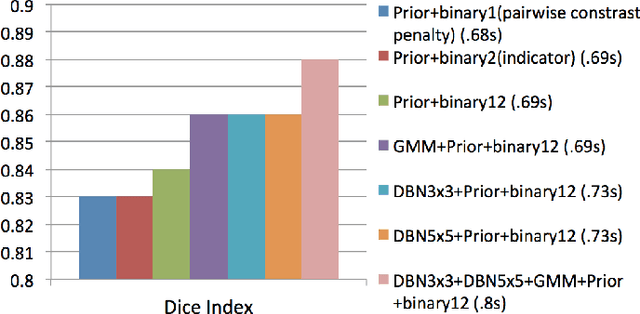

Deep Structured learning for mass segmentation from Mammograms

Dec 05, 2014

Abstract: In this paper, we present a novel method for the segmentation of breast masses from mammograms exploring structured and deep learning. Specifically, using structured support vector machine (SSVM), we formulate a model that combines different types of potential functions, including one that classifies image regions using deep learning. Our main goal with this work is to show the accuracy and efficiency improvements that these relatively new techniques can provide for the segmentation of breast masses from mammograms. We also propose an easily reproducible quantitative analysis to assess the performance of breast mass segmentation methodologies based on widely accepted accuracy and running time measurements on public datasets, which will facilitate further comparisons for this segmentation problem. In particular, we use two publicly available datasets (DDSM-BCRP and INbreast) and propose the computation of the running time taken for the methodology to produce a mass segmentation given an input image and the use of the Dice index to quantitatively measure the segmentation accuracy. For both databases, we show that our proposed methodology produces competitive results in terms of accuracy and running time.
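
The Dice index mentioned above is simple to state concretely. The following is a minimal sketch, not the authors' implementation: the function name `dice_index` is hypothetical, masks are assumed to be flattened into equal-length sequences of 0/1 values, and treating two empty masks as a perfect match is a convention chosen here rather than something taken from the paper.

```python
def dice_index(pred, truth):
    """Dice index 2|A∩B| / (|A| + |B|) between two binary masks.

    pred, truth: equal-length flat sequences of 0/1 (or falsy/truthy) values,
                 e.g. a segmentation mask flattened pixel by pixel.
    """
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of elements")

    overlap = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)

    # Assumption: two empty masks are considered to agree perfectly.
    if total == 0:
        return 1.0
    return 2.0 * overlap / total


# Toy example (made-up masks): one shared pixel, two foreground pixels each.
print(dice_index([1, 1, 0, 0], [1, 0, 1, 0]))
```

A value of 1 means the predicted and reference masks coincide exactly, and 0 means they share no foreground pixels.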
