Andrew Bradley

Uncertainty-Aware Autonomous Vehicles: Predicting the Road Ahead

Oct 26, 2025

Abstract:Autonomous Vehicle (AV) perception systems have advanced rapidly in recent years, providing vehicles with the ability to accurately interpret their environment. However, perception systems remain susceptible to errors caused by overconfident predictions in the case of rare events or out-of-sample data. This study equips an autonomous vehicle with the ability to 'know when it is uncertain', using an uncertainty-aware image classifier as part of the AV software stack. Specifically, the study exploits the ability of Random-Set Neural Networks (RS-NNs) to explicitly quantify prediction uncertainty. Unlike traditional CNNs or Bayesian methods, RS-NNs predict belief functions over sets of classes, allowing the system to identify and signal uncertainty clearly in novel or ambiguous scenarios. The system is tested in a real-world autonomous racing vehicle software stack, with the RS-NN classifying the layout of the road ahead and providing the associated uncertainty of the prediction. Performance of the RS-NN under a range of road conditions is compared against traditional CNN and Bayesian neural networks, with the RS-NN achieving significantly higher accuracy and superior uncertainty calibration. This integration of RS-NNs into a Robot Operating System (ROS)-based vehicle control pipeline demonstrates that predictive uncertainty can dynamically modulate vehicle speed, maintaining high-speed performance under confident predictions while proactively improving safety through speed reductions in uncertain scenarios. These results demonstrate the potential of uncertainty-aware neural networks - in particular RS-NNs - as a practical solution for safer and more robust autonomous driving.
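As a rough illustration of the idea of belief functions over sets of classes feeding a speed controller, the following Python sketch computes belief and plausibility from a mass assignment and scales a target speed by the gap between them. The class names, the mass values, the `target_speed` rule and its `min_fraction` parameter are all assumptions made for this example; the abstract does not specify how the RS-NN uncertainty is mapped to speed.

```python
def belief(mass, subset):
    """Belief of `subset`: total mass of focal sets fully contained in it."""
    return sum(m for focal, m in mass.items() if focal <= subset)


def plausibility(mass, subset):
    """Plausibility of `subset`: total mass of focal sets that intersect it."""
    return sum(m for focal, m in mass.items() if focal & subset)


def target_speed(max_speed, mass, predicted, min_fraction=0.3):
    """Hypothetical rule: slow down linearly as the belief/plausibility gap grows.

    A gap of 0 keeps max_speed; a gap of 1 drops to min_fraction * max_speed.
    """
    gap = plausibility(mass, predicted) - belief(mass, predicted)
    return max_speed * (1.0 - gap * (1.0 - min_fraction))


# Hypothetical mass assignment over road-layout classes (values sum to 1).
mass = {
    frozenset({"left"}): 0.6,
    frozenset({"straight"}): 0.1,
    frozenset({"left", "straight"}): 0.3,  # mass on a set signals ambiguity
}
predicted = frozenset({"left"})
speed = target_speed(20.0, mass, predicted)
```

Here the mass placed on the two-class set widens the interval between belief and plausibility for "left", and the sketch responds by lowering the commanded speed.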

ROAD-Waymo: Action Awareness at Scale for Autonomous Driving

Nov 03, 2024

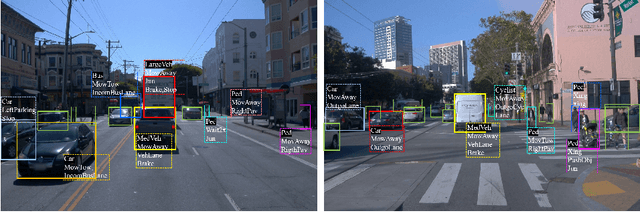

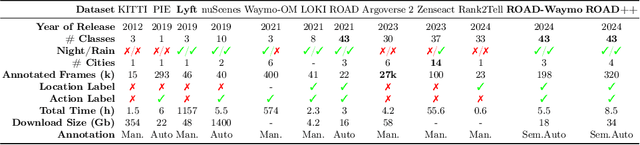

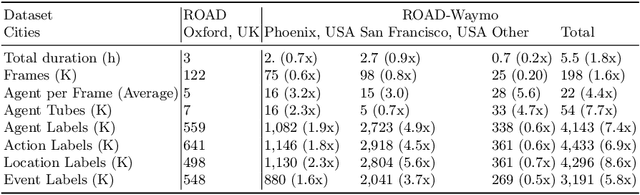

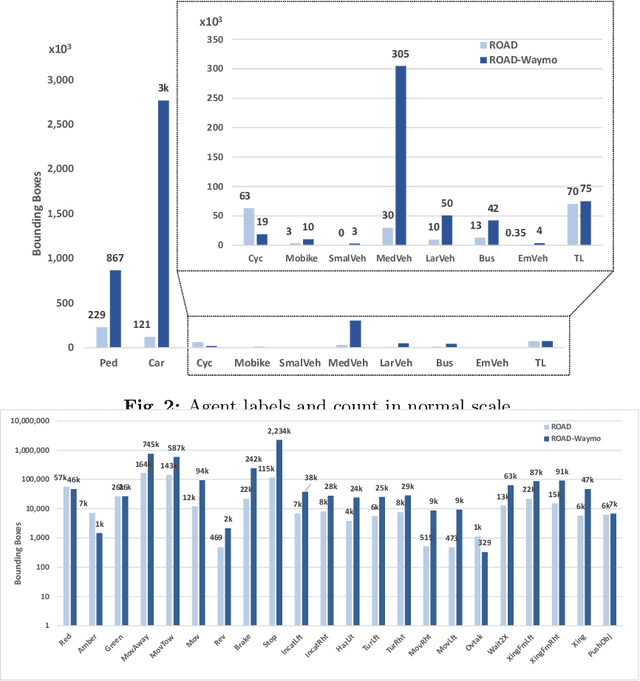

Abstract:Autonomous Vehicle (AV) perception systems require more than simply seeing, via e.g., object detection or scene segmentation. They need a holistic understanding of what is happening within the scene for safe interaction with other road users. Few datasets exist for the purpose of developing and training algorithms to comprehend the actions of other road users. This paper presents ROAD-Waymo, an extensive dataset for the development and benchmarking of techniques for agent, action, location and event detection in road scenes, provided as a layer upon the (US) Waymo Open dataset. Considerably larger and more challenging than any existing dataset (and encompassing multiple cities), it comes with 198k annotated video frames, 54k agent tubes, 3.9M bounding boxes and a total of 12.4M labels. The integrity of the dataset has been confirmed and enhanced via a novel annotation pipeline designed for automatically identifying violations of requirements specifically designed for this dataset. As ROAD-Waymo is compatible with the original (UK) ROAD dataset, it provides the opportunity to tackle domain adaptation between real-world road scenarios in different countries within a novel benchmark: ROAD++.

A Hybrid Graph Network for Complex Activity Detection in Video

Oct 30, 2023

Abstract:Interpretation and understanding of video presents a challenging computer vision task in numerous fields - e.g. autonomous driving and sports analytics. Existing approaches to interpreting the actions taking place within a video clip are based upon Temporal Action Localisation (TAL), which typically identifies short-term actions. The emerging field of Complex Activity Detection (CompAD) extends this analysis to long-term activities, with a deeper understanding obtained by modelling the internal structure of a complex activity taking place within the video. We address the CompAD problem using a hybrid graph neural network which combines attention applied to a graph encoding the local (short-term) dynamic scene with a temporal graph modelling the overall long-duration activity. Our approach is as follows: i) Firstly, we propose a novel feature extraction technique which, for each video snippet, generates spatiotemporal 'tubes' for the active elements ('agents') in the (local) scene by detecting individual objects, tracking them and then extracting 3D features from all the agent tubes as well as the overall scene. ii) Next, we construct a local scene graph where each node (representing either an agent tube or the scene) is connected to all other nodes. Attention is then applied to this graph to obtain an overall representation of the local dynamic scene. iii) Finally, all local scene graph representations are interconnected via a temporal graph, to estimate the complex activity class together with its start and end time. The proposed framework outperforms all previous state-of-the-art methods on all three datasets: ActivityNet-1.3, Thumos-14, and ROAD.
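Step ii) above can be pictured with a minimal Python sketch of attention over a fully connected scene graph. It assumes plain scaled dot-product self-attention without learned projections, followed by mean pooling; the abstract does not state which attention variant or pooling the authors use, so the function `attend` is purely illustrative.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(nodes):
    """Self-attention over a fully connected graph, then mean-pool the nodes.

    `nodes` is a list of equal-length feature vectors, one per agent tube
    plus one for the overall scene (an assumption of this sketch).
    """
    dim = len(nodes[0])
    updated = []
    for query in nodes:
        # Every node attends to every node, including itself.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in nodes
        ]
        weights = softmax(scores)
        updated.append(
            [sum(w * node[i] for w, node in zip(weights, nodes)) for i in range(dim)]
        )
    # Mean pooling gives one vector representing the local scene.
    return [sum(node[i] for node in updated) / len(updated) for i in range(dim)]


scene_vector = attend([[1.0, 0.0], [0.0, 1.0]])
```

One such vector per video snippet would then form the nodes of the temporal graph described in step iii).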

Temporal DINO: A Self-supervised Video Strategy to Enhance Action Prediction

Aug 20, 2023

Abstract:The emerging field of action prediction plays a vital role in various computer vision applications such as autonomous driving, activity analysis and human-computer interaction. Despite significant advancements, accurately predicting future actions remains a challenging problem due to high dimensionality, complex dynamics and uncertainties inherent in video data. Traditional supervised approaches require large amounts of labelled data, which is expensive and time-consuming to obtain. This paper introduces a novel self-supervised video strategy for enhancing action prediction inspired by DINO (self-distillation with no labels). The Temporal-DINO approach employs two models: a 'student' processing past frames, and a 'teacher' processing both past and future frames, enabling a broader temporal context. During training, the teacher guides the student to learn future context by only observing past frames. The strategy is evaluated on the ROAD dataset for the action prediction downstream task using 3D-ResNet, Transformer, and LSTM architectures. The experimental results showcase significant improvements in prediction performance across these architectures, with our method achieving an average enhancement of 9.9% Precision Points (PP), highlighting its effectiveness in enhancing the backbones' capabilities of capturing long-term dependencies. Furthermore, our approach demonstrates efficiency regarding the pretraining dataset size and the number of epochs required. This method overcomes limitations present in other approaches, including considering various backbone architectures, addressing multiple prediction horizons, reducing reliance on hand-crafted augmentations, and streamlining the pretraining process into a single stage. These findings highlight the potential of our approach in diverse video-based tasks such as activity recognition, motion planning, and scene understanding.
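The student/teacher arrangement can be sketched in Python as a DINO-style cross-entropy between the teacher's output distribution (computed from past and future frames) and the student's (computed from past frames only). This is an assumption about the form of the loss, since the abstract does not give it; the temperature values and the logit vectors below are illustrative only.

```python
import math


def log_softmax(logits, temperature):
    """Log-probabilities of temperature-scaled logits (numerically stable)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(s - m) for s in scaled))
    return [s - log_z for s in scaled]


def distillation_loss(student_logits, teacher_logits,
                      student_temp=0.1, teacher_temp=0.04):
    """Cross-entropy H(teacher, student); the teacher acts as a fixed target."""
    teacher_probs = [math.exp(lp) for lp in log_softmax(teacher_logits, teacher_temp)]
    student_log_probs = log_softmax(student_logits, student_temp)
    return -sum(p * lp for p, lp in zip(teacher_probs, student_log_probs))


# Hypothetical network outputs: the teacher saw past + future frames,
# each student saw past frames only.
teacher_out = [2.0, 0.0, 0.0]
matched = distillation_loss([2.0, 0.0, 0.0], teacher_out)
mismatched = distillation_loss([0.0, 2.0, 0.0], teacher_out)
```

Minimising this loss with respect to the student pushes its past-only prediction towards what the teacher inferred with access to the future.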

A Scenario-Based Functional Testing Approach to Improving DNN Performance

Jul 13, 2023

Abstract:This paper proposes a scenario-based functional testing approach for enhancing the performance of machine learning (ML) applications. The proposed method is an iterative process that starts with testing the ML model on various scenarios to identify areas of weakness. It is followed by further testing on the suspected weak scenarios, with statistical evaluation of the model's performance on those scenarios to confirm the diagnosis. Once the diagnosis of weak scenarios is confirmed by test results, the model is treated by retraining it using a transfer learning technique, with the original model as the base. The retraining applies a set of training data specifically targeting the treated scenarios, plus a subset of training data selected at random from the original training dataset to prevent the so-called catastrophic forgetting effect. Finally, after the treatment, the model is assessed and evaluated again by testing on the treated scenarios as well as other scenarios, to check that the treatment is effective and has caused no side effects. The paper reports a case study with a real ML deep neural network (DNN) model, which is the perception system of an autonomous racing car. It is demonstrated that the method is effective in the sense that the DNN model's performance can be improved. It provides an efficient method of enhancing an ML model's performance with much less human and compute resource than retraining from scratch.
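The data mix used for the treatment step might be assembled along the lines of the Python sketch below: all scenario-targeted samples plus a random subset of the original training data. The function name, the `replay_fraction` parameter and the sample identifiers are hypothetical; the abstract does not state how large the random subset is.

```python
import random


def build_retraining_set(targeted, original, replay_fraction=0.2, seed=0):
    """Combine targeted samples with a random subset of the original data.

    The random subset is intended to guard against catastrophic forgetting;
    `replay_fraction` is an assumed knob, not a value from the paper.
    """
    rng = random.Random(seed)
    k = round(len(original) * replay_fraction)
    replay = rng.sample(original, k)  # sampling without replacement
    mixed = list(targeted) + replay
    rng.shuffle(mixed)
    return mixed


# Hypothetical sample identifiers.
targeted = ["weak_scenario_1", "weak_scenario_2"]
original = [f"orig_{i}" for i in range(10)]
mixed = build_retraining_set(targeted, original, replay_fraction=0.3, seed=1)
```

The resulting list would then be used to fine-tune the original model rather than to train a new one from scratch.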

Never mind the metrics -- what about the uncertainty? Visualising confusion matrix metric distributions

Jun 05, 2022

Abstract:There are strong incentives to build models that demonstrate outstanding predictive performance on various datasets and benchmarks. We believe these incentives risk a narrow focus on models and on the performance metrics used to evaluate and compare them -- resulting in a growing body of literature to evaluate and compare metrics. This paper strives for a more balanced perspective on classifier performance metrics by highlighting their distributions under different models of uncertainty and showing how this uncertainty can easily eclipse differences in the empirical performance of classifiers. We begin by emphasising the fundamentally discrete nature of empirical confusion matrices and show how binary matrices can be meaningfully represented in a three dimensional compositional lattice, whose cross-sections form the basis of the space of receiver operating characteristic (ROC) curves. We develop equations, animations and interactive visualisations of the contours of performance metrics within (and beyond) this ROC space, showing how some are affected by class imbalance. We provide interactive visualisations that show the discrete posterior predictive probability mass functions of true and false positive rates in ROC space, and how these relate to uncertainty in performance metrics such as Balanced Accuracy (BA) and the Matthews Correlation Coefficient (MCC). Our hope is that these insights and visualisations will raise greater awareness of the substantial uncertainty in performance metric estimates that can arise when classifiers are evaluated on empirical datasets and benchmarks, and that classification model performance claims should be tempered by this understanding.
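To make the point about metric uncertainty concrete, the Python sketch below computes Balanced Accuracy and MCC from a binary confusion matrix and then estimates a 90% interval for BA by sampling the true positive and true negative rates. It is a simplification of what the abstract describes: it assumes independent Beta posteriors with uniform priors on the two rates, not the discrete posterior predictive distributions used in the paper, and the counts are invented for illustration.

```python
import math
import random


def balanced_accuracy(tp, fn, fp, tn):
    """Mean of the true positive rate and the true negative rate."""
    return 0.5 * (tp / (tp + fn) + tn / (tn + fp))


def mcc(tp, fn, fp, tn):
    """Matthews Correlation Coefficient; returns 0.0 if a margin is empty."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


def ba_interval(tp, fn, fp, tn, draws=2000, seed=0):
    """Approximate 5th and 95th percentiles of BA under Beta(1, 1) priors."""
    rng = random.Random(seed)
    samples = sorted(
        0.5 * (rng.betavariate(tp + 1, fn + 1) + rng.betavariate(tn + 1, fp + 1))
        for _ in range(draws)
    )
    return samples[int(0.05 * draws)], samples[int(0.95 * draws)]


# Invented confusion matrix with 50 positives and 50 negatives.
ba = balanced_accuracy(tp=40, fn=10, fp=5, tn=45)
m = mcc(tp=40, fn=10, fp=5, tn=45)
low, high = ba_interval(tp=40, fn=10, fp=5, tn=45)
```

With only 100 test examples, the interval around BA spans several percentage points, which is the kind of spread that can exceed the difference between two competing classifiers.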

Simulating Malicious Attacks on VANETs for Connected and Autonomous Vehicle Cybersecurity: A Machine Learning Dataset

Feb 15, 2022

Abstract:Connected and Autonomous Vehicles (CAVs) rely on Vehicular Ad hoc Networks (VANETs) with wireless communication between vehicles and roadside infrastructure to support safe operation. However, cybersecurity attacks pose a threat to VANETs and the safe operation of CAVs. This study proposes the use of simulation for modelling typical communication scenarios which may be subject to malicious attacks. The Eclipse MOSAIC simulation framework is used to model two typical road scenarios, including messaging between the vehicles and infrastructure - and both replay and bogus information cybersecurity attacks are introduced. The model demonstrates the impact of these attacks, and provides an open dataset to inform the development of machine learning algorithms to provide anomaly detection and mitigation solutions for enhancing secure communications and safe deployment of CAVs on the road.

Vision in adverse weather: Augmentation using CycleGANs with various object detectors for robust perception in autonomous racing

Jan 11, 2022

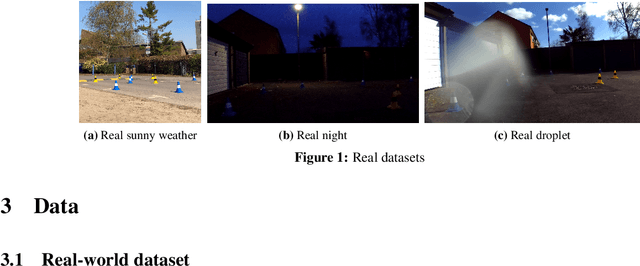

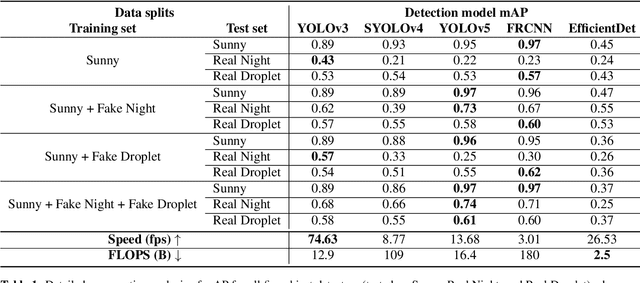

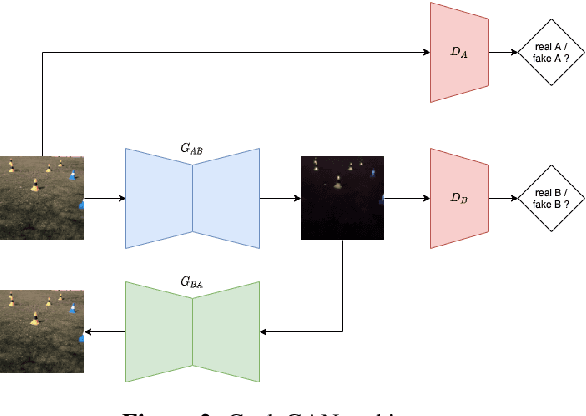

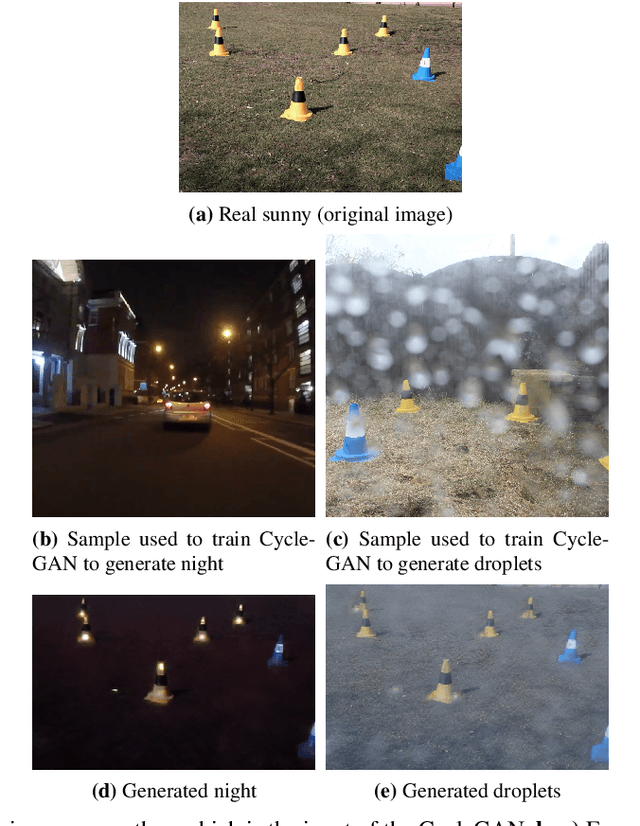

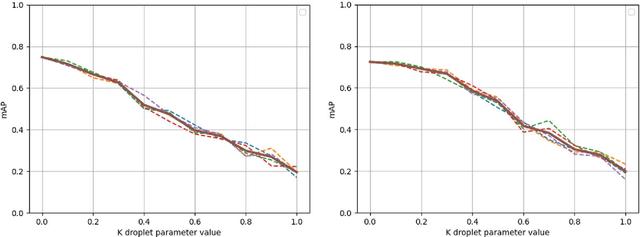

Abstract:In an autonomous driving system, perception - identification of features and objects from the environment - is crucial. In autonomous racing, high speeds and small margins demand rapid and accurate detection systems. During the race, the weather can change abruptly, causing significant degradation in perception, resulting in ineffective manoeuvres. In order to improve detection in adverse weather, deep-learning-based models typically require extensive datasets captured in such conditions - the collection of which is a tedious, laborious, and costly process. However, recent developments in CycleGAN architectures allow the synthesis of highly realistic scenes in multiple weather conditions. To this end, we introduce an approach of using synthesised adverse condition datasets in autonomous racing (generated using CycleGAN) to improve the performance of four out of five state-of-the-art detectors by an average of 42.7 and 4.4 mAP percentage points in the presence of night-time conditions and droplets, respectively. Furthermore, we present a comparative analysis of five object detectors - identifying the optimal pairing of detector and training data for use during autonomous racing in challenging conditions.

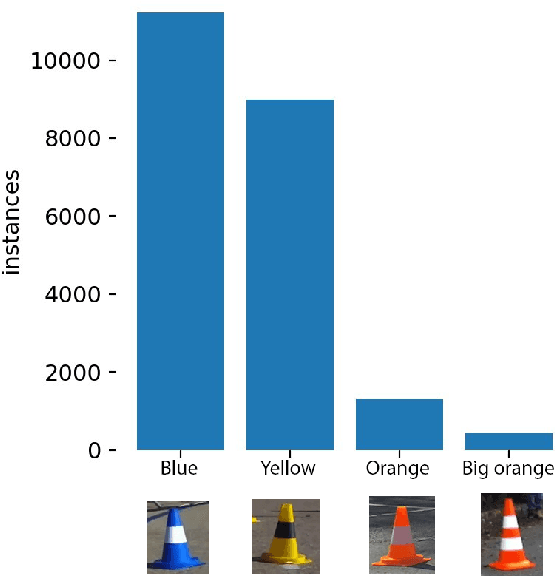

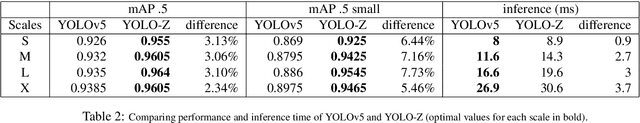

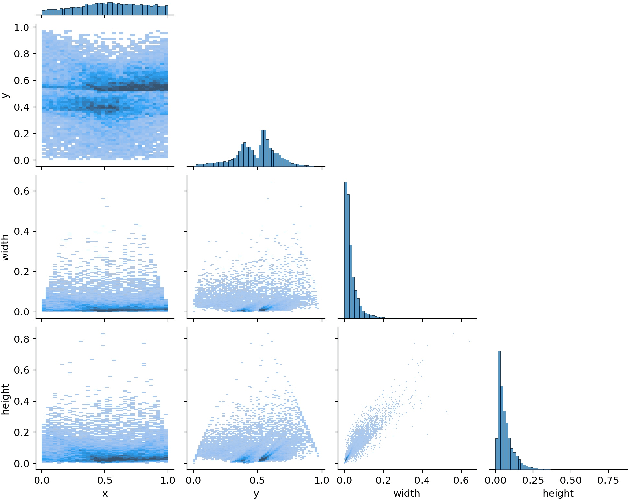

YOLO-Z: Improving small object detection in YOLOv5 for autonomous vehicles

Dec 23, 2021

Abstract:As autonomous vehicles and autonomous racing rise in popularity, so does the need for faster and more accurate detectors. While our naked eyes are able to extract contextual information almost instantly, even from far away, limitations in image resolution and computational resources make detecting smaller objects (that is, objects that occupy a small pixel area in the input image) a genuinely challenging task for machines and a wide-open research field. This study explores how the popular YOLOv5 object detector can be modified to improve its performance in detecting smaller objects, with a particular application in autonomous racing. To achieve this, we investigate how replacing certain structural elements of the model (as well as their connections and other parameters) can affect performance and inference time. In doing so, we propose a series of models at different scales, which we name 'YOLO-Z', and which display an improvement of up to 6.9% in mAP when detecting smaller objects at 50% IOU, at the cost of just a 3ms increase in inference time compared to the original YOLOv5. Our objective is to inform future research on the potential of adjusting a popular detector such as YOLOv5 to address specific tasks and provide insights on how specific changes can impact small object detection. Such findings, applied to the broader context of autonomous vehicles, could increase the amount of contextual information available to such systems.
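For readers unfamiliar with the "50% IOU" criterion behind the mAP figure, the Python sketch below computes intersection-over-union for two axis-aligned boxes and applies the 0.5 threshold. The `(x1, y1, x2, y2)` box format and the example coordinates are assumptions for illustration and are not taken from the paper.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match(pred, truth, threshold=0.5):
    """A prediction counts as correct at 50% IoU if the overlap is >= 0.5."""
    return iou(pred, truth) >= threshold


# The same 6-pixel horizontal offset applied to a small and a large box.
small = iou((0, 0, 10, 10), (6, 0, 16, 10))      # 40 / 160 = 0.25
large = iou((0, 0, 100, 100), (6, 0, 106, 100))  # 9400 / 10600, about 0.887
```

In this arithmetic example, an identical localisation error leaves the large box well above the threshold while the small box falls below it.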

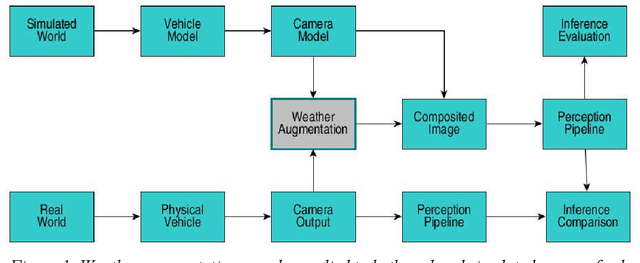

Worsening Perception: Real-time Degradation of Autonomous Vehicle Perception Performance for Simulation of Adverse Weather Conditions

Mar 05, 2021

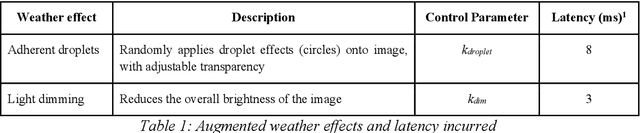

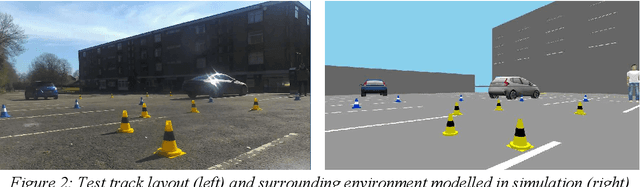

Abstract:Autonomous vehicles rely heavily upon their perception subsystems to see the environment in which they operate. Unfortunately, the effect of varying weather conditions presents a significant challenge to object detection algorithms, and thus it is imperative to test the vehicle extensively in all conditions which it may experience. However, unpredictable weather can make real-world testing in adverse conditions an expensive and time-consuming task requiring access to specialist facilities, and weatherproofing of sensitive electronics. Simulation provides an alternative to real-world testing, with some studies developing increasingly visually realistic representations of the real world on powerful compute hardware. Given that subsequent subsystems in the autonomous vehicle pipeline are unaware of the visual realism of the simulation, when developing modules downstream of perception the appearance is of little consequence - rather it is how the perception system performs in the prevailing weather condition that is important. This study explores the potential of using a simple, lightweight image augmentation system in an autonomous racing vehicle - focusing not on visual accuracy, but rather the effect upon perception system performance. With minimal adjustment, the prototype system developed in this study can replicate the effects of both water droplets on the camera lens, and fading light conditions. The system introduces a latency of less than 8 ms using compute hardware that is well suited to being carried in the vehicle - rendering it ideally suited to real-time implementation that can be run during experiments in simulation, and augmented reality testing in the real world.
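A lightweight augmentation for fading light could be as simple as scaling pixel intensities before the frame reaches the detector. The Python sketch below shows that idea on a nested-list RGB image; it is an assumed stand-in, since the abstract does not describe how the prototype system implements its effects, and `fade_light` and its `brightness` parameter are hypothetical names.

```python
def fade_light(image, brightness):
    """Darken an RGB image by scaling every channel value.

    `image` is a list of rows, each row a list of (r, g, b) tuples with
    values in 0..255; `brightness` is 1.0 for no change, 0.0 for black.
    """
    if not 0.0 <= brightness <= 1.0:
        raise ValueError("brightness must be between 0.0 and 1.0")
    return [
        [tuple(int(channel * brightness) for channel in pixel) for pixel in row]
        for row in image
    ]


# A one-row, two-pixel frame dimmed to half brightness.
frame = [[(200, 100, 50), (255, 255, 255)]]
dimmed = fade_light(frame, 0.5)
```

A real-time version would operate on camera frames in an array library, but the per-pixel operation would be the same.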
