Jacob Culley

Worsening Perception: Real-time Degradation of Autonomous Vehicle Perception Performance for Simulation of Adverse Weather Conditions

Mar 05, 2021

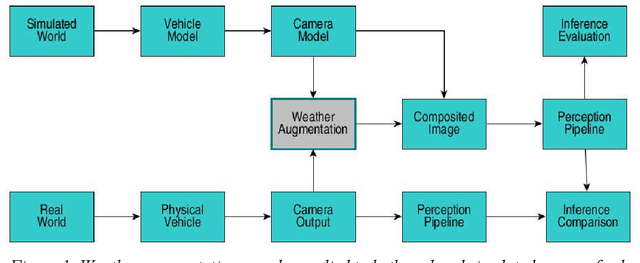

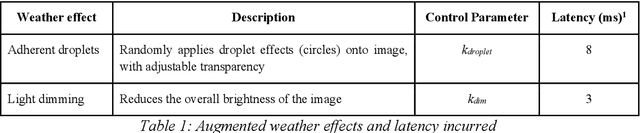

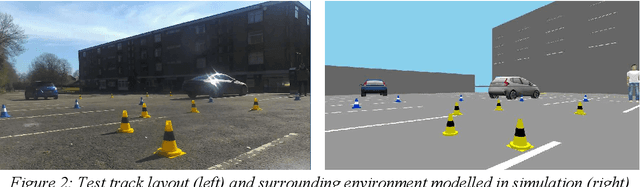

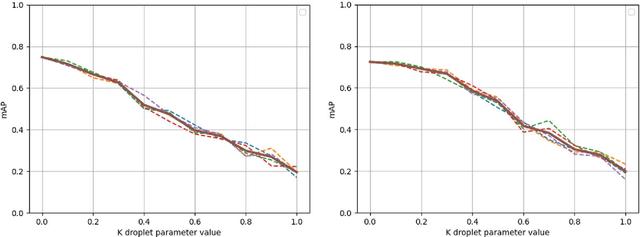

Abstract: Autonomous vehicles rely heavily upon their perception subsystems to see the environment in which they operate. Unfortunately, varying weather conditions present a significant challenge to object detection algorithms, and thus it is imperative to test the vehicle extensively in all conditions which it may experience. However, unpredictable weather can make real-world testing in adverse conditions an expensive and time-consuming task requiring access to specialist facilities and weatherproofing of sensitive electronics. Simulation provides an alternative to real-world testing, with some studies developing increasingly visually realistic representations of the real world on powerful compute hardware. Given that subsequent subsystems in the autonomous vehicle pipeline are unaware of the visual realism of the simulation, when developing modules downstream of perception the appearance is of little consequence; rather, it is how the perception system performs in the prevailing weather condition that is important. This study explores the potential of using a simple, lightweight image augmentation system in an autonomous racing vehicle, focusing not on visual accuracy but on the effect upon perception system performance. With minimal adjustment, the prototype system developed in this study can replicate the effects of both water droplets on the camera lens and fading light conditions. The system introduces a latency of less than 8 ms using compute hardware that is well suited to being carried in the vehicle, making it suitable for real-time use during experiments in simulation and during augmented reality testing in the real world.
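To make the idea of lightweight image augmentation concrete, here is a minimal sketch of one of the two effects the abstract mentions, fading light. It is not the authors' implementation: the function name `fade_light`, the `level` parameter, and the choice of a simple linear gain on 8-bit pixel values are all assumptions made for illustration.

```python
import numpy as np


def fade_light(image: np.ndarray, level: float) -> np.ndarray:
    """Darken an 8-bit image with a linear gain (hypothetical helper).

    level = 0.0 leaves the image unchanged; level = 1.0 yields a black frame.
    This is an assumed model of fading light, not the method from the paper.
    """
    if not 0.0 <= level <= 1.0:
        raise ValueError("level must lie in [0, 1]")
    # Work in float to avoid uint8 wrap-around, then round and clip back.
    scaled = image.astype(np.float32) * (1.0 - level)
    return np.clip(np.rint(scaled), 0, 255).astype(np.uint8)


if __name__ == "__main__":
    # A synthetic 4x4 RGB frame stands in for a camera image.
    frame = np.full((4, 4, 3), 200, dtype=np.uint8)
    dimmed = fade_light(frame, 0.5)
    print(dimmed[0, 0])
```

A per-pixel operation of this kind touches each pixel once, which is the sort of cost profile that makes a real-time budget plausible; the latency figure quoted in the abstract refers to the authors' system, not to this sketch.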

ROAD: The ROad event Awareness Dataset for Autonomous Driving

Feb 25, 2021

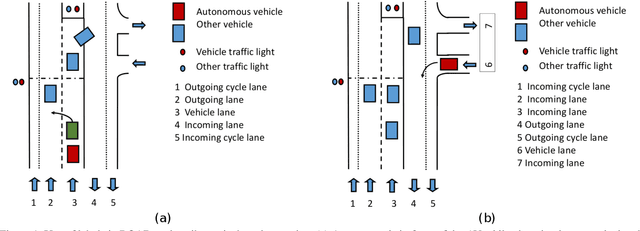

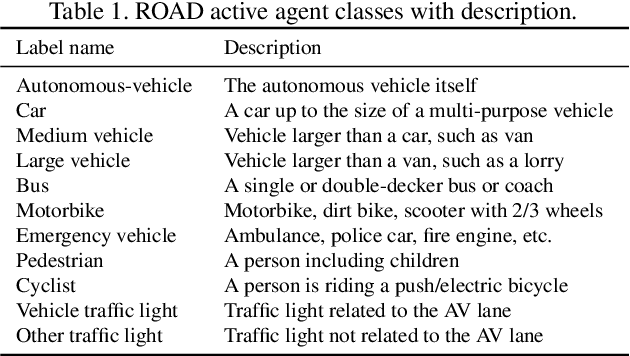

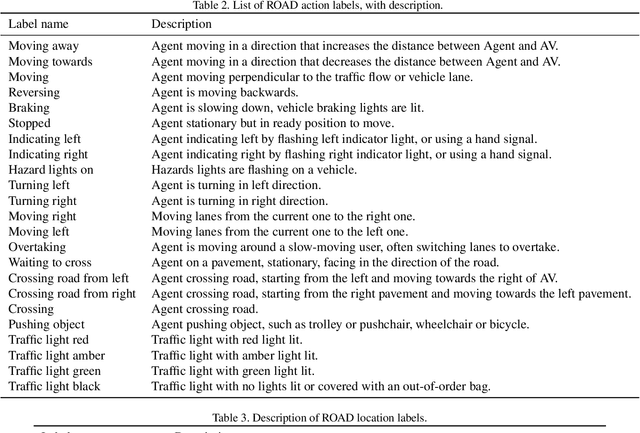

Abstract: Humans approach driving in a holistic fashion which entails, in particular, understanding road events and their evolution. Injecting these capabilities into an autonomous vehicle thus has the potential to take situational awareness and decision making closer to human-level performance. To this end, we introduce the ROad event Awareness Dataset (ROAD) for Autonomous Driving, to our knowledge the first of its kind. ROAD is designed to test an autonomous vehicle's ability to detect road events, defined as triplets composed of a moving agent, the action(s) it performs and the corresponding scene locations. ROAD comprises 22 videos, originally from the Oxford RobotCar Dataset, annotated with bounding boxes showing the location in the image plane of each road event. We also provide as a baseline a new incremental algorithm for online road event awareness, based on inflating RetinaNet along time, which achieves a mean average precision of 16.8% and 6.1% for frame-level and video-level event detection, respectively, at 50% overlap. Though promising, these figures highlight the challenges faced by situation awareness in autonomous driving. Finally, ROAD allows scholars to investigate exciting tasks such as complex (road) activity detection, future road event anticipation and the modelling of sentient road agents in terms of mental states. The dataset can be obtained from https://github.com/gurkirt/road-dataset and the baseline code from https://github.com/gurkirt/3D-RetinaNet.
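The triplet definition of a road event and the "50% overlap" criterion can be sketched as follows. This is an illustrative assumption rather than the dataset's actual schema or evaluation code: the `RoadEvent` class, the example label strings, and the `is_match` rule (identical labels plus intersection-over-union of at least 0.5) are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

# Axis-aligned box in image coordinates: (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]


@dataclass(frozen=True)
class RoadEvent:
    """Hypothetical container for one frame-level road event."""
    agent: str                   # the moving agent
    actions: Tuple[str, ...]     # the action(s) it performs
    locations: Tuple[str, ...]   # the corresponding scene location(s)
    box: Box                     # where the event appears in the image plane


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two boxes; 0.0 if they do not overlap."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match(pred: RoadEvent, truth: RoadEvent, threshold: float = 0.5) -> bool:
    """Assumed matching rule: same triplet labels and IoU >= threshold."""
    same_labels = (
        pred.agent == truth.agent
        and set(pred.actions) == set(truth.actions)
        and set(pred.locations) == set(truth.locations)
    )
    return same_labels and iou(pred.box, truth.box) >= threshold


if __name__ == "__main__":
    # Label strings below are made up for illustration.
    truth = RoadEvent("pedestrian", ("crossing",), ("road",), (0, 0, 2, 2))
    pred = RoadEvent("pedestrian", ("crossing",), ("road",), (0, 0, 2, 1.5))
    print(iou(pred.box, truth.box), is_match(pred, truth))
```

Mean average precision is then computed over ranked detections using a match rule of this kind; the exact protocol behind the reported figures is defined by the dataset's own evaluation code.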
