Alex Stein

Exploiting Sparsity for Long Context Inference: Million Token Contexts on Commodity GPUs

Feb 10, 2025

Abstract: There is growing demand for performing inference with hundreds of thousands of input tokens on trained transformer models. Inference at this extreme scale demands significant computational resources, hindering the application of transformers at long contexts on commodity (i.e., not data-center-scale) hardware. To address the inference-time costs associated with running self-attention-based transformer language models on long contexts and enable their adoption on widely available hardware, we propose a tunable mechanism that reduces the cost of the forward pass by attending to only the most relevant tokens at every generation step using a top-k selection mechanism. We showcase the efficiency gains afforded by our method by performing inference on context windows up to 1M tokens using approximately 16GB of GPU RAM. Our experiments reveal that models are capable of handling the sparsity induced by the reduced number of keys and values. By attending to less than 2% of input tokens, we achieve over 95% of model performance on common long context benchmarks (LM-Eval, AlpacaEval, and RULER).
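As a rough illustration of the top-k idea described in this abstract, the sketch below computes attention for a single query while keeping only the k highest-scoring keys. It is a minimal, pure-Python sketch and not the authors' implementation: the function name `topk_attention`, the scaled dot-product scoring, and the plain-list data layout are all assumptions made for the example.

```python
import math


def topk_attention(query, keys, values, k):
    """Single-query attention restricted to the k best-matching keys.

    Hypothetical helper for illustration only: scores are scaled dot
    products, and the softmax runs over the selected keys alone.
    """
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * x for q, x in zip(query, key)) for key in keys]

    # Keep the indices of the k highest-scoring keys.
    k = min(k, len(scores))
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]

    # Numerically stable softmax over the selected scores only.
    max_score = max(scores[i] for i in top)
    weights = [math.exp(scores[i] - max_score) for i in top]
    total = sum(weights)

    # Weighted sum of the selected value vectors.
    dim = len(values[0])
    out = [0.0] * dim
    for w, i in zip(weights, top):
        for d in range(dim):
            out[d] += (w / total) * values[i][d]
    return out, sorted(top)


query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [2.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0], [0.0, 2.0]]
output, selected = topk_attention(query, keys, values, k=2)
print(selected)
```

Setting `k` equal to the number of keys recovers ordinary softmax attention for that query, so `k` acts as the tunable knob between cost and fidelity in this sketch.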

A Simple Baseline for Predicting Events with Auto-Regressive Tabular Transformers

Oct 14, 2024

Abstract: Many real-world applications of tabular data involve using historic events to predict properties of new ones, for example whether a credit card transaction is fraudulent or what rating a customer will assign a product on a retail platform. Existing approaches to event prediction include costly, brittle, and application-dependent techniques such as time-aware positional embeddings, learned row and field encodings, and oversampling methods for addressing class imbalance. Moreover, these approaches often assume specific use-cases, for example that we know the labels of all historic events or that we only predict a pre-specified label and not the data's features themselves. In this work, we propose a simple but flexible baseline using standard autoregressive LLM-style transformers with elementary positional embeddings and a causal language modeling objective. Our baseline outperforms existing approaches across popular datasets and can be employed for various use-cases. We demonstrate that the same model can predict labels, impute missing values, or model event sequences.

Transformers Can Do Arithmetic with the Right Embeddings

May 27, 2024

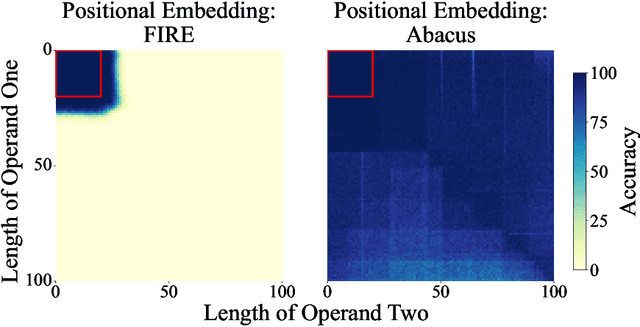

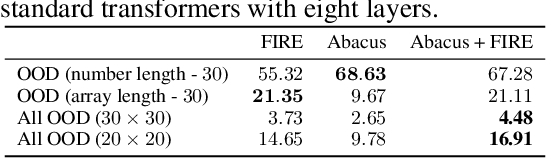

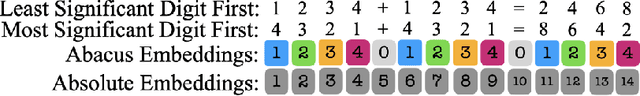

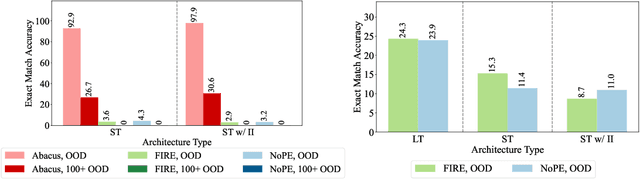

Abstract: The poor performance of transformers on arithmetic tasks seems to stem in large part from their inability to keep track of the exact position of each digit inside a large span of digits. We mend this problem by adding an embedding to each digit that encodes its position relative to the start of the number. In addition to the boost these embeddings provide on their own, we show that this fix enables architectural modifications such as input injection and recurrent layers to improve performance even further. With positions resolved, we can study the logical extrapolation ability of transformers. Can they solve arithmetic problems that are larger and more complex than those in their training data? We find that by training on only 20-digit numbers with a single GPU for one day, we can reach state-of-the-art performance, achieving up to 99% accuracy on 100-digit addition problems. Finally, we show that these gains in numeracy also unlock improvements on other multi-step reasoning tasks including sorting and multiplication.
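To make the idea of a per-digit position signal concrete, the sketch below assigns each digit its index relative to the start of the number it belongs to. It is an illustrative assumption rather than the paper's code: the helper name `digit_position_ids`, the character-level tokenization, and the convention of using 0 for non-digit tokens are all hypothetical. In a model, indices like these would typically select rows of a learned embedding table that is added to the token embeddings.

```python
def digit_position_ids(tokens):
    """Return each token's 1-based index within its run of digits.

    Hypothetical helper: non-digit tokens get 0, and the counter
    restarts at the beginning of every new number.
    """
    ids = []
    run = 0
    for tok in tokens:
        run = run + 1 if tok.isdigit() else 0
        ids.append(run)
    return ids


# Character-level tokens for the prompt "123+45=".
print(digit_position_ids(list("123+45=")))  # [1, 2, 3, 0, 1, 2, 0]
```

Because the index restarts with each number, the first digit of every operand receives the same position id regardless of where the operand appears in the sequence.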

Coercing LLMs to do and reveal anything

Feb 21, 2024

Abstract: It has recently been shown that adversarial attacks on large language models (LLMs) can "jailbreak" the model into making harmful statements. In this work, we argue that the spectrum of adversarial attacks on LLMs is much larger than merely jailbreaking. We provide a broad overview of possible attack surfaces and attack goals. Based on a series of concrete examples, we discuss, categorize and systematize attacks that coerce varied unintended behaviors, such as misdirection, model control, denial-of-service, or data extraction. We analyze these attacks in controlled experiments, and find that many of them stem from the practice of pre-training LLMs with coding capabilities, as well as the continued existence of strange "glitch" tokens in common LLM vocabularies that should be removed for security reasons.

Neural Auctions Compromise Bidder Information

Feb 28, 2023

Abstract: Single-shot auctions are commonly used as a means to sell goods, for example when selling ad space or allocating radio frequencies. However, devising mechanisms for auctions with multiple bidders and multiple items can be complicated. It has been shown that neural networks can be used to approximate optimal mechanisms while satisfying the constraints that an auction be strategyproof and individually rational. We show that despite such auctions maximizing revenue, they do so at the cost of revealing private bidder information. While randomness is often used to build in privacy, in this context it comes with complications if done without care. Specifically, it can violate rationality and feasibility constraints, fundamentally change the incentive structure of the mechanism, and/or harm top-level metrics such as revenue and social welfare. We propose a method that employs stochasticity to improve privacy while meeting the requirements for auction mechanisms with only a modest sacrifice in revenue. We analyze the cost to the auction house that comes with introducing varying degrees of privacy in common auction settings. Our results show that despite current neural auctions' ability to approximate optimal mechanisms, the resulting vulnerability that comes with relying on neural networks must be accounted for.
