Özgün Çiçek

Search for temporal cell segmentation robustness in phase-contrast microscopy videos

Dec 16, 2021

Abstract: Studying cell morphology changes in time is critical to understanding cell migration mechanisms. In this work, we present a deep learning-based workflow to segment cancer cells embedded in 3D collagen matrices and imaged with phase-contrast microscopy. Our approach uses transfer learning and recurrent convolutional long short-term memory units to exploit the temporal information from the past and provide a consistent segmentation result. Lastly, we propose a geometrical-characterization approach to studying cancer cell morphology. Our approach provides stable results in time, and it is robust to different weight initializations and training data sampling. We introduce a new annotated dataset for 2D cell segmentation and tracking, and an open-source implementation to replicate the experiments or adapt them to new image processing problems.

Recovering the Imperfect: Cell Segmentation in the Presence of Dynamically Localized Proteins

Nov 20, 2020

Abstract: Deploying off-the-shelf segmentation networks on biomedical data has become common practice, yet if structures of interest in an image sequence are visible only temporarily, existing frame-by-frame methods fail. In this paper, we provide a solution to segmentation of imperfect data through time based on temporal propagation and uncertainty estimation. We integrate uncertainty estimation into the Mask R-CNN network and propagate motion-corrected segmentation masks from frames with low uncertainty to frames with high uncertainty to handle temporary loss of signal for segmentation. We demonstrate the value of this approach over frame-by-frame segmentation and regular temporal propagation on data from human embryonic kidney (HEK293T) cells transiently transfected with a fluorescent protein that moves in and out of the nucleus over time. The method presented here will empower microscopic experiments aimed at understanding molecular and cellular function.
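The propagation idea in this abstract can be illustrated with a deliberately simplified sketch. It is not the authors' implementation: the function name `propagate_masks`, the scalar per-frame uncertainty, and the fixed `threshold` are all assumptions made for illustration, and the motion-correction step mentioned in the abstract is omitted entirely.

```python
def propagate_masks(masks, uncertainties, threshold=0.5):
    """Replace the mask of each high-uncertainty frame with the mask of the
    nearest low-uncertainty frame (hypothetical helper, no motion correction).

    masks:         one mask object per frame (any type)
    uncertainties: one scalar uncertainty per frame (assumed representation)
    threshold:     frames at or below this value count as reliable (assumed)
    """
    reliable = [i for i, u in enumerate(uncertainties) if u <= threshold]
    if not reliable:
        # Nothing trustworthy to propagate from; return the masks unchanged.
        return list(masks)

    result = []
    for i, mask in enumerate(masks):
        if uncertainties[i] <= threshold:
            result.append(mask)
        else:
            # Nearest reliable frame in time; ties go to the earlier frame.
            nearest = min(reliable, key=lambda j: (abs(j - i), j))
            result.append(masks[nearest])
    return result


frames = ["A", "B", "C", "D"]
scores = [0.1, 0.9, 0.8, 0.2]
print(propagate_masks(frames, scores))
```

In this toy input, frames 1 and 2 are treated as unreliable and borrow the masks of frames 0 and 3 respectively. A real pipeline would warp the borrowed mask according to the estimated motion before reusing it.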

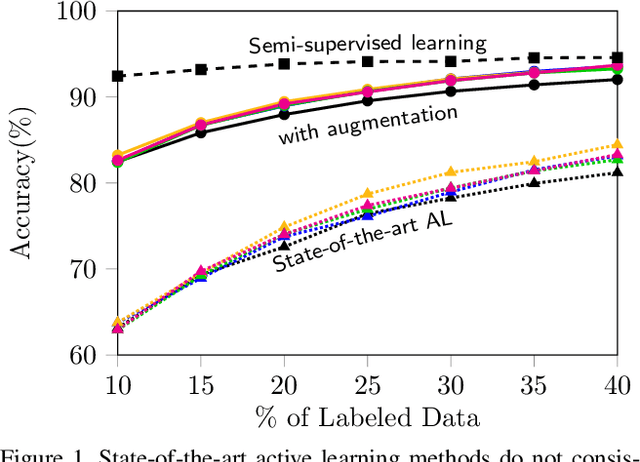

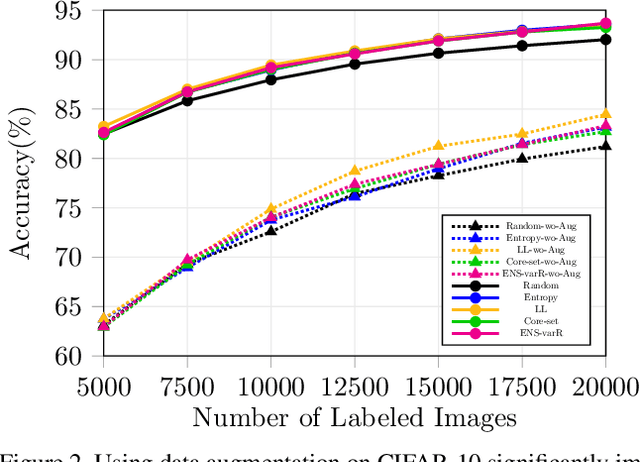

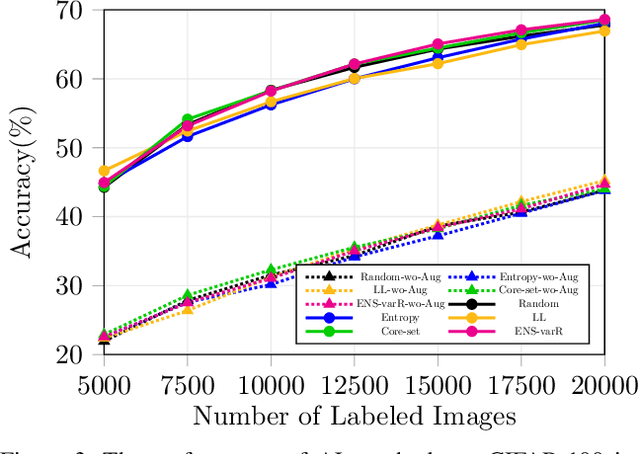

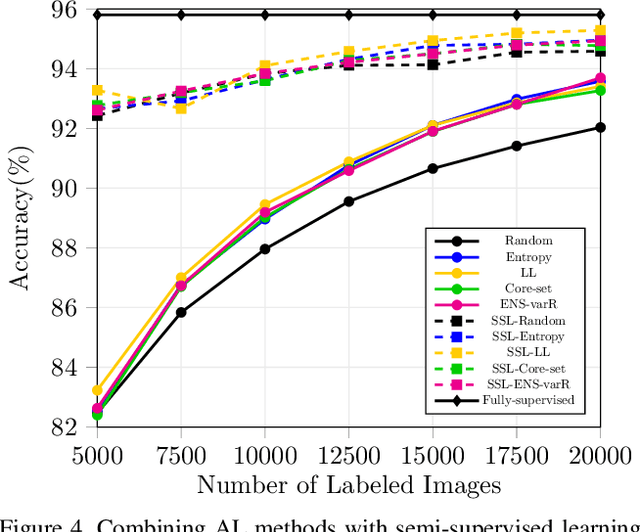

Parting with Illusions about Deep Active Learning

Dec 11, 2019

Abstract: Active learning aims to reduce the high labeling cost involved in training machine learning models on large datasets by efficiently labeling only the most informative samples. Recently, deep active learning has shown success on various tasks. However, the conventional evaluation scheme used for deep active learning is below par. Current methods disregard some apparent parallel work in closely related fields. Active learning methods are quite sensitive to changes in the training procedure, such as data augmentation. They improve by a large margin when integrated with semi-supervised learning, but barely perform better than the random baseline. We re-implement various recent active learning approaches for image classification and evaluate them under more realistic settings. We further validate our findings for semantic segmentation. Based on our observations, we realistically assess the current state of the field and propose a more suitable evaluation protocol.
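To make the comparison against a random baseline concrete, here is a minimal sketch of one common acquisition function, predictive entropy, next to random selection. The abstract does not name the acquisition functions that were evaluated, so the choice of entropy, the function names, and the toy predictions are assumptions for illustration only.

```python
import math
import random


def entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_by_entropy(predictions, budget):
    """Indices of the `budget` unlabeled samples with the highest entropy."""
    ranked = sorted(
        range(len(predictions)),
        key=lambda i: entropy(predictions[i]),
        reverse=True,
    )
    return ranked[:budget]


def select_random(num_samples, budget, seed=0):
    """Random baseline: `budget` distinct indices drawn uniformly."""
    rng = random.Random(seed)
    return rng.sample(range(num_samples), budget)


# Toy softmax outputs for four unlabeled samples (made-up values).
predictions = [[0.9, 0.1], [0.5, 0.5], [0.99, 0.01], [0.6, 0.4]]
print(select_by_entropy(predictions, budget=2))
print(select_random(len(predictions), budget=2))
```

Both selectors return indices to send for labeling, so they can be swapped inside the same training loop. That makes it straightforward to hold augmentation and other training settings fixed while comparing them.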

Learning Representations for Predicting Future Activities

May 09, 2019

Abstract: Foreseeing the future is one of the key factors of intelligence. It involves understanding of the past and current environment as well as decent experience of its possible dynamics. In this work, we address future prediction at the abstract level of activities. We propose a network module for learning embeddings of the environment's dynamics in a self-supervised way. To take the ambiguities and high variances in the future activities into account, we use a multi-hypotheses scheme that can represent multiple futures. We demonstrate the approach by classifying future activities on the Epic-Kitchens and Breakfast datasets. Moreover, we generate captions that describe the future activities.

Uncertainty Estimates and Multi-Hypotheses Networks for Optical Flow

Aug 06, 2018

Abstract: Optical flow estimation can be formulated as an end-to-end supervised learning problem, which yields estimates with a superior accuracy-runtime tradeoff compared to alternative methodology. In this paper, we make such networks estimate their local uncertainty about the correctness of their prediction, which is vital information when building decisions on top of the estimations. For the first time, we compare several strategies and techniques to estimate uncertainty in a large-scale computer vision task like optical flow estimation. Moreover, we introduce a new network architecture and loss function that enforce complementary hypotheses and provide uncertainty estimates efficiently with a single forward pass and without the need for sampling or ensembles. We demonstrate the quality of the uncertainty estimates, which is clearly above previous confidence measures on optical flow and allows for interactive frame rates.

3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation

Jun 21, 2016

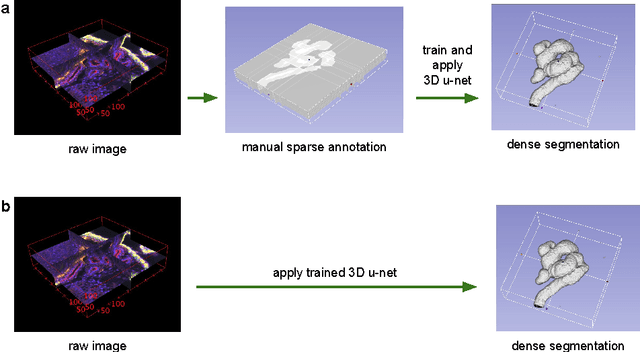

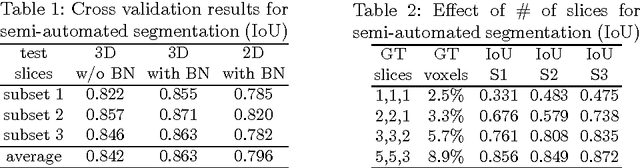

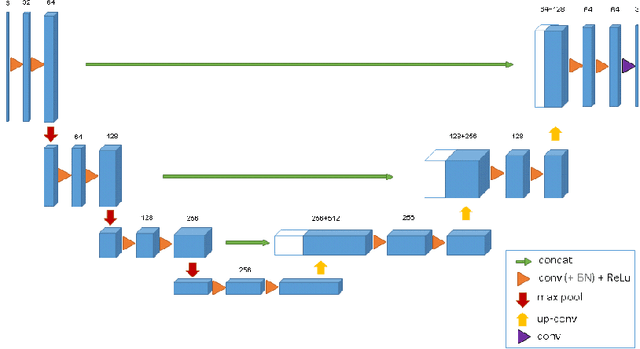

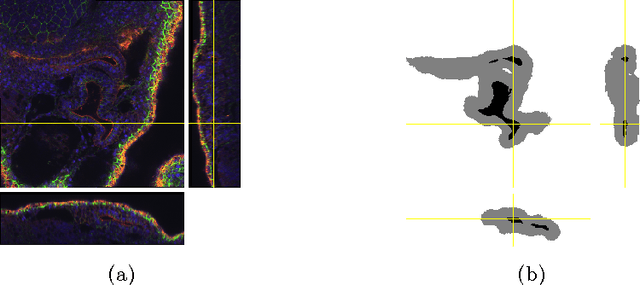

Abstract: This paper introduces a network for volumetric segmentation that learns from sparsely annotated volumetric images. We outline two attractive use cases of this method: (1) In a semi-automated setup, the user annotates some slices in the volume to be segmented. The network learns from these sparse annotations and provides a dense 3D segmentation. (2) In a fully-automated setup, we assume that a representative, sparsely annotated training set exists. Trained on this data set, the network densely segments new volumetric images. The proposed network extends the previous u-net architecture from Ronneberger et al. by replacing all 2D operations with their 3D counterparts. The implementation performs on-the-fly elastic deformations for efficient data augmentation during training. It is trained end-to-end from scratch, i.e., no pre-trained network is required. We test the performance of the proposed method on a complex, highly variable 3D structure, the Xenopus kidney, and achieve good results for both use cases.
