Silvio Galesso

Orbis: Overcoming Challenges of Long-Horizon Prediction in Driving World Models

Jul 17, 2025

Abstract:Existing world models for autonomous driving struggle with long-horizon generation and generalization to challenging scenarios. In this work, we develop a model using simple design choices, and without additional supervision or sensors, such as maps, depth, or multiple cameras. We show that our model yields state-of-the-art performance, despite having only 469M parameters and being trained on 280h of video data. It particularly stands out in difficult scenarios like turning maneuvers and urban traffic. We test whether discrete token models possibly have advantages over continuous models based on flow matching. To this end, we set up a hybrid tokenizer that is compatible with both approaches and allows for a side-by-side comparison. Our study concludes in favor of the continuous autoregressive model, which is less brittle on individual design choices and more powerful than the model built on discrete tokens. Code, models and qualitative results are publicly available at https://lmb-freiburg.github.io/orbis.github.io/.

Detect, Classify, Act: Categorizing Industrial Anomalies with Multi-Modal Large Language Models

May 05, 2025

Abstract:Recent advances in visual industrial anomaly detection have demonstrated exceptional performance in identifying and segmenting anomalous regions while maintaining fast inference speeds. However, anomaly classification, i.e., distinguishing different types of anomalies, remains largely unexplored despite its critical importance in real-world inspection tasks. To address this gap, we propose VELM, a novel LLM-based pipeline for anomaly classification. Given the critical importance of inference speed, we first apply an unsupervised anomaly detection method as a vision expert to assess the normality of an observation. If an anomaly is detected, the LLM then classifies its type. A key challenge in developing and evaluating anomaly classification models is the lack of precise annotations of anomaly classes in existing datasets. To address this limitation, we introduce MVTec-AC and VisA-AC, refined versions of the widely used MVTec-AD and VisA datasets, which include accurate anomaly class labels for rigorous evaluation. Our approach achieves a state-of-the-art anomaly classification accuracy of 80.4% on MVTec-AD, exceeding the prior baselines by 5%, and 84% on MVTec-AC, demonstrating the effectiveness of VELM in understanding and categorizing anomalies. We hope our methodology and benchmark inspire further research in anomaly classification, helping bridge the gap between detection and comprehensive anomaly characterization.

Diffusion for Out-of-Distribution Detection on Road Scenes and Beyond

Jul 22, 2024

Abstract:In recent years, research on out-of-distribution (OoD) detection for semantic segmentation has mainly focused on road scenes -- a domain with a constrained amount of semantic diversity. In this work, we challenge this constraint and extend the domain of this task to general natural images. To this end, we introduce: 1. the ADE-OoD benchmark, which is based on the ADE20k dataset and includes images from diverse domains with a high semantic diversity, and 2. a novel approach that uses Diffusion score matching for OoD detection (DOoD) and is robust to the increased semantic diversity. ADE-OoD features indoor and outdoor images, defines 150 semantic categories as in-distribution, and contains a variety of OoD objects. For DOoD, we train a diffusion model with an MLP architecture on semantic in-distribution embeddings and build on the score matching interpretation to compute pixel-wise OoD scores at inference time. On common road scene OoD benchmarks, DOoD performs on par with or better than the state of the art, without using outliers for training or making assumptions about the data domain. On ADE-OoD, DOoD outperforms previous approaches, but leaves much room for future improvements.

Compositional Servoing by Recombining Demonstrations

Oct 06, 2023

Abstract:Learning-based manipulation policies from image inputs often show weak task transfer capabilities. In contrast, visual servoing methods allow efficient task transfer in high-precision scenarios while requiring only a few demonstrations. In this work, we present a framework that formulates the visual servoing task as graph traversal. Our method not only extends the robustness of visual servoing, but also enables multitask capability based on a few task-specific demonstrations. We construct demonstration graphs by splitting existing demonstrations and recombining them. In order to traverse the demonstration graph in the inference case, we utilize a similarity function that helps select the best demonstration for a specific task. This enables us to compute the shortest path through the graph. Ultimately, we show that recombining demonstrations leads to higher task-respective success. We present extensive simulation and real-world experimental results that demonstrate the efficacy of our approach.
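The graph-traversal idea in this abstract can be illustrated with a minimal sketch. It assumes a toy graph whose nodes are demonstration segments and whose edge costs stand in for dissimilarity between segments. The node names, the costs, and the use of Dijkstra's algorithm are illustrative assumptions, not the construction used in the paper.

```python
import heapq


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm over a dict-of-dicts graph with non-negative edge costs."""
    queue = [(0.0, start, [start])]  # (accumulated cost, node, path so far)
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return float("inf"), []  # goal not reachable


# Hypothetical demonstration graph: two demonstrations (a, b), each split in two parts.
# Edge costs are made-up dissimilarities (lower means the segments connect more easily).
demo_graph = {
    "start": {"demo_a_part1": 0.2, "demo_b_part1": 0.5},
    "demo_a_part1": {"demo_a_part2": 0.4, "demo_b_part2": 0.1},
    "demo_b_part1": {"demo_b_part2": 0.1},
    "demo_a_part2": {"goal": 0.3},
    "demo_b_part2": {"goal": 0.2},
}

cost, path = shortest_path(demo_graph, "start", "goal")
print(path)
```

In this toy graph the cheapest route starts with the first part of demonstration a and continues with the second part of demonstration b, which is the kind of recombination the abstract describes.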

Far Away in the Deep Space: Nearest-Neighbor-Based Dense Out-of-Distribution Detection

Nov 12, 2022

Abstract:The key to out-of-distribution detection is density estimation of the in-distribution data or of its feature representations. While good parametric solutions to this problem exist for well curated classification data, these are less suitable for complex domains, such as semantic segmentation. In this paper, we show that a k-Nearest-Neighbors approach can achieve surprisingly good results with small reference datasets and runtimes, and be robust with respect to hyperparameters, such as the number of neighbors and the choice of the support set size. Moreover, we show that it combines well with anomaly scores from standard parametric approaches, and we find that transformer features are particularly well suited to detect novel objects in combination with k-Nearest-Neighbors. Ultimately, the approach is simple and non-invasive, i.e., it does not affect the primary segmentation performance, avoids training on examples of anomalies, and achieves state-of-the-art results on the common benchmarks with +23% and +16% AP improvements on RoadAnomaly and StreetHazards respectively.
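As a rough illustration of nearest-neighbor-based scoring, the sketch below computes an anomaly score as the mean Euclidean distance from each query feature to its k nearest reference features. The function name `knn_ood_scores`, the synthetic Gaussian features, the distance metric, and the choice of k are assumptions made for this example and are not taken from the paper.

```python
import numpy as np


def knn_ood_scores(query_feats, reference_feats, k=3):
    """Mean Euclidean distance from each query vector to its k nearest reference vectors."""
    # Pairwise distances, shape (num_queries, num_references).
    diffs = query_feats[:, None, :] - reference_feats[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    k = min(k, reference_feats.shape[0])
    # After partitioning, the first k columns hold the k smallest distances per row.
    nearest = np.partition(dists, k - 1, axis=1)[:, :k]
    return nearest.mean(axis=1)


# Synthetic stand-ins for feature vectors (e.g. per-pixel embeddings in a dense setting).
rng = np.random.default_rng(0)
reference = rng.normal(0.0, 1.0, size=(200, 8))  # in-distribution support set
inliers = rng.normal(0.0, 1.0, size=(5, 8))      # queries from the same distribution
outliers = rng.normal(6.0, 1.0, size=(5, 8))     # queries far from the support set

scores_in = knn_ood_scores(inliers, reference)
scores_out = knn_ood_scores(outliers, reference)
print(scores_in.round(2), scores_out.round(2))
```

Higher scores indicate features that lie far from the reference set. The brute-force distance matrix is fine for a small support set like this one, while larger sets would typically call for an approximate nearest-neighbor index.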

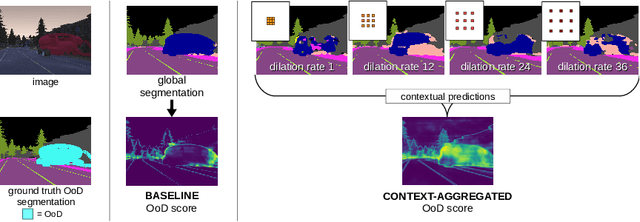

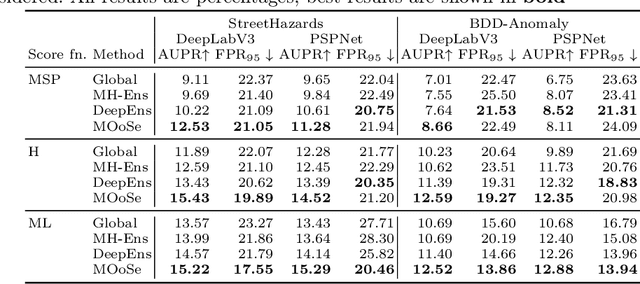

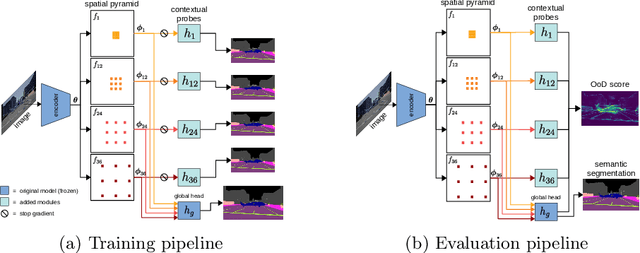

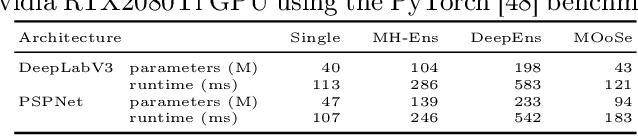

Probing Contextual Diversity for Dense Out-of-Distribution Detection

Aug 30, 2022

Abstract:Detection of out-of-distribution (OoD) samples in the context of image classification has recently become an area of interest and active study, along with the topic of uncertainty estimation, to which it is closely related. In this paper we explore the task of OoD segmentation, which has been studied less than its classification counterpart and presents additional challenges. Segmentation is a dense prediction task for which the model's outcome for each pixel depends on its surroundings. The receptive field and the reliance on context play a role for distinguishing different classes and, correspondingly, for spotting OoD entities. We introduce MOoSe, an efficient strategy to leverage the various levels of context represented within semantic segmentation models and show that even a simple aggregation of multi-scale representations has consistently positive effects on OoD detection and uncertainty estimation.

Essentials for Class Incremental Learning

Feb 18, 2021

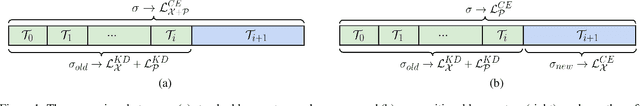

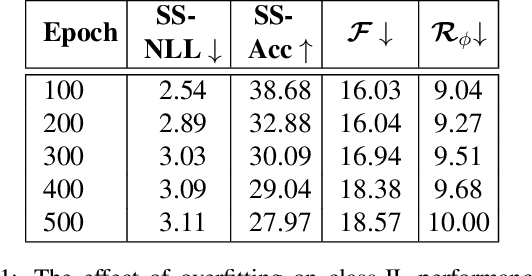

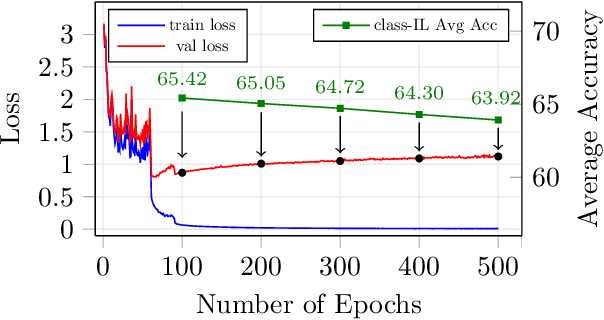

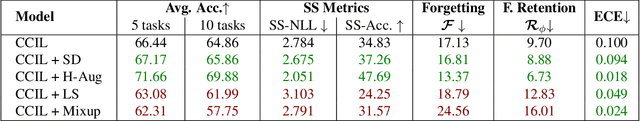

Abstract:Contemporary neural networks are limited in their ability to learn from evolving streams of training data. When trained sequentially on new or evolving tasks, their accuracy drops sharply, making them unsuitable for many real-world applications. In this work, we shed light on the causes of this well-known yet unsolved phenomenon - often referred to as catastrophic forgetting - in a class-incremental setup. We show that a combination of simple components and a loss that balances intra-task and inter-task learning can already resolve forgetting to the same extent as more complex measures proposed in literature. Moreover, we identify poor quality of the learned representation as another reason for catastrophic forgetting in class-IL. We show that performance is correlated with secondary class information (dark knowledge) learned by the model and it can be improved by an appropriate regularizer. With these lessons learned, class-incremental learning results on CIFAR-100 and ImageNet improve over the state-of-the-art by a large margin, while keeping the approach simple.

Uncertainty Estimates and Multi-Hypotheses Networks for Optical Flow

Aug 06, 2018

Abstract:Optical flow estimation can be formulated as an end-to-end supervised learning problem, which yields estimates with a superior accuracy-runtime tradeoff compared to alternative methodology. In this paper, we make such networks estimate their local uncertainty about the correctness of their prediction, which is vital information when building decisions on top of the estimations. For the first time we compare several strategies and techniques to estimate uncertainty in a large-scale computer vision task like optical flow estimation. Moreover, we introduce a new network architecture and loss function that enforce complementary hypotheses and provide uncertainty estimates efficiently with a single forward pass and without the need for sampling or ensembles. We demonstrate the quality of the uncertainty estimates, which is clearly above previous confidence measures on optical flow and allows for interactive frame rates.
