Zois Boukouvalas

Using LLMs to discover emerging coded antisemitic hate-speech in extremist social media

Jan 23, 2024

Abstract:Online hate speech proliferation has created a difficult problem for social media platforms. A particular challenge relates to the use of coded language by groups interested in both creating a sense of belonging for their users and evading detection. Coded language evolves quickly and its use varies over time. This paper proposes a methodology for detecting emerging coded hate-laden terminology. The methodology is tested in the context of online antisemitic discourse. The approach considers posts scraped from social media platforms often used by extremist users. The posts are scraped using seed expressions related to previously known discourse of hatred towards Jews. The method begins by identifying the expressions most representative of each post and calculating their frequency in the whole corpus. It filters out grammatically incoherent expressions as well as previously encountered ones so as to focus on emergent well-formed terminology. This is followed by an assessment of semantic similarity to known antisemitic terminology using a fine-tuned large language model, and subsequent filtering out of the expressions that are too distant from known expressions of hatred. Emergent antisemitic expressions containing terms clearly relating to Jewish topics are then removed to return only coded expressions of hatred.
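The last three filtering stages described in this abstract can be sketched roughly as follows. This is an illustrative sketch only, not the authors' implementation: the function names, the `min_sim` threshold, and the toy two-dimensional vectors are all assumptions, and the embeddings here stand in for whatever the fine-tuned language model would produce. The frequency and grammaticality filters are omitted.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def emerging_coded_terms(candidates, embed, known_terms, explicit_words, min_sim=0.8):
    """Keep candidates that are new, semantically close to known terms,
    and free of explicit topic words (hypothetical helper)."""
    known = set(known_terms)
    kept = []
    for expr in candidates:
        if expr in known:                      # previously encountered expression
            continue
        best = max(cosine(embed[expr], embed[k]) for k in known)
        if best < min_sim:                     # too distant from known terminology
            continue
        if any(w in expr.split() for w in explicit_words):
            continue                           # explicit, hence not coded
        kept.append(expr)
    return kept

# Toy placeholder data; real vectors would come from a language model.
embed = {
    "known_a": [1.0, 0.0],
    "cand_close": [0.9, 0.1],
    "cand_far": [0.0, 1.0],
    "topic cand": [1.0, 0.05],
}
result = emerging_coded_terms(
    ["known_a", "cand_close", "cand_far", "topic cand"],
    embed,
    known_terms=["known_a"],
    explicit_words={"topic"},
)
print(result)
```

In this toy run only `cand_close` survives: `known_a` is already known, `cand_far` falls below the similarity threshold, and `topic cand` contains an explicit topic word.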

A Semi-Supervised Framework for Misinformation Detection

Apr 22, 2023

Abstract:The spread of misinformation in social media outlets has become a prevalent societal problem and is the cause of many kinds of social unrest. Curtailing its prevalence is of great importance and machine learning has shown significant promise. However, there are two main challenges when applying machine learning to this problem. First, while much too prevalent in one respect, misinformation actually represents only a small proportion of all the postings seen on social media. Second, labeling the massive amount of data necessary to train a useful classifier becomes impractical. Considering these challenges, we propose a simple semi-supervised learning framework to deal with extreme class imbalances. Its advantage over other approaches is that it uses actual rather than simulated data to inflate the minority class. We tested our framework on two sets of Covid-related Twitter data and obtained significant improvement in F1-measure on extremely imbalanced scenarios, as compared to simple classical and deep-learning data generation methods such as SMOTE, ADASYN, or GAN-based data generation.

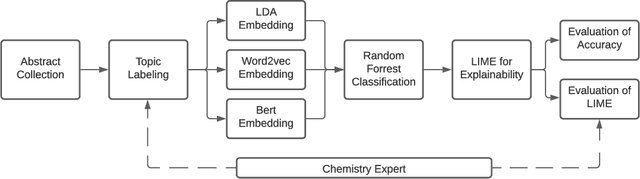

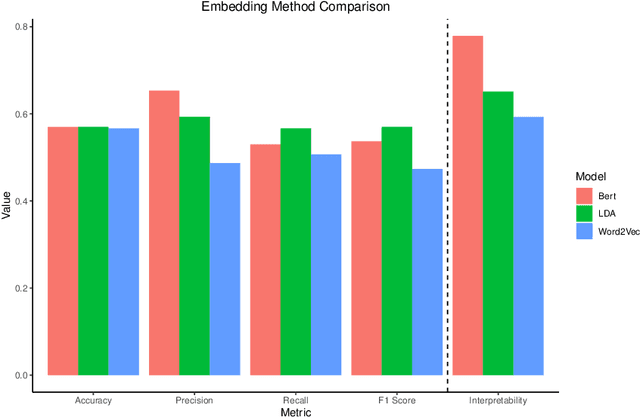

Assessing the trade-off between prediction accuracy and interpretability for topic modeling on energetic materials corpora

Jun 01, 2022

Abstract:As the amount and variety of energetics research increases, machine aware topic identification is necessary to streamline future research pipelines. An automatic topic identification process consists of creating document representations and performing classification. However, the implementation of these processes on energetics research poses new challenges. First, energetics datasets contain many scientific terms that are necessary to understand the context of a document but may require more complex document representations. Second, the predictions from classification must be understandable and trusted by the chemists within the pipeline. In this work, we study the trade-off between prediction accuracy and interpretability by implementing three document embedding methods that vary in computational complexity. Alongside our accuracy results, we also introduce local interpretable model-agnostic explanations (LIME) of each prediction to provide a localized understanding of each prediction and to validate classifier decisions with our team of energetics experts. This study was carried out on a novel labeled energetics dataset created and validated by our team of energetics experts.

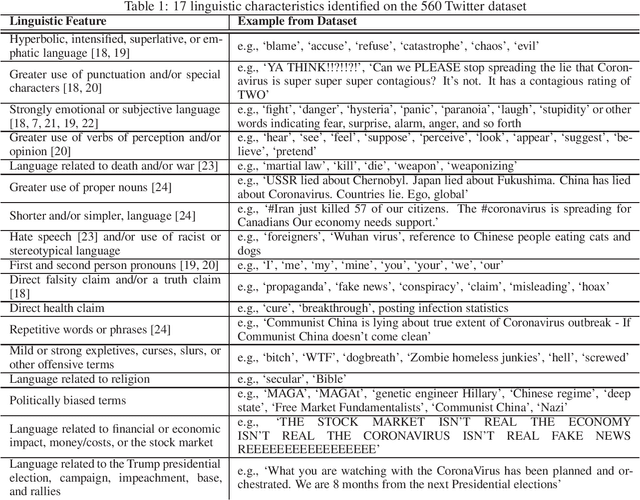

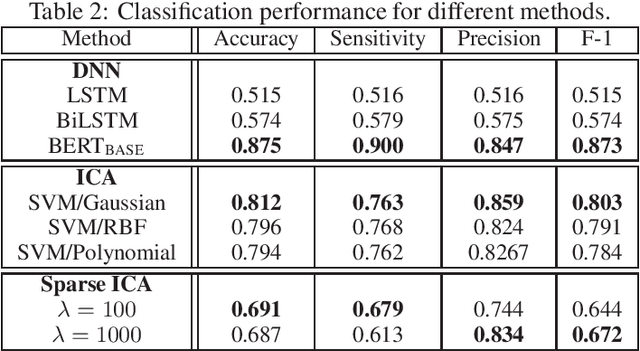

Independent Component Analysis for Trustworthy Cyberspace during High Impact Events: An Application to Covid-19

Jun 06, 2020

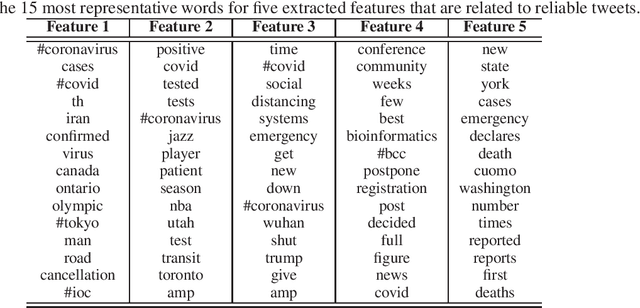

Abstract:Social media has become an important communication channel during high impact events, such as the COVID-19 pandemic. As misinformation in social media can rapidly spread, creating social unrest, curtailing the spread of misinformation during such events is a significant data challenge. While recent solutions that are based on machine learning have shown promise for the detection of misinformation, most widely used methods include approaches that rely on either handcrafted features that cannot be optimal for all scenarios, or those that are based on deep learning where the interpretation of the prediction results is not directly accessible. In this work, we propose a data-driven solution that is based on the ICA model, such that knowledge discovery and detection of misinformation are achieved jointly. To demonstrate the effectiveness of our method and compare its performance with deep learning methods, we developed a labeled COVID-19 Twitter dataset based on socio-linguistic criteria.

Deep learning for molecular generation and optimization - a review of the state of the art

Mar 11, 2019

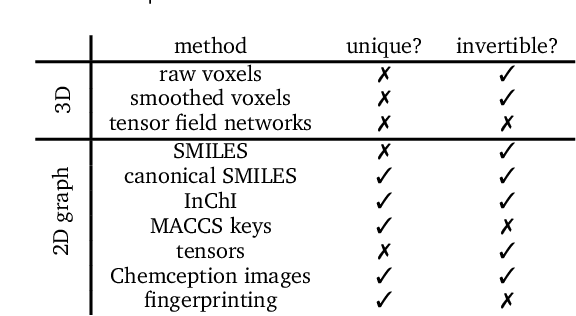

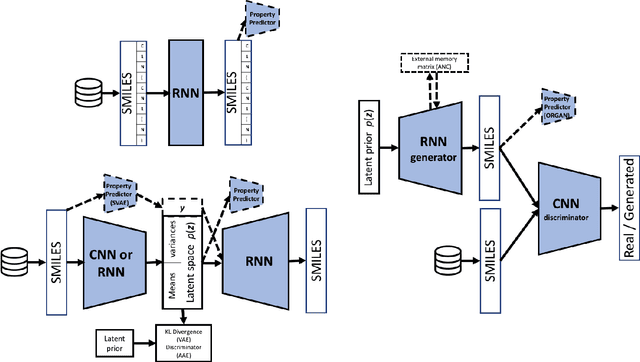

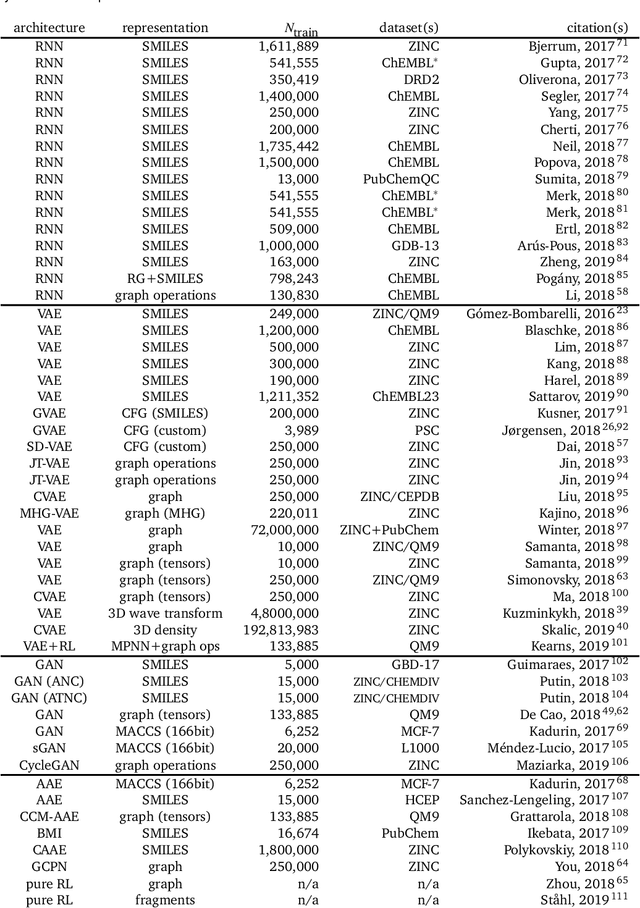

Abstract:In the space of only a few years, deep generative modeling has revolutionized how we think of artificial creativity, yielding autonomous systems which produce original images, music, and text. Inspired by these successes, researchers are now applying deep generative modeling techniques to the generation and optimization of molecules - in our review we found 45 papers on the subject published in the past two years. These works point to a future where such systems will be used to generate lead molecules, greatly reducing resources spent downstream synthesizing and characterizing bad leads in the lab. In this review we survey the increasingly complex landscape of models and representation schemes that have been proposed. The four classes of techniques we describe are recursive neural networks, autoencoders, generative adversarial networks, and reinforcement learning. After first discussing some of the mathematical fundamentals of each technique, we draw high level connections and comparisons with other techniques and expose the pros and cons of each. Several important high level themes emerge as a result of this work, including the shift away from the SMILES string representation of molecules towards more sophisticated representations such as graph grammars and 3D representations, the importance of reward function design, the need for better standards for benchmarking and testing, and the benefits of adversarial training and reinforcement learning over maximum likelihood based training.

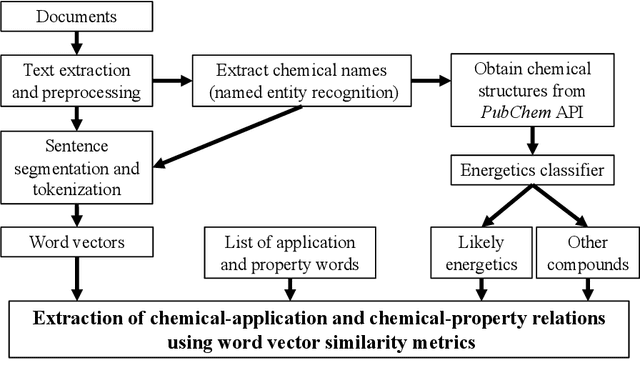

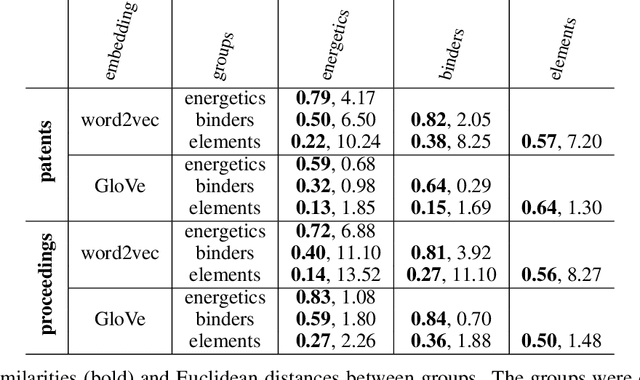

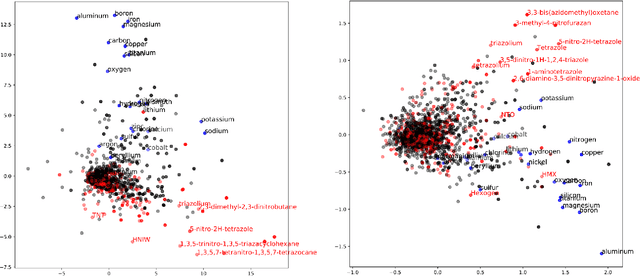

Using natural language processing techniques to extract information on the properties and functionalities of energetic materials from large text corpora

Mar 01, 2019

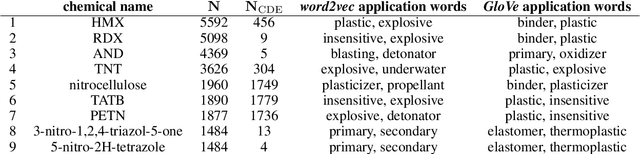

Abstract:The number of scientific journal articles and reports being published about energetic materials every year is growing exponentially, and therefore extracting relevant information and actionable insights from the latest research is becoming a considerable challenge. In this work we explore how techniques from natural language processing and machine learning can be used to automatically extract chemical insights from large collections of documents. We first describe how to download and process documents from a variety of sources - journal articles, conference proceedings (including NTREM), the US Patent & Trademark Office, and the Defense Technical Information Center archive on archive.org. We present a custom NLP pipeline which uses open source NLP tools to identify the names of chemical compounds and relates them to function words ("underwater", "rocket", "pyrotechnic") and property words ("elastomer", "non-toxic"). After explaining how word embeddings work we compare the utility of two popular word embeddings - word2vec and GloVe. Chemical-chemical and chemical-application relationships are obtained by doing computations with word vectors. We show that word embeddings capture latent information about energetic materials, so that related materials appear close together in the word embedding space.
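The idea that related materials appear close together in the embedding space can be illustrated with a nearest-neighbour lookup under cosine similarity. The sketch below is an assumption-laden toy: the three-dimensional vectors and the names `compound_a` and `compound_b` are made up, and a real analysis would load trained word2vec or GloVe vectors instead.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def nearest(word, vectors, k=2):
    """Return the k words whose vectors are most similar to `word`."""
    query = vectors[word]
    scored = [(other, cosine(query, vec))
              for other, vec in vectors.items() if other != word]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [w for w, _ in scored[:k]]

# Made-up toy vectors; not taken from any trained embedding.
vectors = {
    "compound_a": [0.9, 0.1, 0.0],
    "compound_b": [0.8, 0.2, 0.1],
    "rocket": [0.1, 0.9, 0.2],
    "underwater": [0.0, 0.2, 0.9],
}
neighbors = nearest("compound_a", vectors, k=1)
print(neighbors)
```

With these toy vectors the closest neighbour of `compound_a` is `compound_b`, since the two point in nearly the same direction.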

Independent Vector Analysis for Data Fusion Prior to Molecular Property Prediction with Machine Learning

Nov 01, 2018

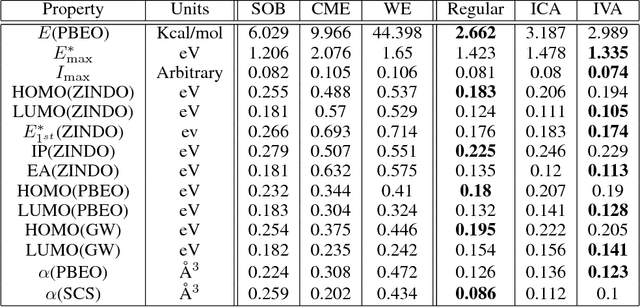

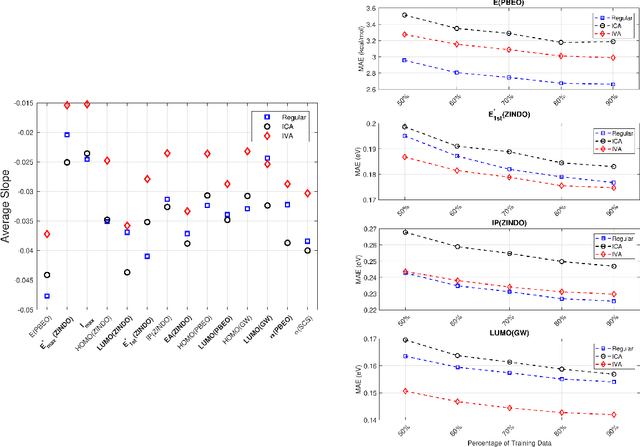

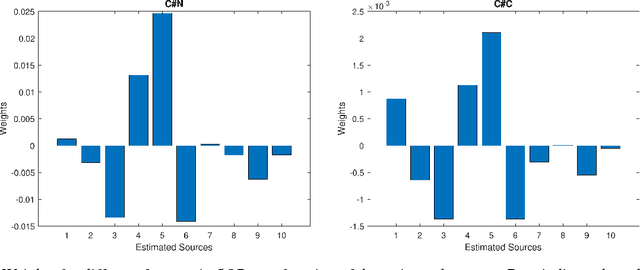

Abstract:Due to its high computational speed and accuracy compared to ab-initio quantum chemistry and forcefield modeling, the prediction of molecular properties using machine learning has received great attention in the fields of materials design and drug discovery. A main ingredient required for machine learning is a training dataset consisting of molecular features (for example, fingerprint bits, chemical descriptors, etc.) that adequately characterize the corresponding molecules. However, choosing features for any application is highly non-trivial. No "universal" method for feature selection exists. In this work, we propose a data fusion framework that uses Independent Vector Analysis to exploit underlying complementary information contained in different molecular featurization methods, bringing us a step closer to automated feature generation. Our approach takes an arbitrary number of individual feature vectors and automatically generates a single, compact (low dimensional) set of molecular features that can be used to enhance the prediction performance of regression models. At the same time our methodology retains the possibility of interpreting the generated features to discover relationships between molecular structures and properties. We demonstrate this on the QM7b dataset for the prediction of several properties such as atomization energy, polarizability, frontier orbital eigenvalues, ionization potential, electron affinity, and excitation energies. In addition, we show how our method helps improve the prediction of experimental binding affinities for a set of human BACE-1 inhibitors. To encourage more widespread use of IVA we have developed the PyIVA Python package, an open source code which is available for download on GitHub.

Development of ICA and IVA Algorithms with Application to Medical Image Analysis

Jan 25, 2018

Abstract:Independent component analysis (ICA) is a widely used blind source separation (BSS) method that can uniquely achieve source recovery, subject to only scaling and permutation ambiguities, through the assumption of statistical independence on the part of the latent sources. Independent vector analysis (IVA) extends the applicability of ICA by jointly decomposing multiple datasets through the exploitation of the dependencies across datasets. Though both ICA and IVA algorithms cast in the maximum likelihood (ML) framework enable the use of all available statistical information, in reality they often deviate from their theoretical optimality properties due to improper estimation of the probability density function (PDF). This motivates the development of flexible ICA and IVA algorithms that closely adhere to the underlying statistical description of the data. Although it is attractive to minimize the assumptions, important prior information about the data, such as sparsity, is usually available. If incorporated into the ICA model, use of this additional information can relax the independence assumption, resulting in an improvement in the overall separation performance. Therefore, the development of a unified mathematical framework that can take into account both statistical independence and sparsity is of great interest. In this work, we first introduce a flexible ICA algorithm that uses an effective PDF estimator to accurately capture the underlying statistical properties of the data. We then discuss several techniques to accurately estimate the parameters of the multivariate generalized Gaussian distribution, and how to integrate them into the IVA model. Finally, we provide a mathematical framework that enables direct control over the influence of statistical independence and sparsity, and use this framework to develop an effective ICA algorithm that can jointly exploit these two forms of diversity.
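For orientation, the standard noiseless ICA model that this abstract refers to can be written as below. The notation ($\mathbf{A}$, $\mathbf{W}$, $\mathbf{P}$, $\boldsymbol{\Lambda}$, $p_n$) is the usual textbook convention and is assumed here rather than taken from the thesis itself.

```latex
% Mixing model: N latent sources s are linearly mixed by an unknown invertible A.
\mathbf{x} = \mathbf{A}\,\mathbf{s}, \qquad
\mathbf{y} = \mathbf{W}\,\mathbf{x}

% Successful separation recovers the sources only up to
% a permutation P and a diagonal scaling \Lambda:
\mathbf{W}\mathbf{A} = \mathbf{P}\,\boldsymbol{\Lambda}

% Per-sample ML objective, where p_n is the assumed PDF of source n
% and w_n^T is the n-th row of W:
\mathcal{L}(\mathbf{W}) = \sum_{n=1}^{N} \log p_n\!\left(\mathbf{w}_n^{\top}\mathbf{x}\right)
  + \log\left|\det \mathbf{W}\right|
```

The dependence of $\mathcal{L}(\mathbf{W})$ on the assumed densities $p_n$ is why a poor PDF estimate degrades separation performance.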

Independent Component Analysis by Entropy Maximization with Kernels

Oct 22, 2016

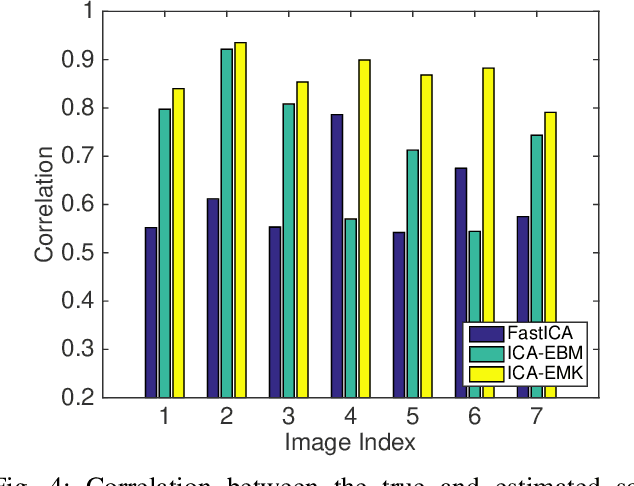

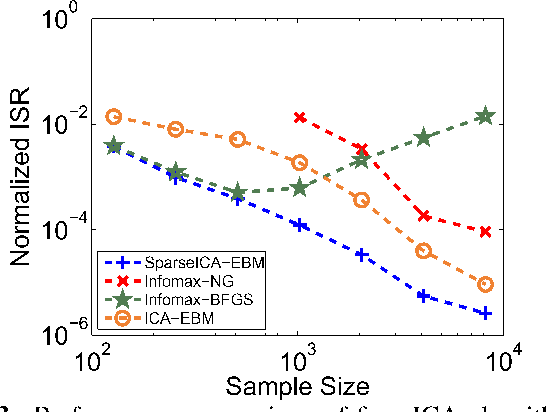

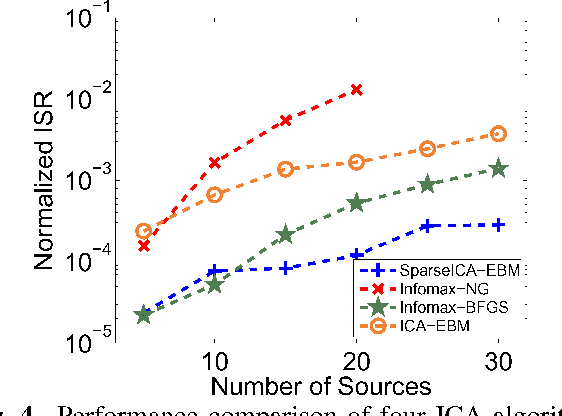

Abstract:Independent component analysis (ICA) is the most popular method for blind source separation (BSS) with a diverse set of applications, such as biomedical signal processing, video and image analysis, and communications. Maximum likelihood (ML), an optimal theoretical framework for ICA, requires knowledge of the true underlying probability density function (PDF) of the latent sources, which, in many applications, is unknown. ICA algorithms cast in the ML framework often deviate from its theoretical optimality properties due to poor estimation of the source PDF. Therefore, accurate estimation of source PDFs is critical in order to avoid model mismatch and poor ICA performance. In this paper, we propose a new and efficient ICA algorithm based on entropy maximization with kernels (ICA-EMK), which uses both global and local measuring functions as constraints to dynamically estimate the PDF of the sources with reasonable complexity. In addition, the new algorithm performs optimization with respect to each of the cost function gradient directions separately, enabling parallel implementations on multi-core computers. We demonstrate the superior performance of ICA-EMK over competing ICA algorithms using simulated as well as real-world data.

Enhancing ICA Performance by Exploiting Sparsity: Application to FMRI Analysis

Oct 19, 2016

Abstract:Independent component analysis (ICA) is a powerful method for blind source separation based on the assumption that sources are statistically independent. Though ICA has proven useful and has been employed in many applications, complete statistical independence can be too restrictive an assumption in practice. Additionally, important prior information about the data, such as sparsity, is usually available. Sparsity is a natural property of the data, a form of diversity, which, if incorporated into the ICA model, can relax the independence assumption, resulting in an improvement in the overall separation performance. In this work, we propose a new variant of ICA by entropy bound minimization (ICA-EBM), a flexible yet parameter-free algorithm, through the direct exploitation of sparsity. Using this new SparseICA-EBM algorithm, we study the synergy of independence and sparsity through simulations on synthetic as well as functional magnetic resonance imaging (fMRI)-like data.
