Aritran Piplai

Minerva: Reinforcement Learning with Verifiable Rewards for Cyber Threat Intelligence LLMs

Jan 31, 2026

Abstract: Cyber threat intelligence (CTI) analysts routinely convert noisy, unstructured security artifacts into standardized, automation-ready representations. Although large language models (LLMs) show promise for this task, existing approaches remain brittle when producing structured CTI outputs and have largely relied on supervised fine-tuning (SFT). In contrast, CTI standards and community-maintained resources define canonical identifiers and schemas that enable deterministic verification of model outputs. We leverage this structure to study reinforcement learning with verifiable rewards (RLVR) for CTI tasks. We introduce Minerva, a unified dataset and training pipeline spanning multiple CTI subtasks, each paired with task-specific verifiers that score structured outputs and identifier predictions. To address reward sparsity during rollout, we propose a lightweight self-training mechanism that generates additional verified trajectories and distills them back into the model. Experiments across LLM backbones show consistent improvements in accuracy and robustness over SFT across multiple benchmarks.
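To make the idea of a deterministic verifier concrete, here is a minimal sketch of what a reward function for an identifier-prediction subtask might look like. It is not taken from the paper: the JSON field name `technique_id`, the partial-credit values, and the choice of MITRE ATT&CK technique IDs as the target are all assumptions made for illustration.

```python
import json
import re

# ATT&CK technique IDs look like "T1059" or, for sub-techniques, "T1059.001".
TECHNIQUE_ID = re.compile(r"T\d{4}(\.\d{3})?")


def verify_technique_prediction(model_output: str, gold_id: str) -> float:
    """Score a model's structured output against a gold technique ID.

    Hypothetical reward scheme (an assumption, not the paper's):
      0.0  output is not a JSON object or the ID is malformed
      0.1  well-formed ID, but the wrong technique
      0.5  correct parent technique, wrong or missing sub-technique
      1.0  exact match
    """
    try:
        parsed = json.loads(model_output)
    except json.JSONDecodeError:
        return 0.0
    if not isinstance(parsed, dict):
        return 0.0

    predicted = parsed.get("technique_id")  # hypothetical field name
    if not isinstance(predicted, str) or not TECHNIQUE_ID.fullmatch(predicted):
        return 0.0

    if predicted == gold_id:
        return 1.0
    if predicted.split(".")[0] == gold_id.split(".")[0]:
        return 0.5
    return 0.1
```

Because the check is purely rule-based, the same output always receives the same score, which is the property that makes such a reward "verifiable".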

MAD-OOD: A Deep Learning Cluster-Driven Framework for an Out-of-Distribution Malware Detection and Classification

Dec 19, 2025

Abstract: Out-of-distribution (OOD) detection remains a critical challenge in malware classification due to the substantial intra-family variability introduced by polymorphic and metamorphic malware variants. Most existing deep-learning-based malware detectors rely on closed-world assumptions and fail to adequately model this intra-class variation, resulting in degraded performance when confronted with previously unseen malware families. This paper presents MAD-OOD, a novel two-stage, cluster-driven deep learning framework for robust OOD malware detection and classification. In the first stage, malware family embeddings are modeled using class-conditional spherical decision boundaries derived from Gaussian Discriminant Analysis (GDA), enabling statistically grounded separation of in-distribution and OOD samples without requiring OOD data during training. Z-score-based distance analysis across multiple class centroids is employed to reliably identify anomalous samples in the latent space. In the second stage, a deep neural network integrates cluster-based predictions, refined embeddings, and supervised classifier outputs to enhance final classification accuracy. Extensive evaluations on benchmark malware datasets comprising 25 known families and multiple novel OOD variants demonstrate that MAD-OOD significantly outperforms state-of-the-art OOD detection methods, achieving an AUC of up to 0.911 on unseen malware families. The proposed framework provides a scalable, interpretable, and statistically principled solution for real-world malware detection and anomaly identification in evolving cybersecurity environments.
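The first-stage idea, flagging a sample as OOD when its distance to every class centroid is unusually large, can be sketched as follows. This is a simplified illustration rather than the paper's implementation: it uses plain Euclidean distance and per-class distance statistics instead of a full GDA fit, and the function names and the `z_threshold` default of 3.0 are assumptions.

```python
import math
from statistics import mean, pstdev


def fit_class_stats(embeddings_by_class):
    """For each class, store its centroid plus the mean and standard
    deviation of training-sample distances to that centroid."""
    stats = {}
    for label, vectors in embeddings_by_class.items():
        dim = len(vectors[0])
        centroid = [mean(v[i] for v in vectors) for i in range(dim)]
        dists = [math.dist(v, centroid) for v in vectors]
        stats[label] = (centroid, mean(dists), pstdev(dists))
    return stats


def classify_or_flag(sample, stats, z_threshold=3.0):
    """Return (nearest_label, is_ood).

    The z-score measures how far the sample's distance to a centroid lies
    from the distances typical of that class. If even the best-fitting
    class has a z-score above the threshold, the sample is flagged OOD.
    """
    z_scores = {}
    for label, (centroid, mu, sigma) in stats.items():
        distance = math.dist(sample, centroid)
        z_scores[label] = (distance - mu) / max(sigma, 1e-12)
    nearest = min(z_scores, key=z_scores.get)
    return nearest, z_scores[nearest] > z_threshold
```

Note that no OOD samples are needed to fit the statistics; only in-distribution training embeddings are used, which mirrors the property the abstract highlights.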

FALCON: Autonomous Cyber Threat Intelligence Mining with LLMs for IDS Rule Generation

Aug 26, 2025

Abstract: Signature-based Intrusion Detection Systems (IDS) detect malicious activities by matching network or host activity against predefined rules. These rules are derived from extensive Cyber Threat Intelligence (CTI), which includes attack signatures and behavioral patterns obtained through automated tools and manual threat analysis, such as sandboxing. The CTI is then transformed into actionable rules for the IDS engine, enabling real-time detection and prevention. However, the constant evolution of cyber threats necessitates frequent rule updates, which delay deployment time and weaken overall security readiness. Recent advancements in agentic systems powered by Large Language Models (LLMs) offer the potential for autonomous IDS rule generation with internal evaluation. We introduce FALCON, an autonomous agentic framework that generates deployable IDS rules from CTI data in real-time and evaluates them using built-in multi-phased validators. To demonstrate versatility, we target both network (Snort) and host-based (YARA) mediums and construct a comprehensive dataset of IDS rules with their corresponding CTIs. Our evaluations indicate FALCON excels in automatic rule generation, with an average of 95% accuracy validated by qualitative evaluation with 84% inter-rater agreement among multiple cybersecurity analysts across all metrics. These results underscore the feasibility and effectiveness of LLM-driven data mining for real-time cyber threat mitigation.

Evaluating Large Language Models for Phishing Detection, Self-Consistency, Faithfulness, and Explainability

Jun 16, 2025

Abstract: Phishing attacks remain one of the most prevalent and persistent cybersecurity threats, with attackers continuously evolving and intensifying their tactics to evade general detection systems. Despite significant advances in artificial intelligence and machine learning, faithfully reproducing the interpretable reasoning, classification, and explainability that underpin phishing judgments remains challenging. Owing to recent advances in Natural Language Processing, Large Language Models (LLMs) show promise for improving domain-specific phishing classification tasks. However, enhancing the reliability and robustness of classification models requires not only accurate predictions from LLMs but also consistent and trustworthy explanations aligned with those predictions. Therefore, a key question remains: can LLMs not only classify phishing emails accurately but also generate explanations that are reliably aligned with their predictions and internally self-consistent? To answer this question, we fine-tuned transformer-based models, including BERT, Llama models, and Wizard, to improve domain relevance and tailor them to phishing-specific distinctions, using Binary Sequence Classification, Contrastive Learning (CL), and Direct Preference Optimization (DPO). We then examined their performance in phishing classification and explainability by applying the ConsistenCy measure based on SHAPley values (CC-SHAP), which measures prediction-explanation token alignment, to test each model's internal faithfulness and consistency and to uncover the rationale behind its predictions and reasoning. Overall, our findings show that Llama models exhibit stronger prediction-explanation token alignment, with higher CC-SHAP scores, despite lacking reliable decision-making accuracy, whereas Wizard achieves better prediction accuracy but lower CC-SHAP scores.

Semantic-Aware Contrastive Fine-Tuning: Boosting Multimodal Malware Classification with Discriminative Embeddings

Apr 25, 2025

Abstract: The rapid evolution of malware variants requires robust classification methods to enhance cybersecurity. While Large Language Models (LLMs) offer potential for generating malware descriptions to aid family classification, their utility is limited by semantic embedding overlaps and misalignment with binary behavioral features. We propose a contrastive fine-tuning (CFT) method that refines LLM embeddings via targeted selection of hard negative samples based on cosine similarity, enabling LLMs to distinguish between closely related malware families. Our approach combines high-similarity negatives to enhance discriminative power and mid-tier negatives to increase embedding diversity, optimizing both precision and generalization. Evaluated on the CIC-AndMal-2020 and BODMAS datasets, our refined embeddings are integrated into a multimodal classifier within a Model-Agnostic Meta-Learning (MAML) framework in a few-shot setting. Experiments demonstrate significant improvements: our method achieves 63.15% classification accuracy with as few as 20 samples on CIC-AndMal-2020, outperforming baselines by 11-21 percentage points and surpassing prior negative sampling strategies. Ablation studies confirm the superiority of similarity-based selection over random sampling, with gains of 10-23%. Additionally, fine-tuned LLMs generate attribute-aware descriptions that generalize to unseen variants, bridging textual and binary feature gaps. This work advances malware classification by enabling nuanced semantic distinctions and provides a scalable framework for adapting LLMs to cybersecurity challenges.
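The negative-selection step described above, mixing the most similar negatives with a band of moderately similar ones, might look roughly like this. The sketch is illustrative only: the abstract does not define "mid-tier", so taking the middle slice of the remaining similarity ranking is an assumption, as are the function names and the `n_hard` and `n_mid` parameters.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_negatives(anchor, candidates, n_hard=2, n_mid=2):
    """Pick negatives for one anchor embedding.

    `candidates` is a list of (sample_id, embedding) pairs drawn from
    families other than the anchor's. Returns two lists of sample IDs:
    the `n_hard` most similar negatives, and `n_mid` negatives taken from
    the middle of the remaining ranking (an assumed reading of "mid-tier").
    """
    ranked = sorted(
        candidates,
        key=lambda item: cosine_similarity(anchor, item[1]),
        reverse=True,
    )
    hard = ranked[:n_hard]
    rest = ranked[n_hard:]
    mid_start = max(0, (len(rest) - n_mid) // 2)
    mid = rest[mid_start:mid_start + n_mid]
    return [sid for sid, _ in hard], [sid for sid, _ in mid]
```

The hard negatives push apart families whose descriptions embed close together, while the mid-tier ones keep the contrastive batch from collapsing onto a few near-duplicates.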

Enhancing classroom teaching with LLMs and RAG

Nov 07, 2024

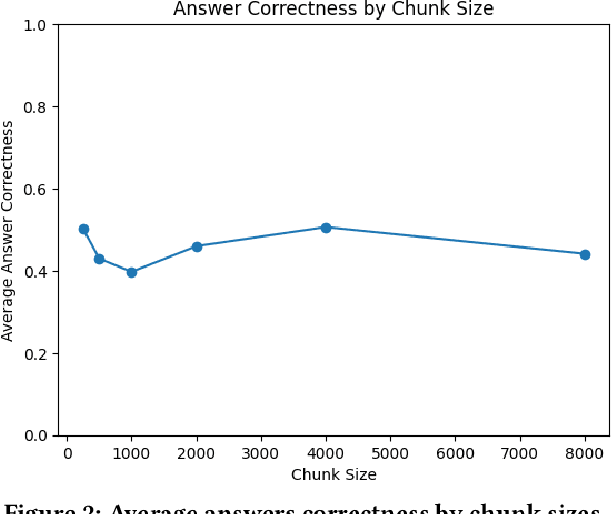

Abstract: Large Language Models have become a valuable source of information for our daily inquiries. However, after training, their knowledge quickly becomes out-of-date, making Retrieval Augmented Generation (RAG) a useful tool for supplying more recent or pertinent data. In this work, we investigate how RAG pipelines, with course materials serving as the data source, might help students in K-12 education. The initial research uses Reddit as a data source for up-to-date cybersecurity information. Chunk size is evaluated to determine the optimal amount of context needed to generate accurate answers. After running the experiment for different chunk sizes, answer correctness was evaluated using RAGAs, with average answer correctness not exceeding 50 percent for any chunk size. This suggests that Reddit is not a good source to mine for answers to questions about cybersecurity threats. The methodology was successful in evaluating the data source, which has implications for its use in evaluating the effectiveness of educational resources.

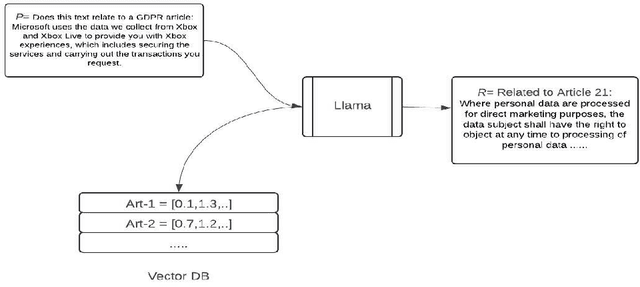

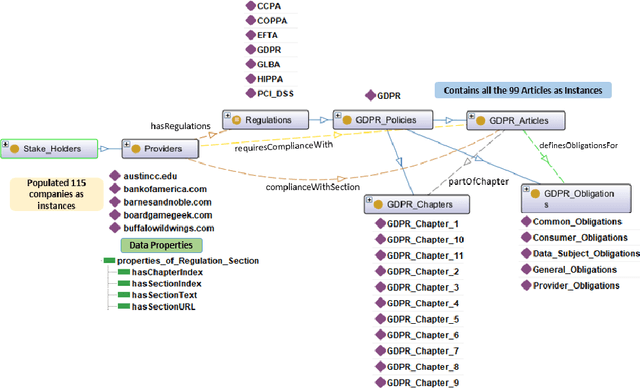

PrivComp-KG: Leveraging Knowledge Graph and Large Language Models for Privacy Policy Compliance Verification

Apr 30, 2024

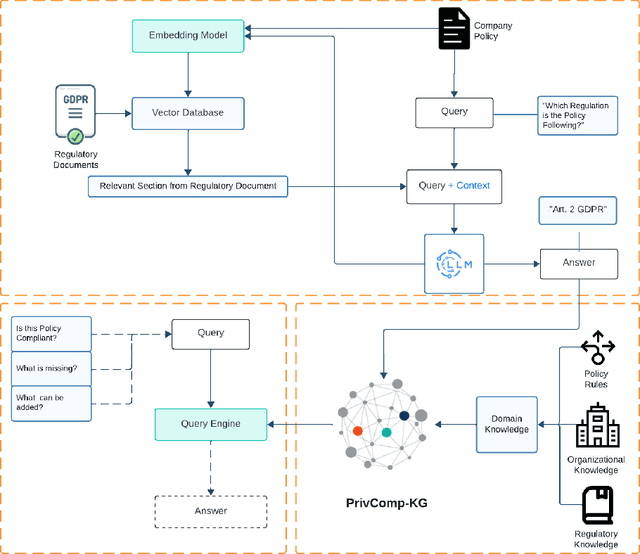

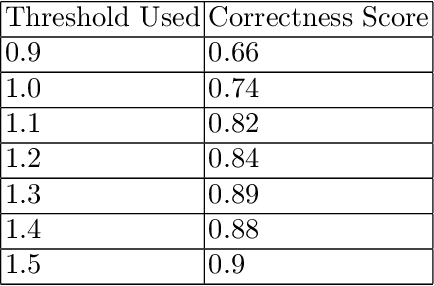

Abstract: Data protection and privacy are becoming increasingly crucial in the digital era. Numerous companies depend on third-party vendors and service providers to carry out critical functions within their operations, encompassing tasks such as data handling and storage. However, this reliance introduces potential vulnerabilities, as these vendors' security measures and practices may not always align with the standards expected by regulatory bodies. Businesses are required, often under penalty of law, to ensure compliance with evolving regulatory rules. Interpreting and implementing these regulations poses challenges due to their complexity. Regulatory documents are extensive, demanding significant effort for interpretation, while vendor-drafted privacy policies often lack the detail required for full legal compliance, leading to ambiguity. To ensure a concise interpretation of the regulatory requirements and compliance of organizational privacy policies with those regulations, we propose a Large Language Model (LLM) and Semantic Web based approach for privacy compliance. In this paper, we develop the novel Privacy Policy Compliance Verification Knowledge Graph, PrivComp-KG. It is designed to efficiently store and retrieve comprehensive information concerning privacy policies, regulatory frameworks, and domain-specific knowledge pertaining to the legal landscape of privacy. Using Retrieval Augmented Generation, we match the relevant sections of a privacy policy with the corresponding regulatory rules. This information about individual privacy policies is populated into the PrivComp-KG. Combined with the domain context and rules, the PrivComp-KG can be queried to check each vendor's privacy policy for compliance with the relevant regulations. We demonstrate the relevance of the PrivComp-KG by verifying the compliance of privacy policy documents for various organizations.

Deep Learning-Based Speech and Vision Synthesis to Improve Phishing Attack Detection through a Multi-layer Adaptive Framework

Feb 27, 2024

Abstract: Attackers continually improve their phishing techniques to bypass existing state-of-the-art phishing detection methods, which poses major challenges to researchers in both industry and academia because current approaches cannot detect complex phishing attacks. Thus, current anti-phishing methods remain vulnerable to complex phishing because of the increasingly sophisticated tactics adopted by attackers, coupled with the rate at which new tactics are being developed to evade detection. In this research, we propose an adaptable framework that combines deep learning and Random Forest to read images, synthesize speech from deep-fake videos, and apply natural language processing at various prediction layers to significantly increase the performance of machine learning models for phishing attack detection.

An Investigation into the Performances of the State-of-the-art Machine Learning Approaches for Various Cyber-attack Detection: A Survey

Feb 26, 2024

Abstract: To secure computers and information systems from attackers who exploit system vulnerabilities to commit cybercrime, several methods have been proposed for real-time detection of vulnerabilities to improve the security of information systems. Of all the proposed methods, machine learning has been the most effective in securing a system, with capabilities ranging from early detection of software vulnerabilities to real-time detection of ongoing compromise in a system. As there are different types of cyberattacks, each of the existing state-of-the-art machine learning models depends on different training algorithms, which also affects its suitability for detecting a particular type of cyberattack. In this research, we analyzed the current state-of-the-art machine learning models for different types of cyberattack detection from the past 10 years, with a major emphasis on the most recent works, in a comparative study to identify the knowledge gaps where work is still needed for detecting each category of cyberattack.

LOCALINTEL: Generating Organizational Threat Intelligence from Global and Local Cyber Knowledge

Jan 18, 2024

Abstract: Security Operations Center (SoC) analysts gather threat reports from openly accessible global threat databases and customize them manually to suit a particular organization's needs. These analysts also depend on internal repositories, which act as a private local knowledge database for an organization. Credible cyber intelligence, critical operational details, and relevant organizational information are all stored in these local knowledge databases. Analysts undertake a labor-intensive task, using these global and local knowledge databases to manually create an organization's unique threat response and mitigation strategies. Recently, Large Language Models (LLMs) have shown the capability to efficiently process large, diverse knowledge sources. We leverage this ability to process global and local knowledge databases to automate the generation of organization-specific threat intelligence. In this work, we present LOCALINTEL, a novel automated knowledge contextualization system that, upon prompting, retrieves threat reports from the global threat repositories and uses its local knowledge database to contextualize them for a specific organization. LOCALINTEL comprises three key phases: global threat intelligence retrieval, local knowledge retrieval, and contextualized completion generation. The first retrieves intelligence from global threat repositories, while the second retrieves pertinent knowledge from the local knowledge database. Finally, the fusion of these knowledge sources is orchestrated through a generator to produce a contextualized completion.
