Subash Neupane

UAT-LITE: Inference-Time Uncertainty-Aware Attention for Pretrained Transformers

Feb 03, 2026

Abstract:Neural NLP models are often miscalibrated, assigning high confidence to incorrect predictions, which undermines selective prediction and high-stakes deployment. Post-hoc calibration methods adjust output probabilities but leave internal computation unchanged, while ensemble and Bayesian approaches improve uncertainty at substantial training or storage cost. We propose UAT-LITE, an inference-time framework that makes self-attention uncertainty-aware using approximate Bayesian inference via Monte Carlo dropout in pretrained transformer classifiers. Token-level epistemic uncertainty is estimated from stochastic forward passes and used to modulate self-attention during contextualization, without modifying pretrained weights or training objectives. We additionally introduce a layerwise variance decomposition to diagnose how predictive uncertainty accumulates across transformer depth. Across SQuAD 2.0 answerability, MNLI, and SST-2, UAT-LITE reduces Expected Calibration Error by approximately 20% on average relative to a fine-tuned BERT-base baseline while preserving task accuracy, and improves selective prediction and robustness under distribution shift.
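For readers unfamiliar with the calibration metric named in this abstract, the following is a minimal sketch of the commonly used equal-width binned Expected Calibration Error. It is not taken from the paper: the function name, the default of 10 bins, and the input layout are assumptions made for illustration, and the authors may compute the metric differently.

```python
from typing import Sequence


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,  # assumed default; the paper's bin count is not stated here
) -> float:
    """Equal-width binned ECE: sum over bins of (bin size / N) * |accuracy - mean confidence|."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        raise ValueError("need at least one prediction")

    # Per-bin accumulators: prediction count, summed confidence, correct count.
    counts = [0] * n_bins
    conf_sums = [0.0] * n_bins
    hits = [0] * n_bins
    for conf, ok in zip(confidences, correct):
        # Bin i covers [i/n_bins, (i+1)/n_bins); a confidence of 1.0 falls in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        counts[idx] += 1
        conf_sums[idx] += conf
        hits[idx] += int(ok)

    ece = 0.0
    for count, conf_sum, hit in zip(counts, conf_sums, hits):
        if count == 0:
            continue  # empty bins contribute nothing
        accuracy = hit / count
        mean_conf = conf_sum / count
        ece += (count / n) * abs(accuracy - mean_conf)
    return ece
```

A lower value means the model's stated confidence tracks its actual accuracy more closely; a model that is always right at confidence 1.0 scores 0.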

Towards a HIPAA Compliant Agentic AI System in Healthcare

Apr 24, 2025

Abstract:Agentic AI systems, powered by Large Language Models (LLMs) as their foundational reasoning engine, are transforming clinical workflows such as medical report generation and clinical summarization by autonomously analyzing sensitive healthcare data and executing decisions with minimal human oversight. However, their adoption demands strict compliance with regulatory frameworks such as the Health Insurance Portability and Accountability Act (HIPAA), particularly when handling Protected Health Information (PHI). This work-in-progress paper introduces a HIPAA-compliant Agentic AI framework that enforces regulatory compliance through dynamic, context-aware policy enforcement. Our framework integrates three core mechanisms: (1) Attribute-Based Access Control (ABAC) for granular PHI governance, (2) a hybrid PHI sanitization pipeline combining regex patterns and a BERT-based model to minimize leakage, and (3) immutable audit trails for compliance verification.

CLINICSUM: Utilizing Language Models for Generating Clinical Summaries from Patient-Doctor Conversations

Dec 05, 2024

Abstract:This paper presents ClinicSum, a novel framework designed to automatically generate clinical summaries from patient-doctor conversations. It utilizes a two-module architecture: a retrieval-based filtering module that extracts Subjective, Objective, Assessment, and Plan (SOAP) information from conversation transcripts, and an inference module powered by fine-tuned Pre-trained Language Models (PLMs), which leverage the extracted SOAP data to generate abstracted clinical summaries. To fine-tune the PLM, we created a training dataset consisting of 1,473 conversation-summary pairs by consolidating two publicly available datasets, FigShare and MTS-Dialog, with ground truth summaries validated by Subject Matter Experts (SMEs). ClinicSum's effectiveness is evaluated through both automatic metrics (e.g., ROUGE, BERTScore) and expert human assessments. Results show that ClinicSum outperforms state-of-the-art PLMs, demonstrating superior precision, recall, and F1 scores in automatic evaluations and receiving high preference from SMEs in human assessment, making it a robust solution for automated clinical summarization.

IRSKG: Unified Intrusion Response System Knowledge Graph Ontology for Cyber Defense

Nov 23, 2024

Abstract:Cyberattacks are becoming increasingly difficult to detect and prevent due to their sophistication. In response, Autonomous Intelligent Cyber-defense Agents (AICAs) are emerging as crucial solutions. One prominent AICA is the Intrusion Response System (IRS), which is critical for mitigating threats after detection. The IRS uses several Tactics, Techniques, and Procedures (TTPs) to mitigate attacks and restore the infrastructure to normal operations. Continuous monitoring of the enterprise infrastructure is an essential TTP the IRS uses. However, each system serves different purposes to meet operational needs. Integrating these disparate sources for continuous monitoring increases pre-processing complexity and limits automation, ultimately prolonging the critical response time that attackers can exploit. We propose a unified IRS Knowledge Graph ontology (IRSKG) that streamlines the onboarding of new enterprise systems as a source for the AICAs. Our ontology can capture system monitoring logs and supplemental data, such as a rules repository containing the administrator-defined policies that dictate the IRS responses. In addition, our ontology permits us to incorporate dynamic changes to adapt to the evolving cyber-threat landscape. This robust yet concise design allows machine learning models to train effectively and recover a compromised system to its desired state autonomously with explainability.

A Survey on Privacy Attacks Against Digital Twin Systems in AI-Robotics

Jun 27, 2024

Abstract:Industry 4.0 has witnessed the rise of complex robots fueled by the integration of Artificial Intelligence/Machine Learning (AI/ML) and Digital Twin (DT) technologies. While these technologies offer numerous benefits, they also introduce potential privacy and security risks. This paper surveys privacy attacks targeting robots enabled by AI and DT models. Exfiltration and data leakage of ML models are discussed in addition to the potential extraction of models derived from first principles (e.g., physics-based). We also discuss design considerations for DT-integrated robotics, touching on the impact of ML model training, responsible AI and DT safeguards, data governance, and ethical considerations on the effectiveness of these attacks. We advocate for a trusted autonomy approach, emphasizing the need to combine robotics, AI, and DT technologies with robust ethical frameworks and trustworthiness principles for secure and reliable AI robotic systems.

From Questions to Insightful Answers: Building an Informed Chatbot for University Resources

May 13, 2024

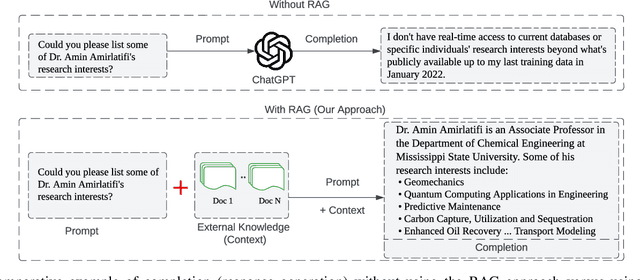

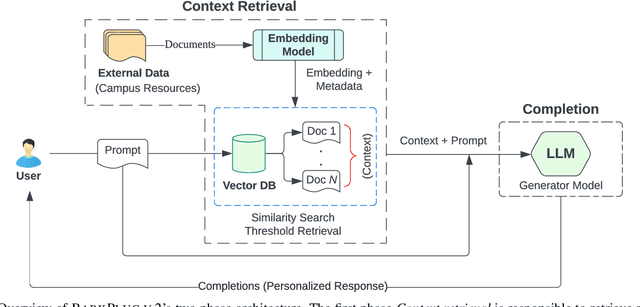

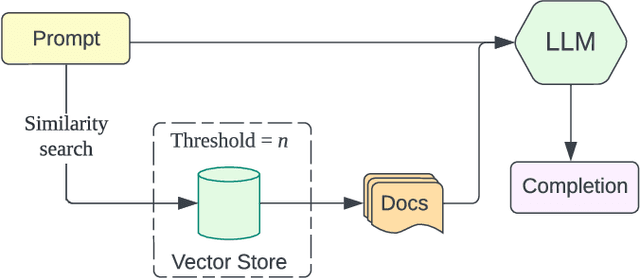

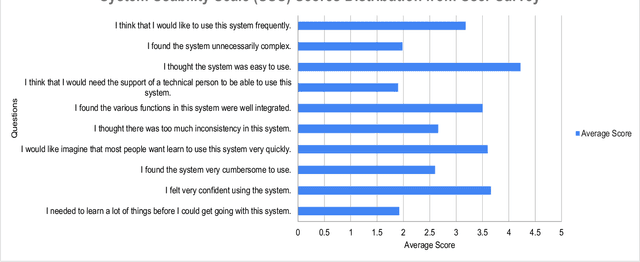

Abstract:This paper presents BARKPLUG V.2, a Large Language Model (LLM)-based chatbot system built using Retrieval Augmented Generation (RAG) pipelines to enhance the user experience and access to information within academic settings. The objective of BARKPLUG V.2 is to provide information to users about various campus resources, including academic departments, programs, campus facilities, and student resources in a university setting in an interactive fashion. Our system leverages university data as an external data corpus and ingests it into our RAG pipelines for domain-specific question-answering tasks. We evaluate the effectiveness of our system in generating accurate and pertinent responses for Mississippi State University, as a case study, using quantitative measures, employing frameworks such as Retrieval Augmented Generation Assessment (RAGAS). Furthermore, we evaluate the usability of this system via subjective satisfaction surveys using the System Usability Scale (SUS). Our system demonstrates impressive quantitative performance, with a mean RAGAS score of 0.96, and user experience, as validated by usability assessments.
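As background on the usability measure mentioned in this abstract, here is a small sketch of the standard System Usability Scale scoring rule for a single respondent. The function name and input layout are assumptions for illustration; the abstract does not describe how the authors processed their survey data.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one SUS questionnaire: 10 items, each answered on a 1-5 scale.

    Odd-numbered items (positively worded) contribute (response - 1);
    even-numbered items (negatively worded) contribute (5 - response).
    The summed contributions are multiplied by 2.5 to give a 0-100 score.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("each response must be between 1 and 5")
        if item_number % 2 == 1:
            total += response - 1  # odd-numbered item
        else:
            total += 5 - response  # even-numbered item
    return total * 2.5
```

A study-level SUS result is typically the mean of these per-respondent scores.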

MedInsight: A Multi-Source Context Augmentation Framework for Generating Patient-Centric Medical Responses using Large Language Models

Mar 13, 2024

Abstract:Large Language Models (LLMs) have shown impressive capabilities in generating human-like responses. However, their lack of domain-specific knowledge limits their applicability in healthcare settings, where contextual and comprehensive responses are vital. To address this challenge and enable the generation of patient-centric responses that are contextually relevant and comprehensive, we propose MedInsight, a novel retrieval augmented framework that augments LLM inputs (prompts) with relevant background information from multiple sources. MedInsight extracts pertinent details from the patient's medical record or consultation transcript. It then integrates information from authoritative medical textbooks and curated web resources based on the patient's health history and condition. By constructing an augmented context combining the patient's record with relevant medical knowledge, MedInsight generates enriched, patient-specific responses tailored for healthcare applications such as diagnosis, treatment recommendations, or patient education. Experiments on the MTSamples dataset validate MedInsight's effectiveness in generating contextually appropriate medical responses. Quantitative evaluation using the Ragas metric and TruLens for answer similarity and answer correctness demonstrates the model's efficacy. Furthermore, human evaluation studies involving Subject Matter Experts (SMEs) confirm MedInsight's utility, with moderate inter-rater agreement on the relevance and correctness of the generated responses.

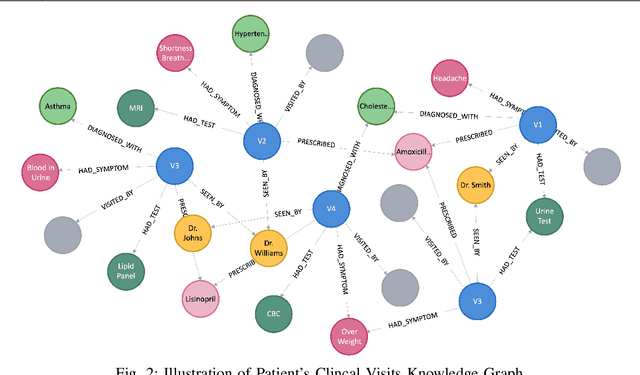

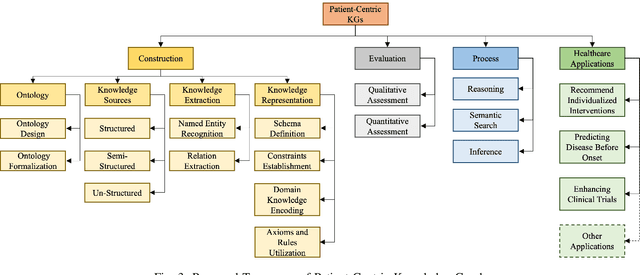

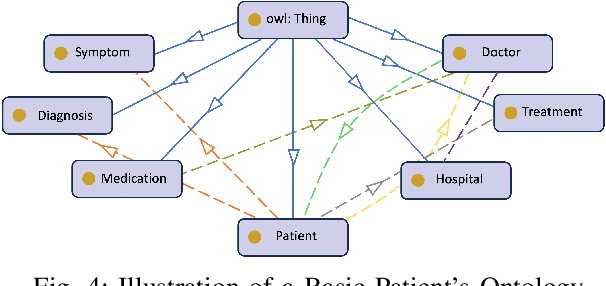

Patient-Centric Knowledge Graphs: A Survey of Current Methods, Challenges, and Applications

Feb 20, 2024

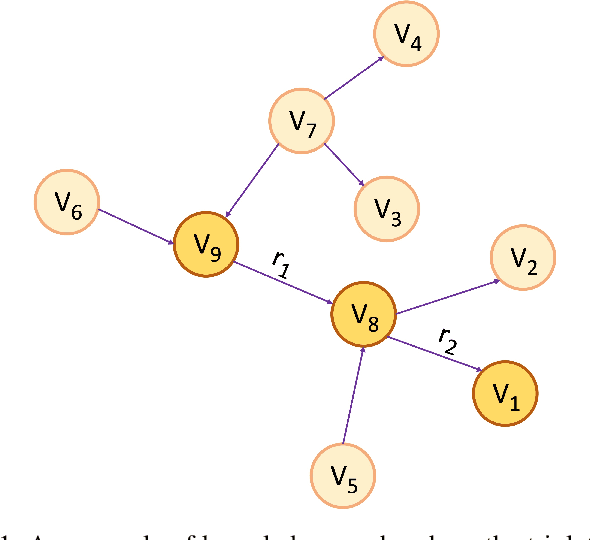

Abstract:Patient-Centric Knowledge Graphs (PCKGs) represent an important shift in healthcare that focuses on individualized patient care by mapping the patient's health information in a holistic and multi-dimensional way. PCKGs integrate various types of health data to provide healthcare professionals with a comprehensive understanding of a patient's health, enabling more personalized and effective care. This literature review explores the methodologies, challenges, and opportunities associated with PCKGs, focusing on their role in integrating disparate healthcare data and enhancing patient care through a unified health perspective. This review also discusses the complexities of PCKG development, including ontology design, data integration techniques, knowledge extraction, and structured representation of knowledge. It highlights advanced techniques such as reasoning, semantic search, and inference mechanisms essential in constructing and evaluating PCKGs for actionable healthcare insights. We further explore the practical applications of PCKGs in personalized medicine, emphasizing their significance in improving disease prediction and formulating effective treatment plans. Overall, this review provides a foundational perspective on the current state of the art and best practices of PCKGs, guiding future research and applications in this dynamic field.

LOCALINTEL: Generating Organizational Threat Intelligence from Global and Local Cyber Knowledge

Jan 18, 2024

Abstract:Security Operations Center (SoC) analysts gather threat reports from openly accessible global threat databases and customize them manually to suit a particular organization's needs. These analysts also depend on internal repositories, which act as a private local knowledge database for an organization. Credible cyber intelligence, critical operational details, and relevant organizational information are all stored in these local knowledge databases. Analysts undertake a labor-intensive task utilizing these global and local knowledge databases to manually create an organization's unique threat response and mitigation strategies. Recently, Large Language Models (LLMs) have shown the capability to efficiently process large, diverse knowledge sources. We leverage this ability to process global and local knowledge databases to automate the generation of organization-specific threat intelligence. In this work, we present LOCALINTEL, a novel automated knowledge contextualization system that, upon prompting, retrieves threat reports from the global threat repositories and uses its local knowledge database to contextualize them for a specific organization. LOCALINTEL comprises three key phases: global threat intelligence retrieval, local knowledge retrieval, and contextualized completion generation. The first retrieves intelligence from global threat repositories, while the second retrieves pertinent knowledge from the local knowledge database. Finally, the fusion of these knowledge sources is orchestrated through a generator to produce a contextualized completion.

Use of Graph Neural Networks in Aiding Defensive Cyber Operations

Jan 11, 2024

Abstract:In an increasingly interconnected world, where information is the lifeblood of modern society, regular cyber-attacks sabotage the confidentiality, integrity, and availability of digital systems and information. Additionally, cyber-attacks differ depending on the objective and evolve rapidly to evade defensive systems. However, a typical cyber-attack demonstrates a series of stages from attack initiation to final resolution, called an attack life cycle. These diverse characteristics and the relentless evolution of cyber-attacks have led cyber defense to adopt modern approaches like Machine Learning (ML) to bolster defensive measures and break the attack life cycle. Among the adopted ML approaches, Graph Neural Networks (GNNs) have emerged as a promising approach for enhancing the effectiveness of defensive measures due to their ability to process and learn from heterogeneous cyber threat data. In this paper, we look into the application of GNNs in helping to break each stage of one of the most renowned attack life cycles, the Lockheed Martin Cyber Kill Chain (CKC). We address each phase of the CKC and discuss how GNNs contribute to preparing for and preventing an attack from a defensive standpoint. Furthermore, we discuss open research areas and scope for further improvement.
