Deepti Gupta

AI Regulation and Capitalist Growth: Balancing Innovation, Ethics, and Global Governance

Apr 01, 2025

Abstract: Artificial Intelligence (AI) is increasingly central to economic growth, promising new efficiencies and markets. This economic significance has sparked debate over AI regulation: do rules and oversight bolster long-term growth by building trust and safeguarding the public, or do they constrain innovation and free enterprise? This paper examines the balance between AI regulation and capitalist ideals, focusing on how different approaches to AI data privacy can impact innovation in AI-driven applications. The central question is whether AI regulation enhances or inhibits growth in a capitalist economy. Our analysis synthesizes historical precedents, the current U.S. regulatory landscape, economic projections, legal challenges, and case studies of recent AI policies. We argue that carefully calibrated AI data privacy regulations, which balance innovation incentives with the public interest, can foster sustainable growth by building trust and ensuring responsible data use, while excessive regulation risks stifling innovation and entrenching incumbents.

Towards Adaptive AI Governance: Comparative Insights from the U.S., EU, and Asia

Apr 01, 2025

Abstract: Artificial intelligence (AI) trends vary significantly across global regions, shaping the trajectory of innovation, regulation, and societal impact. This variation influences how different regions approach AI development, balancing technological progress with ethical and regulatory considerations. This study conducts a comparative analysis of AI trends in the United States (US), the European Union (EU), and Asia, focusing on three key dimensions: generative AI, ethical oversight, and industrial applications. The US prioritizes market-driven innovation with minimal regulatory constraints, the EU enforces a precautionary risk-based framework emphasizing ethical safeguards, and Asia employs state-guided AI strategies that balance rapid deployment with regulatory oversight. Although these approaches reflect different economic models and policy priorities, their divergence poses challenges to international collaboration, regulatory harmonization, and the development of global AI standards. To address these challenges, this paper synthesizes regional strengths to propose an adaptive AI governance framework that integrates risk-tiered oversight, innovation accelerators, and strategic alignment mechanisms. By bridging governance gaps, this study offers actionable insights for fostering responsible AI development while ensuring a balance between technological progress, ethical imperatives, and regulatory coherence.

PrivComp-KG : Leveraging Knowledge Graph and Large Language Models for Privacy Policy Compliance Verification

Apr 30, 2024

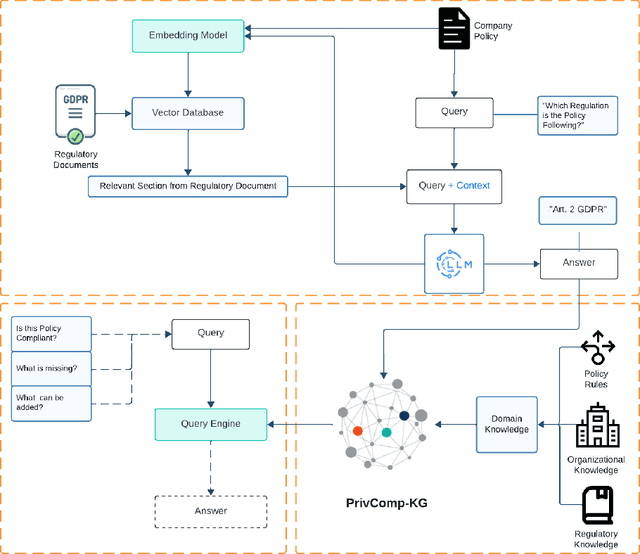

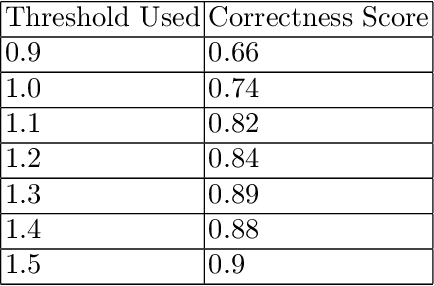

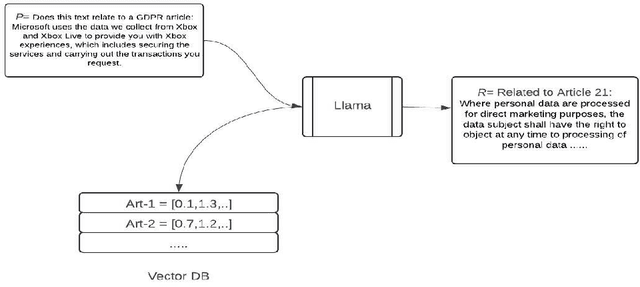

Abstract: Data protection and privacy are becoming increasingly crucial in the digital era. Numerous companies depend on third-party vendors and service providers to carry out critical functions within their operations, including data handling and storage. However, this reliance introduces potential vulnerabilities, as these vendors' security measures and practices may not always align with the standards expected by regulatory bodies. Businesses are required, often under penalty of law, to ensure compliance with evolving regulatory rules. Interpreting and implementing these regulations is challenging because of their complexity. Regulatory documents are extensive and demand significant effort to interpret, while vendor-drafted privacy policies often lack the detail required for full legal compliance, leading to ambiguity. To ensure a concise interpretation of the regulatory requirements and compliance of organizational privacy policies with those regulations, we propose a Large Language Model (LLM) and Semantic Web based approach for privacy compliance. In this paper, we develop the novel Privacy Policy Compliance Verification Knowledge Graph, PrivComp-KG. It is designed to efficiently store and retrieve comprehensive information concerning privacy policies, regulatory frameworks, and domain-specific knowledge pertaining to the legal landscape of privacy. Using Retrieval Augmented Generation, we match the relevant sections of a privacy policy with the corresponding regulatory rules. This information about individual privacy policies is populated into the PrivComp-KG. Combined with the domain context and rules, the PrivComp-KG can be queried to check whether each vendor's privacy policy complies with the relevant regulations. We demonstrate the relevance of the PrivComp-KG by verifying the compliance of privacy policy documents for various organizations.
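To make the idea of querying a knowledge graph for compliance concrete, the following is a minimal sketch and not the PrivComp-KG implementation. It assumes a toy graph stored as plain Python triples in place of a Semantic Web store. The predicate names (`requires`, `hasPolicySection`, `addresses`), the vendor name, and the requirement labels are all hypothetical.

```python
def find_unaddressed_requirements(triples, vendor, regulation):
    """Return requirements of `regulation` that no section of `vendor`'s policy addresses."""
    # Requirements the regulation imposes (hypothetical "requires" predicate).
    required = {o for s, p, o in triples if s == regulation and p == "requires"}
    # Sections belonging to this vendor's privacy policy.
    sections = {o for s, p, o in triples if s == vendor and p == "hasPolicySection"}
    # Requirements that at least one of those sections has been matched to.
    addressed = {o for s, p, o in triples if s in sections and p == "addresses"}
    return sorted(required - addressed)


# Hypothetical toy graph: one regulation, one vendor, two policy sections.
TRIPLES = [
    ("RegulationX", "requires", "right_to_erasure"),
    ("RegulationX", "requires", "breach_notification"),
    ("RegulationX", "requires", "data_retention_limit"),
    ("VendorA", "hasPolicySection", "VendorA_sec1"),
    ("VendorA", "hasPolicySection", "VendorA_sec2"),
    ("VendorA_sec1", "addresses", "right_to_erasure"),
    ("VendorA_sec2", "addresses", "data_retention_limit"),
]

gaps = find_unaddressed_requirements(TRIPLES, "VendorA", "RegulationX")
```

In this sketch the `addresses` links stand in for the output of the retrieval step that matches policy sections to regulatory rules. A vendor is treated as compliant when the returned list is empty.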

A Learning-based Declarative Privacy-Preserving Framework for Federated Data Management

Jan 22, 2024

Abstract: Balancing privacy and accuracy is challenging for federated query processing over multiple private data silos. In this work, we demonstrate an end-to-end workflow for automating an emerging privacy-preserving technique that uses a deep learning model, trained with the Differentially-Private Stochastic Gradient Descent (DP-SGD) algorithm, to replace portions of the actual data when answering a query. Our proposed declarative privacy-preserving workflow allows users to specify "what private information to protect" rather than "how to protect" it. Under the hood, the system automatically chooses query-model transformation plans as well as hyper-parameters. At the same time, the workflow allows human experts to review and tune the selected privacy-preserving mechanism for audit, compliance, and optimization purposes.
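For readers unfamiliar with DP-SGD, the core update can be sketched as below. This is a generic, minimal illustration of the algorithm's standard step (clip each example's gradient, sum, add Gaussian noise, average) and not the framework's code. The function names and parameters such as `max_norm` and `noise_multiplier` are chosen here for illustration, and the sketch does no privacy accounting.

```python
import math
import random


def clip_gradient(grad, max_norm):
    """Scale a per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(weights, per_example_grads, lr, max_norm, noise_multiplier, rng):
    """One DP-SGD update: clip each example's gradient, sum, add noise, average."""
    dim = len(weights)
    summed = [0.0] * dim
    for grad in per_example_grads:
        clipped = clip_gradient(grad, max_norm)
        for i in range(dim):
            summed[i] += clipped[i]
    # Noise standard deviation is tied to the clipping bound.
    sigma = noise_multiplier * max_norm
    batch_size = len(per_example_grads)
    noisy_mean = [(summed[i] + rng.gauss(0.0, sigma)) / batch_size for i in range(dim)]
    return [w - lr * g for w, g in zip(weights, noisy_mean)]


# Usage with made-up gradients; a fixed seed keeps the run repeatable.
rng = random.Random(0)
new_weights = dp_sgd_step(
    weights=[1.0, 1.0],
    per_example_grads=[[3.0, 4.0], [0.5, -0.5]],
    lr=0.1,
    max_norm=1.0,
    noise_multiplier=1.1,
    rng=rng,
)
```

Clipping bounds how much any single record can move the model, and the noise scale is set relative to that bound. The clipping bound, noise multiplier, and learning rate are examples of the hyper-parameters the abstract says the system selects automatically.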

Privacy-Preserving Data Sharing in Agriculture: Enforcing Policy Rules for Secure and Confidential Data Synthesis

Nov 27, 2023

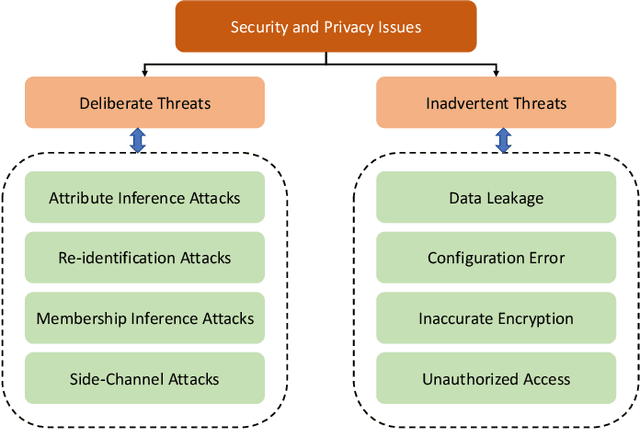

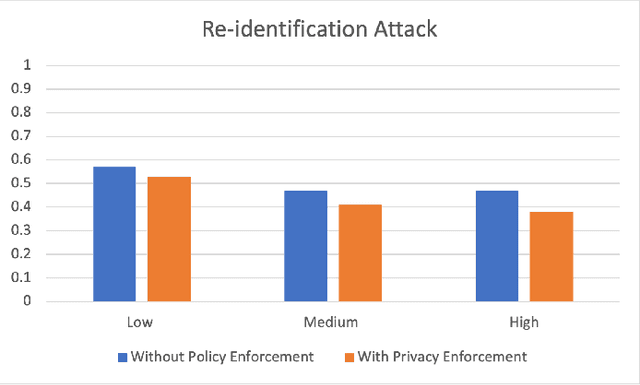

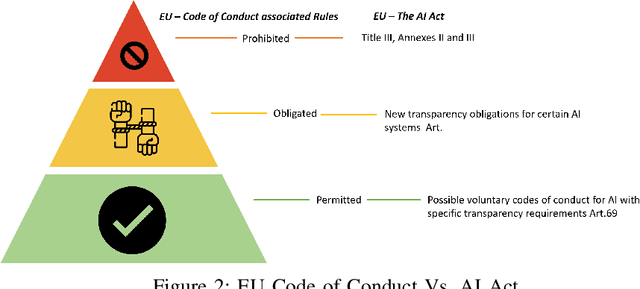

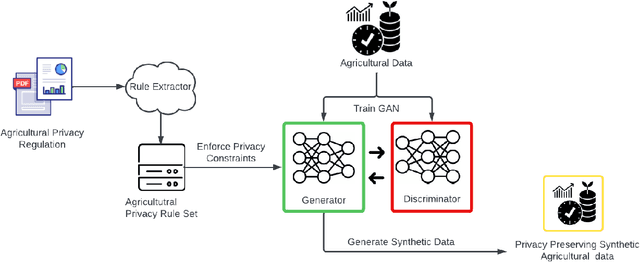

Abstract: Big Data empowers the farming community with the information needed to optimize resource usage, increase productivity, and enhance the sustainability of agricultural practices. The use of Big Data in farming requires the collection and analysis of data from various sources such as sensors, satellites, and farmer surveys. While Big Data can provide the farming community with valuable insights and improve efficiency, there is significant concern regarding the security of this data as well as the privacy of the participants. Privacy regulations, such as the EU GDPR, the EU Code of Conduct on agricultural data sharing by contractual agreement, and the proposed EU AI law, have been created to address the issue of data privacy and provide specific guidelines on when and how data can be shared between organizations. To make confidential agricultural data widely available for Big Data analysis without violating the privacy of the data subjects, we consider privacy-preserving methods of data sharing in agriculture. Deep learning-based synthetic data generation has been proposed for privacy-preserving data sharing. However, there is a lack of compliance with documented data privacy policies in such privacy-preserving efforts. In this study, we propose a novel framework for enforcing privacy policy rules in privacy-preserving data generation algorithms. We explore several available agricultural codes of conduct, extract knowledge related to the privacy constraints in data, and use the extracted knowledge to define privacy bounds in a privacy-preserving generative model. We use our framework to generate synthetic agricultural data and present experimental results that demonstrate the utility of the synthetic dataset in downstream tasks. We also show that our framework can evade potential threats and secure data based on applicable regulatory policy rules.

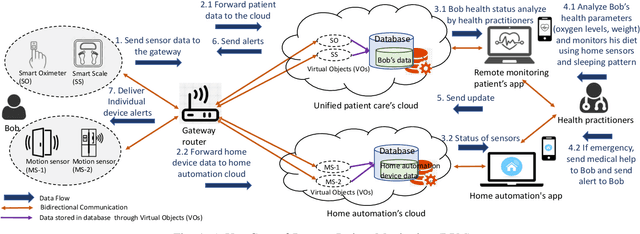

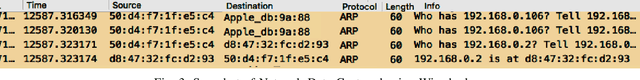

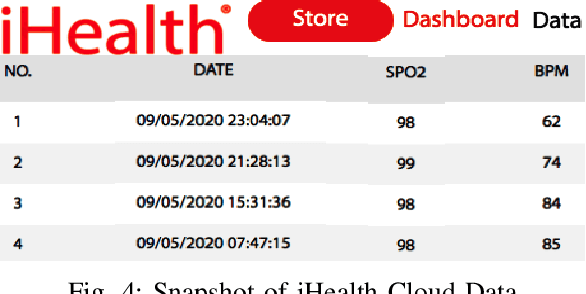

Detecting Anomalous User Behavior in Remote Patient Monitoring

Jun 22, 2021

Abstract: The growth in Remote Patient Monitoring (RPM) services using wearable and non-wearable Internet of Medical Things (IoMT) devices promises to improve the quality of diagnosis and facilitate timely treatment for a gamut of medical conditions. At the same time, the proliferation of IoMT devices increases the potential for malicious activities that can lead to catastrophic results, including theft of personal information, data breaches, and compromised medical devices, putting human lives at risk. IoMT devices generate a tremendous amount of data that reflects user behavior patterns, including both personal and day-to-day social activities along with daily routine health monitoring. In this context, anomalies can arise for various reasons, including unexpected user behavior, faulty sensors, or abnormal values from malicious or compromised devices. To address this problem, there is a pressing need for a framework that secures the smart health care infrastructure by identifying and mitigating anomalies. In this paper, we present an anomaly detection model for RPM utilizing IoMT and smart home devices. We propose Hidden Markov Model (HMM) based anomaly detection that analyzes normal user behavior in the context of RPM, comprising both smart home and smart health devices, and identifies anomalous user behavior. We design a testbed with multiple IoMT devices and home sensors to collect data, and train the HMM on network and user behavioral data. The proposed HMM-based anomaly detection model achieved over 98% accuracy in identifying anomalies in the context of RPM.
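As a rough illustration of how an HMM can flag anomalous behavior, the sketch below scores an event sequence with the scaled forward algorithm and marks it anomalous when its average per-event log-likelihood falls below a threshold. It is not the paper's model. The two hidden states, the two observation symbols, every probability, and the threshold are hypothetical, and the parameters are set by hand here rather than trained from testbed data.

```python
import math


def sequence_log_likelihood(obs, start, trans, emit):
    """Scaled forward algorithm: returns log P(obs | HMM) for a non-empty sequence."""
    n_states = len(start)
    alpha = [start[s] * emit[s][obs[0]] for s in range(n_states)]
    log_lik = 0.0
    for t in range(len(obs)):
        if t > 0:
            # Propagate through the transition matrix, then weight by the emission.
            alpha = [
                sum(alpha[p] * trans[p][s] for p in range(n_states)) * emit[s][obs[t]]
                for s in range(n_states)
            ]
        norm = sum(alpha)
        if norm == 0.0:
            return float("-inf")  # sequence is impossible under the model
        log_lik += math.log(norm)
        alpha = [a / norm for a in alpha]  # rescale to avoid underflow
    return log_lik


def is_anomalous(obs, start, trans, emit, threshold):
    """Flag a sequence whose average per-event log-likelihood is below threshold."""
    return sequence_log_likelihood(obs, start, trans, emit) / len(obs) < threshold


# Hypothetical model: state 0 = "routine", state 1 = "irregular".
# Observation symbols: 0 = expected sensor event, 1 = unexpected sensor event.
START = [0.9, 0.1]
TRANS = [[0.95, 0.05], [0.30, 0.70]]
EMIT = [[0.9, 0.1], [0.2, 0.8]]
THRESHOLD = -0.5  # assumed value; in practice it would be tuned on held-out data

routine_flag = is_anomalous([0, 0, 0, 0], START, TRANS, EMIT, THRESHOLD)
unusual_flag = is_anomalous([1, 1, 1, 1], START, TRANS, EMIT, THRESHOLD)
```

Averaging the log-likelihood over the sequence length keeps scores comparable across sequences of different lengths, which is one common design choice for likelihood-based anomaly detection.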
