Tosin Ige

MAD-OOD: A Deep Learning Cluster-Driven Framework for an Out-of-Distribution Malware Detection and Classification

Dec 19, 2025

Abstract: Out-of-distribution (OOD) detection remains a critical challenge in malware classification because of the substantial intra-family variability introduced by polymorphic and metamorphic malware variants. Most existing deep-learning-based malware detectors rely on closed-world assumptions and fail to adequately model this intra-class variation, resulting in degraded performance when confronted with previously unseen malware families. This paper presents MAD-OOD, a novel two-stage, cluster-driven deep learning framework for robust OOD malware detection and classification. In the first stage, malware family embeddings are modeled using class-conditional spherical decision boundaries derived from Gaussian Discriminant Analysis (GDA), enabling statistically grounded separation of in-distribution and OOD samples without requiring OOD data during training. Z-score-based distance analysis across multiple class centroids is employed to reliably identify anomalous samples in the latent space. In the second stage, a deep neural network integrates cluster-based predictions, refined embeddings, and supervised classifier outputs to enhance final classification accuracy. Extensive evaluations on benchmark malware datasets comprising 25 known families and multiple novel OOD variants demonstrate that MAD-OOD significantly outperforms state-of-the-art OOD detection methods, achieving an AUC of up to 0.911 on unseen malware families. The proposed framework provides a scalable, interpretable, and statistically principled solution for real-world malware detection and anomaly identification in evolving cybersecurity environments.
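The z-score distance test mentioned in the abstract can be illustrated with a minimal sketch. This is not the paper's implementation: the function names, the 2-D toy embeddings, the use of empirical distance statistics in place of a fitted GDA model, and the threshold of 3.0 are all assumptions made for illustration.

```python
import math
import random
import statistics


def fit_class_stats(embeddings_by_class):
    """Per class: centroid, plus mean and std of member-to-centroid distances."""
    stats = {}
    for label, vectors in embeddings_by_class.items():
        dim = len(vectors[0])
        centroid = [statistics.fmean([v[i] for v in vectors]) for i in range(dim)]
        dists = [math.dist(v, centroid) for v in vectors]
        stats[label] = (centroid, statistics.fmean(dists), statistics.pstdev(dists))
    return stats


def ood_score(x, stats):
    """Return (label, z) for the class whose distance z-score is smallest."""
    best_label, best_z = None, math.inf
    for label, (centroid, mu, sigma) in stats.items():
        # How unusual is this distance compared with the class's own members?
        z = (math.dist(x, centroid) - mu) / (sigma + 1e-12)
        if z < best_z:
            best_label, best_z = label, z
    return best_label, best_z


def is_ood(x, stats, threshold=3.0):
    """Flag x as OOD when it is far (in z-score terms) from every class centroid."""
    return ood_score(x, stats)[1] > threshold


# Hypothetical 2-D "embeddings" for two known families (toy data, not from the paper).
rng = random.Random(0)


def make_cluster(cx, cy, n=200):
    return [[rng.gauss(cx, 1.0), rng.gauss(cy, 1.0)] for _ in range(n)]


embeddings_by_class = {
    "family_a": make_cluster(0.0, 0.0),
    "family_b": make_cluster(10.0, 10.0),
}
stats = fit_class_stats(embeddings_by_class)

for point in ([0.5, -0.3], [5.0, 5.0]):
    label, z = ood_score(point, stats)
    verdict = "OOD" if is_ood(point, stats) else "in-distribution"
    print(point, label, round(z, 2), verdict)
```

Thresholding a distance to a centroid gives a spherical boundary around each class, which is the geometric idea the abstract describes. A sample is rejected only when it falls outside every sphere, so no OOD examples are needed to fit the statistics.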

Deep Learning-Based Speech and Vision Synthesis to Improve Phishing Attack Detection through a Multi-layer Adaptive Framework

Feb 27, 2024

Abstract: Attackers continually refine their phishing techniques to bypass existing state-of-the-art detection methods, posing major challenges to researchers in both industry and academia because current approaches cannot detect complex phishing attacks. Current anti-phishing methods therefore remain vulnerable, owing to the increasingly sophisticated tactics attackers adopt and the rate at which new evasion tactics are developed. In this research, we propose an adaptable framework that combines deep learning and Random Forest to read images, synthesize speech from deep-fake videos, and apply natural language processing across multiple prediction layers, significantly increasing the performance of machine learning models for phishing attack detection.

An Investigation into the Performances of the State-of-the-art Machine Learning Approaches for Various Cyber-attack Detection: A Survey

Feb 26, 2024

Abstract: To secure computers and information systems from attackers who exploit system vulnerabilities to commit cybercrime, several methods have been proposed for real-time detection of vulnerabilities. Of all the proposed methods, machine learning has been the most effective, with capabilities ranging from early detection of software vulnerabilities to real-time detection of an ongoing compromise in a system. Because there are different types of cyberattacks, each of the existing state-of-the-art machine learning models depends on a different training algorithm, which also affects its suitability for detecting a particular type of cyberattack. In this research, we analyzed the current state-of-the-art machine learning models for detecting different types of cyberattacks over the past 10 years, with a major emphasis on the most recent works, in a comparative study that identifies the knowledge gaps where work is still needed for each category of cyberattack.

Performance Comparison and Implementation of Bayesian Variants for Network Intrusion Detection

Aug 22, 2023

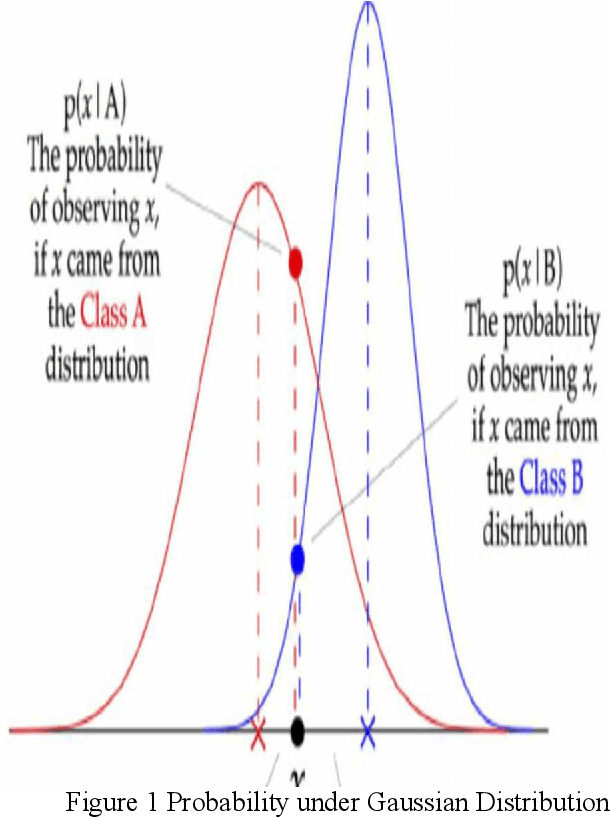

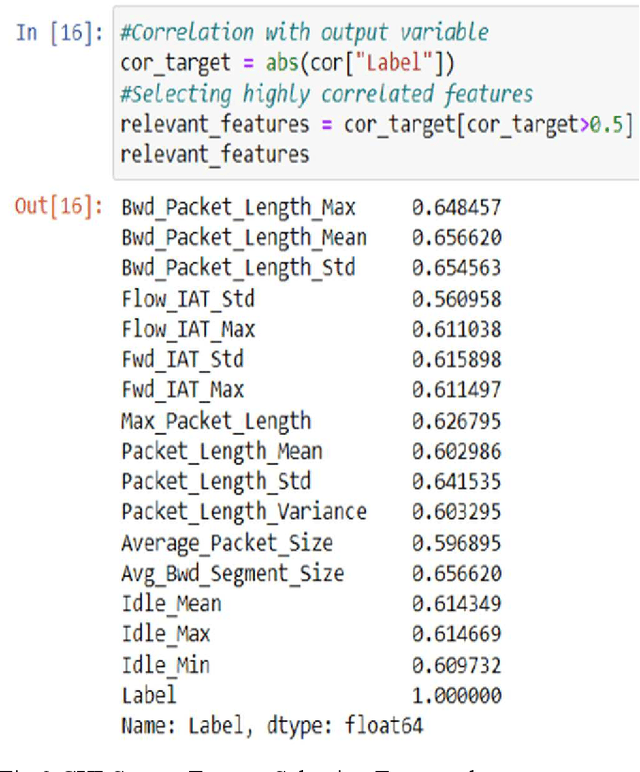

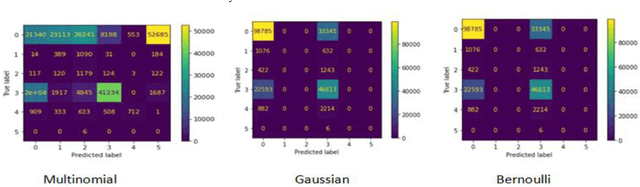

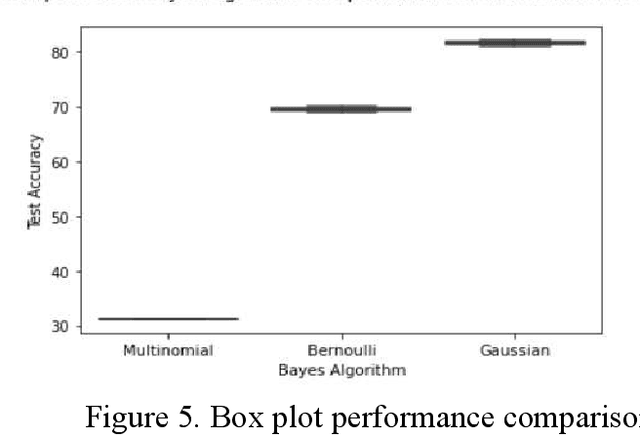

Abstract: Bayesian classifiers perform well when the features are completely independent of one another, an assumption that does not always hold in real-world applications. The aim of this study is to implement and compare the performance of each variant of the Bayesian classifier (Multinomial, Bernoulli, and Gaussian) on anomaly detection in network intrusion, and to investigate whether there is any association between each variant's assumption and its performance. Our investigation showed that each variant of the Bayesian algorithm blindly follows its assumption regardless of the properties of the features, and that the assumption is the single most important factor influencing accuracy. Experimental results show that Bernoulli achieves 69.9% test accuracy (71% train), Multinomial 31.2% test accuracy (31.2% train), and Gaussian 81.69% test accuracy (82.84% train). Going deeper, we found that the performance of each Naive Bayes variant is largely due to its assumption: the Gaussian classifier performed best on anomaly detection because it assumes that features follow continuous normal distributions, while the Multinomial classifier performed dismally because it simply assumes discrete, multinomially distributed features.
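To make the point about assumptions concrete, here is a minimal from-scratch sketch, not the study's code or data. It fits a Gaussian and a Bernoulli Naive Bayes model to the same synthetic continuous features. The data, the function names, and the binarization threshold of 0.0 are assumptions chosen for illustration.

```python
import math
import random


def fit_gaussian_nb(X, y):
    """Per class: log prior, per-feature mean and variance."""
    model = {}
    for c in sorted(set(y)):
        rows = [x for x, label in zip(X, y) if label == c]
        n, dim = len(rows), len(rows[0])
        means = [sum(r[j] for r in rows) / n for j in range(dim)]
        variances = [sum((r[j] - means[j]) ** 2 for r in rows) / n + 1e-9
                     for j in range(dim)]
        model[c] = (math.log(n / len(X)), means, variances)
    return model


def predict_gaussian_nb(model, x):
    def log_posterior(c):
        log_prior, means, variances = model[c]
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * v) - (xj - m) ** 2 / (2 * v)
            for xj, m, v in zip(x, means, variances))
    return max(model, key=log_posterior)


def fit_bernoulli_nb(X, y, binarize=0.0):
    """Per class: log prior and Laplace-smoothed P(feature > binarize | class)."""
    model = {}
    for c in sorted(set(y)):
        rows = [[1 if v > binarize else 0 for v in x]
                for x, label in zip(X, y) if label == c]
        n, dim = len(rows), len(rows[0])
        probs = [(sum(r[j] for r in rows) + 1) / (n + 2) for j in range(dim)]
        model[c] = (math.log(n / len(X)), probs)
    return model, binarize


def predict_bernoulli_nb(fitted, x):
    model, binarize = fitted
    bits = [1 if v > binarize else 0 for v in x]

    def log_posterior(c):
        log_prior, probs = model[c]
        return log_prior + sum(math.log(p if b else 1 - p)
                               for b, p in zip(bits, probs))
    return max(model, key=log_posterior)


def accuracy(predict, fitted, data):
    return sum(predict(fitted, x) == label for x, label in data) / len(data)


# Synthetic continuous features: both classes are positive, differing only in mean.
rng = random.Random(42)


def sample(n, mean, label):
    return [([rng.gauss(mean, 0.5), rng.gauss(mean, 0.5)], label) for _ in range(n)]


train = sample(300, 3.0, 0) + sample(300, 5.0, 1)
test = sample(100, 3.0, 0) + sample(100, 5.0, 1)
X_train = [x for x, _ in train]
y_train = [label for _, label in train]

gaussian_acc = accuracy(predict_gaussian_nb, fit_gaussian_nb(X_train, y_train), test)
bernoulli_acc = accuracy(predict_bernoulli_nb, fit_bernoulli_nb(X_train, y_train), test)
print("Gaussian NB accuracy:", gaussian_acc)
print("Bernoulli NB accuracy:", bernoulli_acc)
```

Because every feature value is positive, the Bernoulli model binarizes all of them to 1 and can no longer tell the classes apart. The Gaussian model uses the difference in means directly. The models see identical data, and only the distributional assumption differs.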

Ambient Technology & Intelligence

May 18, 2023

Abstract: Today's population is a mixture of young and older individuals, people with special needs, and people who can care for themselves. Over 1 billion people are estimated to be disabled; this figure corresponds to about 15% of the world's population, with 3.8% (approximately 190 million people) accounting for people aged 15 and up (World Health Organization, 2011). The number of people with disabilities is rising because of the increase in chronic health conditions, among other factors. These factors have made the need for proper care facilities urgent in today's society. Several care facilities have been built to help people with disabilities live their everyday lives and not be left out of the community.

Enhancing Border Security and Countering Terrorism Through Computer Vision: a Field of Artificial Intelligence

Mar 06, 2023

Abstract: Border security has been a persistent problem at international borders, especially when it comes to preventing the illegal movement of weapons, contraband, and drugs and combating illegal or undocumented immigration, while at the same time ensuring that lawful trade, economic prosperity, and national sovereignty across the border are maintained. In this research work, we used the Open Source Computer Vision library (OpenCV) and the AdaBoost algorithm to develop a model that can detect a moving object from afar, classify it, automatically capture the full image and the face of the individual separately, and then run a background check against worldwide databases while predicting whether the individual is a potential threat, an intending immigrant, or a potential terrorist or extremist, and then raise an audible alarm. Our model can be deployed on any camera device and mounted at any international border. There are two stages. First, we developed a model based on OpenCV computer vision algorithms that detects human movement from afar and automatically captures both the face and the full image of the person separately. The second stage automatically triggers a background check on the detected person, checking against several databases worldwide to determine the person's admissibility from afar. If the individual is inadmissible, the system automatically alerts border officials with the person's image and other details; if the individual bypasses the border officials, the system can detect this and alert the authorities with the person's images and other details. All these operations are performed from afar by the AI-powered camera before the individual reaches the border.

* 10 pages, 8 figures, Conference publication

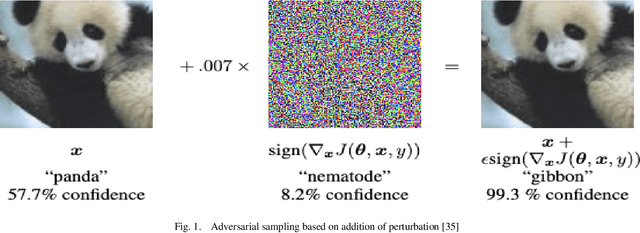

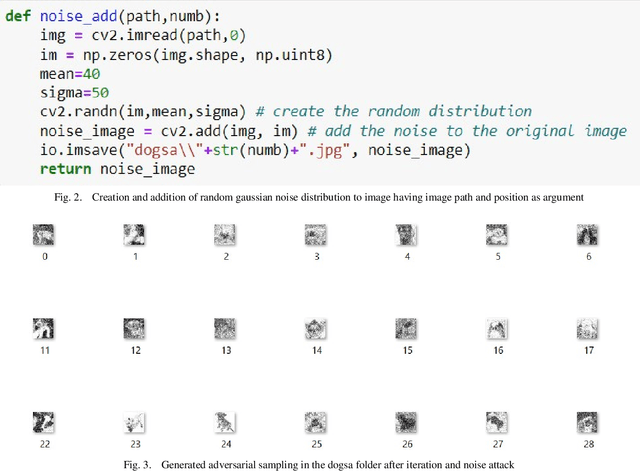

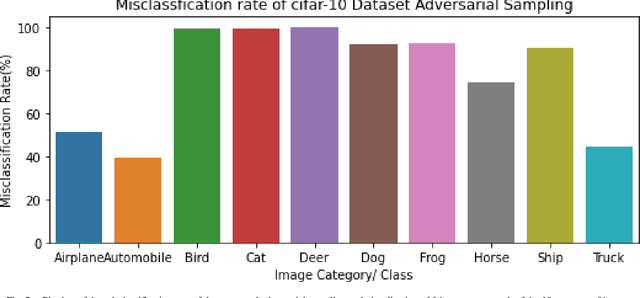

Adversarial Sampling for Fairness Testing in Deep Neural Network

Mar 06, 2023

Abstract: In this research, we focus on using adversarial sampling to test the fairness of a deep neural network's predictions across different classes of images in a given dataset. Several frameworks have been proposed to ensure the robustness of machine learning models against adversarial attacks, including adversarial training algorithms, but adversarial training tends to cause disparities in accuracy and robustness among different groups. Our research aims to use adversarial sampling to test for fairness in the predictions of a deep neural network model across different classes or categories of images in a given dataset. We successfully demonstrated a new method of ensuring fairness across various groups of inputs in a deep neural network classifier. We trained our neural network model on the original images only, without training it on perturbed or attacked images. When we fed the adversarial samples to our model, it was able to predict the original category of the image each adversarial sample belongs to. We also introduced the separation-of-concerns concept from software engineering: an additional standalone filter layer heavily removes the noise or attack from a perturbed image before automatically passing it to the network for classification, which gave an accuracy of 93.3%. The CIFAR-10 dataset has ten categories, so to account for fairness we applied our hypothesis to each category and obtained consistent results and accuracy.
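The separation-of-concerns idea, a standalone filter placed in front of an unmodified classifier, can be sketched as follows. The abstract does not say which filter the authors used, so the median filter here is an assumed stand-in. The toy brightness classifier and the impulse-style perturbation are also hypothetical.

```python
import statistics


def median_filter(image, radius=1):
    """Replace each pixel with the median of its neighbourhood (clipped at edges)."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            window = [image[a][b]
                      for a in range(max(0, i - radius), min(h, i + radius + 1))
                      for b in range(max(0, j - radius), min(w, j + radius + 1))]
            out[i][j] = statistics.median(window)
    return out


def toy_classifier(image):
    """Hypothetical stand-in for the trained network: thresholds mean brightness."""
    mean = sum(map(sum, image)) / (len(image) * len(image[0]))
    return "bright" if mean > 0.5 else "dark"


def classify_with_filter(image, classifier=toy_classifier, denoise=median_filter):
    """The filter is its own stage; the classifier is never retrained or modified."""
    return classifier(denoise(image))


# A clean dark 6x6 image, and a copy with four isolated high-intensity pixels.
clean = [[0.2] * 6 for _ in range(6)]
perturbed = [row[:] for row in clean]
for i, j in [(1, 1), (1, 4), (4, 1), (4, 4)]:
    perturbed[i][j] = 5.0

print("clean:", toy_classifier(clean))
print("perturbed, no filter:", toy_classifier(perturbed))
print("perturbed, with filter:", classify_with_filter(perturbed))
```

Keeping the filter separate means the classifier only ever sees inputs close to its training distribution. It also means the filter can be swapped or tuned without touching the network.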

* 7 pages, 5 figures, International Journal of Advanced Computer Science and Application

AI Powered Anti-Cyber Bullying System using Machine Learning Algorithm of Multinomial Naive Bayes and Optimized Linear Support Vector Machine

Jul 25, 2022

Abstract: "Unless and until our society recognizes cyber bullying for what it is, the suffering of thousands of silent victims will continue." ~ Anna Maria Chavez. There has been a series of research efforts on cyber bullying, but none has provided a reliable solution. In this research work, we address this by developing a model capable of detecting and intercepting incoming and outgoing bullying messages with 92% accuracy. We also developed an automated chatbot messaging system to test our model, leading to an Artificial Intelligence powered anti-cyber bullying system that uses the Multinomial Naive Bayes (MNB) and optimized linear Support Vector Machine (SVM) machine learning algorithms. Our model is able to detect and intercept outgoing and incoming bullying messages and take immediate action.
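As a rough illustration of the MNB half of such a system, here is a minimal bag-of-words sketch. It is not the authors' model: the eight training messages are invented toy examples, the function names are hypothetical, and the optimized linear SVM is omitted.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    return text.lower().split()


def fit_mnb(messages, labels, alpha=1.0):
    """Count words per class; alpha is the Laplace smoothing constant."""
    word_counts = defaultdict(Counter)
    class_counts = Counter(labels)
    for text, label in zip(messages, labels):
        word_counts[label].update(tokenize(text))
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, class_counts, vocab, alpha


def predict_mnb(model, text):
    word_counts, class_counts, vocab, alpha = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(class_counts[label] / total_docs)
        for w in tokenize(text):
            if w in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[label][w] + alpha)
                                  / (total_words + alpha * len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


def intercept(model, text):
    """Return the message if it may be delivered, or None if it is blocked."""
    return None if predict_mnb(model, text) == "bullying" else text


# Invented toy training data, for illustration only.
messages = [
    "you are stupid and ugly", "nobody likes you loser",
    "you are such a loser", "shut up you idiot",
    "see you at lunch tomorrow", "great job on the project",
    "are you coming to practice", "thanks for the help today",
]
labels = ["bullying"] * 4 + ["ok"] * 4
model = fit_mnb(messages, labels)

for text in ("you are a stupid loser", "great job today"):
    print(repr(text), "->", predict_mnb(model, text))
```

The same `intercept` check could be applied to both incoming and outgoing messages, matching the two directions the abstract mentions.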

* 5 pages
