William Marfo

Reducing Communication Overhead in Federated Learning for Network Anomaly Detection with Adaptive Client Selection

Mar 19, 2025

Abstract: Communication overhead in federated learning (FL) poses a significant challenge for network anomaly detection systems, where diverse client configurations and network conditions impact efficiency and detection accuracy. Existing approaches attempt optimization individually but struggle to balance reduced overhead with performance. This paper presents an adaptive FL framework combining batch size optimization, client selection, and asynchronous updates for efficient anomaly detection. Using UNSW-NB15 for general network traffic and ROAD for automotive networks, our framework reduces communication overhead by 97.6% (700.0s to 16.8s) while maintaining comparable accuracy (95.10% vs. 95.12%). The Mann-Whitney U test confirms significant improvements (p < 0.05). Profiling analysis reveals efficiency gains via reduced GPU operations and memory transfers, ensuring robust detection across varying client conditions.
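The abstract reports significance via the Mann-Whitney U test without showing the computation. As a minimal sketch of how the U statistic is obtained from two samples, the pure-Python function below ranks the pooled observations (ties receive the mean of their ranks) and derives U for each sample. The function names and the timing values in the usage lines are hypothetical illustrations, not data or code from the paper.

```python
def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(sample_a, sample_b):
    """Return (U_a, U_b), the Mann-Whitney U statistics of the two samples."""
    combined = list(sample_a) + list(sample_b)
    ranks = average_ranks(combined)
    n_a, n_b = len(sample_a), len(sample_b)
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return u_a, u_b


# Hypothetical per-run communication times in seconds (made up for illustration).
adaptive_runs = [3.1, 2.9, 3.4, 3.0]
baseline_runs = [41.2, 39.8, 40.5, 42.1]
u_adaptive, u_baseline = mann_whitney_u(adaptive_runs, baseline_runs)
```

A U value near zero for one sample means almost all of its observations rank below those of the other sample. Turning U into a p-value needs the exact null distribution or a normal approximation, which this sketch leaves out.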

Federated Learning for Efficient Condition Monitoring and Anomaly Detection in Industrial Cyber-Physical Systems

Jan 28, 2025

Abstract: Detecting and localizing anomalies in cyber-physical systems (CPS) has become increasingly challenging as systems grow in complexity, particularly due to varying sensor reliability and node failures in distributed environments. While federated learning (FL) provides a foundation for distributed model training, existing approaches often lack mechanisms to address these CPS-specific challenges. This paper introduces an enhanced FL framework with three key innovations: adaptive model aggregation based on sensor reliability, dynamic node selection for resource optimization, and Weibull-based checkpointing for fault tolerance. The proposed framework ensures reliable condition monitoring while tackling the computational and reliability challenges of industrial CPS deployments. Experiments on the NASA Bearing and Hydraulic System datasets demonstrate superior performance compared to state-of-the-art FL methods, achieving 99.5% AUC-ROC in anomaly detection and maintaining accuracy even under node failures. Statistical validation using the Mann-Whitney U test confirms significant improvements, with a p-value less than 0.05, in both detection accuracy and computational efficiency across various operational scenarios.
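The abstract does not say how the Weibull distribution drives checkpointing. One plausible reading, sketched here purely as an assumption, is to model node lifetime as Weibull-distributed and checkpoint before the failure probability since the last checkpoint exceeds a chosen bound. The function names, the shape and scale parameters, and the 10% bound are all hypothetical.

```python
import math


def weibull_survival(t, shape, scale):
    """Probability that a node is still alive at time t under a Weibull model."""
    return math.exp(-((t / scale) ** shape))


def checkpoint_interval(max_failure_prob, shape, scale):
    """Largest interval t for which P(failure before t) <= max_failure_prob.

    Solves 1 - exp(-(t / scale) ** shape) = p for t.
    """
    if not 0.0 < max_failure_prob < 1.0:
        raise ValueError("max_failure_prob must be in (0, 1)")
    return scale * (-math.log(1.0 - max_failure_prob)) ** (1.0 / shape)


# Hypothetical parameters: shape > 1 models wear-out failures, scale is in seconds.
interval = checkpoint_interval(max_failure_prob=0.10, shape=1.5, scale=200.0)
```

With a shape parameter above 1 the hazard rate grows over time, so a fixed failure bound yields a finite, parameter-dependent interval instead of a hand-tuned constant.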

Adaptive Client Selection in Federated Learning: A Network Anomaly Detection Use Case

Jan 25, 2025

Abstract: Federated Learning (FL) has become a widely used approach for training machine learning models on decentralized data, addressing the significant privacy concerns associated with traditional centralized methods. However, the efficiency of FL relies on effective client selection and robust privacy preservation mechanisms. Ineffective client selection can result in suboptimal model performance, while inadequate privacy measures risk exposing sensitive data. This paper introduces a client selection framework for FL that incorporates differential privacy and fault tolerance. The proposed adaptive approach dynamically adjusts the number of selected clients based on model performance and system constraints, ensuring privacy through the addition of calibrated noise. The method is evaluated on a network anomaly detection use case using the UNSW-NB15 and ROAD datasets. Results demonstrate up to a 7% improvement in accuracy and a 25% reduction in training time compared to the FedL2P approach. Additionally, the study highlights trade-offs between privacy budgets and model performance, with higher privacy budgets leading to reduced noise and improved accuracy. While the fault tolerance mechanism introduces a slight performance decrease, it enhances robustness against client failures. Statistical validation using the Mann-Whitney U test confirms the significance of these improvements, with results achieving a p-value of less than 0.05.
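The trade-off between privacy budget and noise can be made concrete with the Laplace mechanism, where the noise scale is the sensitivity divided by the budget ε. The abstract does not state which noise mechanism the paper uses, so Laplace noise is an assumption here, and the function names and values are hypothetical.

```python
import random


def laplace_scale(sensitivity, epsilon):
    """Noise scale b = sensitivity / epsilon; a larger budget gives less noise."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return sensitivity / epsilon


def add_laplace_noise(values, sensitivity, epsilon, rng):
    """Add Laplace(0, b) noise to each value.

    The difference of two independent exponential variables with mean b
    follows a Laplace distribution with scale b.
    """
    scale = laplace_scale(sensitivity, epsilon)
    return [
        v + rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
        for v in values
    ]


rng = random.Random(0)                # seeded for reproducibility
update = [0.12, -0.40, 0.05]          # hypothetical clipped model update
noisy_strict = add_laplace_noise(update, sensitivity=1.0, epsilon=0.5, rng=rng)
noisy_loose = add_laplace_noise(update, sensitivity=1.0, epsilon=10.0, rng=rng)
```

Because the scale is inversely proportional to ε, raising the budget from 0.5 to 10 shrinks the expected perturbation twentyfold, which matches the abstract's observation that higher budgets improve accuracy.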

Detecting Masquerade Attacks in Controller Area Networks Using Graph Machine Learning

Aug 10, 2024

Abstract: Modern vehicles rely on a myriad of electronic control units (ECUs) interconnected via controller area networks (CANs) for critical operations. Despite their ubiquitous use and reliability, CANs are susceptible to sophisticated cyberattacks, particularly masquerade attacks, which inject false data that mimic legitimate messages at the expected frequency. These attacks pose severe risks such as unintended acceleration, brake deactivation, and rogue steering. Traditional intrusion detection systems (IDS) often struggle to detect these subtle intrusions due to their seamless integration into normal traffic. This paper introduces a novel framework for detecting masquerade attacks in the CAN bus using graph machine learning (ML). We hypothesize that the integration of shallow graph embeddings with time series features derived from CAN frames enhances the detection of masquerade attacks. We show that by representing CAN bus frames as message sequence graphs (MSGs) and enriching each node with contextual statistical attributes from time series, we can enhance detection capabilities across various attack patterns compared to using only graph-based features. Our method ensures a comprehensive and dynamic analysis of CAN frame interactions, improving robustness and efficiency. Extensive experiments on the ROAD dataset validate the effectiveness of our approach, demonstrating statistically significant improvements in the detection rates of masquerade attacks compared to a baseline that uses only graph-based features, as confirmed by Mann-Whitney U and Kolmogorov-Smirnov tests (p < 0.05).
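A message sequence graph can be sketched as a directed graph whose nodes are CAN arbitration IDs and whose edge weights count how often one ID immediately follows another. The version below also attaches simple inter-arrival statistics to each node as a stand-in for the "contextual statistical attributes" the abstract mentions. The exact features and construction used in the paper are not given here, so the function name, the frame format, and the chosen statistics are assumptions.

```python
from collections import Counter, defaultdict
from statistics import mean, pstdev


def build_message_sequence_graph(frames):
    """Build edge weights and node features from time-ordered CAN frames.

    frames: list of (timestamp_seconds, arbitration_id) tuples.
    Returns (edge_weights, node_features).
    """
    ids = [arb_id for _, arb_id in frames]
    # Edge (u, v) counts how many times ID v directly follows ID u.
    edge_weights = Counter(zip(ids, ids[1:]))

    arrivals = defaultdict(list)
    for timestamp, arb_id in frames:
        arrivals[arb_id].append(timestamp)

    node_features = {}
    for arb_id, times in arrivals.items():
        gaps = [later - earlier for earlier, later in zip(times, times[1:])]
        node_features[arb_id] = {
            "count": len(times),
            "mean_gap": mean(gaps) if gaps else 0.0,
            "std_gap": pstdev(gaps) if gaps else 0.0,
        }
    return edge_weights, node_features


# Hypothetical window of frames: two IDs alternating every 0.5 s.
window = [(0.0, "0x1A0"), (0.5, "0x2B4"), (1.0, "0x1A0"), (1.5, "0x2B4"), (2.0, "0x1A0")]
edges, features = build_message_sequence_graph(window)
```

A masquerade attack that preserves message frequency leaves the edge counts largely unchanged, which is why per-node time series statistics can add signal that the graph structure alone misses.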

Network Anomaly Detection Using Federated Learning

Mar 13, 2023

Abstract: Due to the veracity and heterogeneity in network traffic, detecting anomalous events is challenging. The computational load on global servers is a significant challenge in terms of efficiency, accuracy, and scalability. Our primary motivation is to introduce a robust and scalable framework that enables efficient network anomaly detection. We address the issue of scalability and efficiency for network anomaly detection by leveraging federated learning, in which multiple participants train a global model jointly. Unlike centralized training architectures, federated learning does not require participants to upload their training data to the server, preventing attackers from exploiting the training data. Moreover, most prior works have focused on traditional centralized machine learning, leaving federated machine learning under-explored in network anomaly detection. Therefore, we propose a deep neural network framework that can run on low- to mid-end devices, detecting network anomalies while checking whether a request from a specific IP address is malicious. Compared to multiple traditional centralized machine learning models, the deep neural federated model reduces training time overhead. In experiments on the UNSW-NB15 dataset, the proposed method outperforms baseline machine learning techniques, achieving an accuracy of 97.21% and a faster computation time.
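Joint training of a global model without sharing raw data is commonly done by averaging client model parameters on the server, weighted by each client's local sample count (the FedAvg rule). The abstract does not name its aggregation rule, so FedAvg is assumed here for illustration. The function name and the client values are hypothetical.

```python
def federated_average(client_updates):
    """Aggregate client weights into global weights, FedAvg style.

    client_updates: list of (num_samples, weights) pairs, where weights is a
    flat list of floats of the same length for every client.
    """
    total_samples = sum(num_samples for num_samples, _ in client_updates)
    if total_samples == 0:
        raise ValueError("at least one client must contribute samples")

    dim = len(client_updates[0][1])
    global_weights = [0.0] * dim
    for num_samples, weights in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must send weights of equal length")
        share = num_samples / total_samples
        for i, w in enumerate(weights):
            global_weights[i] += share * w
    return global_weights


# Hypothetical round: only parameters and sample counts leave the clients.
round_updates = [(100, [0.0, 2.0]), (300, [4.0, 2.0])]
new_global = federated_average(round_updates)
```

Only the parameter vectors and sample counts cross the network, so the server never sees the underlying traffic records.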

Adversarial Sampling for Fairness Testing in Deep Neural Network

Mar 06, 2023

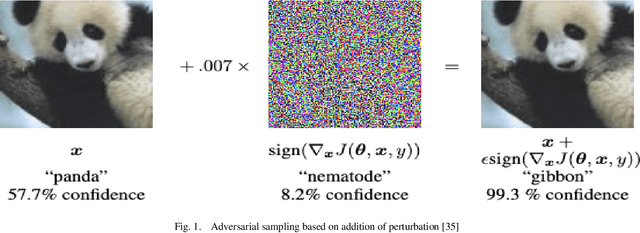

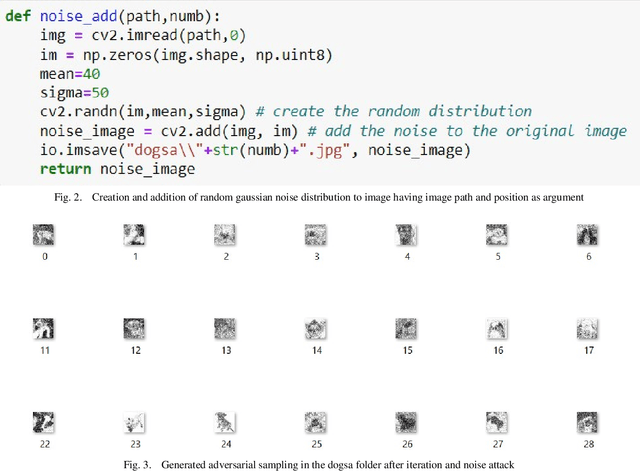

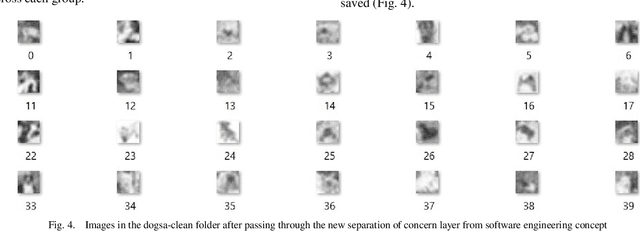

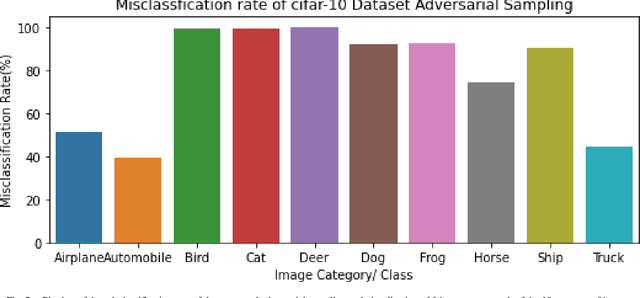

Abstract: In this research, we focus on the use of adversarial sampling to test the fairness of a deep neural network model's predictions across different classes of images in a given dataset. Several frameworks have been proposed to ensure the robustness of machine learning models against adversarial attacks, some of which include adversarial training algorithms. However, adversarial training tends to cause disparities in accuracy and robustness among different groups. Our research aims to use adversarial sampling to test for fairness in the predictions of a deep neural network model across different classes or categories of images in a given dataset. We successfully demonstrated a new method of ensuring fairness across various groups of inputs in a deep neural network classifier. We trained our neural network model on the original images only, without training it on perturbed or attacked images. When we fed the adversarial samples to our model, it was able to predict the original category or class of the image to which each adversarial sample belongs. We also introduced and used the separation of concerns concept from software engineering: an additional standalone filter layer filters each perturbed image by heavily removing the noise or attack before automatically passing it to the network for classification. With this approach, we achieved an accuracy of 93.3%. The CIFAR-10 dataset has ten categories, so, to account for fairness, we applied our hypothesis to each category and obtained consistent results and accuracy.

* 7 pages, 5 figures, International Journal of Advanced Computer Science and Application

Condition monitoring and anomaly detection in cyber-physical systems

Jan 22, 2023

Abstract: The modern industrial environment is being equipped with myriad smart manufacturing machines whose state can be monitored continuously. Such monitoring can help identify possible future failures and develop a cost-effective maintenance plan. However, it is a daunting task to perform early detection with low false positives and negatives from the huge volume of collected data. This requires developing a holistic machine learning framework to address the issues in condition monitoring of high-priority components and developing efficient anomaly detection techniques that can detect and possibly localize the faulty components. This paper presents a comparative analysis of recent machine learning approaches for robust, cost-effective anomaly detection in cyber-physical systems. While detection has been extensively studied, very few researchers have analyzed the localization of the anomalies. We show that supervised learning outperforms unsupervised algorithms. For supervised cases, we achieve near-perfect accuracy of 98 percent (specifically for tree-based algorithms). In contrast, the best-case accuracy in the unsupervised cases was 63 percent. The area under the receiver operating characteristic curve (AUC), used as an additional metric, exhibits similar outcomes.
