Zhongzhou Zhang

Double Banking on Knowledge: Customized Modulation and Prototypes for Multi-Modality Semi-supervised Medical Image Segmentation

Oct 23, 2024

Abstract: Multi-modality (MM) semi-supervised learning (SSL) based medical image segmentation has recently gained increasing attention for its ability to utilize MM data and reduce reliance on labeled images. However, current methods face several challenges: (1) Complex network designs hinder scalability to scenarios with more than two modalities. (2) Focusing solely on modality-invariant representations while neglecting modality-specific features leads to incomplete MM learning. (3) Leveraging unlabeled data with generative methods can be unreliable for SSL. To address these problems, we propose Double Bank Dual Consistency (DBDC), a novel MM-SSL approach for medical image segmentation. To address challenge (1), we propose a modality all-in-one segmentation network that accommodates data from any number of modalities, removing the limitation on modality count. To address challenge (2), we design two learnable plug-in banks, the Modality-Level Modulation Bank (MLMB) and the Modality-Level Prototype Bank (MLPB), to capture both modality-invariant and modality-specific knowledge. These banks are updated using our proposed Modality Prototype Contrastive Learning (MPCL). Additionally, we design Modality Adaptive Weighting (MAW) to dynamically adjust the learning weight of each modality, ensuring balanced MM learning even though different modalities learn at different rates. Finally, to address challenge (3), we introduce a Dual Consistency (DC) strategy that enforces consistency at both the image and feature levels without relying on generative methods. We evaluate our method on 2-to-4 modality segmentation tasks using three open-source datasets, and extensive experiments show that our method outperforms state-of-the-art approaches.
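The abstract does not give the MAW formula, so the following is only a minimal sketch of one way per-modality weights could be adjusted dynamically. It assumes that a modality with a higher current loss is learning more slowly and should receive a larger weight, and it uses a softmax over the losses to express that. The function names, the `temperature` parameter, and the softmax rule itself are hypothetical and are not taken from the paper.

```python
import math


def modality_weights(losses, temperature=1.0):
    """Hypothetical weighting rule: softmax over per-modality losses.

    A modality with a higher loss (assumed to be learning more slowly)
    gets a larger weight. Weights are rescaled to average 1 so the
    overall loss magnitude stays comparable.
    """
    scaled = [loss / temperature for loss in losses]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    count = len(losses)
    return [count * e / total for e in exps]


def weighted_loss(losses, temperature=1.0):
    """Combine per-modality losses using the weights above."""
    weights = modality_weights(losses, temperature)
    return sum(w * loss for w, loss in zip(weights, losses))


# Example: three modalities, the first one lagging behind.
weights = modality_weights([0.9, 0.3, 0.3])
```

With equal losses every weight is 1, so the rule reduces to a plain sum; a larger `temperature` flattens the weights toward that uniform case.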

Personalized Forgetting Mechanism with Concept-Driven Knowledge Tracing

Apr 18, 2024

Abstract: Knowledge Tracing (KT) aims to trace changes in students' knowledge states throughout their entire learning process by analyzing their historical learning data and predicting their future learning performance. Existing knowledge tracing models based on forgetting curve theory consider only the general forgetting caused by time intervals, ignoring differences between individual students and the causal relationships in the forgetting process. To address these problems, we propose a Concept-driven Personalized Forgetting knowledge tracing model (CPF), which integrates hierarchical relationships between knowledge concepts and incorporates students' personalized cognitive abilities. First, we integrate the students' personalized capabilities into both the learning and forgetting processes to explicitly distinguish students' individual learning gains and forgetting rates according to their cognitive abilities. Second, we take into account the hierarchical relationships between knowledge points and design a precursor-successor knowledge concept matrix to simulate the causal relationship in the forgetting process, while also integrating the potential impact of forgetting prior knowledge points on subsequent ones. The proposed personalized forgetting mechanism can be applied not only to the learning of specific knowledge concepts but also to the life-long learning process. Extensive experimental results on three public datasets show that CPF outperforms current methods based on forgetting curve theory in predicting student performance, demonstrating that CPF can better simulate changes in students' knowledge states through the personalized forgetting mechanism.
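To make the two ideas concrete, here is a small sketch under stated assumptions; it is not the CPF model. It assumes an exponential forgetting curve whose stability is scaled by a per-student ability value, and a binary precursor-successor matrix in which forgetting a prerequisite also lowers mastery of its successors. The names `retention`, `propagate_forgetting`, `base_stability`, and `coupling` are illustrative only.

```python
import math


def retention(elapsed, ability, base_stability=1.0):
    """Exponential forgetting curve; higher ability means slower decay (assumed form)."""
    stability = base_stability * ability
    return math.exp(-elapsed / stability)


def propagate_forgetting(mastery, elapsed, ability, precursor, coupling=0.5):
    """Decay every concept, then penalize successors for what their precursors lost.

    precursor[i][j] == 1 means concept i is a prerequisite of concept j.
    """
    keep = retention(elapsed, ability)
    decayed = [m * keep for m in mastery]
    lost = [m - d for m, d in zip(mastery, decayed)]
    updated = []
    for j, own in enumerate(decayed):
        inherited = sum(precursor[i][j] * lost[i] for i in range(len(mastery)))
        updated.append(max(0.0, own - coupling * inherited))
    return updated


# Concept 0 is a prerequisite of concept 1.
precursor = [[0, 1],
             [0, 0]]
state = propagate_forgetting([0.8, 0.8], elapsed=1.0, ability=1.0, precursor=precursor)
```

In this toy setup both concepts start at the same mastery, but the successor ends lower because it also inherits part of the loss on its prerequisite, and a student with a larger `ability` retains more over the same interval.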

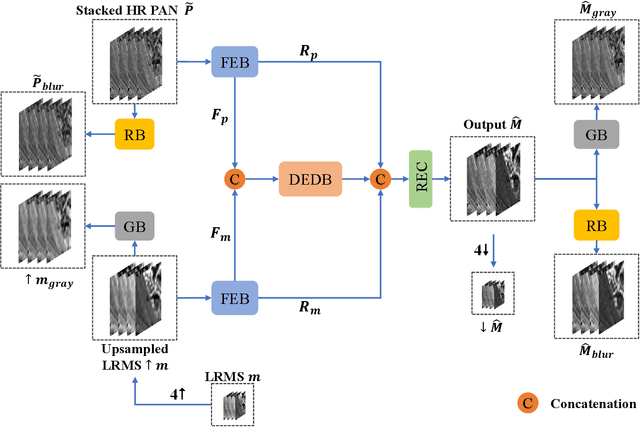

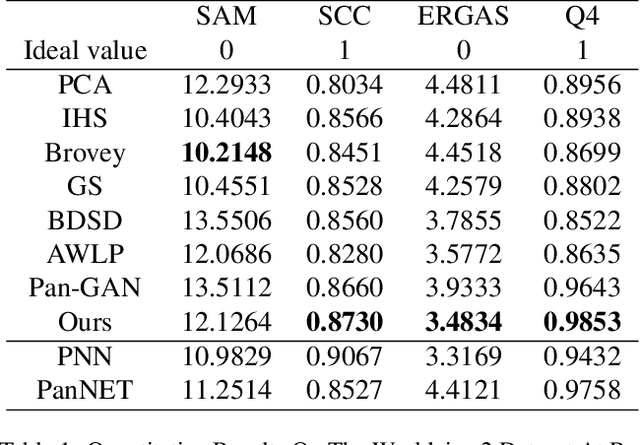

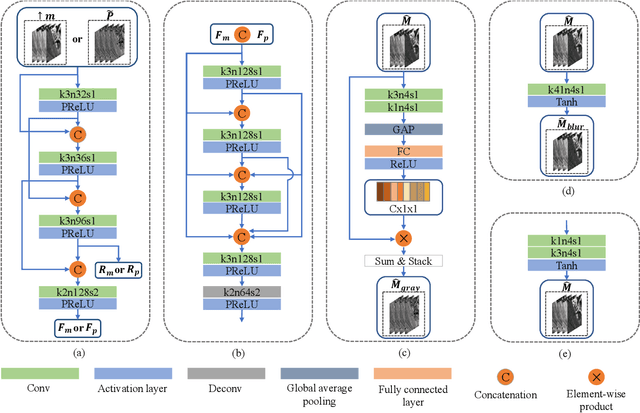

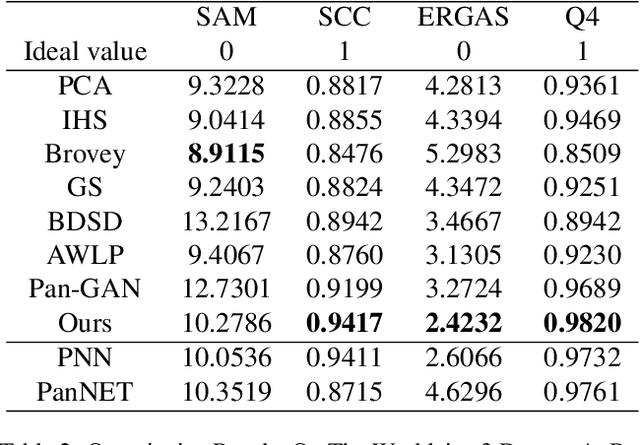

LDP-Net: An Unsupervised Pansharpening Network Based on Learnable Degradation Processes

Nov 24, 2021

Abstract: Pansharpening of remote sensing images aims to obtain a high-resolution multispectral (HRMS) image by fusing a low-resolution multispectral (LRMS) image with a panchromatic (PAN) image. The main concern is how to effectively combine the rich spectral information of the LRMS image with the abundant spatial information of the PAN image. Recently, many methods based on deep learning have been proposed for the pansharpening task. However, these methods usually have two main drawbacks: 1) they require HRMS images for supervised learning; and 2) they ignore the latent relation between the MS and PAN images and fuse them directly. To solve these problems, we propose a novel unsupervised network based on learnable degradation processes, dubbed LDP-Net. A reblurring block and a graying block are designed to learn the corresponding degradation processes, respectively. In addition, a novel hybrid loss function is proposed to constrain both spatial and spectral consistency between the pansharpened image and the PAN and LRMS images at different resolutions. Experiments on Worldview2 and Worldview3 images demonstrate that the proposed LDP-Net can fuse PAN and LRMS images effectively without the help of HRMS samples, achieving promising performance in terms of both qualitative visual effects and quantitative metrics.
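The unsupervised idea can be illustrated with a toy consistency loss: degrade the predicted HRMS image back to the two inputs and compare. In LDP-Net the reblurring and graying blocks are learned; the sketch below substitutes fixed stand-ins (average pooling and a weighted band sum), works at a single resolution, and uses an L1 distance, all of which are assumptions made for illustration. Images are plain nested lists, and the height and width are assumed to be divisible by `factor`.

```python
def downsample(band, factor):
    """Average-pool one 2D band (fixed stand-in for a learned reblurring block)."""
    pooled = []
    for r in range(0, len(band), factor):
        row = []
        for c in range(0, len(band[0]), factor):
            block = [band[r + i][c + j] for i in range(factor) for j in range(factor)]
            row.append(sum(block) / len(block))
        pooled.append(row)
    return pooled


def gray(bands, weights):
    """Weighted sum over bands (fixed stand-in for a learned graying block)."""
    height, width = len(bands[0]), len(bands[0][0])
    return [[sum(w * b[r][c] for w, b in zip(weights, bands)) for c in range(width)]
            for r in range(height)]


def l1(a, b):
    """Mean absolute difference between two 2D arrays of equal shape."""
    diffs = [abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


def degradation_loss(hrms, lrms, pan, band_weights, factor=2, alpha=1.0):
    """Spectral term (HRMS -> LRMS) plus weighted spatial term (HRMS -> PAN)."""
    spectral = sum(l1(downsample(h, factor), l) for h, l in zip(hrms, lrms)) / len(hrms)
    spatial = l1(gray(hrms, band_weights), pan)
    return spectral + alpha * spatial
```

A prediction that reproduces both inputs after degradation gets zero loss, which is what lets training proceed without HRMS ground truth.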

Domain Adaptive SiamRPN++ for Object Tracking in the Wild

Jun 15, 2021

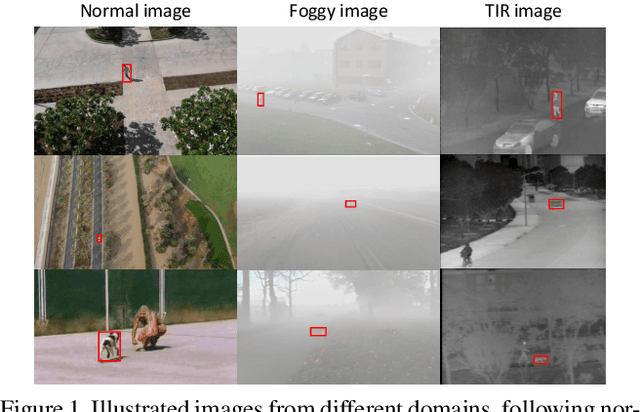

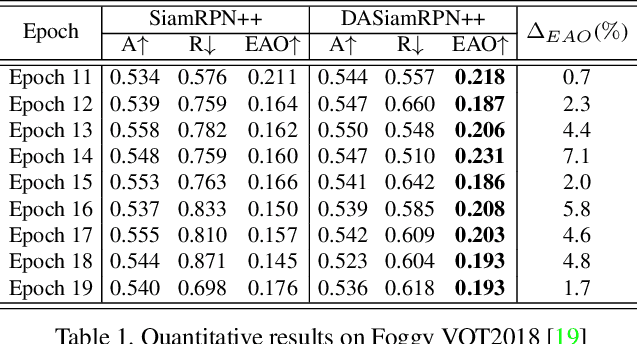

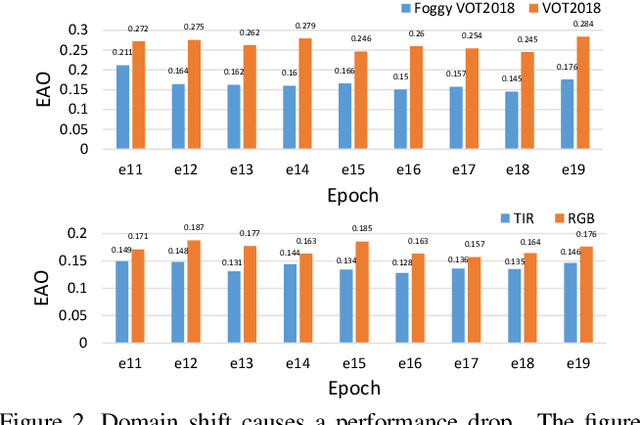

Abstract: Benefiting from large-scale training data, recent advances in Siamese-based object tracking have achieved compelling results on normal sequences. However, Siamese-based trackers assume that training and test data follow an identical distribution. Given a set of foggy or rainy test sequences, there is no guarantee that trackers trained on normal images will perform well on data from other domains. The problem of domain shift between training and test data has already been discussed in object detection and semantic segmentation, but it has not been investigated for visual tracking. To this end, based on SiamRPN++, we introduce a Domain Adaptive SiamRPN++, namely DASiamRPN++, to improve the cross-domain transferability and robustness of a tracker. Inspired by A-distance theory, we present two domain adaptive modules, Pixel Domain Adaptation (PDA) and Semantic Domain Adaptation (SDA). The PDA module aligns the feature maps of template and search region images to eliminate the pixel-level domain shift caused by weather, illumination, etc. The SDA module aligns the feature representations of the tracking target's appearance to eliminate the semantic-level domain shift. The PDA and SDA modules reduce the domain disparity by learning domain classifiers in an adversarial training manner. The domain classifiers force the network to learn domain-invariant feature representations. Extensive experiments are performed on standard datasets from two different domains, synthetic foggy sequences and TIR sequences, and demonstrate the transferability and domain adaptability of the proposed tracker.
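The link between a domain classifier and domain disparity can be shown with the commonly used proxy A-distance, 2(1 - 2ε), where ε is the error of a classifier trained to tell the two domains apart. The abstract does not say how the paper applies A-distance theory, so this is only a sketch of the general quantity; the function name and the 0/1 label convention are assumptions.

```python
def proxy_a_distance(domain_labels, predictions):
    """Proxy A-distance from a domain classifier's error rate.

    domain_labels: true domain of each sample (e.g. 0 = source, 1 = target).
    predictions:   domain predicted by the classifier for each sample.
    """
    errors = sum(1 for truth, guess in zip(domain_labels, predictions) if truth != guess)
    error_rate = errors / len(domain_labels)
    return 2.0 * (1.0 - 2.0 * error_rate)


# A classifier that separates the domains perfectly: large disparity.
separable = proxy_a_distance([0, 0, 1, 1], [0, 0, 1, 1])
# A classifier at chance level: features look domain-invariant.
confused = proxy_a_distance([0, 0, 1, 1], [0, 1, 0, 1])
```

Adversarial training pushes the feature extractor toward the second case: when the domain classifier can do no better than chance, the proxy distance falls to zero.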

Hard Negative Samples Emphasis Tracker without Anchors

Aug 08, 2020

Abstract: Trackers based on Siamese networks have shown tremendous success because of their balance between accuracy and speed. Nevertheless, as tracking scenarios become more and more sophisticated, most existing Siamese-based approaches neglect the problem of distinguishing the tracking target from hard negative samples in the tracking phase. The features learned by these networks lack discrimination, which significantly weakens the robustness of Siamese-based trackers and leads to suboptimal performance. To address this issue, we propose a simple yet efficient hard negative samples emphasis method, which constrains the Siamese network to learn features that are aware of hard negative samples and enhances the discrimination of embedding features. Through a distance constraint, we shorten the distance between the exemplar vector and positive vectors while enlarging the distance between the exemplar vector and hard negative vectors. Furthermore, we explore a novel anchor-free tracking framework in a per-pixel prediction fashion, which can significantly reduce the number of hyper-parameters and simplify the tracking process by taking full advantage of the representation of convolutional neural networks. Extensive experiments on six standard benchmark datasets demonstrate that the proposed method performs favorably against state-of-the-art approaches.
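The distance constraint is described only in words, so the sketch below uses a standard triplet-style hinge loss as a stand-in: it penalizes the exemplar when its farthest positive is not at least `margin` closer than its nearest (hardest) negative. The Euclidean metric, the margin value, and the function names are assumptions and may differ from the paper's formulation.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def hard_negative_loss(exemplar, positives, negatives, margin=1.0):
    """Triplet-style hinge loss using the farthest positive and nearest negative."""
    farthest_positive = max(euclidean(exemplar, p) for p in positives)
    hardest_negative = min(euclidean(exemplar, n) for n in negatives)
    return max(0.0, farthest_positive - hardest_negative + margin)


exemplar = [0.0, 0.0]
positives = [[0.1, 0.0]]
negatives = [[3.0, 0.0], [0.5, 0.0]]  # the second one is the hard negative
loss = hard_negative_loss(exemplar, positives, negatives)
```

Only the nearest negative contributes here, so an easy negative far from the exemplar adds nothing to the loss, while a distractor close to the target keeps the loss positive until the embedding separates them.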
