Yuwei Dai

A multitask framework for automated interpretation of multi-frame right upper quadrant ultrasound in clinical decision support

Jan 17, 2026

Abstract:Ultrasound is a cornerstone of emergency and hepatobiliary imaging, yet its interpretation remains highly operator-dependent and time-sensitive. Here, we present a multitask vision-language model (VLM) agent developed to assist with comprehensive right upper quadrant (RUQ) ultrasound interpretation across the full diagnostic workflow. The system was trained on a large, multi-center dataset comprising a primary cohort from Johns Hopkins Medical Institutions (9,189 cases, 594,099 images) and externally validated on cohorts from Stanford University (108 cases, 3,240 images) and a major Chinese medical center (257 cases, 3,178 images). Built on the Qwen2.5-VL-7B architecture, the agent integrates frame-level visual understanding with report-grounded language reasoning to perform three tasks: (i) classification of 18 hepatobiliary and gallbladder conditions, (ii) generation of clinically coherent diagnostic reports, and (iii) surgical decision support based on ultrasound findings and clinical data. The model achieved high diagnostic accuracy across all tasks, generated reports that were indistinguishable from expert-written versions in blinded evaluations, and demonstrated superior factual accuracy and information density on content-based metrics. The agent further identified patients requiring cholecystectomy with high precision, supporting real-time decision-making. These results highlight the potential of generalist vision-language models to improve diagnostic consistency, reporting efficiency, and surgical triage in real-world ultrasound practice.

Dataset and Benchmark for Enhancing Critical Retained Foreign Object Detection

Jul 09, 2025

Abstract:Critical retained foreign objects (RFOs), including surgical instruments such as sponges and needles, pose serious patient safety risks and carry significant financial and legal implications for healthcare institutions. Detecting critical RFOs using artificial intelligence remains challenging due to their rarity and the limited availability of chest X-ray datasets that specifically feature critical RFO cases. Existing datasets contain only non-critical RFOs, such as necklaces or zippers, further limiting their utility for developing clinically impactful detection algorithms. To address these limitations, we introduce "Hopkins RFOs Bench", the first and largest dataset of its kind, containing 144 chest X-ray images of critical RFO cases collected over 18 years from the Johns Hopkins Health System. Using this dataset, we benchmark several state-of-the-art object detection models, highlighting the need for enhanced detection methodologies for critical RFO cases. Recognizing data scarcity challenges, we further explore image synthesis methods to bridge this gap. We evaluate two advanced image synthesis methods, DeepDRR-RFO, a physics-based method, and RoentGen-RFO, a diffusion-based method, for creating realistic radiographs featuring critical RFOs. Our comprehensive analysis identifies the strengths and limitations of each synthesis method, providing insights into effectively utilizing synthetic data to enhance model training. The Hopkins RFOs Bench and our findings significantly advance the development of reliable, generalizable AI-driven solutions for detecting critical RFOs in clinical chest X-rays.

Beyond the LUMIR challenge: The pathway to foundational registration models

May 30, 2025

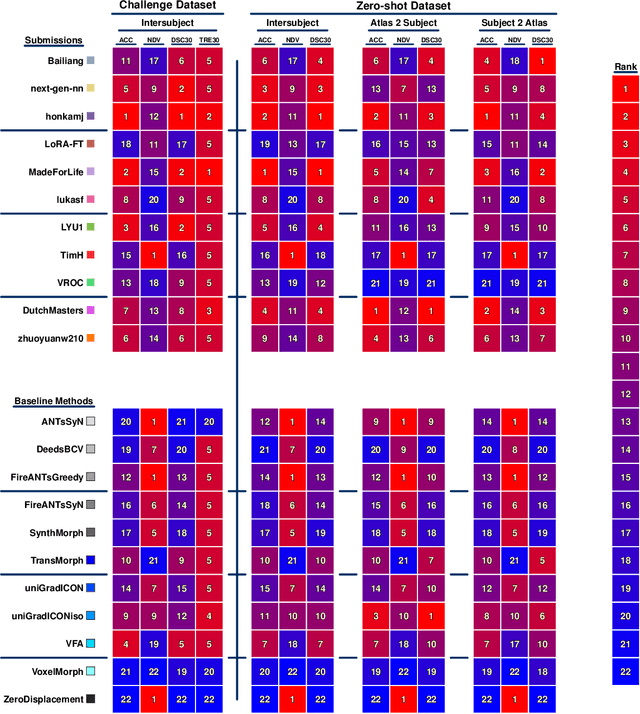

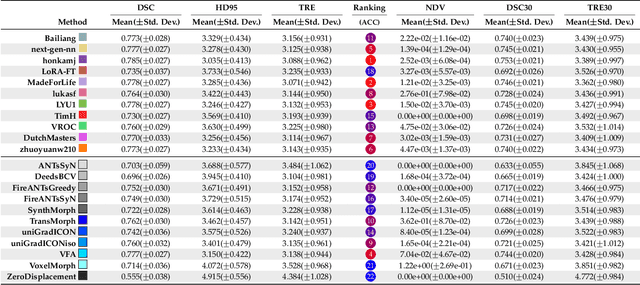

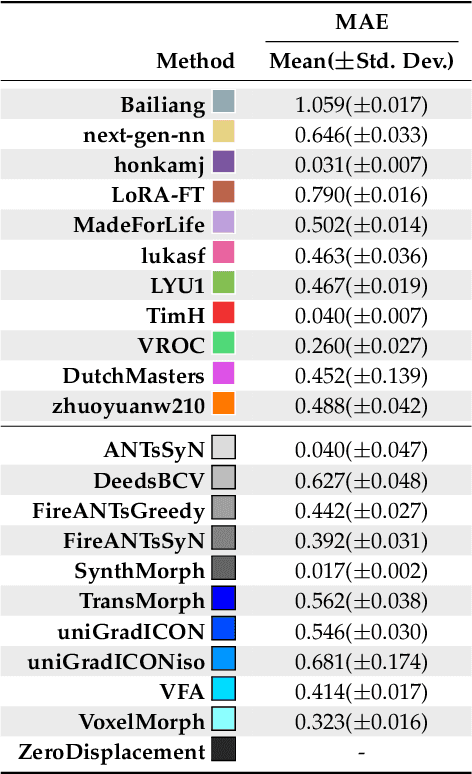

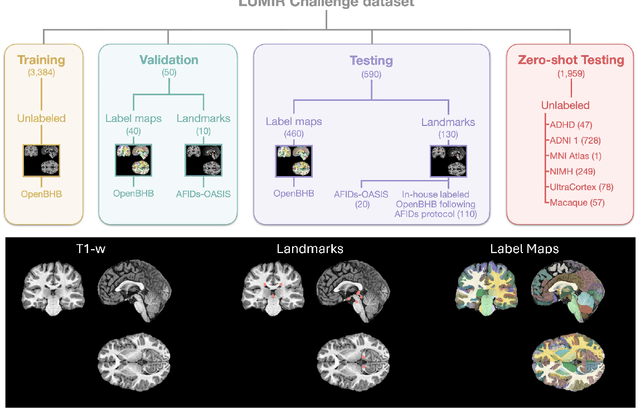

Abstract:Medical image challenges have played a transformative role in advancing the field, catalyzing algorithmic innovation and establishing new performance standards across diverse clinical applications. Image registration, a foundational task in neuroimaging pipelines, has similarly benefited from the Learn2Reg initiative. Building on this foundation, we introduce the Large-scale Unsupervised Brain MRI Image Registration (LUMIR) challenge, a next-generation benchmark designed to assess and advance unsupervised brain MRI registration. Distinct from prior challenges that leveraged anatomical label maps for supervision, LUMIR removes this dependency by providing over 4,000 preprocessed T1-weighted brain MRIs for training without any label maps, encouraging biologically plausible deformation modeling through self-supervision. In addition to evaluating performance on 590 held-out test subjects, LUMIR introduces a rigorous suite of zero-shot generalization tasks, spanning out-of-domain imaging modalities (e.g., FLAIR, T2-weighted, T2*-weighted), disease populations (e.g., Alzheimer's disease), acquisition protocols (e.g., 9.4T MRI), and species (e.g., macaque brains). A total of 1,158 subjects and over 4,000 image pairs were included for evaluation. Performance was assessed using both segmentation-based metrics (Dice coefficient, 95th percentile Hausdorff distance) and landmark-based registration accuracy (target registration error). Across both in-domain and zero-shot tasks, deep learning-based methods consistently achieved state-of-the-art accuracy while producing anatomically plausible deformation fields. The top-performing deep learning-based models demonstrated diffeomorphic properties and inverse consistency, outperforming several leading optimization-based methods, and showing strong robustness to most domain shifts, the exception being a drop in performance on out-of-domain contrasts.

Learn to Optimize Resource Allocation under QoS Constraint of AR

Jan 27, 2025

Abstract:This paper studies the uplink and downlink power allocation for interactive augmented reality (AR) services, where live video captured by an AR device is uploaded to the network edge and the augmented video is subsequently downloaded. By modeling the AR transmission process as a tandem queuing system, we derive an upper bound for the probabilistic quality of service (QoS) requirement concerning end-to-end latency and reliability. The resource allocation with the QoS constraints results in a functional optimization problem. To address it, we design a deep neural network to learn the power allocation policy, leveraging the structure of optimal power allocation to enhance learning performance. Simulation results demonstrate that the proposed method effectively reduces transmit powers while meeting the QoS requirement.

Optimizing Prompt Strategies for SAM: Advancing lesion Segmentation Across Diverse Medical Imaging Modalities

Dec 28, 2024

Abstract:Purpose: To evaluate various Segment Anything Model (SAM) prompt strategies across four lesion datasets and to subsequently develop a reinforcement learning (RL) agent to optimize SAM prompt placement. Materials and Methods: This retrospective study included patients from four independent ovarian, lung, renal, and breast tumor datasets. Manual segmentation and SAM-assisted segmentation were performed for all lesions. An RL model was developed to predict and select SAM points to maximize segmentation performance. Statistical analysis of segmentation was conducted using pairwise t-tests. Results: Increasing the number of prompt points significantly improved segmentation accuracy, with Dice coefficients rising from 0.272 for a single point to 0.806 for five or more points in ovarian tumors. The prompt location also influenced performance, with surface and union-based prompts outperforming center-based prompts, achieving mean Dice coefficients of 0.604 and 0.724 for ovarian and breast tumors, respectively. The RL agent achieved a peak Dice coefficient of 0.595 for ovarian tumors, outperforming random and alternative RL strategies. Additionally, it significantly reduced segmentation time, achieving a nearly 10-fold improvement compared to manual methods using SAM. Conclusion: While increased SAM prompts and non-centered prompts generally improved segmentation accuracy, each pathology and modality has specific optimal thresholds and placement strategies. Our RL agent achieved superior performance compared to other agents while achieving a significant reduction in segmentation time.

Optimizing prompt strategies for the Segment Anything Model are explored, focusing on prompt location, number, and reinforcement learning-based agent for prompt placement across four lesion datasets

Dec 23, 2024

