Yujian Li

AEANet: Affinity Enhanced Attentional Networks for Arbitrary Style Transfer

Sep 24, 2024

Abstract: Arbitrary artistic style transfer is a research area that combines rational academic study with emotive artistic creation. It aims to create a new image from a content image according to a target artistic style, maintaining the content's textural and structural information while incorporating the artistic characteristics of the style image. However, existing style transfer methods often significantly damage the texture lines of the content image during the style transformation. To address this issue, we propose the affinity-enhanced attentional network (AEANet), which includes the content affinity-enhanced attention (CAEA) module, the style affinity-enhanced attention (SAEA) module, and the hybrid attention (HA) module. The CAEA and SAEA modules first use attention to enhance content and style representations, followed by a detail-enhanced (DE) module to reinforce detail features. The HA module adjusts the style feature distribution based on the content feature distribution. We also introduce a local dissimilarity loss based on affinity attention, which better preserves the affinity with content and style images. Experiments demonstrate that our work achieves better results in arbitrary style transfer than other state-of-the-art methods.

A Cluster-Based Statistical Channel Model for Integrated Sensing and Communication Channels

Mar 01, 2024

Abstract: The emerging 6G network envisions integrated sensing and communication (ISAC) as a promising solution to meet the growing demand for native perception ability. To optimize and evaluate ISAC systems and techniques, it is crucial to have an accurate and realistic wireless channel model. However, some important features of ISAC channels have not been well characterized. For example, most existing ISAC channel models consider communication channels and sensing channels independently, ignoring the correlation between them in a shared environment. Moreover, sensing channels have not been well modeled in the existing standard-level channel models. Therefore, to better model the ISAC channel, this paper proposes a cluster-based statistical channel model based on measurements conducted at 28 GHz. The proposed model introduces a new framework, based on the 3GPP standard, that includes communication clusters and sensing clusters. Clustering and tracking algorithms are used to extract and analyze ISAC channel characteristics. Furthermore, special sensing cluster structures, such as the shared sensing cluster and the newborn sensing cluster, are defined to model the correlation and difference between communication and sensing channels. Finally, the accuracy of the proposed model is validated with measurements and simulations.

Probabilistic K-means Clustering via Nonlinear Programming

Jan 10, 2020

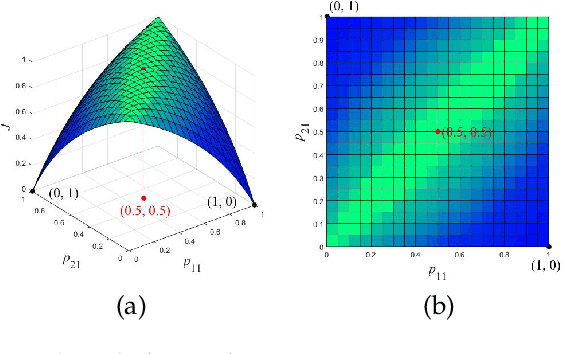

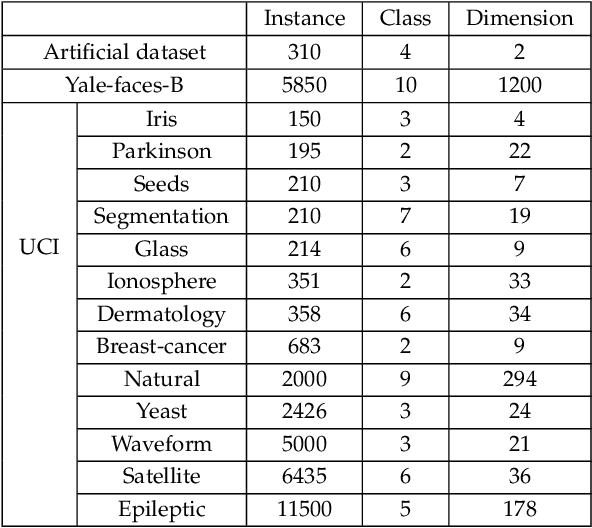

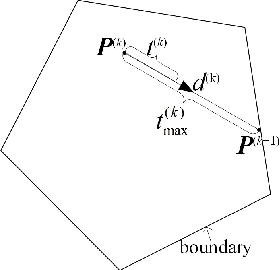

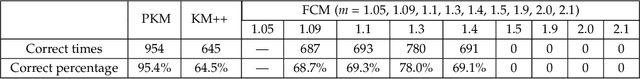

Abstract: K-means is a classical clustering algorithm with wide applications. However, soft K-means, or fuzzy c-means at m=1, has remained unsolved since 1981. To address this challenging open problem, we propose a novel clustering model, Probabilistic K-Means (PKM), which is a nonlinear programming model subject to linear equality and linear inequality constraints. In theory, the model can be solved by active gradient projection, albeit inefficiently. We therefore further propose maximum-step active gradient projection and fast maximum-step active gradient projection to solve it more efficiently. Through experiments, we evaluate the performance of PKM and how well the proposed methods solve it in five aspects: initialization robustness, clustering performance, descending stability, iteration number, and convergence speed.
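For orientation, the standard fuzzy c-means objective that the abstract refers to can be written as below. This is the textbook formulation, not necessarily the exact PKM model, which the abstract does not spell out; the symbols $u_{ij}$ (membership of point $x_i$ in cluster $j$), $c_j$ (cluster center), $n$, and $k$ are notation assumed here.

$$
\min_{U,\,C}\; J_m(U, C) = \sum_{i=1}^{n} \sum_{j=1}^{k} u_{ij}^{\,m}\, \lVert x_i - c_j \rVert^2
\quad \text{subject to} \quad \sum_{j=1}^{k} u_{ij} = 1,\;\; u_{ij} \ge 0 .
$$

For $m > 1$, the usual alternating update sets $u_{ij} = 1 \big/ \sum_{l=1}^{k} \left( \lVert x_i - c_j \rVert / \lVert x_i - c_l \rVert \right)^{2/(m-1)}$, whose exponent is undefined at $m = 1$. At $m = 1$ the memberships are constrained only by linear equalities (rows sum to one) and linear inequalities (non-negativity), which matches the constraint structure the abstract describes.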

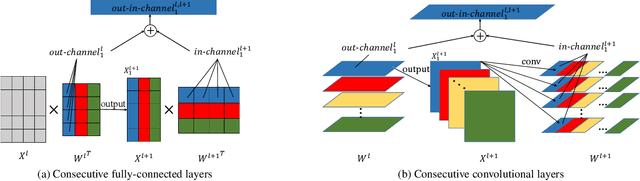

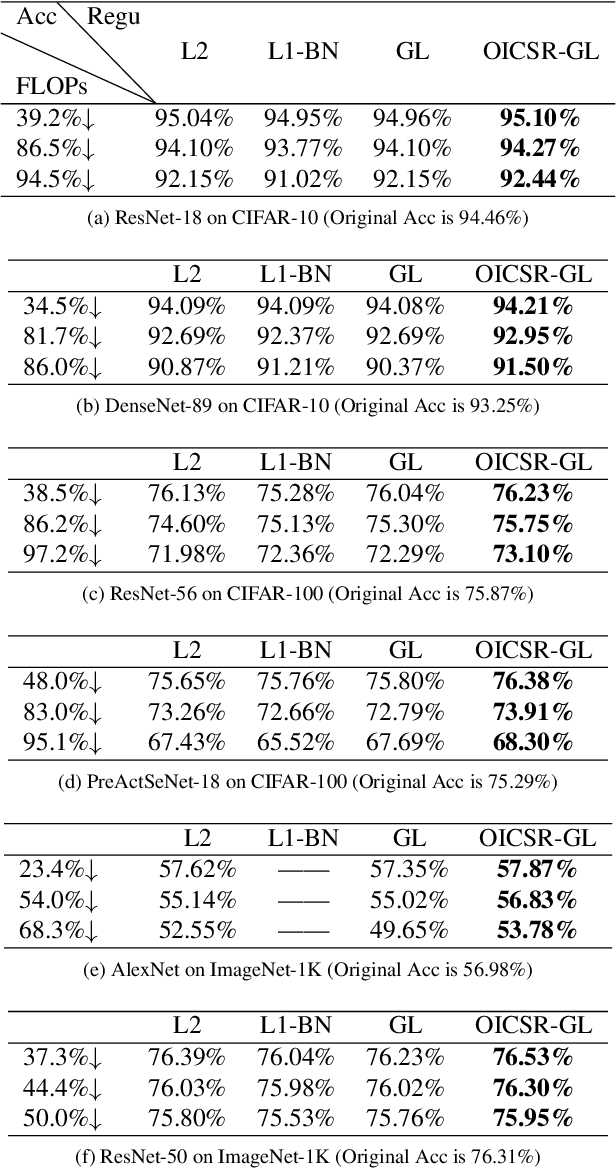

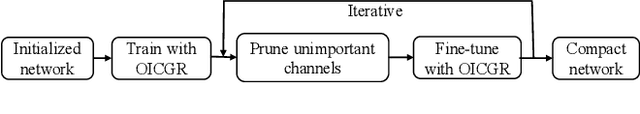

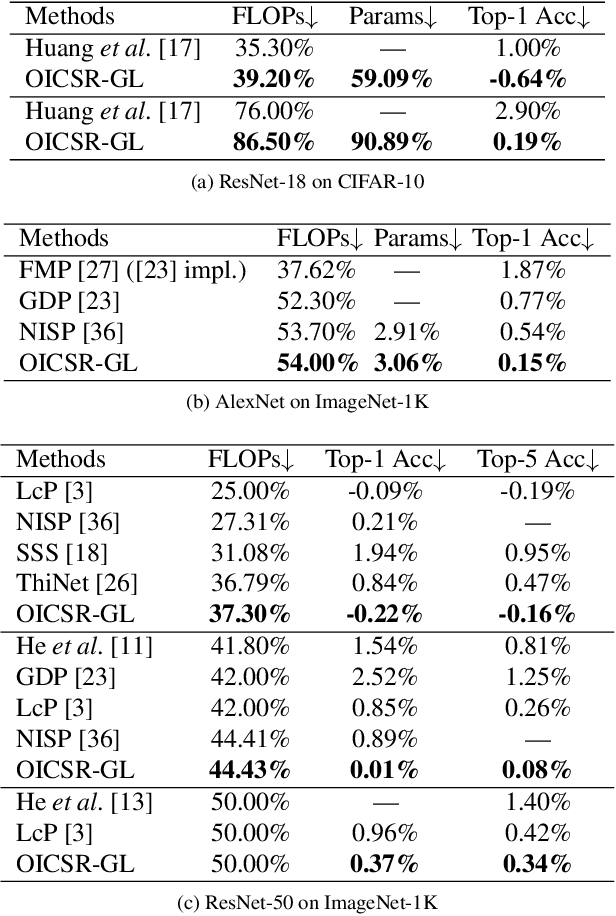

OICSR: Out-In-Channel Sparsity Regularization for Compact Deep Neural Networks

Jun 06, 2019

Abstract: Channel pruning can significantly accelerate and compress deep neural networks. Many channel pruning works use structured sparsity regularization to zero out all the weights in some channels and automatically obtain a structure-sparse network during training. However, these methods apply structured sparsity regularization to each layer separately, omitting the correlations between consecutive layers. In this paper, we first combine one out-channel in the current layer and the corresponding in-channel in the next layer into a regularization group, called an out-in-channel. Our proposed Out-In-Channel Sparsity Regularization (OICSR) considers correlations between successive layers to better retain the predictive power of the compact network. Training with OICSR thoroughly transfers discriminative features into a fraction of the out-in-channels. Correspondingly, OICSR measures channel importance based on statistics computed from two consecutive layers rather than an individual layer. Finally, a global greedy pruning algorithm is designed to remove redundant out-in-channels iteratively. Our method is comprehensively evaluated with various CNN architectures, including CifarNet, AlexNet, ResNet, DenseNet and PreActSeNet, on the CIFAR-10, CIFAR-100 and ImageNet-1K datasets. Notably, on ImageNet-1K, we reduce FLOPs by 37.2% on ResNet-50 while outperforming the original model by 0.22% in top-1 accuracy.
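The grouping idea can be illustrated with a minimal NumPy sketch. It assumes a group-lasso style penalty (the sum of per-group L2 norms), which is a common form of structured sparsity regularization but is not confirmed by the abstract as the exact OICSR formula. The function names, the weight layout `(out_channels, in_channels, k, k)`, and the coefficient `lam` are hypothetical.

```python
import numpy as np


def out_in_channel_group_norms(w_cur, w_next):
    """L2 norm of each out-in-channel group.

    w_cur:  weights of the current layer, shape (C_out, C_in, k, k)
    w_next: weights of the next layer,    shape (C_next, C_out, k, k)
    Group i joins out-channel i of w_cur with in-channel i of w_next.
    """
    if w_cur.shape[0] != w_next.shape[1]:
        raise ValueError("out-channels of w_cur must match in-channels of w_next")
    # Squared norm of each out-channel filter in the current layer.
    out_sq = np.sum(w_cur.reshape(w_cur.shape[0], -1) ** 2, axis=1)
    # Squared norm of each in-channel slice in the next layer.
    in_first = np.moveaxis(w_next, 1, 0)
    in_sq = np.sum(in_first.reshape(in_first.shape[0], -1) ** 2, axis=1)
    return np.sqrt(out_sq + in_sq)


def group_sparsity_penalty(w_cur, w_next, lam=1e-4):
    """Assumed group-lasso penalty: lam times the sum of group norms."""
    return lam * float(np.sum(out_in_channel_group_norms(w_cur, w_next)))
```

Under this assumption, the per-group norms could also serve as a simple importance score computed from two consecutive layers, in the spirit of the channel-importance statistic the abstract mentions.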

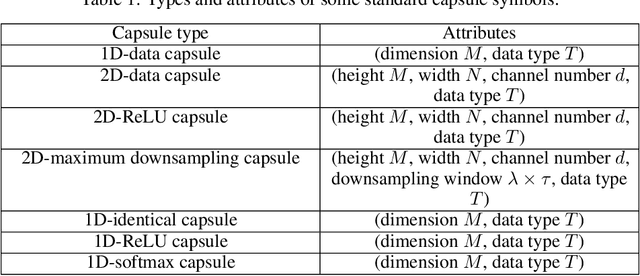

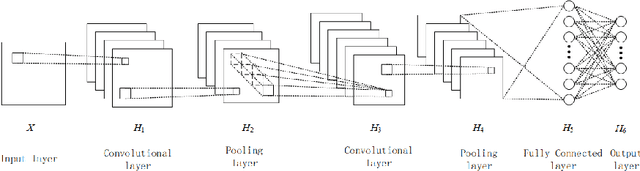

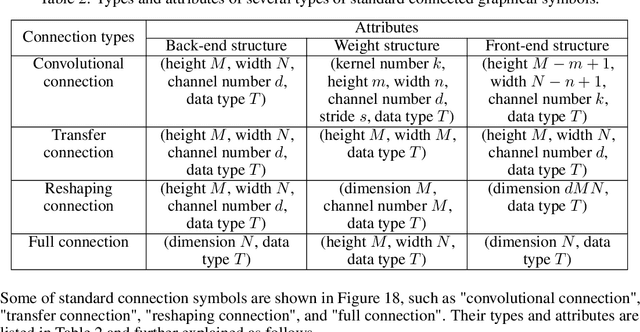

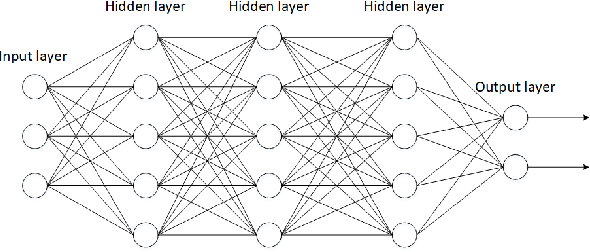

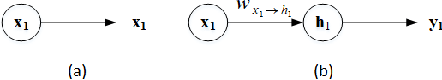

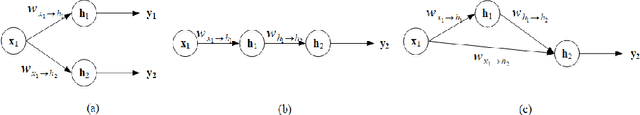

A Capsule-unified Framework of Deep Neural Networks for Graphical Programming

Mar 13, 2019

Abstract: The recent growth of deep learning has produced a large number of deep neural networks, and how to describe these networks in a unified way is becoming an important issue. We first formalize neural networks in a mathematical definition, give their directed graph representations, and prove a generation theorem about the induced networks of connected directed acyclic graphs. Then, using the concept of a capsule to extend neural networks, we set up a capsule-unified framework for deep learning, including a mathematical definition of capsules, an induced model for capsule networks, and a universal backpropagation algorithm for training them. Finally, we discuss potential applications of the framework to graphical programming with standard graphical symbols for capsules, neurons, and connections.

Theory of Cognitive Relativity: A Promising Paradigm for True AI

Dec 01, 2018

Abstract: The rise of deep learning has brought artificial intelligence (AI) to the forefront. The ultimate goal of AI is to realize a machine with a human mind and consciousness, but existing achievements mainly simulate intelligent behavior on computer platforms. These achievements all belong to weak AI rather than strong AI. How to achieve strong AI is not yet known in the field of intelligence science. This field currently calls for a new paradigm, in particular the Theory of Cognitive Relativity (TCR). The TCR aims to summarize a simple and elegant set of first principles about the nature of intelligence, including at least the Principle of World's Relativity and the Principle of Symbol's Relativity. The Principle of World's Relativity states that the subjective world an intelligent agent can observe is strongly constrained by the way it perceives the objective world. The Principle of Symbol's Relativity states that an intelligent agent can use any physical symbol system to describe what it observes in its subjective world. The two principles are derived from scientific facts and life experience. Thought experiments show that they are important for understanding high-level intelligence and necessary for establishing a scientific theory of mind and consciousness. Rather than pursuing brain-like intelligence, the TCR advocates a promising change in direction toward true AI, i.e. strong AI with artificial consciousness, in particular one different from that of humans and animals. Furthermore, a TCR creed is presented and extended to reveal the secrets of consciousness and to guide the realization of conscious machines. In the sense that true AI could be implemented in diverse ways unlike the brain, the TCR would probably drive an intelligence revolution in combination with some other first principles.

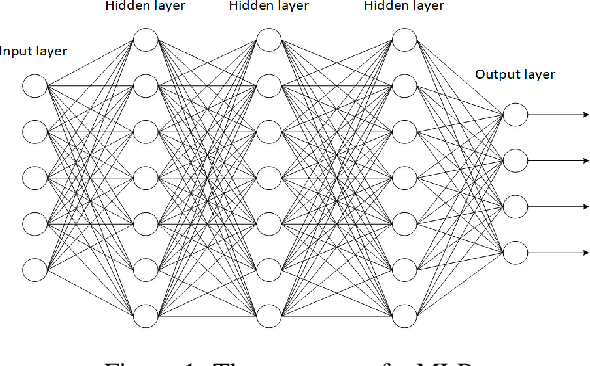

A Unified Framework of Deep Neural Networks by Capsules

May 10, 2018

Abstract: With the growth of deep learning, how to describe deep neural networks in a unified way is becoming an important issue. We first formalize neural networks mathematically with their directed graph representations, and prove a generation theorem about the induced networks of connected directed acyclic graphs. Then, we set up a unified framework for deep learning with capsule networks. This capsule framework could simplify the description of existing deep neural networks and provide a theoretical basis for graphical design and programming techniques for deep learning models, and would thus be of great significance to the advancement of deep learning.

Can Machines Think in Radio Language?

Dec 17, 2017

Abstract: People can think in auditory, visual and tactile forms of language, and so, in principle, can machines. But is it possible for machines to think in radio language? According to a first principle presented for general intelligence, i.e. the principle of language's relativity, the answer may offer an exceptional solution for robot astronauts to talk with each other in space exploration.

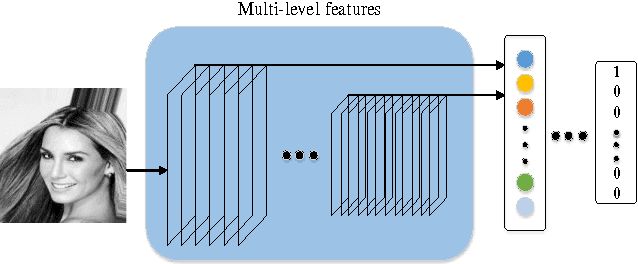

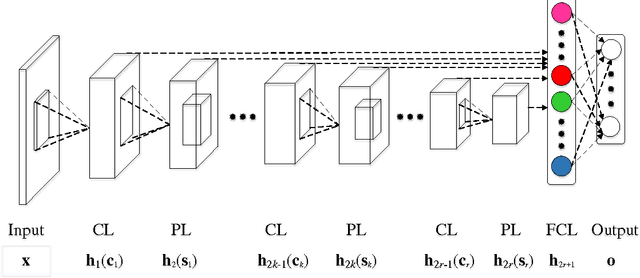

A concatenating framework of shortcut convolutional neural networks

Oct 03, 2017

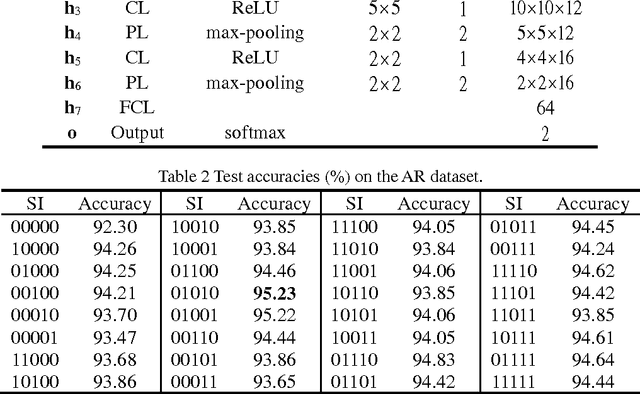

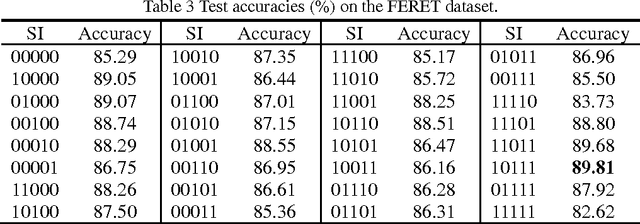

Abstract: It is well accepted that convolutional neural networks play an important role in learning excellent features for image classification and recognition. However, traditional networks connect only adjacent layers, which limits the integration of multi-scale information. To further improve their performance, we present a concatenating framework of shortcut convolutional neural networks. This framework concatenates multi-scale features through shortcut connections to the fully-connected layer, which feeds directly into the output layer. We conduct extensive experiments to investigate the performance of the shortcut convolutional neural networks on many benchmark visual datasets for different tasks. The datasets include AR, FERET, FaceScrub and CelebA for gender classification, CUReT for texture classification, MNIST for digit recognition, and CIFAR-10 for object recognition. Experimental results show that the shortcut convolutional neural networks achieve better results than the traditional ones on these tasks, with more stability across different settings of pooling schemes, activation functions, optimizations, initializations, kernel numbers and kernel sizes.
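The concatenation step described above can be sketched roughly as follows. This is a minimal NumPy illustration under assumptions, not the authors' implementation: the function names, the feature-map layout `(batch, channels, height, width)`, and the choice to flatten each map before concatenation are all hypothetical.

```python
import numpy as np


def concat_shortcut_features(feature_maps):
    """Flatten feature maps from several layers and concatenate them per sample.

    feature_maps: list of arrays shaped (batch, channels, height, width),
    taken from layers at different depths (i.e. different scales).
    Returns an array of shape (batch, total_features).
    """
    batch = feature_maps[0].shape[0]
    flat = [f.reshape(batch, -1) for f in feature_maps]
    return np.concatenate(flat, axis=1)


def fully_connected(x, weights, bias):
    """Plain affine layer applied to the concatenated multi-scale features."""
    return x @ weights + bias


# Usage: two hypothetical feature maps from a shallow and a deep layer.
shallow = np.random.rand(4, 6, 12, 12)   # earlier layer, larger spatial size
deep = np.random.rand(4, 16, 4, 4)       # later layer, smaller spatial size
features = concat_shortcut_features([shallow, deep])
hidden = fully_connected(features,
                         np.zeros((features.shape[1], 32)),
                         np.zeros(32))
```

Here the shortcut is simply the direct path from each intermediate feature map to the concatenated vector, so the fully-connected layer sees features at several scales at once.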
