A Capsule-unified Framework of Deep Neural Networks for Graphical Programming

Mar 13, 2019

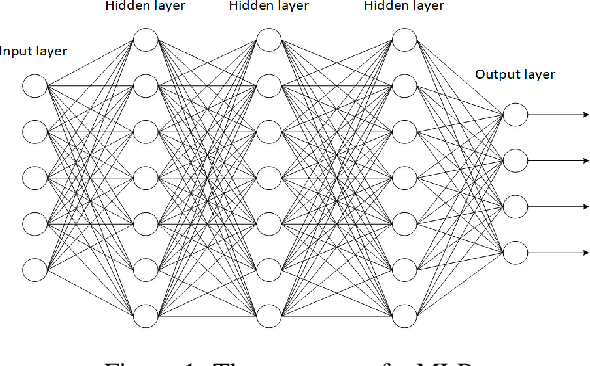

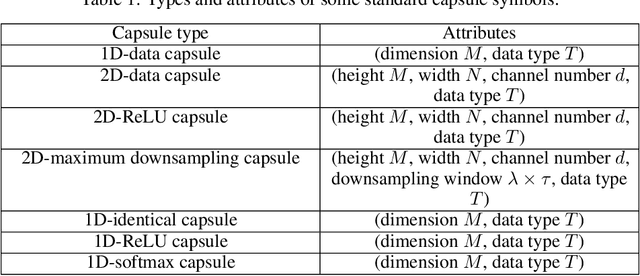

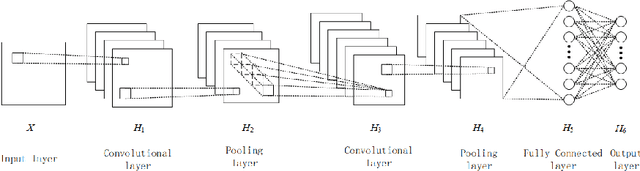

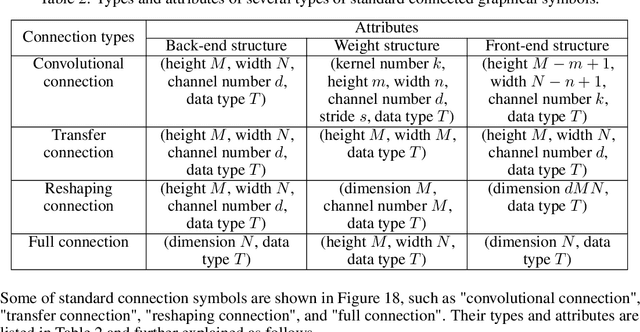

The recent growth of deep learning has produced a large number of deep neural networks, and describing them in a unified way is becoming an important issue. We first formalize neural networks in a mathematical definition, give their directed graph representations, and prove a generation theorem about the networks induced by connected directed acyclic graphs. Then, using the concept of a capsule to extend neural networks, we set up a capsule-unified framework for deep learning, including a mathematical definition of capsules, an induced model for capsule networks, and a universal backpropagation algorithm for training them. Finally, we discuss potential applications of the framework to graphical programming with standard graphical symbols for capsules, neurons, and connections.
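To make the idea of a network induced by a connected directed acyclic graph more concrete, the following is a minimal sketch, not the paper's formal definition. It assumes each non-source node applies `tanh` to a weighted sum of its predecessors plus a bias; the function name `forward`, the dictionary layout, and the example graph are all hypothetical choices made for illustration.

```python
import math
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def forward(preds, weights, biases, inputs):
    """Evaluate a network induced by a DAG (illustrative assumption).

    preds:   node -> list of predecessor nodes (source nodes map to [])
    weights: (pred, node) -> float, one weight per edge
    biases:  node -> float, for non-source nodes only
    inputs:  source node -> float
    """
    values = dict(inputs)
    # static_order() yields every node after all of its predecessors,
    # and raises CycleError if the graph is not acyclic.
    for node in TopologicalSorter(preds).static_order():
        if not preds.get(node):
            continue  # source node: its value is taken from `inputs`
        total = biases[node] + sum(
            weights[(p, node)] * values[p] for p in preds[node]
        )
        values[node] = math.tanh(total)  # assumed activation function
    return values


# Hypothetical graph with a skip connection from x1 directly to y.
preds = {"x1": [], "x2": [], "h": ["x1", "x2"], "y": ["h", "x1"]}
weights = {("x1", "h"): 0.5, ("x2", "h"): -0.5, ("h", "y"): 1.0, ("x1", "y"): 0.25}
biases = {"h": 0.0, "y": 0.0}
out = forward(preds, weights, biases, {"x1": 1.0, "x2": 1.0})
```

Because the evaluation order is derived from the graph rather than from a fixed layer sequence, the same routine handles layered networks and networks with skip connections alike, which is the sense in which a graph description can cover many architectures at once.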
