Yogesh Simmhan

Optimizing Federated Learning for Scalable Power-demand Forecasting in Microgrids

Aug 11, 2025

Abstract:Real-time monitoring of power consumption in cities and micro-grids through the Internet of Things (IoT) can help forecast future demand and optimize grid operations. But moving all consumer-level usage data to the cloud for predictions and analysis at fine time scales can expose activity patterns. Federated Learning (FL) is a privacy-sensitive collaborative DNN training approach that retains data on edge devices, trains the models on private data locally, and aggregates the local models in the cloud. But key challenges exist: (i) clients can have non-independently identically distributed (non-IID) data, and (ii) the learning should be computationally cheap while scaling to 1000s of (unseen) clients. In this paper, we develop and evaluate several optimizations to FL training across edge and cloud for time-series demand forecasting in micro-grids and city-scale utilities using DNNs to achieve a high prediction accuracy while minimizing the training cost. We showcase the benefit of using exponentially weighted loss while training and show that it further improves the prediction of the final model. Finally, we evaluate these strategies by validating over 1000s of clients for three states in the US from the OpenEIA corpus, and performing FL both in a pseudo-distributed setting and a Pi edge cluster. The results highlight the benefits of the proposed methods over baselines like ARIMA and DNNs trained for individual consumers, which are not scalable.
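The abstract does not say how the exponentially weighted loss is defined, so the following is only an illustrative sketch of one plausible reading: a mean squared error in which the most recent sample has weight 1 and each older sample is scaled down by a constant factor. The function name `exp_weighted_mse` and the `decay` parameter are hypothetical and are not taken from the paper.

```python
def exp_weighted_mse(y_true, y_pred, decay=0.9):
    """Weighted MSE where sample i (0 = oldest) gets weight decay**(n-1-i).

    Assumption: recency weighting; the paper may weight along a different axis.
    """
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    # Newest sample (index n-1) has weight 1; older samples decay geometrically.
    weights = [decay ** (n - 1 - i) for i in range(n)]
    total = sum(weights)
    weighted_sq_err = sum(
        w * (t - p) ** 2 for w, t, p in zip(weights, y_true, y_pred)
    )
    # Normalize by the weight sum so decay=1.0 reduces to the plain MSE.
    return weighted_sq_err / total


if __name__ == "__main__":
    actual = [1.0, 2.0, 3.0]
    forecast = [1.0, 2.0, 5.0]  # only the newest sample is mispredicted
    print(exp_weighted_mse(actual, forecast, decay=1.0))
    print(exp_weighted_mse(actual, forecast, decay=0.5))
```

With `decay=1.0` the function is an ordinary MSE; smaller values penalize errors on recent samples more heavily than errors on older ones.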

D3FL: Data Distribution and Detrending for Robust Federated Learning in Non-linear Time-series Data

Jul 15, 2025

Abstract:With advancements in computing and communication technologies, the Internet of Things (IoT) has seen significant growth. IoT devices typically collect data from various sensors, such as temperature, humidity, and energy meters. Much of this data is temporal in nature. Traditionally, data from IoT devices is centralized for analysis, but this approach introduces delays and increased communication costs. Federated learning (FL) has emerged as an effective alternative, allowing for model training across distributed devices without the need to centralize data. In many applications, such as smart home energy and environmental monitoring, the data collected by IoT devices across different locations can exhibit significant variation in trends and seasonal patterns. Accurately forecasting such non-stationary, non-linear time-series data is crucial for applications like energy consumption estimation and weather forecasting. However, these data variations can severely impact prediction accuracy. The key contributions of this paper are: (1) Investigating how non-linear, non-stationary time-series data distributions, like generalized extreme value (gen-extreme) and log-normal distributions, affect FL performance. (2) Analyzing how different detrending techniques for non-linear time-series data influence the forecasting model's performance in an FL setup. We generated several synthetic time-series datasets using non-linear data distributions and trained an LSTM-based forecasting model using both centralized and FL approaches. Additionally, we evaluated the impact of detrending on real-world datasets with non-linear time-series data distributions. Our experimental results show that: (1) FL performs worse than centralized approaches when dealing with non-linear data distributions. (2) The use of appropriate detrending techniques improves FL performance, reducing loss across different data distributions.
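The abstract does not name the detrending techniques it compares. As a rough illustration of what detrending means before a series is passed to a forecasting model, the sketch below removes a least-squares straight-line trend. This is only the simplest such technique and is not claimed to be one of those evaluated in the paper; the function name `linear_detrend` is hypothetical.

```python
def linear_detrend(series):
    """Subtract the least-squares line fitted against the index 0..n-1.

    Returns (residuals, slope, intercept) so the trend can be added back
    to a forecast made on the residuals.
    """
    n = len(series)
    if n < 2:
        raise ValueError("need at least two points to fit a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(series) / n
    # Closed-form ordinary least squares for a single regressor.
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    sxy = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(series))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    residuals = [y - (intercept + slope * i) for i, y in enumerate(series)]
    return residuals, slope, intercept


if __name__ == "__main__":
    readings = [1.0, 3.2, 4.9, 7.1, 9.0]  # made-up values with an upward trend
    residuals, slope, intercept = linear_detrend(readings)
    print(slope, intercept, residuals)
```

In a federated setup each client would presumably detrend its own local series, but how the paper handles that step is not stated in the abstract.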

Towards Perception-based Collision Avoidance for UAVs when Guiding the Visually Impaired

Jun 17, 2025

Abstract:Autonomous navigation by drones using onboard sensors combined with machine learning and computer vision algorithms is impacting a number of domains, including agriculture, logistics, and disaster management. In this paper, we examine the use of drones for assisting visually impaired people (VIPs) in navigating through outdoor urban environments. Specifically, we present a perception-based path planning system for local planning around the neighborhood of the VIP, integrated with a global planner based on GPS and maps for coarse planning. We represent the problem using a geometric formulation and propose a multi-DNN-based framework for obstacle avoidance of the UAV as well as the VIP. Our evaluations, conducted on a drone-human system in a university campus environment, verify the feasibility of our algorithms in three scenarios: when the VIP walks on a footpath, near parked vehicles, and in a crowded street.

Optimizing Federated Learning using Remote Embeddings for Graph Neural Networks

Jun 14, 2025

Abstract:Graph Neural Networks (GNNs) have experienced rapid advancements in recent years due to their ability to learn meaningful representations from graph data structures. Federated Learning (FL) has emerged as a viable machine learning approach for training a shared model on decentralized data, addressing privacy concerns while leveraging parallelism. Existing methods that address the unique requirements of federated GNN training using remote embeddings to enhance convergence accuracy are limited by their diminished performance due to large communication costs with a shared embedding server. In this paper, we present OpES, an optimized federated GNN training framework that uses remote neighbourhood pruning, and overlaps pushing of embeddings to the server with local training to reduce the network costs and training time. The modest drop in per-round accuracy due to pre-emptive push of embeddings is outstripped by the reduction in per-round training time for large and dense graphs like Reddit and Products, converging up to ≈2× faster than the state-of-the-art technique using an embedding server and giving up to 20% better accuracy than vanilla federated GNN learning.

Distance Estimation to Support Assistive Drones for the Visually Impaired using Robust Calibration

Mar 31, 2025

Abstract:Autonomous navigation by drones using onboard sensors, combined with deep learning and computer vision algorithms, is impacting a number of domains. We examine the use of drones to autonomously assist Visually Impaired People (VIPs) in navigating outdoor environments while avoiding obstacles. Here, we present NOVA, a robust calibration technique using depth maps to estimate absolute distances to obstacles in a campus environment. NOVA uses a dynamic-update method that can adapt to adversarial scenarios. We compare NOVA with SOTA depth map approaches, and with geometric and regression-based baseline models, for distance estimation to VIPs and other obstacles in diverse and dynamic conditions. We also provide exhaustive evaluations to validate the robustness and generalizability of our methods. NOVA predicts distances to the VIP with an error of <30cm and to different obstacles like cars and bicycles with a maximum error of 60cm, both better than the baselines. NOVA also clearly outperforms SOTA depth map methods, by 5.3-14.6x.

AIOpsLab: A Holistic Framework to Evaluate AI Agents for Enabling Autonomous Clouds

Jan 12, 2025

Abstract:AI for IT Operations (AIOps) aims to automate complex operational tasks, such as fault localization and root cause analysis, to reduce human workload and minimize customer impact. While traditional DevOps tools and AIOps algorithms often focus on addressing isolated operational tasks, recent advances in Large Language Models (LLMs) and AI agents are revolutionizing AIOps by enabling end-to-end and multitask automation. This paper envisions a future where AI agents autonomously manage operational tasks throughout the entire incident lifecycle, leading to self-healing cloud systems, a paradigm we term AgentOps. Realizing this vision requires a comprehensive framework to guide the design, development, and evaluation of these agents. To this end, we present AIOpsLab, a framework that not only deploys microservice cloud environments, injects faults, generates workloads, and exports telemetry data but also orchestrates these components and provides interfaces for interacting with and evaluating agents. We discuss the key requirements for such a holistic framework and demonstrate how AIOpsLab can facilitate the evaluation of next-generation AIOps agents. Through evaluations of state-of-the-art LLM agents within the benchmark created by AIOpsLab, we provide insights into their capabilities and limitations in handling complex operational tasks in cloud environments.

PowerTrain: Fast, Generalizable Time and Power Prediction Models to Optimize DNN Training on Accelerated Edges

Jul 18, 2024

Abstract:Accelerated edge devices, like Nvidia's Jetson with 1000+ CUDA cores, are increasingly used for DNN training and federated learning, rather than just for inferencing workloads. A unique feature of these compact devices is their fine-grained control over CPU, GPU, memory frequencies, and active CPU cores, which can limit their power envelope in a constrained setting while throttling the compute performance. Given this vast 10k+ parameter space, selecting a power mode for dynamically arriving training workloads to exploit power-performance trade-offs requires costly profiling for each new workload, or is done ad hoc. We propose PowerTrain, a transfer-learning approach to accurately predict the power and time consumed when training a given DNN workload (model + dataset) using any specified power mode (CPU/GPU/memory frequencies, core-count). It requires a one-time offline profiling of 1000s of power modes for a reference DNN workload on a single Jetson device (Orin AGX) to build Neural Network (NN) based prediction models for time and power. These NN models are subsequently transferred (retrained) for a new DNN workload, or even a different Jetson device, with minimal additional profiling of just 50 power modes to make accurate time and power predictions. These are then used to rapidly construct the Pareto front and select the optimal power mode for the new workload. PowerTrain's predictions are robust to new workloads, exhibiting a low MAPE of <6% for power and <15% for time on six new training workloads for up to 4400 power modes, when transferred from a ResNet reference workload on Orin AGX. It is also resilient when transferred to two entirely new Jetson devices with prediction errors of <14.5% and <11%. These outperform baseline predictions by more than 10% and baseline optimizations by up to 45% on time and 88% on power.
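The Pareto-front step mentioned in the abstract can be illustrated with a small generic sketch. It assumes each power mode has already been reduced to a predicted (time, power) pair, that both quantities are to be minimized, and that the "optimal" mode is the fastest one within a power budget. The function names, the budget criterion, and the sample numbers are all hypothetical; the abstract does not describe PowerTrain's actual selection procedure.

```python
def pareto_front(points):
    """Return the non-dominated (time, power) pairs, sorted by time.

    A point is dominated if another point is no worse in both time and
    power and strictly better in at least one.
    """
    front = []
    best_power = float("inf")
    # After sorting by (time, power), a point is non-dominated exactly when
    # its power is strictly lower than every faster-or-equal point seen so far.
    for time, power in sorted(set(points)):
        if power < best_power:
            front.append((time, power))
            best_power = power
    return front


def fastest_under_budget(front, power_budget):
    """Pick the lowest-time point whose power is within the budget, or None."""
    feasible = [pt for pt in front if pt[1] <= power_budget]
    return min(feasible, default=None)


if __name__ == "__main__":
    # Made-up predictions: (minutes per epoch, watts) for five power modes.
    predicted = [(10, 50), (12, 40), (11, 60), (15, 40), (20, 30)]
    front = pareto_front(predicted)
    print(front)
    print(fastest_under_budget(front, power_budget=45))
```

Sorting once and sweeping keeps the construction at O(n log n), which matters when thousands of power modes are scored from model predictions.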

Building AI Agents for Autonomous Clouds: Challenges and Design Principles

Jul 16, 2024

Abstract:The rapid growth in the use of Large Language Models (LLMs) and AI Agents as part of software development and deployment is revolutionizing the information technology landscape. While code generation receives significant attention, a higher-impact application lies in using AI agents for operational resilience of cloud services, which currently require significant human effort and domain knowledge. There is a growing interest in AI for IT Operations (AIOps), which aims to automate complex operational tasks, like fault localization and root cause analysis, thereby reducing human intervention and customer impact. However, achieving the vision of autonomous and self-healing clouds through AIOps is hampered by the lack of standardized frameworks for building, evaluating, and improving AIOps agents. This vision paper lays the groundwork for such a framework by first framing the requirements and then discussing design decisions that satisfy them. We also propose AIOpsLab, a prototype implementation leveraging an agent-cloud interface that orchestrates an application, injects real-time faults using chaos engineering, and interfaces with an agent to localize and resolve the faults. We report promising results and lay the groundwork to build a modular and robust framework for building, evaluating, and improving agents for autonomous clouds.

CORNET 2.0: A Co-Simulation Middleware for Robot Networks

Sep 14, 2021

Abstract:We present a networked co-simulation framework for multi-robot systems applications. We require a simulation framework that captures both physical interactions and communications aspects to effectively design such complex systems. This is necessary to co-design the multi-robots' autonomy logic and the communication protocols. The proposed framework extends existing tools to simulate the robot's autonomy and network-related aspects. We have used Gazebo with ROS/ROS2 to develop the autonomy logic for robots and mininet-WiFi as the network simulator to capture the cyber-physical systems properties of the multi-robot system. This framework addresses the need to seamlessly integrate the two simulation environments by synchronizing mobility and time, allowing for easy migration of the algorithms to real platforms.

Heuristic Algorithms for Co-scheduling of Edge Analytics and Routes for UAV Fleet Missions

Feb 06, 2021

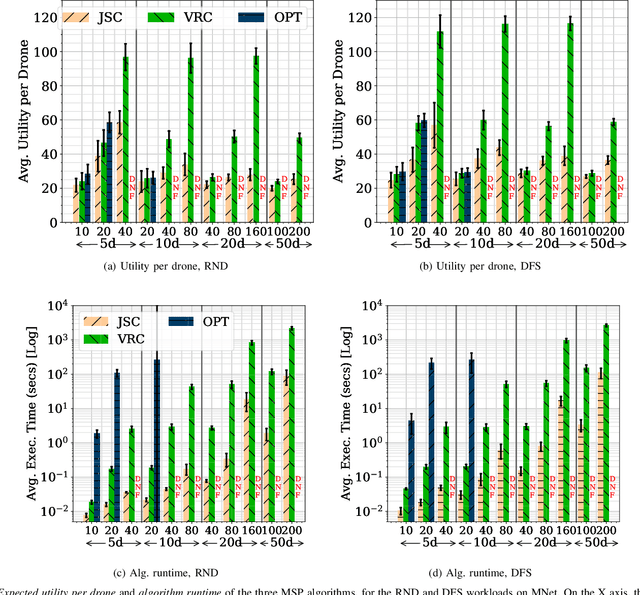

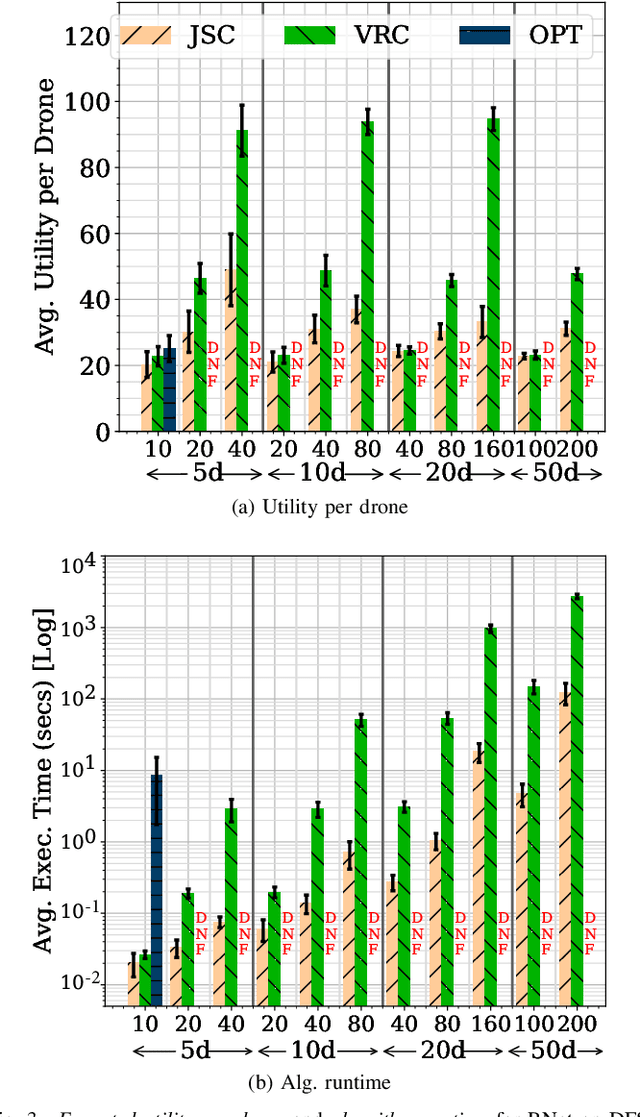

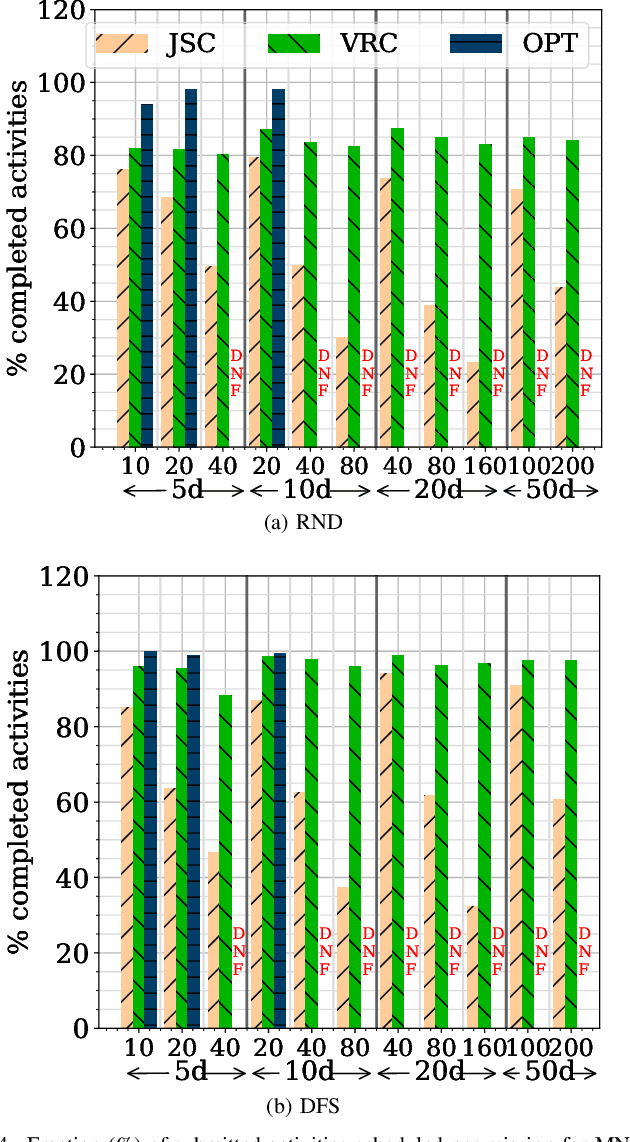

Abstract:Unmanned Aerial Vehicles (UAVs) or drones are increasingly used for urban applications like traffic monitoring and construction surveys. Autonomous navigation allows drones to visit waypoints and accomplish activities as part of their mission. A common activity is to hover and observe a location using on-board cameras. Advances in Deep Neural Networks (DNNs) allow such videos to be analyzed for automated decision making. UAVs also host edge computing capability for on-board inferencing by such DNNs. To this end, for a fleet of drones, we propose a novel Mission Scheduling Problem (MSP) that co-schedules the flight routes to visit and record video at waypoints, and their subsequent on-board edge analytics. The proposed schedule maximizes the utility from the activities while meeting activity deadlines as well as energy and computing constraints. We first prove that MSP is NP-hard and then optimally solve it by formulating a mixed integer linear programming (MILP) problem. Next, we design two efficient heuristic algorithms, JSC and VRC, that provide fast sub-optimal solutions. Evaluation of these three schedulers using real drone traces demonstrates utility-runtime trade-offs under diverse workloads.
