Yijie Peng

LLM-Inspired Pretrain-Then-Finetune for Small-Data, Large-Scale Optimization

Feb 03, 2026

Abstract: We consider small-data, large-scale decision problems in which a firm must make many operational decisions simultaneously (e.g., across a large product portfolio) while observing only a few, potentially noisy, data points per instance. Inspired by the success of large language models (LLMs), we propose a pretrain-then-finetune approach built on a designed Transformer model to address this challenge. The model is first pretrained on large-scale, domain-informed synthetic data that encode managerial knowledge and structural features of the decision environment, and is then fine-tuned on real observations. This new pipeline offers two complementary advantages: pretraining injects domain knowledge into the learning process and enables the training of high-capacity models using abundant synthetic data, while fine-tuning adapts the pretrained model to the operational environment and improves alignment with the true data-generating regime. While we have leveraged the Transformer's state-of-the-art representational capacity, particularly its attention mechanism, to efficiently extract cross-task structure, our approach is not an off-the-shelf application. Instead, it relies on problem-specific architectural design and a tailored training procedure to match the decision setting. Theoretically, we develop the first comprehensive error analysis regarding Transformer learning in relevant contexts, establishing nonasymptotic guarantees that validate the method's effectiveness. Critically, our analysis reveals how pretraining and fine-tuning jointly determine performance, with the dominant contribution governed by whichever is more favorable. In particular, fine-tuning exhibits an economies-of-scale effect, whereby transfer learning becomes increasingly effective as the number of instances grows.

Stochastic Approximation Methods for Distortion Risk Measure Optimization

Oct 06, 2025

Abstract: Distortion Risk Measures (DRMs) capture risk preferences in decision-making and serve as general criteria for managing uncertainty. This paper proposes gradient descent algorithms for DRM optimization based on two dual representations: the Distortion-Measure (DM) form and Quantile-Function (QF) form. The DM-form employs a three-timescale algorithm to track quantiles, compute their gradients, and update decision variables, utilizing the Generalized Likelihood Ratio and kernel-based density estimation. The QF-form provides a simpler two-timescale approach that avoids the need for complex quantile gradient estimation. A hybrid form integrates both approaches, applying the DM-form for robust performance around distortion function jumps and the QF-form for efficiency in smooth regions. Proofs of strong convergence and convergence rates for the proposed algorithms are provided. In particular, the DM-form achieves an optimal rate of $O(k^{-4/7})$, while the QF-form attains a faster rate of $O(k^{-2/3})$. Numerical experiments confirm their effectiveness and demonstrate substantial improvements over baselines in robust portfolio selection tasks. The method's scalability is further illustrated through integration into deep reinforcement learning. Specifically, a DRM-based Proximal Policy Optimization algorithm is developed and applied to multi-echelon dynamic inventory management, showcasing its practical applicability.

Closing the Loop: Coordinating Inventory and Recommendation via Deep Reinforcement Learning on Multiple Timescales

Oct 05, 2025

Abstract: Effective cross-functional coordination is essential for enhancing firm-wide profitability, particularly in the face of growing organizational complexity and scale. Recent advances in artificial intelligence, especially in reinforcement learning (RL), offer promising avenues to address this fundamental challenge. This paper proposes a unified multi-agent RL framework tailored for joint optimization across distinct functional modules, exemplified via coordinating inventory replenishment and personalized product recommendation. We first develop an integrated theoretical model to capture the intricate interplay between these functions and derive analytical benchmarks that characterize optimal coordination. The analysis reveals synchronized adjustment patterns across products and over time, highlighting the importance of coordinated decision-making. Leveraging these insights, we design a novel multi-timescale multi-agent RL architecture that decomposes policy components according to departmental functions and assigns distinct learning speeds based on task complexity and responsiveness. Our model-free multi-agent design improves scalability and deployment flexibility, while multi-timescale updates enhance convergence stability and adaptability across heterogeneous decisions. We further establish the asymptotic convergence of the proposed algorithm. Extensive simulation experiments demonstrate that the proposed approach significantly improves profitability relative to siloed decision-making frameworks, while the behaviors of the trained RL agents align closely with the managerial insights from our theoretical model. Taken together, this work provides a scalable, interpretable RL-based solution to enable effective cross-functional coordination in complex business settings.

Forward Learning with Differential Privacy

Apr 01, 2025

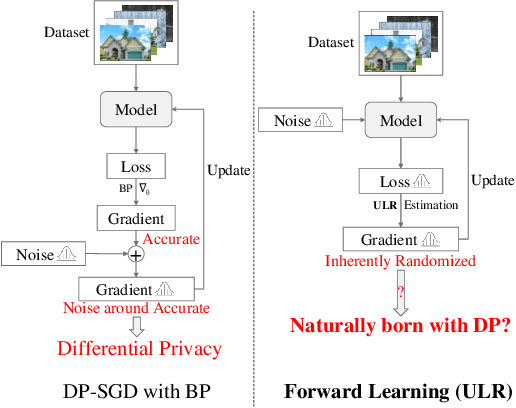

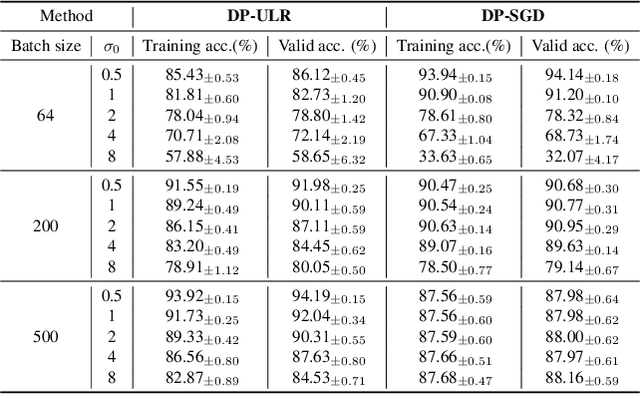

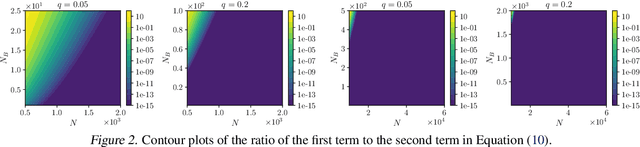

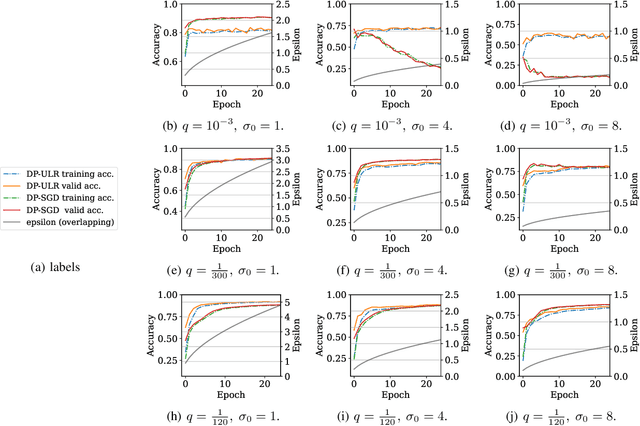

Abstract: Differential privacy (DP) in deep learning is a critical concern as it ensures the confidentiality of training data while maintaining model utility. Existing DP training algorithms provide privacy guarantees by clipping and then injecting external noise into sample gradients computed by the backpropagation algorithm. Unlike backpropagation, forward-learning algorithms based on perturbation inherently add noise during the forward pass and utilize randomness to estimate the gradients. Although these algorithms are non-privatized, the introduction of noise during the forward pass indirectly provides internal randomness protection to the model parameters and their gradients, suggesting the potential for naturally providing differential privacy. In this paper, we propose a privatized forward-learning algorithm, Differential Private Unified Likelihood Ratio (DP-ULR), and demonstrate its differential privacy guarantees. DP-ULR features a novel batch sampling operation with rejection, for which we provide a theoretical analysis in conjunction with classic differential privacy mechanisms. DP-ULR is also underpinned by a theoretically guided privacy controller that dynamically adjusts noise levels to manage privacy costs in each training step. Our experiments indicate that DP-ULR achieves competitive performance compared to traditional differential privacy training algorithms based on backpropagation, maintaining nearly the same privacy loss limits.
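
The clip-then-noise step that the abstract attributes to existing backpropagation-based DP training can be sketched as follows. This is a minimal illustration of the standard mechanism only, not of DP-ULR; the function name `privatize_gradients` and the parameters `clip_norm` and `noise_multiplier` are assumptions introduced here for illustration.

```python
import math
import random

def privatize_gradients(per_sample_grads, clip_norm=1.0, noise_multiplier=1.0, rng=None):
    """Clip each per-sample gradient, sum, add Gaussian noise, then average.

    Hypothetical helper: names and defaults are illustrative assumptions.
    """
    rng = rng or random.Random()
    dim = len(per_sample_grads[0])
    total = [0.0] * dim
    for grad in per_sample_grads:
        # Rescale so that the L2 norm of each sample gradient is at most clip_norm.
        norm = math.sqrt(sum(x * x for x in grad))
        scale = min(1.0, clip_norm / max(norm, 1e-12))
        for j, x in enumerate(grad):
            total[j] += scale * x
    # Noise standard deviation is proportional to the clipping bound.
    sigma = noise_multiplier * clip_norm
    n = len(per_sample_grads)
    return [(t + rng.gauss(0.0, sigma)) / n for t in total]
```

Clipping bounds the influence of any single sample on the summed gradient, which is what allows the added Gaussian noise to be calibrated to `clip_norm`.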

CoNNect: A Swiss-Army-Knife Regularizer for Pruning of Neural Networks

Feb 02, 2025

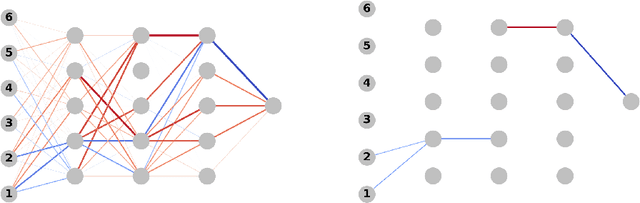

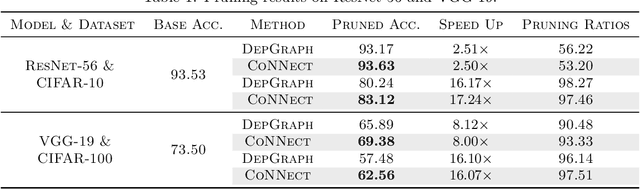

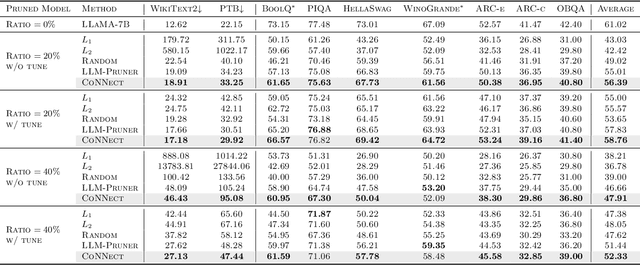

Abstract: Pruning encompasses a range of techniques aimed at increasing the sparsity of neural networks (NNs). These techniques can generally be framed as minimizing a loss function subject to an $L_0$-norm constraint. This paper introduces CoNNect, a novel differentiable regularizer for sparse NN training that ensures connectivity between input and output layers. CoNNect integrates with established pruning strategies and supports both structured and unstructured pruning. We prove that CoNNect approximates $L_0$-regularization, guaranteeing maximally connected network structures while avoiding issues like layer collapse. Numerical experiments demonstrate that CoNNect improves classical pruning strategies and enhances state-of-the-art one-shot pruners, such as DepGraph and LLM-pruner.

Eliminating Ratio Bias for Gradient-based Simulated Parameter Estimation

Nov 20, 2024

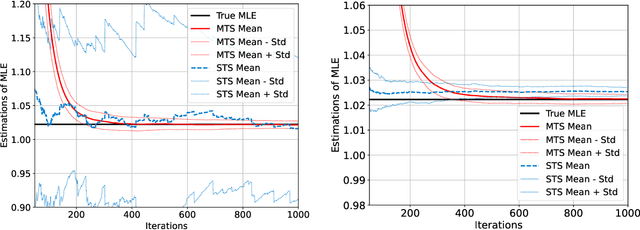

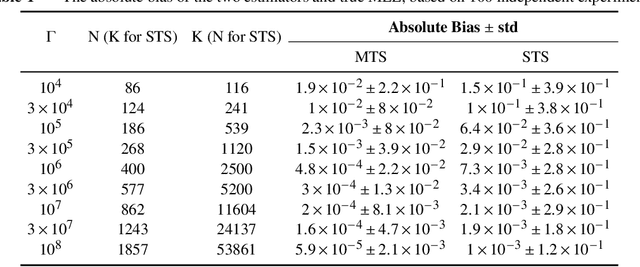

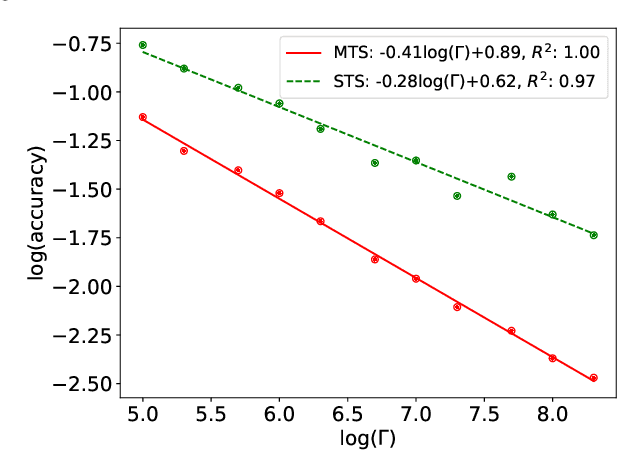

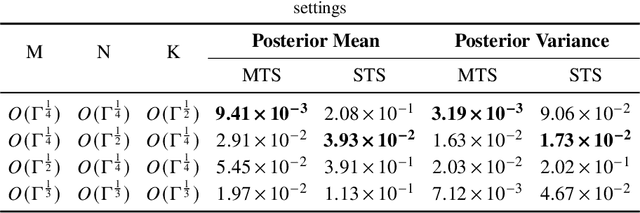

Abstract: This article addresses the challenge of parameter calibration in stochastic models where the likelihood function is not analytically available. We propose a gradient-based simulated parameter estimation framework, leveraging a multi-timescale algorithm that tackles the issue of ratio bias in both maximum likelihood estimation and posterior density estimation problems. Additionally, we introduce a nested simulation optimization structure, providing theoretical analyses including strong convergence, asymptotic normality, convergence rate, and budget allocation strategies for the proposed algorithm. The framework is further extended to neural network training, offering a novel perspective on stochastic approximation in machine learning. Numerical experiments show that our algorithm can improve estimation accuracy and reduce computational cost.

Series Expansion of Probability of Correct Selection for Improved Finite Budget Allocation in Ranking and Selection

Nov 16, 2024

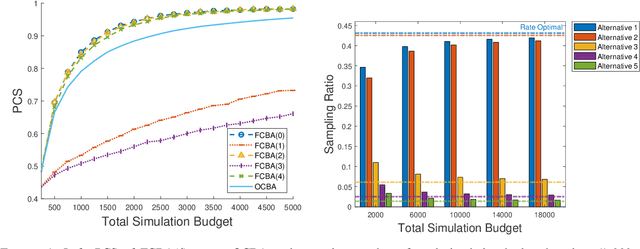

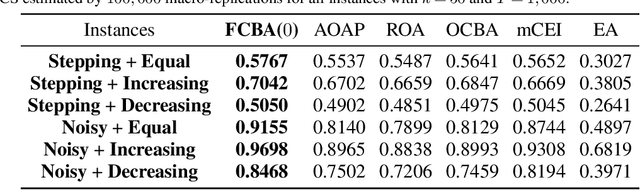

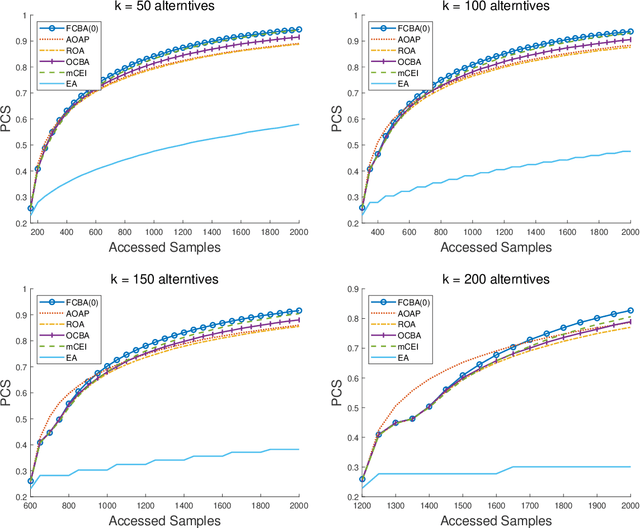

Abstract: This paper addresses the challenge of improving finite sample performance in Ranking and Selection by developing a Bahadur-Rao type expansion for the Probability of Correct Selection (PCS). While traditional large deviations approximations capture PCS behavior in the asymptotic regime, they can lack precision in finite sample settings. Our approach enhances PCS approximation under limited simulation budgets, providing a more accurate characterization of optimal sampling ratios and of optimality conditions that depend on the budget. Algorithmically, we propose a novel finite budget allocation (FCBA) policy, which sequentially estimates the optimality conditions and balances the sampling ratios accordingly. We illustrate numerically on toy examples that our FCBA policy achieves superior PCS performance compared to the traditional methods tested. As an extension, we note that the non-monotonic PCS behavior described in the literature for low-confidence scenarios can be attributed to the neglect of simultaneous incorrect binary comparisons in PCS approximations. We provide a refined expansion and a tailored allocation strategy to handle low-confidence scenarios, addressing the non-monotonicity issue.

Dual-Agent Deep Reinforcement Learning for Dynamic Pricing and Replenishment

Oct 28, 2024

Abstract: We study the dynamic pricing and replenishment problems under inconsistent decision frequencies. Unlike traditional demand assumptions, demand here is discrete and the parameter of the Poisson distribution is a function of price, which complicates the analysis of the problem's properties. We demonstrate the concavity of the single-period profit function with respect to product price and inventory within their respective domains. The demand model is enhanced by integrating a decision tree-based machine learning approach, trained on comprehensive market data. Employing a two-timescale stochastic approximation scheme, we address the discrepancies in decision frequencies between pricing and replenishment, ensuring convergence to a local optimum. We further refine our methodology by incorporating deep reinforcement learning (DRL) techniques and propose a fast-slow dual-agent DRL algorithm. In this approach, two agents handle pricing and inventory and are updated on different timescales. Numerical results from both single-product and multi-product scenarios validate the effectiveness of our methods.

FLOPS: Forward Learning with OPtimal Sampling

Oct 08, 2024

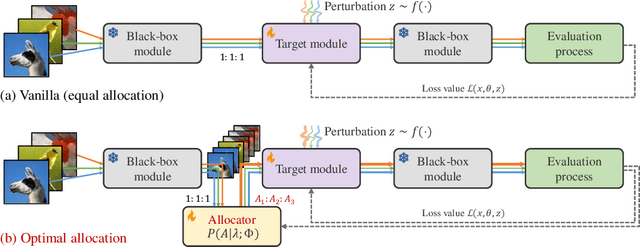

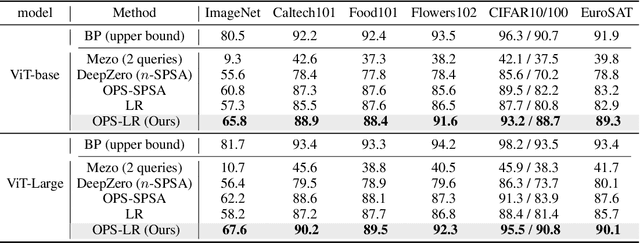

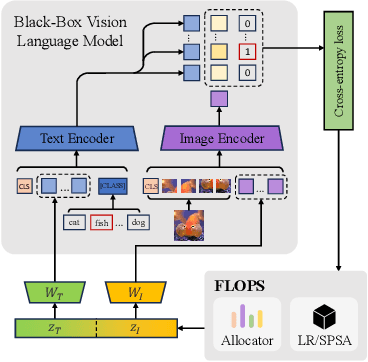

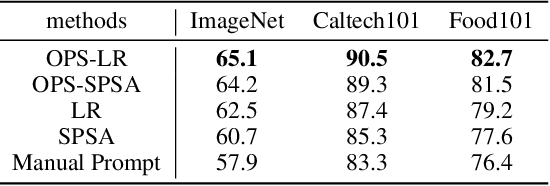

Abstract: Given the limitations of backpropagation, perturbation-based gradient computation methods have recently gained attention for learning with only forward passes, also referred to as queries. Conventional forward learning consumes an enormous number of queries on each data point for accurate gradient estimation through Monte Carlo sampling, which hinders the scalability of those algorithms. However, not all data points deserve equal queries for gradient estimation. In this paper, we study the problem of improving forward-learning efficiency from a novel perspective: how to reduce the gradient estimation variance at minimum cost? To this end, we propose to allocate the optimal number of queries to each data point in a batch during training to achieve a good balance between estimation accuracy and computational efficiency. Specifically, with a simplified proxy objective and a reparameterization technique, we derive a novel plug-and-play query allocator with minimal parameters. Theoretical results verify its optimality. We conduct extensive experiments for fine-tuning Vision Transformers on various datasets and further deploy the allocator to two black-box applications: prompt tuning and multimodal alignment for foundation models. All findings demonstrate that our proposed allocator significantly enhances the scalability of forward-learning algorithms, paving the way for real-world applications.

Forward Learning for Gradient-based Black-box Saliency Map Generation

Mar 22, 2024

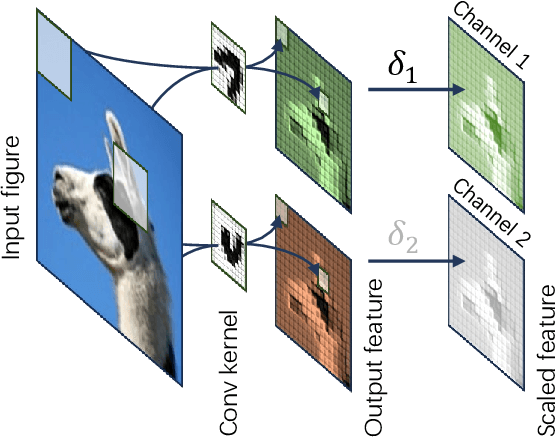

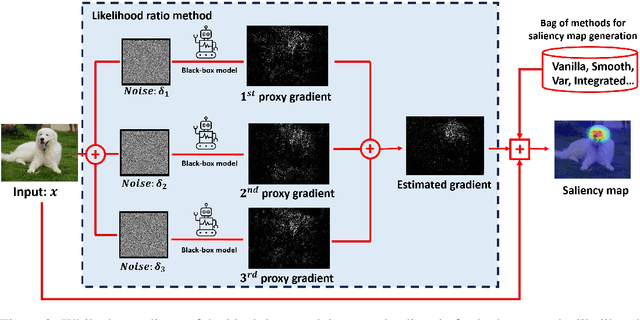

Abstract: Gradient-based saliency maps are widely used to explain deep neural network decisions. However, as models become deeper and more black-box, such as in closed-source APIs like ChatGPT, computing gradients becomes challenging, hindering conventional explanation methods. In this work, we introduce a novel unified framework for estimating gradients in black-box settings and generating saliency maps to interpret model decisions. We employ the likelihood ratio method to estimate output-to-input gradients and utilize them for saliency map generation. Additionally, we propose blockwise computation techniques to enhance estimation accuracy. Extensive experiments in black-box settings validate the effectiveness of our method, demonstrating accurate gradient estimation and explainability of generated saliency maps. Furthermore, we showcase the scalability of our approach by applying it to explain GPT-Vision, revealing the continued relevance of gradient-based explanation methods in the era of large, closed-source, and black-box models.
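
To make the idea of estimating output-to-input gradients from forward queries concrete, here is a minimal sketch of a likelihood ratio estimator under Gaussian input perturbation. It is a generic textbook form, not the paper's blockwise method; the function name `lr_gradient`, the perturbation scale `sigma`, and the baseline subtraction (a common variance-reduction choice) are assumptions made for illustration.

```python
import random

def lr_gradient(f, x, sigma=0.1, num_queries=1000, rng=None):
    """Estimate the gradient of E[f(x + sigma * z)] with respect to x, z ~ N(0, I).

    Uses only forward evaluations of the black-box function f.
    Hypothetical helper: names and defaults are illustrative assumptions.
    """
    rng = rng or random.Random()
    dim = len(x)
    baseline = f(x)  # subtracting f(x) keeps the estimator unbiased and lowers variance
    grad = [0.0] * dim
    for _ in range(num_queries):
        z = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        fx = f([xi + sigma * zi for xi, zi in zip(x, z)])
        # Score-function weight: (f(x + sigma z) - f(x)) * z / sigma
        weight = (fx - baseline) / sigma
        for j in range(dim):
            grad[j] += weight * z[j]
    return [g / num_queries for g in grad]
```

For a linear function such as `f = lambda v: 2 * v[0] - v[1]`, the estimate approaches the true gradient `[2, -1]` as `num_queries` grows; the magnitude of each component could then serve as a per-input saliency score.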
