Yasuyuki Matsushita

Spectral Sensitivity Estimation with an Uncalibrated Diffraction Grating

Aug 01, 2025

Abstract:This paper introduces a practical and accurate calibration method for camera spectral sensitivity using a diffraction grating. Accurate calibration of camera spectral sensitivity is crucial for various computer vision tasks, including color correction, illumination estimation, and material analysis. Unlike existing approaches that require specialized narrow-band filters or reference targets with known spectral reflectances, our method only requires an uncalibrated diffraction grating sheet, readily available off-the-shelf. By capturing images of the direct illumination and its diffracted pattern through the grating sheet, our method estimates both the camera spectral sensitivity and the diffraction grating parameters in a closed-form manner. Experiments on synthetic and real-world data demonstrate that our method outperforms conventional reference target-based methods, underscoring its effectiveness and practicality.
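The abstract does not spell out the image formation model, so the following is only a rough sketch of why a grating spreads wavelengths across the image. It assumes the textbook grating equation d sin(theta) = m lambda at normal incidence; the function name `diffraction_angle` and the 1000 grooves/mm value are illustrative assumptions, not taken from the paper, and this is not the paper's closed-form estimator.

```python
import math

def diffraction_angle(wavelength_nm, grooves_per_mm, order=1):
    """Diffraction angle in radians for light at normal incidence.

    Assumes the textbook grating equation d * sin(theta) = m * lambda,
    where d is the groove spacing and m is the diffraction order.
    """
    pitch_nm = 1e6 / grooves_per_mm  # groove spacing d, in nanometres
    s = order * wavelength_nm / pitch_nm
    if abs(s) > 1.0:
        raise ValueError("this order does not propagate at this wavelength")
    return math.asin(s)

# Longer wavelengths leave the grating at larger angles, so each
# wavelength lands at a different position on the sensor.
for wavelength in (450, 550, 650):
    angle_deg = math.degrees(diffraction_angle(wavelength, 1000))
    print(wavelength, "nm ->", round(angle_deg, 2), "degrees")
```

In this simplified picture the groove spacing is the unknown grating parameter: if it is not known in advance, the mapping from image position to wavelength is not known either, which is why the grating parameters have to be estimated together with the sensitivity.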

HoGS: Unified Near and Far Object Reconstruction via Homogeneous Gaussian Splatting

Mar 25, 2025

Abstract:Novel view synthesis has demonstrated impressive progress recently, with 3D Gaussian splatting (3DGS) offering efficient training time and photorealistic real-time rendering. However, reliance on Cartesian coordinates limits 3DGS's performance on distant objects, which are important for reconstructing unbounded outdoor environments. We found that, despite its simplicity, using homogeneous coordinates, a concept from projective geometry, in the 3DGS pipeline remarkably improves the rendering accuracy of distant objects. We therefore propose Homogeneous Gaussian Splatting (HoGS), which incorporates homogeneous coordinates into the 3DGS framework and provides a unified representation for enhancing near and distant objects. HoGS effectively manages both expansive spatial positions and scales, particularly in unbounded outdoor environments, by adopting projective geometry principles. Experiments show that HoGS significantly enhances accuracy in reconstructing distant objects while maintaining high-quality rendering of nearby objects, along with fast training speed and real-time rendering capability. Our implementations are available on our project page https://kh129.github.io/hogs/.
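As a generic illustration of homogeneous coordinates (not the HoGS implementation), the sketch below shows how a single weight `w` can place a point arbitrarily far away along a fixed direction while every stored number stays bounded. The helper names `to_cartesian` and `distance_from_origin` are hypothetical.

```python
import math

def to_cartesian(point_h):
    """Convert a homogeneous point (x, y, z, w) to Cartesian (x/w, y/w, z/w)."""
    x, y, z, w = point_h
    if w == 0:
        raise ValueError("w = 0 is a point at infinity with no Cartesian form")
    return (x / w, y / w, z / w)

def distance_from_origin(point_h):
    """Euclidean distance of a homogeneous point from the origin."""
    cx, cy, cz = to_cartesian(point_h)
    return math.sqrt(cx * cx + cy * cy + cz * cz)

# The direction (1, 2, 2) stays fixed; only w changes.
near = (1.0, 2.0, 2.0, 1.0)
far = (1.0, 2.0, 2.0, 0.001)
print(distance_from_origin(near))
print(distance_from_origin(far))
```

Shrinking `w` toward zero moves the point toward infinity without the stored coordinates growing, which gives some intuition for why such a parameterization can suit unbounded scenes.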

Shadow Art Kanji: Inverse Rendering Application

Mar 15, 2025

Abstract:Finding a balance between artistic beauty and machine-generated imagery is always a difficult task. This project seeks to create 3D models that, when illuminated, cast shadows resembling Kanji characters. It aims to combine artistic expression with computational techniques, providing an accurate and efficient approach to visualizing these Japanese characters through shadows.

Multi-View Azimuth Stereo via Tangent Space Consistency

Mar 29, 2023

Abstract:We present a method for 3D reconstruction only using calibrated multi-view surface azimuth maps. Our method, multi-view azimuth stereo, is effective for textureless or specular surfaces, which are difficult for conventional multi-view stereo methods. We introduce the concept of tangent space consistency: Multi-view azimuth observations of a surface point should be lifted to the same tangent space. Leveraging this consistency, we recover the shape by optimizing a neural implicit surface representation. Our method harnesses the robust azimuth estimation capabilities of photometric stereo methods or polarization imaging while bypassing potentially complex zenith angle estimation. Experiments using azimuth maps from various sources validate the accurate shape recovery with our method, even without zenith angles.

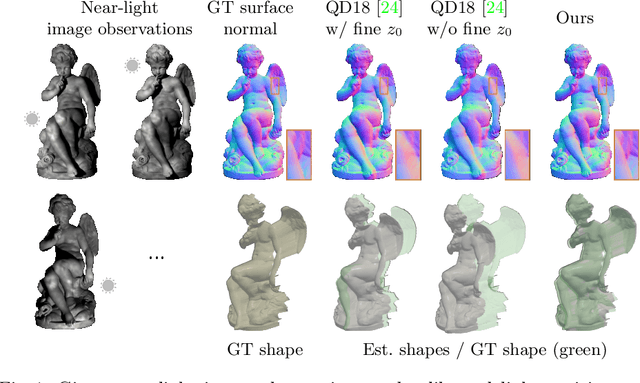

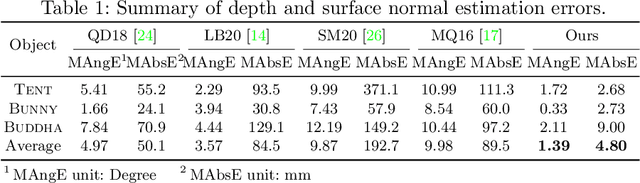

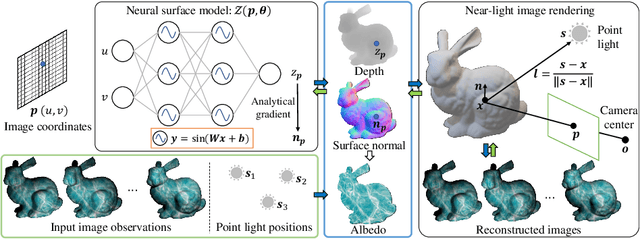

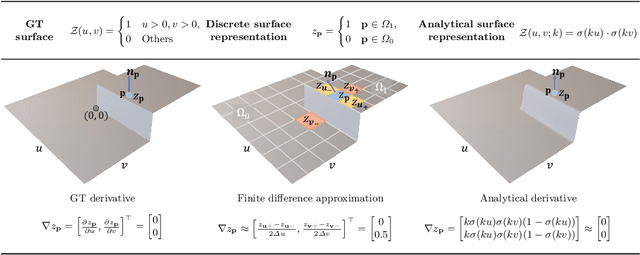

Edge-preserving Near-light Photometric Stereo with Neural Surfaces

Jul 11, 2022

Abstract:This paper presents a near-light photometric stereo method that faithfully preserves sharp depth edges in the 3D reconstruction. Unlike previous methods that rely on finite differentiation for approximating depth partial derivatives and surface normals, we introduce an analytically differentiable neural surface in near-light photometric stereo for avoiding differentiation errors at sharp depth edges, where the depth is represented as a neural function of the image coordinates. By further formulating the Lambertian albedo as a dependent variable resulting from the surface normal and depth, our method is insusceptible to inaccurate depth initialization. Experiments on both synthetic and real-world scenes demonstrate the effectiveness of our method for detailed shape recovery with edge preservation.

Lighting, Reflectance and Geometry Estimation from 360$^{\circ}$ Panoramic Stereo

Apr 20, 2021

Abstract:We propose a method for estimating high-definition spatially-varying lighting, reflectance, and geometry of a scene from 360$^{\circ}$ stereo images. Our model takes advantage of the 360$^{\circ}$ input to observe the entire scene with geometric detail, then jointly estimates the scene's properties with physical constraints. We first reconstruct a near-field environment light for predicting the lighting at any 3D location within the scene. Then we present a deep learning model that leverages the stereo information to infer the reflectance and surface normal. Lastly, we incorporate the physical constraints between lighting and geometry to refine the reflectance of the scene. Both quantitative and qualitative experiments show that our method, benefiting from the 360$^{\circ}$ observation of the scene, outperforms prior state-of-the-art methods and enables more augmented reality applications such as mirror-objects insertion.

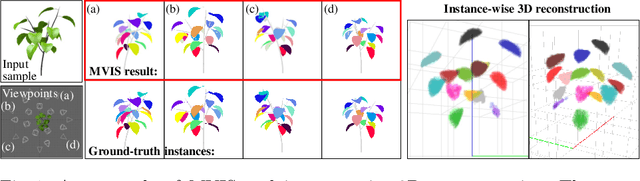

Descriptor-Free Multi-View Region Matching for Instance-Wise 3D Reconstruction

Nov 27, 2020

Abstract:This paper proposes a multi-view extension of instance segmentation without relying on texture or shape descriptor matching. Multi-view instance segmentation becomes challenging for scenes with repetitive textures and shapes, e.g., plant leaves, due to the difficulty of multi-view matching using texture or shape descriptors. To this end, we propose a multi-view region matching method based on epipolar geometry, which does not rely on any feature descriptors. We further show that the epipolar region matching can be easily integrated into instance segmentation and is effective for instance-wise 3D reconstruction. Experiments demonstrate the improved accuracy of multi-view instance matching and the 3D reconstruction compared to the baseline methods.

Deep Photometric Stereo for Non-Lambertian Surfaces

Jul 26, 2020

Abstract:This paper addresses the problem of photometric stereo, in both calibrated and uncalibrated scenarios, for non-Lambertian surfaces based on deep learning. We first introduce a fully convolutional deep network for calibrated photometric stereo, which we call PS-FCN. Unlike traditional approaches that adopt simplified reflectance models to make the problem tractable, our method directly learns the mapping from reflectance observations to surface normal, and is able to handle surfaces with general and unknown isotropic reflectance. At test time, PS-FCN takes an arbitrary number of images and their associated light directions as input and predicts a surface normal map of the scene in a fast feed-forward pass. To deal with the uncalibrated scenario where light directions are unknown, we introduce a new convolutional network, named LCNet, to estimate light directions from input images. The estimated light directions and the input images are then fed to PS-FCN to determine the surface normals. Our method does not require a pre-defined set of light directions and can handle multiple images in an order-agnostic manner. Thorough evaluation of our approach on both synthetic and real datasets shows that it outperforms state-of-the-art methods in both calibrated and uncalibrated scenarios.
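For context on the "simplified reflectance models" that the abstract contrasts with, here is a minimal sketch of classical calibrated photometric stereo under the Lambertian assumption, where each intensity is modelled as albedo times the dot product of light direction and normal. This is the traditional baseline, not PS-FCN or LCNet; the function names and the three-light setup are assumptions chosen to keep the example small.

```python
import math

def det3(m):
    """Determinant of a 3x3 matrix given as a list of three rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def lambertian_normal(lights, intensities):
    """Recover (unit normal, albedo) at one pixel from three lit images.

    Solves L g = I with Cramer's rule, where g = albedo * normal,
    each row of `lights` is a unit light direction, and `intensities`
    holds the matching pixel values.
    """
    d = det3(lights)
    if abs(d) < 1e-12:
        raise ValueError("light directions must be linearly independent")
    g = []
    for col in range(3):
        replaced = [row[:] for row in lights]
        for r in range(3):
            replaced[r][col] = intensities[r]
        g.append(det3(replaced) / d)
    albedo = math.sqrt(sum(v * v for v in g))
    if albedo == 0:
        raise ValueError("zero albedo: the normal is undefined")
    return [v / albedo for v in g], albedo

# Synthetic pixel: normal (0, 0, 1), albedo 0.5, three known lights.
lights = [[0.0, 0.0, 1.0], [0.6, 0.0, 0.8], [0.0, 0.6, 0.8]]
intensities = [0.5, 0.4, 0.4]
normal, albedo = lambertian_normal(lights, intensities)
print(normal, albedo)
```

This linear solve only holds when the surface is Lambertian and the light directions are known; specular highlights, shadows, or unknown lights violate those assumptions, which is the gap that learned approaches such as the one described above aim to address.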

Self-calibrating Deep Photometric Stereo Networks

Mar 18, 2019

Abstract:This paper proposes an uncalibrated photometric stereo method for non-Lambertian scenes based on deep learning. Unlike previous approaches that heavily rely on assumptions of specific reflectances and light source distributions, our method is able to determine both shape and light directions of a scene with unknown arbitrary reflectances observed under unknown varying light directions. To achieve this goal, we propose a two-stage deep learning architecture, called SDPS-Net, which can effectively take advantage of intermediate supervision, resulting in reduced learning difficulty compared to a single-stage model. Experiments on both synthetic and real datasets show that our proposed approach significantly outperforms previous uncalibrated photometric stereo methods.

Shape-conditioned Image Generation by Learning Latent Appearance Representation from Unpaired Data

Nov 29, 2018

Abstract:Conditional image generation is effective for diverse tasks including training data synthesis for learning-based computer vision. However, despite the recent advances in generative adversarial networks (GANs), it is still a challenging task to generate images with detailed conditioning on object shapes. Existing methods for conditional image generation use category labels and/or keypoints and give only limited control over object categories. In this work, we present SCGAN, an architecture to generate images with a desired shape specified by an input normal map. The shape-conditioned image generation task is achieved by explicitly modeling the image appearance via a latent appearance vector. The network is trained using unpaired training samples of real images and rendered normal maps. This approach enables us to generate images of arbitrary object categories with the target shape and diverse image appearances. We show the effectiveness of our method through both qualitative and quantitative evaluation on training data generation tasks.
