Xingshu Chen

Network Alignment

Apr 15, 2025

Abstract:Complex networks are frequently employed to model physical or virtual complex systems. When certain entities exist across multiple systems simultaneously, unveiling their corresponding relationships across the networks becomes crucial. This problem is known as network alignment. Solving it enhances our understanding of complex system structures and behaviours, facilitates the validation and extension of theoretical physics research on complex systems, and fosters diverse practical applications across various fields. However, due to variations in the structure, characteristics, and properties of complex networks across different fields, the study of network alignment is often isolated within each domain, with even the terminologies and concepts lacking uniformity. This review comprehensively summarizes the latest advancements in network alignment research, focusing on analyzing network alignment characteristics and progress in various domains such as social network analysis, bioinformatics, computational linguistics and privacy protection. It provides a detailed analysis of various methods' implementation principles, processes, and performance differences, including structure consistency-based methods, network embedding-based methods, and graph neural network-based (GNN-based) methods. Additionally, the methods for network alignment under different conditions, such as in attributed networks, heterogeneous networks, directed networks, and dynamic networks, are presented. Furthermore, the challenges and the open issues for future studies are also discussed.
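
To make the idea of structure consistency-based alignment concrete, here is a minimal sketch. It is not a method taken from the review: it assumes two small undirected networks given as adjacency sets, a few known seed pairs, and a hypothetical helper `align_by_matched_neighbors` that greedily pairs the nodes sharing the most already-aligned neighbors.

```python
def align_by_matched_neighbors(adj_a, adj_b, seeds):
    """Greedily extend a seed alignment between two networks.

    adj_a, adj_b: dict mapping each node to the set of its neighbors.
    seeds: dict of known correspondences (node in A -> node in B).
    A candidate pair (u, v) is scored by how many neighbors of u are
    already aligned to neighbors of v (illustrative scoring rule).
    """
    matched = dict(seeds)
    used_b = set(matched.values())
    while True:
        best, best_score = None, 0
        for u in sorted(adj_a):
            if u in matched:
                continue
            for v in sorted(adj_b):
                if v in used_b:
                    continue
                score = sum(1 for n in adj_a[u] if matched.get(n) in adj_b[v])
                if score > best_score:
                    best, best_score = (u, v), score
        if best is None:
            break  # no remaining pair has any aligned neighbor in common
        matched[best[0]] = best[1]
        used_b.add(best[1])
    return matched


# Two toy networks with the same shape; node names are made up.
net_a = {"a1": {"a2", "a3"}, "a2": {"a1", "a3"},
         "a3": {"a1", "a2", "a4"}, "a4": {"a3"}}
net_b = {"b1": {"b2", "b3"}, "b2": {"b1", "b3"},
         "b3": {"b1", "b2", "b4"}, "b4": {"b3"}}
alignment = align_by_matched_neighbors(net_a, net_b, {"a1": "b1", "a2": "b2"})
print(alignment)
```

Starting from the two seed pairs, the sketch first aligns `a3` with `b3` (two aligned neighbors in common) and then `a4` with `b4`. Embedding-based and GNN-based methods replace this hand-written score with learned node representations.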

Prompt Packer: Deceiving LLMs through Compositional Instruction with Hidden Attacks

Oct 16, 2023

Abstract:Recently, large language models (LLMs) with powerful general capabilities have been increasingly integrated into various Web applications, while undergoing alignment training to ensure that the generated content aligns with user intent and ethics. Unfortunately, they remain at risk of generating harmful content, such as hate speech or content about criminal activities, in practical applications. Current approaches primarily rely on detecting, collecting, and training against harmful prompts to prevent such risks. However, these approaches typically focus on "superficial" harmful prompts with a solitary intent, ignoring composite attack instructions with multiple intentions that can easily elicit harmful content in real-world scenarios. In this paper, we introduce an innovative technique for obfuscating harmful instructions: Compositional Instruction Attacks (CIA), which refers to attacking by combination and encapsulation of multiple instructions. CIA hides harmful prompts within instructions of harmless intentions, making it impossible for the model to identify underlying malicious intentions. Furthermore, we implement two transformation methods, known as T-CIA and W-CIA, to automatically disguise harmful instructions as talking or writing tasks, making them appear harmless to LLMs. We evaluated CIA on GPT-4, ChatGPT, and ChatGLM2 with two safety assessment datasets and two harmful prompt datasets. It achieves an attack success rate of 95%+ on safety assessment datasets, and 83%+ for GPT-4, 91%+ for ChatGPT (gpt-3.5-turbo backed) and ChatGLM2-6B on harmful prompt datasets. Our approach reveals the vulnerability of LLMs to such compositional instruction attacks that harbor underlying harmful intentions, contributing significantly to LLM security development. Warning: this paper may contain offensive or upsetting content!

RAUCG: Retrieval-Augmented Unsupervised Counter Narrative Generation for Hate Speech

Oct 09, 2023

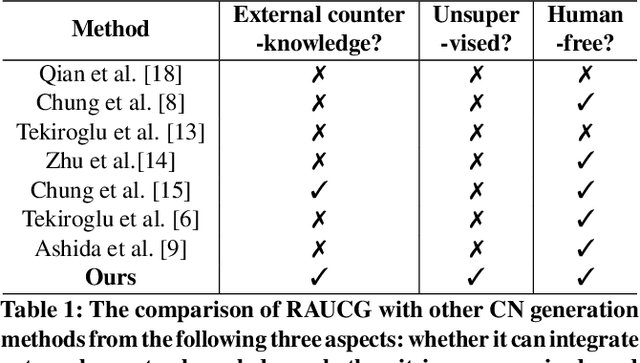

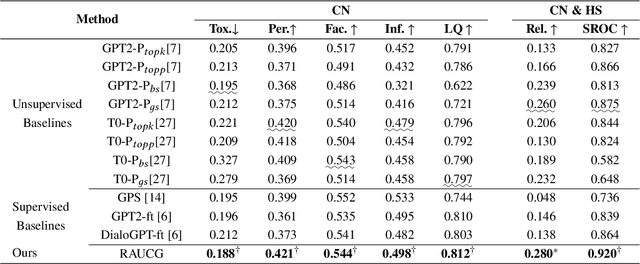

Abstract:The Counter Narrative (CN) is a promising approach to combat online hate speech (HS) without infringing on freedom of speech. In recent years, there has been a growing interest in automatically generating CNs using natural language generation techniques. However, current automatic CN generation methods mainly rely on expert-authored datasets for training, which are time-consuming and labor-intensive to acquire. Furthermore, these methods cannot directly obtain and extend counter-knowledge from external statistics, facts, or examples. To address these limitations, we propose Retrieval-Augmented Unsupervised Counter Narrative Generation (RAUCG) to automatically expand external counter-knowledge and map it into CNs in an unsupervised paradigm. Specifically, we first introduce an SSF retrieval method to retrieve counter-knowledge from the multiple perspectives of stance consistency, semantic overlap rate, and fitness for HS. Then we design an energy-based decoding mechanism by quantizing knowledge injection, countering and fluency constraints into differentiable functions, to enable the model to build mappings from counter-knowledge to CNs without expert-authored CN data. Lastly, we comprehensively evaluate model performance in terms of language quality, toxicity, persuasiveness, relevance, and success rate of countering HS, etc. Experimental results show that RAUCG outperforms strong baselines on all metrics and exhibits stronger generalization capabilities, achieving significant improvements of +2.0% in relevance and +4.5% in success rate of countering metrics. Moreover, RAUCG enabled GPT2 to outperform T0 in all metrics, despite the latter being approximately eight times larger than the former. Warning: This paper may contain offensive or upsetting content!

ClueGraphSum: Let Key Clues Guide the Cross-Lingual Abstractive Summarization

Mar 09, 2022

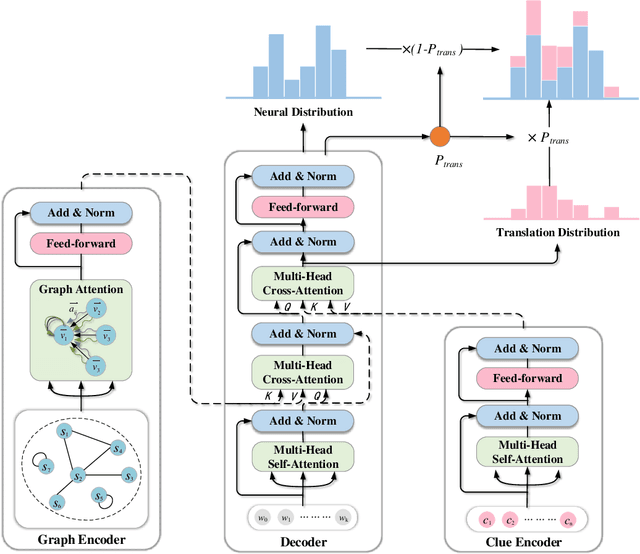

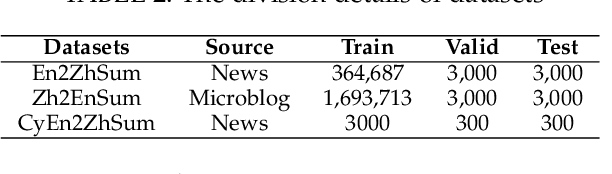

Abstract:Cross-Lingual Summarization (CLS) is the task of generating a summary in one language for an article in a different language. Previous studies on CLS mainly take pipeline methods or train the end-to-end model using the translated parallel data. However, the quality of generated cross-lingual summaries still needs improvement, and model performance has never been evaluated on a hand-written CLS dataset. Therefore, we first propose a clue-guided cross-lingual abstractive summarization method to improve the quality of cross-lingual summaries, and then construct a novel hand-written CLS dataset for evaluation. Specifically, we extract keywords, named entities, etc. of the input article as key clues for summarization and then design a clue-guided algorithm to transform an article into a graph with fewer noisy sentences. One Graph encoder is built to learn sentence semantics and article structures and one Clue encoder is built to encode and translate key clues, ensuring that the information from important parts is preserved in the generated summary. These two encoders are connected by one decoder to directly learn cross-lingual semantics. Experimental results show that our method has stronger robustness for longer inputs and substantially improves the performance over the strong baseline, achieving an improvement of 8.55 ROUGE-1 (English-to-Chinese summarization) and 2.13 MoverScore (Chinese-to-English summarization) scores over the existing SOTA.
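
For readers unfamiliar with the ROUGE-1 metric quoted above, the sketch below computes a unigram-overlap F1 score between a candidate summary and a reference. It is a simplified illustration, not the evaluation script used in the paper: the function name `rouge1_f1` is made up, tokenization is plain whitespace splitting (which would not be adequate for Chinese text), and the score is on a 0 to 1 scale, whereas reported ROUGE figures are typically multiplied by 100.

```python
from collections import Counter


def rouge1_f1(candidate, reference):
    """Unigram-overlap F1 between two whitespace-tokenized strings."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Clipped overlap: each word counts at most as often as it
    # appears in both the candidate and the reference.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


# Five of the six unigrams overlap, so precision = recall = 5/6.
score = rouge1_f1("the cat sat on the mat", "the cat lay on the mat")
print(round(score, 4))
```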

Detecting Offensive Language on Social Networks: An End-to-end Detection Method based on Graph Attention Networks

Mar 04, 2022

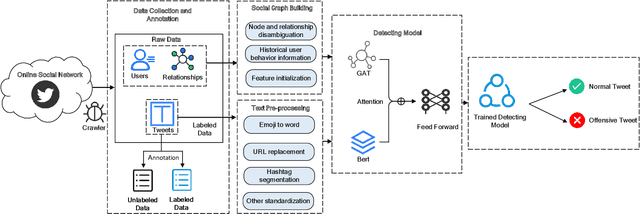

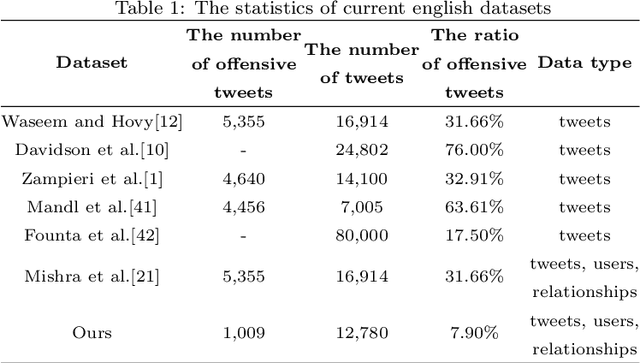

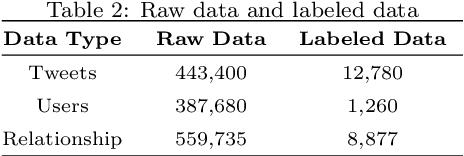

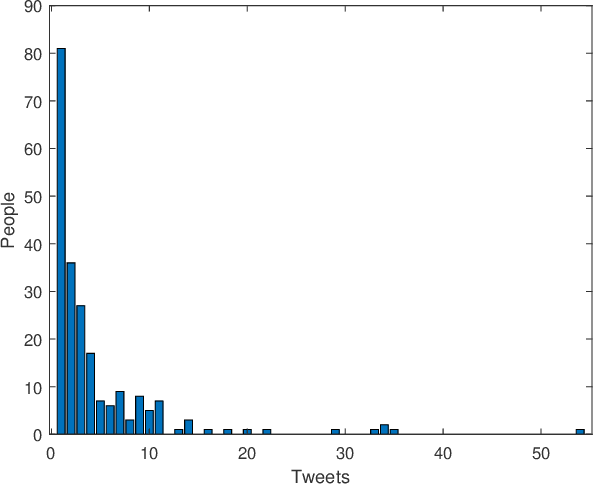

Abstract:The pervasiveness of offensive language on social networks has caused adverse effects on society, such as abusive behavior online. It is urgent to detect offensive language and curb its spread. Existing research shows that methods with community structure features effectively improve the performance of offensive language detection. However, the existing models deal with community structure independently, which seriously affects the effectiveness of detection models. In this paper, we propose an end-to-end method based on community structure and text features for offensive language detection (CT-OLD). Specifically, the community structure features are directly captured by the graph attention network layer, and the text embeddings are taken from the last hidden layer of BERT. Attention mechanisms and position encoding are used to fuse these features. Meanwhile, we add user opinion to the community structure for representing user features. The user opinion is represented by user historical behavior information, which outperforms that represented by text information. In addition, the distribution of users and tweets is unbalanced in the popular datasets, which limits the generalization ability of the model. To address this issue, we construct and release a dataset with reasonable user distribution. Our method outperforms baselines with an F1 score of 89.94%. The results show that the end-to-end model effectively learns the potential information of community structure and text, and user historical behavior information is more suitable for user opinion representation.

Analyzing Adversarial Robustness of Deep Neural Networks in Pixel Space: a Semantic Perspective

Jun 18, 2021

Abstract:The vulnerability of deep neural networks to adversarial examples, which are crafted maliciously by modifying the inputs with imperceptible perturbations to mislead the network into producing incorrect outputs, reveals the lack of robustness and poses security concerns. Previous works study the adversarial robustness of image classifiers at the image level and use all the pixel information in an image indiscriminately, without exploring regions with different semantic meanings in the pixel space of an image. In this work, we fill this gap and explore the pixel space of the adversarial image by proposing an algorithm to look for possible perturbations pixel by pixel in different regions of the segmented image. The extensive experimental results on CIFAR-10 and ImageNet verify that searching for the modified pixel in only some pixels of an image can successfully launch the one-pixel adversarial attacks without requiring all the pixels of the entire image, and there exist multiple vulnerable points scattered in different regions of an image. We also demonstrate that the adversarial robustness of different regions on the image varies with the amount of semantic information contained.

An End-to-End Attack on Text-based CAPTCHAs Based on Cycle-Consistent Generative Adversarial Network

Aug 26, 2020

Abstract:As a widely deployed security scheme, text-based CAPTCHAs have found it increasingly difficult to resist machine learning-based attacks. So far, many researchers have conducted attacking research on text-based CAPTCHAs deployed by different companies (such as Microsoft, Amazon, and Apple) and achieved certain results. However, most of these attacks have some shortcomings, such as poor portability of attack methods, requiring a series of data preprocessing steps, and relying on large amounts of labeled CAPTCHAs. In this paper, we propose an efficient and simple end-to-end attack method based on cycle-consistent generative adversarial networks. Compared with previous studies, our method greatly reduces the cost of data labeling. In addition, this method has high portability. It can attack common text-based CAPTCHA schemes only by modifying a few configuration parameters, which makes the attack easier. Firstly, we train CAPTCHA synthesizers based on the cycle-GAN to generate some fake samples. Basic recognizers based on the convolutional recurrent neural network are trained with the fake data. Subsequently, an active transfer learning method is employed to optimize the basic recognizer utilizing tiny amounts of labeled real-world CAPTCHA samples. Our approach efficiently cracked the CAPTCHA schemes deployed by 10 popular websites, indicating that our attack is likely very general. Additionally, we analyzed the current most popular anti-recognition mechanisms. The results show that the combination of more anti-recognition mechanisms can improve the security of CAPTCHA, but the improvement is limited. Conversely, generating more complex CAPTCHAs may cost more resources and reduce the availability of CAPTCHAs.

Improving adversarial robustness of deep neural networks by using semantic information

Aug 18, 2020

Abstract:The vulnerability of deep neural networks (DNNs) to adversarial attack, which is an attack that can mislead state-of-the-art classifiers into making an incorrect classification with high confidence by deliberately perturbing the original inputs, raises concerns about the robustness of DNNs to such attacks. Adversarial training, which is the main heuristic method for improving adversarial robustness and the first line of defense against adversarial attacks, requires many sample-by-sample calculations to increase training size and is usually insufficiently strong for an entire network. This paper provides a new perspective on the issue of adversarial robustness, one that shifts the focus from the network as a whole to the critical part of the region close to the decision boundary corresponding to a given class. From this perspective, we propose a method to generate a single but image-agnostic adversarial perturbation that carries the semantic information implying the directions to the fragile parts on the decision boundary and causes inputs to be misclassified as a specified target. We call the adversarial training based on such perturbations "region adversarial training" (RAT), which resembles classical adversarial training but is distinguished in that it reinforces the semantic information missing in the relevant regions. Experimental results on the MNIST and CIFAR-10 datasets show that this approach greatly improves adversarial robustness even using a very small dataset from the training data; moreover, it can defend against FGSM adversarial attacks that have a completely different pattern from the model seen during retraining.
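
The FGSM attack mentioned above perturbs an input by a small step in the direction of the sign of the loss gradient: x_adv = x + eps * sign(grad_x L). The sketch below illustrates that update on a tiny logistic-regression classifier rather than a deep network. It is not the paper's region adversarial training (RAT) method, and the weights, input, and step size are made-up values chosen for illustration.

```python
import math


def predict_prob(x, w, b):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(x, y, w, b, eps):
    """One FGSM step: x + eps * sign(gradient of the loss w.r.t. x).

    For binary cross-entropy with a logistic model, the gradient of
    the loss with respect to the input is (p - y) * w.
    """
    p = predict_prob(x, w, b)
    grad = [(p - y) * wi for wi in w]
    signs = [(g > 0) - (g < 0) for g in grad]
    return [xi + eps * s for xi, s in zip(x, signs)]


# Hypothetical model and input, with true label y = 1.
w, b = [2.0, -1.0], 0.0
x, y = [0.5, 0.2], 1
x_adv = fgsm(x, y, w, b, eps=0.3)

p_clean = predict_prob(x, w, b)
p_adv = predict_prob(x_adv, w, b)
print(p_clean > 0.5, p_adv > 0.5)
```

In this toy setting the clean input is classified as class 1, while the perturbed input falls on the other side of the decision boundary. Adversarial training, in its classical form, adds such perturbed inputs with their correct labels back into the training set.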
