Xiaotian Wang

Exploring the Performance Improvement of Tensor Processing Engines through Transformation in the Bit-weight Dimension of MACs

Mar 08, 2025

Abstract:General matrix-matrix multiplication (GEMM) is a cornerstone of AI computations, making tensor processing engines (TPEs) increasingly critical in GPUs and domain-specific architectures. Existing architectures primarily optimize dataflow or operand reuse strategies. However, considering the interaction between matrix multiplication and multiply-accumulators (MACs) offers greater optimization potential. This work introduces a novel hardware perspective on matrix multiplication, focusing on the bit-weight dimension of MACs. We propose a finer-grained TPE notation using matrix triple loops as an example, introducing new methods for designing and optimizing PE microarchitectures. Based on this notation and its transformations, we propose four optimization techniques that improve timing, area, and power consumption. Implementing our design in RTL using the SMIC-28nm process, we evaluate its effectiveness across four classic TPE architectures: systolic array, 3D-Cube, multiplier-adder tree, and 2D-Matrix. Our techniques achieve area efficiency improvements of 1.27x, 1.28x, 1.56x, and 1.44x, and energy efficiency gains of 1.04x, 1.56x, 1.49x, and 1.20x, respectively. Applied to a bit-slice architecture, our approach achieves a 12.10x improvement in energy efficiency and 2.85x in area efficiency compared to Laconic. Our Verilog HDL code, along with timing, area, and power reports, is available at https://github.com/wqzustc/High-Performance-Tensor-Processing-Engines

Spatio-Temporal Progressive Attention Model for EEG Classification in Rapid Serial Visual Presentation Task

Feb 02, 2025

Abstract:As a type of multi-dimensional sequential data, the spatial and temporal dependencies of electroencephalogram (EEG) signals should be further investigated. Thus, in this paper, we propose a novel spatial-temporal progressive attention model (STPAM) to improve EEG classification in rapid serial visual presentation (RSVP) tasks. STPAM first adopts three distinct spatial experts to learn the spatial topological information of brain regions progressively, which is used to minimize the interference of irrelevant brain regions. Concretely, the former expert filters out EEG electrodes in the relative brain regions to be used as prior knowledge for the next expert, ensuring that the subsequent experts gradually focus their attention on information from significant EEG electrodes. This process strengthens the effect of the important brain regions. Then, based on the above-obtained feature sequence with spatial information, three temporal experts are adopted to capture the temporal dependence by progressively assigning attention to the crucial EEG slices. In addition to the above EEG classification method, we build a novel Infrared RSVP EEG Dataset (IRED), the first to be based on dim infrared images with small targets, and conduct extensive experiments on it. The results show that our STPAM can achieve better performance than all the compared methods.

Adaptive Progressive Attention Graph Neural Network for EEG Emotion Recognition

Jan 24, 2025

Abstract:In recent years, numerous neuroscientific studies have shown that human emotions are closely linked to specific brain regions, with these regions exhibiting variability across individuals and emotional states. To fully leverage these neural patterns, we propose an Adaptive Progressive Attention Graph Neural Network (APAGNN), which dynamically captures the spatial relationships among brain regions during emotional processing. The APAGNN employs three specialized experts that progressively analyze brain topology. The first expert captures global brain patterns, the second focuses on region-specific features, and the third examines emotion-related channels. This hierarchical approach enables increasingly refined analysis of neural activity. Additionally, a weight generator integrates the outputs of all three experts, balancing their contributions to produce the final predictive label. Extensive experiments on three publicly available datasets (SEED, SEED-IV and MPED) demonstrate that the proposed method enhances EEG emotion recognition performance, achieving superior results compared to baseline methods.

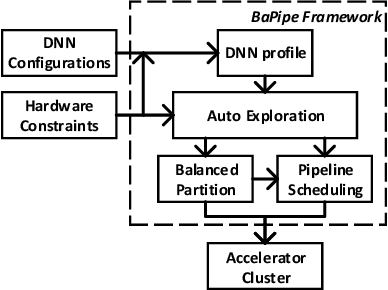

BaPipe: Exploration of Balanced Pipeline Parallelism for DNN Training

Jan 14, 2021

Abstract:The size of deep neural networks (DNNs) grows rapidly as the complexity of the machine learning algorithm increases. To satisfy the requirement of computation and memory of DNN training, distributed deep learning based on model parallelism has been widely recognized. We propose a new pipeline parallelism training framework, BaPipe, which can automatically explore pipeline parallelism training methods and balanced partition strategies for DNN distributed training. In BaPipe, each accelerator calculates the forward propagation and backward propagation of different parts of networks to implement the intra-batch pipeline parallelism strategy. BaPipe uses a new load balancing automatic exploration strategy that considers the parameters of DNN models and the computation, memory, and communication resources of accelerator clusters. We have trained different DNNs such as VGG-16, ResNet-50, and GNMT on GPU clusters and simulated the performance of different FPGA clusters. Compared with state-of-the-art data parallelism and pipeline parallelism frameworks, BaPipe provides up to 3.2x speedup and 4x memory reduction in various platforms.

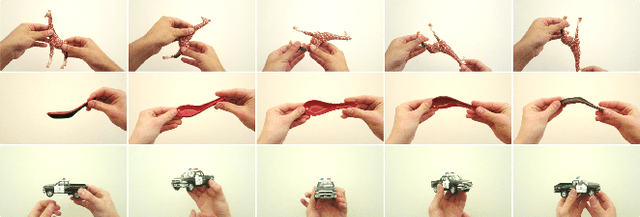

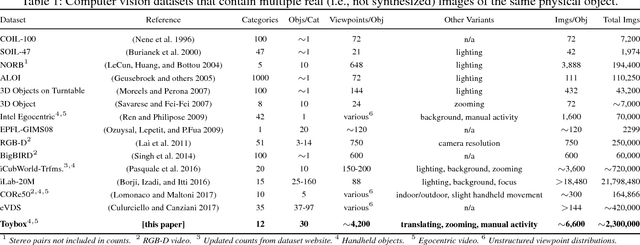

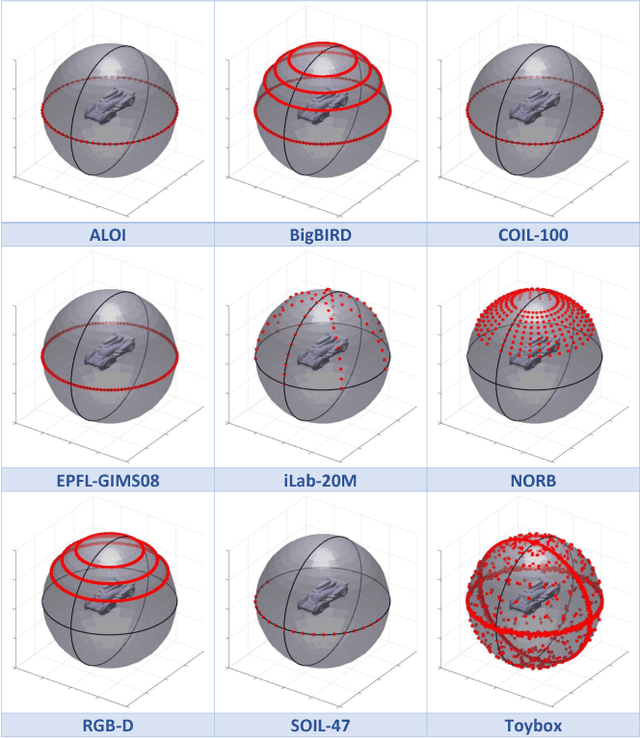

Seeing Neural Networks Through a Box of Toys: The Toybox Dataset of Visual Object Transformations

Jul 31, 2018

Abstract:Deep convolutional neural networks (CNNs) have enjoyed tremendous success in computer vision in the past several years, particularly for visual object recognition. However, how CNNs work remains poorly understood, and the training of deep CNNs is still considered more art than science. To better characterize deep CNNs and the training process, we introduce a new video dataset called Toybox. Images in Toybox come from first-person, wearable camera recordings of common household objects and toys being manually manipulated to undergo structured transformations like rotations and translations. We also present results from initial experiments using deep CNNs that begin to examine how different distributions of training data can affect visual object recognition performance, and how visual object concepts are represented within a trained network.
