Xiaohu Wu

Voronoi-grid-based Pareto Front Learning and Its Application to Collaborative Federated Learning

May 27, 2025

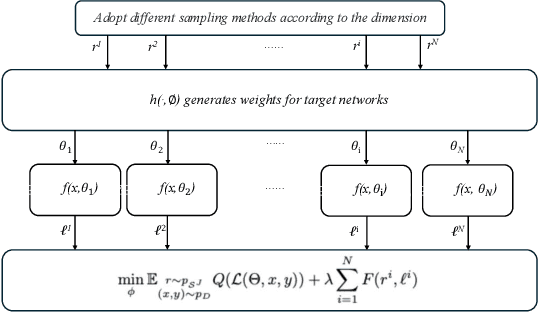

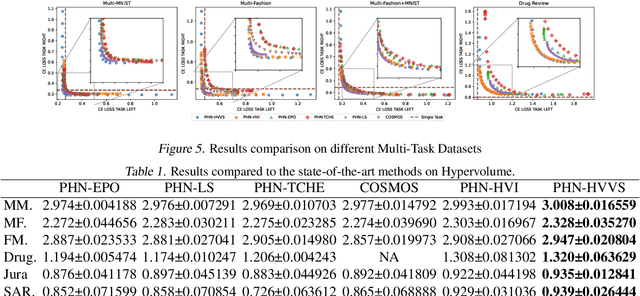

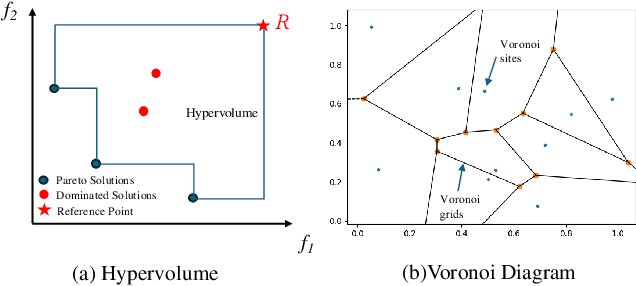

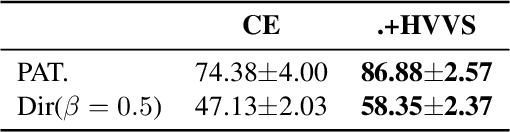

Abstract: Multi-objective optimization (MOO) is widespread in machine learning and aims to find a set of Pareto-optimal solutions, called the Pareto front; for example, it is fundamental to multiple avenues of research in federated learning (FL). Pareto-Front Learning (PFL) is a powerful method, implemented using Pareto hypernetworks (PHNs), for approximating the Pareto front. It learns a mapping from a given preference vector to the solutions on the Pareto front. However, most existing PFL approaches still face two challenges: (a) sampling rays in high-dimensional spaces; (b) failing to cover the entire Pareto front when it has a convex shape. Here, we introduce a novel PFL framework, called PHN-HVVS, which decomposes the design space into Voronoi grids and deploys a genetic algorithm (GA) for Voronoi grid partitioning in high-dimensional space. We put forward a new loss function that yields more extensive coverage of the resulting Pareto front and maximizes the hypervolume (HV) indicator. Experimental results on multiple MOO machine learning tasks demonstrate that PHN-HVVS significantly outperforms the baselines in generating the Pareto front. We also illustrate that PHN-HVVS advances the methodologies of several recent problems in the FL field. The code is available at https://github.com/buptcmm/phnhvvs.
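For readers unfamiliar with the HV indicator mentioned above, the following is a minimal sketch of how it can be computed for two objectives. It assumes both objectives are minimized and that a reference point `ref` is supplied by the user; the function name `hypervolume_2d` is hypothetical and this is not the PHN-HVVS implementation, only an illustration of the quantity being maximized.

```python
def hypervolume_2d(points, ref):
    """Area dominated by `points` and bounded by `ref` (both objectives minimized).

    Illustrative helper only; names and the 2-D restriction are assumptions.
    """
    # Keep only points that are strictly better than the reference point
    # in both objectives, sorted by the first objective.
    pts = sorted(p for p in points if p[0] < ref[0] and p[1] < ref[1])
    area = 0.0
    best_f2 = ref[1]  # lowest second-objective value seen so far
    for f1, f2 in pts:
        if f2 < best_f2:  # point is non-dominated among those seen so far
            # Add the new horizontal strip this point contributes.
            area += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return area


front = [(1, 3), (2, 2), (3, 1)]
print(hypervolume_2d(front, ref=(4, 4)))
```

A front that covers more of the objective space yields a larger value, which is why a loss that rewards hypervolume also tends to encourage broader coverage.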

Personalized Federated Learning via Learning Dynamic Graphs

Mar 07, 2025

Abstract: Personalized Federated Learning (PFL) aims to train a personalized model for each client that is tailored to its local data distribution, because conventional federated learning fails to perform well on individual clients due to variations in their local data distributions. Most existing PFL methods focus on personalizing the aggregated global model for each client, neglecting the fundamental aspect of federated learning: the regulation of how client models are aggregated. Additionally, almost all of them overlook the graph structure formed by clients in federated learning. In this paper, we propose a novel method, Personalized Federated Learning with Graph Attention Network (pFedGAT), which captures the latent graph structure between clients and dynamically determines the importance of other clients for each client, enabling fine-grained control over the aggregation process. We evaluate pFedGAT across multiple data distribution scenarios, comparing it with twelve state-of-the-art methods on three datasets (Fashion MNIST, CIFAR-10, and CIFAR-100), and find that it consistently performs well.

Free-Rider and Conflict Aware Collaboration Formation for Cross-Silo Federated Learning

Oct 28, 2024

Abstract: Federated learning (FL) is a machine learning paradigm that allows multiple FL participants (FL-PTs) to collaborate on training models without sharing private data. Due to data heterogeneity, negative transfer may occur in the FL training process. This necessitates FL-PT selection based on their data complementarity. In cross-silo FL, organizations that engage in business activities are key sources of FL-PTs. The resulting FL ecosystem has two features: (i) self-interest, and (ii) competition among FL-PTs. This requires the FL-PT selection strategy to simultaneously mitigate the problems of free riders and conflicts of interest among competitors. To this end, we propose an optimal FL collaboration formation strategy, FedEgoists, which ensures that: (1) an FL-PT can benefit from FL if and only if it benefits the FL ecosystem, and (2) an FL-PT will not contribute to its competitors or their supporters. It provides an efficient clustering solution to group FL-PTs into coalitions, ensuring that within each coalition, FL-PTs share the same interest. We theoretically prove that the FL-PT coalitions formed are optimal, since no coalitions can collaborate to improve the utility of any of their members. Extensive experiments on widely adopted benchmark datasets demonstrate the effectiveness of FedEgoists compared to nine state-of-the-art baseline methods, and its ability to establish efficient collaborative networks in cross-silo FL with FL-PTs that engage in business activities.

Benchmarking Data Heterogeneity Evaluation Approaches for Personalized Federated Learning

Oct 09, 2024

Abstract: There is growing research interest in measuring the statistical heterogeneity of clients' local datasets. Such measurements are used to estimate the suitability for collaborative training of personalized federated learning (PFL) models. Currently, these research endeavors are taking place in silos, and there is no unified benchmark that provides a fair and convenient comparison among the various approaches in common settings. We aim to bridge this important gap in this paper. The proposed benchmarking framework currently includes six representative approaches. Extensive experiments have been conducted to compare these approaches under five standard non-IID FL settings, providing much-needed insights into which approaches are advantageous under which settings. The proposed framework offers useful guidance on the suitability of various data divergence measures in FL systems. It is beneficial for keeping related research activities on the right track in terms of: (1) designing PFL schemes, (2) selecting appropriate data heterogeneity evaluation approaches for specific FL application scenarios, and (3) addressing fairness issues in collaborative model training. The code is available at https://github.com/Xiaoni-61/DH-Benchmark.

MLAE: Masked LoRA Experts for Parameter-Efficient Fine-Tuning

May 29, 2024

Abstract: In response to the challenges posed by the extensive parameter updates required for full fine-tuning of large-scale pre-trained models, parameter-efficient fine-tuning (PEFT) methods, exemplified by Low-Rank Adaptation (LoRA), have emerged. LoRA simplifies the fine-tuning process but may still struggle with a certain level of redundancy in low-rank matrices and with the limited effectiveness of merely increasing their rank. To address these issues, a natural idea is to enhance the independence and diversity of the learning process for the low-rank matrices. Therefore, we propose Masked LoRA Experts (MLAE), an innovative approach that applies the concept of masking to PEFT. Our method incorporates a cellular decomposition strategy that transforms a low-rank matrix into independent rank-1 submatrices, or "experts", thus enhancing independence. Additionally, we introduce a binary mask matrix that selectively activates these experts during training to promote more diverse and anisotropic learning, based on expert-level dropout strategies. Our investigations reveal that this selective activation not only enhances performance but also fosters a more diverse acquisition of knowledge, with a marked decrease in parameter similarity among MLAE, significantly boosting the quality of the model while barely increasing the parameter count. Remarkably, MLAE achieves new SOTA performance with an average accuracy score of 78.8% on the VTAB-1k benchmark and 90.9% on the FGVC benchmark. Our code is available at https://github.com/jie040109/MLAE.
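The decomposition described above can be illustrated with a small sketch: a low-rank update is written as a sum of rank-1 "experts" (one column of one factor times one row of the other), and a binary mask decides which experts are active. This is an illustration under stated assumptions, not the MLAE code: the function names, the plain-list matrix representation, and the independent per-expert dropout probability `drop_prob` are all hypothetical choices made here.

```python
import random


def outer(u, v):
    """Outer product of two vectors, i.e. a rank-1 matrix."""
    return [[ui * vj for vj in v] for ui in u]


def masked_rank1_sum(b_cols, a_rows, mask):
    """Sum the rank-1 experts b_i a_i^T whose mask entry is 1.

    b_cols: list of r column vectors (each of length d_out)
    a_rows: list of r row vectors (each of length d_in)
    mask:   list of r entries in {0, 1}
    """
    d_out, d_in = len(b_cols[0]), len(a_rows[0])
    delta = [[0.0] * d_in for _ in range(d_out)]
    for b, a, m in zip(b_cols, a_rows, mask):
        if m:  # expert is active
            expert = outer(b, a)
            for i in range(d_out):
                for j in range(d_in):
                    delta[i][j] += expert[i][j]
    return delta


def sample_mask(rank, drop_prob, rng):
    """Assumed masking scheme: drop each expert independently with drop_prob."""
    return [0 if rng.random() < drop_prob else 1 for _ in range(rank)]


b_cols = [[1.0, 0.0], [0.0, 1.0]]            # two experts, d_out = 2
a_rows = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]  # two experts, d_in = 3
mask = sample_mask(rank=2, drop_prob=0.5, rng=random.Random(0))
print(mask, masked_rank1_sum(b_cols, a_rows, mask))
```

With an all-ones mask the sum reduces to the ordinary product of the two low-rank factors, so masking only changes which experts receive a training signal at a given step.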

FedCompetitors: Harmonious Collaboration in Federated Learning with Competing Participants

Dec 18, 2023

Abstract: Federated learning (FL) provides a privacy-preserving approach for collaborative training of machine learning models. Given the potential data heterogeneity, it is crucial to select appropriate collaborators for each FL participant (FL-PT) based on data complementarity. Recent studies have addressed this challenge. Similarly, it is imperative to consider the inter-individual relationships among FL-PTs where some FL-PTs engage in competition. Although FL literature has acknowledged the significance of this scenario, practical methods for establishing FL ecosystems remain largely unexplored. In this paper, we extend a principle from the balance theory, namely "the friend of my enemy is my enemy", to ensure the absence of conflicting interests within an FL ecosystem. The extended principle and the resulting problem are formulated via graph theory and integer linear programming. A polynomial-time algorithm is proposed to determine the collaborators of each FL-PT. The solution guarantees high scalability, allowing even competing FL-PTs to smoothly join the ecosystem without conflict of interest. The proposed framework jointly considers competition and data heterogeneity. Extensive experiments on real-world and synthetic data demonstrate its efficacy compared to five alternative approaches, and its ability to establish efficient collaboration networks among FL-PTs.
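As a rough illustration of the principle quoted above, the sketch below flags cases where a participant collaborates either with a competitor or with a direct collaborator of a competitor. It is a simplified one-hop reading with undirected collaboration edges, which are assumptions made here; the paper's extended principle and its graph and integer-programming formulation may differ, and the function name `find_conflicts` is hypothetical.

```python
def find_conflicts(collaborations, competitions):
    """Return sorted (participant, competitor, shared_collaborator) triples.

    collaborations, competitions: iterables of (a, b) pairs, treated as
    undirected here (a simplifying assumption).
    """
    friends = {}
    for a, b in collaborations:
        friends.setdefault(a, set()).add(b)
        friends.setdefault(b, set()).add(a)

    conflicts = []
    for a, b in competitions:
        # Check the pair from both participants' points of view.
        for u, enemy in ((a, b), (b, a)):
            # Direct conflict: u collaborates with its competitor.
            if enemy in friends.get(u, set()):
                conflicts.append((u, enemy, enemy))
            # One-hop conflict: u collaborates with a friend of its competitor.
            for f in friends.get(enemy, set()):
                if f != u and f in friends.get(u, set()):
                    conflicts.append((u, enemy, f))
    return sorted(conflicts)


# A and B compete, and both collaborate with C, so C is a "friend of my enemy"
# for each of them.
print(find_conflicts([("A", "C"), ("B", "C")], [("A", "B")]))
```

An empty result means that, under this simplified reading, no participant is helping a competitor directly or through a shared collaborator.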

MarS-FL: A Market Share-based Decision Support Framework for Participation in Federated Learning

Oct 26, 2021

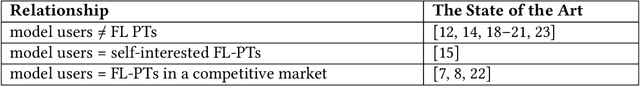

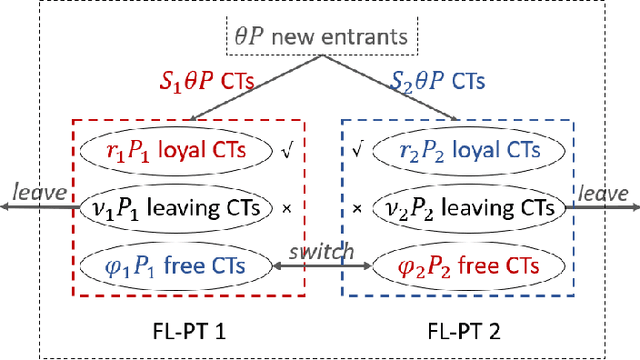

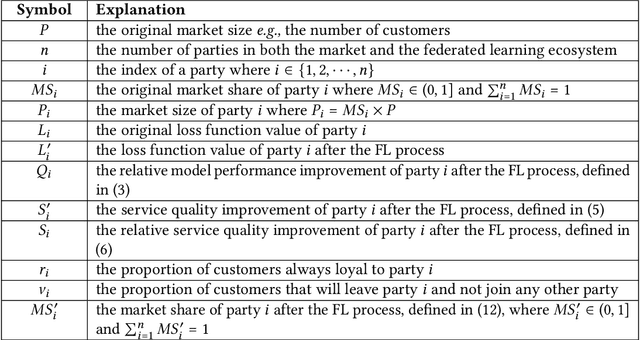

Abstract: Federated learning (FL) enables multiple participants (PTs) to build an aggregate and more powerful learning model without sharing data, thus maintaining data privacy and security. Among the key application scenarios is a competitive market where market shares represent PTs' competitiveness. Understanding how FL affects the evolution of market shares is key to advancing the adoption of FL by PTs. In terms of modeling, we adapt a general economic model to the FL context and introduce two notions, the $\delta$-stable market and friendliness, to measure the viability of FL and the market's acceptability of FL. Further, we address related decision-making issues for the FL designer and the PTs. First, we characterize the process by which each PT participates in FL as a non-cooperative game and prove its dominant strategy. Second, from the FL designer's perspective, the final model performance improvement of each PT should be bounded, which relates to the market conditions of a particular FL application scenario; we give a necessary and sufficient condition $Q$ to maintain the market's $\delta$-stability and quantify the friendliness $\kappa$. The condition $Q$ gives a specific requirement that an FL designer must satisfy when allocating performance improvements among PTs. In a typical case of oligopoly, closed-form expressions of $Q$ and $\kappa$ are given. Finally, numerical results are given to show the viability of FL in a wide range of market conditions. Our results help identify optimal PT strategies, the viable operational space of an FL designer, and the market conditions under which FL is especially beneficial.
