Xavier Costa-Perez

Energy-aware Joint Orchestration of 5G and Robots: Experimental Testbed and Field Validation

Mar 25, 2025

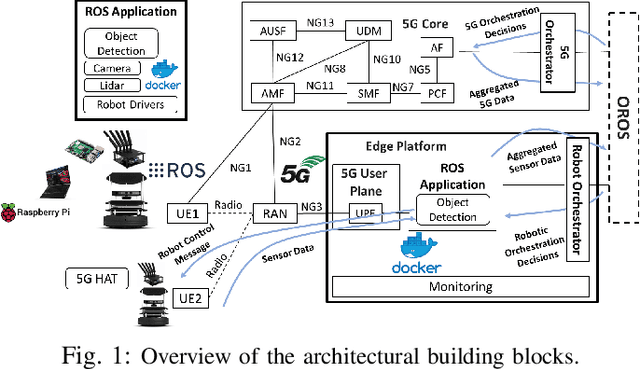

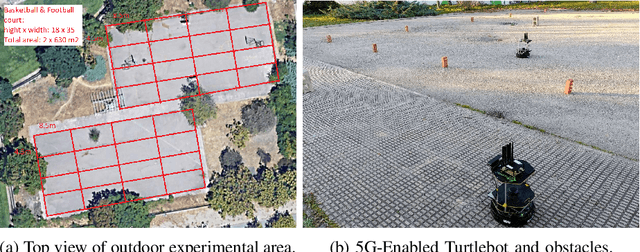

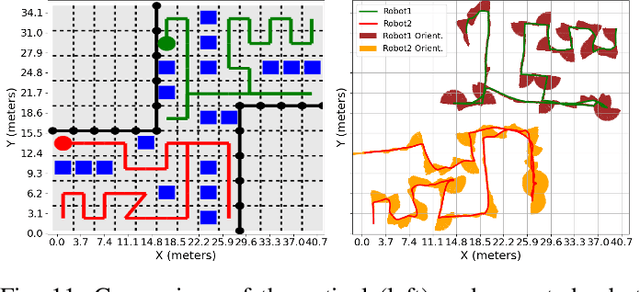

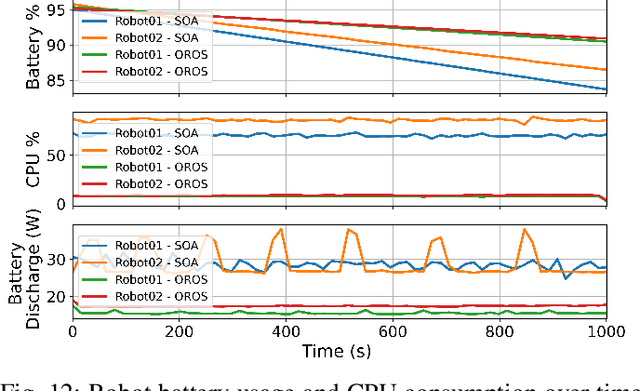

Abstract:5G mobile networks introduce a new dimension for connecting and operating mobile robots in outdoor environments, leveraging cloud-native and offloading features of 5G networks to enable fully flexible and collaborative cloud robot operations. However, the limited battery life of robots remains a significant obstacle to their effective adoption in real-world exploration scenarios. This paper explores, via field experiments, the potential energy-saving gains of OROS, a joint orchestration of 5G and Robot Operating System (ROS) that coordinates multiple 5G-connected robots both in terms of navigation and sensing, as well as optimizes their cloud-native service resource utilization while minimizing total resource and energy consumption on the robots based on real-time feedback. We designed, implemented and evaluated our proposed OROS in an experimental testbed composed of commercial off-the-shelf robots and a local 5G infrastructure deployed on a campus. The experimental results demonstrated that OROS significantly outperforms state-of-the-art approaches in terms of energy savings by offloading demanding computational tasks to the 5G edge infrastructure and dynamic energy management of on-board sensors (e.g., switching them off when they are not needed). This strategy achieves approximately 15% energy savings on the robots, thereby extending battery life, which in turn allows for longer operating times and better resource utilization.

* 14 pages, 15 figures, journal

RiLoCo: An ISAC-oriented AI Solution to Build RIS-empowered Networks

Mar 07, 2025

Abstract:The advance towards 6G networks comes with the promise of unprecedented performance in sensing and communication capabilities. The feat of achieving those, while satisfying the ever-growing demands placed on wireless networks, promises revolutionary advancements in sensing and communication technologies. As 6G aims to cater to the growing demands of wireless network users, the implementation of intelligent and efficient solutions becomes essential. In particular, reconfigurable intelligent surfaces (RISs), also known as Smart Surfaces, are envisioned as a transformative technology for future 6G networks. The performance of RISs when used to augment existing devices is nevertheless largely affected by their precise location. Suboptimal deployments are also costly to correct, negating their low-cost benefits. This paper investigates the topic of optimal RISs diffusion, taking into account the improvement they provide both for the sensing and communication capabilities of the infrastructure while working with other antennas and sensors. We develop a combined metric that takes into account the properties and location of the individual devices to compute the performance of the entire infrastructure. We then use it as a foundation to build a reinforcement learning architecture that solves the RIS deployment problem. Since our metric measures the surface where given localization thresholds are achieved and the communication coverage of the area of interest, the novel framework we provide is able to seamlessly balance sensing and communication, showing its performance gain against reference solutions, where it achieves simultaneously almost the reference performance for communication and the reference performance for localization.

TelecomRAG: Taming Telecom Standards with Retrieval Augmented Generation and LLMs

Jun 11, 2024

Abstract:Large Language Models (LLMs) have immense potential to transform the telecommunications industry. They could help professionals understand complex standards, generate code, and accelerate development. However, traditional LLMs struggle with the precision and source verification essential for telecom work. To address this, specialized LLM-based solutions tailored to telecommunication standards are needed. Retrieval-augmented generation (RAG) offers a way to create precise, fact-based answers. This paper proposes TelecomRAG, a framework for a Telecommunication Standards Assistant that provides accurate, detailed, and verifiable responses. Our implementation, using a knowledge base built from 3GPP Release 16 and Release 18 specification documents, demonstrates how this assistant surpasses generic LLMs, offering superior accuracy, technical depth, and verifiability, and thus significant value to the telecommunications field.

Are you a robot? Detecting Autonomous Vehicles from Behavior Analysis

Mar 14, 2024

Abstract:The tremendous hype around autonomous driving is eagerly calling for emerging and novel technologies to support advanced mobility use cases. As car manufacturers keep developing SAE level 3+ systems to improve the safety and comfort of passengers, traffic authorities need to establish new procedures to manage the transition from human-driven to fully-autonomous vehicles while providing a feedback-loop mechanism to fine-tune envisioned autonomous systems. Thus, a way to automatically profile autonomous vehicles and differentiate those from human-driven ones is a must. In this paper, we present a fully-fledged framework that monitors active vehicles using camera images and state information in order to determine whether vehicles are autonomous, without requiring any active notification from the vehicles themselves. Essentially, it builds on the cooperation among vehicles, which share their data acquired on the road feeding a machine learning model to identify autonomous cars. We extensively tested our solution and created the NexusStreet dataset, by means of the CARLA simulator, employing an autonomous driving control agent and a steering wheel maneuvered by licensed drivers. Experiments show it is possible to discriminate the two behaviors by analyzing video clips with an accuracy of 80%, which improves up to 93% when the target state information is available. Lastly, we deliberately degraded the state to observe how the framework performs under non-ideal data collection conditions.

Fair Resource Allocation in Virtualized O-RAN Platforms

Feb 17, 2024

Abstract:O-RAN systems and their deployment in virtualized general-purpose computing platforms (O-Cloud) constitute a paradigm shift expected to bring unprecedented performance gains. However, these architectures raise new implementation challenges and threaten to worsen the already-high energy consumption of mobile networks. This paper first presents a series of experiments which assess the O-Cloud's energy costs and their dependency on the servers' hardware, capacity and data traffic properties which, typically, change over time. Next, it proposes a compute policy for assigning the base station data loads to O-Cloud servers in an energy-efficient fashion; and a radio policy that determines in near-real-time the minimum transmission block size for each user so as to avoid unnecessary energy costs. The policies balance energy savings with performance, and ensure that both of them are dispersed fairly across the servers and users, respectively. To cater for the unknown and time-varying parameters affecting the policies, we develop a novel online learning framework with fairness guarantees that apply to the entire operation horizon of the system (long-term fairness). The policies are evaluated using trace-driven simulations and are fully implemented in an O-RAN compatible system where we measure the energy costs and throughput in realistic scenarios.

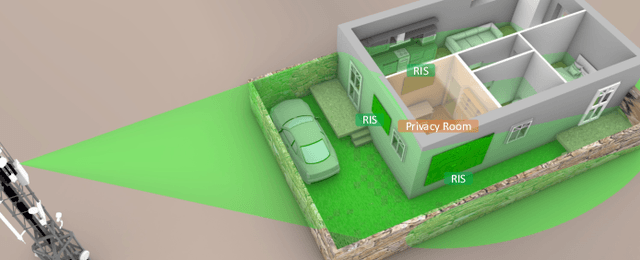

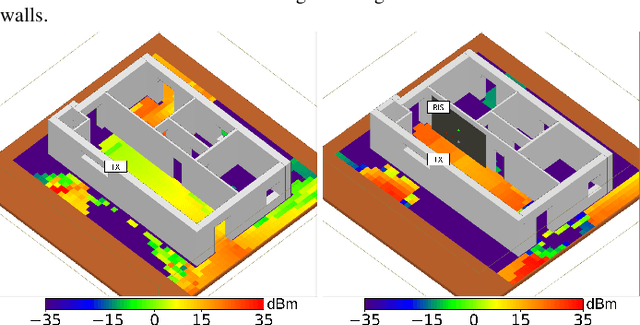

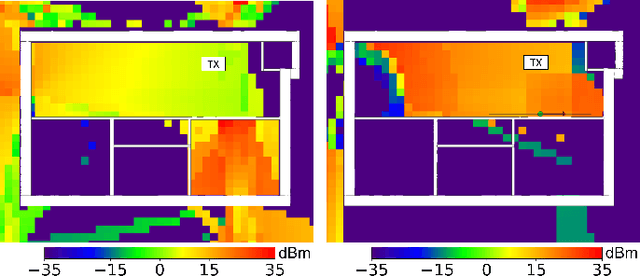

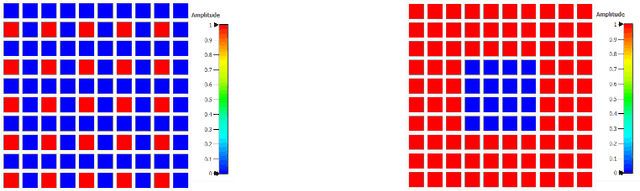

RIShield: Enabling Electromagnetic Blackout in Radiation-Sensitive Environments

Dec 20, 2023

Abstract:Reconfigurable Intelligent Surfaces (RIS) have emerged as a disruptive technology with the potential to revolutionize wireless communication systems. In this paper, we present RIShield, a novel application of RIS technology specifically designed for radiation-sensitive environments. The aim of RIShield is to enable electromagnetic blackouts, preventing radiation leakage from target areas. We propose a comprehensive framework for RIShield deployment, considering the unique challenges and requirements of radiation-sensitive environments. By strategically positioning RIS panels, we create an intelligent shielding mechanism that selectively absorbs and reflects electromagnetic waves, effectively blocking radiation transmission. To achieve optimal performance, we model the corresponding channel and design a dynamic control that adjusts the RIS configuration based on real-time radiation monitoring. By leveraging the principles of reconfiguration and intelligent control, RIShield ensures adaptive and efficient protection while minimizing signal degradation. Through full-wave and ray-tracing simulations, we demonstrate the effectiveness of RIShield in achieving significant electromagnetic attenuation. Our results highlight the potential of RIS technology to address critical concerns in radiation-sensitive environments, paving the way for safer and more secure operations in industries such as healthcare, nuclear facilities, and defense.

Risk-Aware Continuous Control with Neural Contextual Bandits

Dec 15, 2023

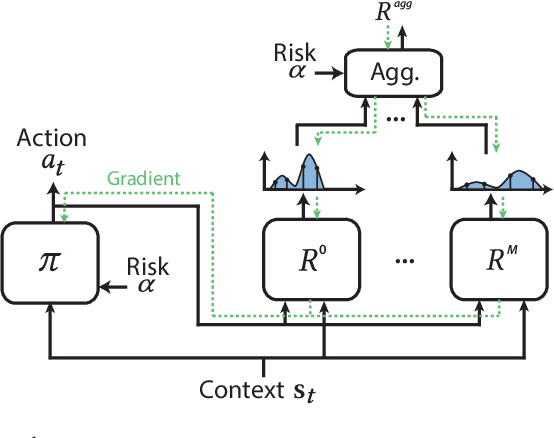

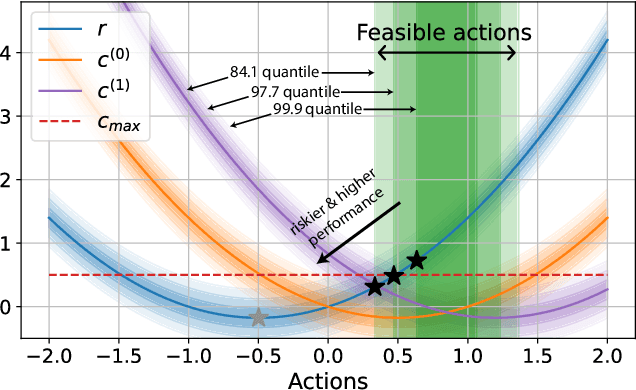

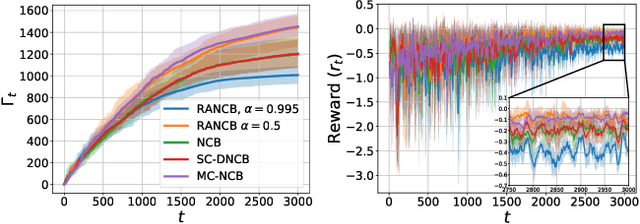

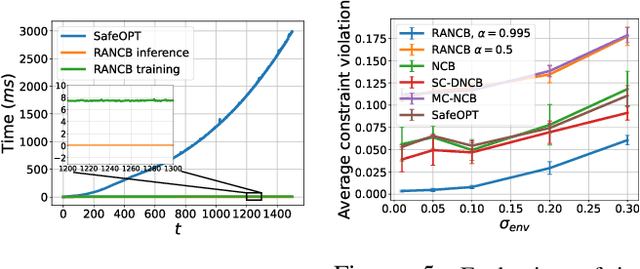

Abstract:Recent advances in learning techniques have garnered attention for their applicability to a diverse range of real-world sequential decision-making problems. Yet, many practical applications have critical constraints for operation in real environments. Most learning solutions often neglect the risk of failing to meet these constraints, hindering their implementation in real-world contexts. In this paper, we propose a risk-aware decision-making framework for contextual bandit problems, accommodating constraints and continuous action spaces. Our approach employs an actor multi-critic architecture, with each critic characterizing the distribution of performance and constraint metrics. Our framework is designed to cater to various risk levels, effectively balancing constraint satisfaction against performance. To demonstrate the effectiveness of our approach, we first compare it against state-of-the-art baseline methods in a synthetic environment, highlighting the impact of intrinsic environmental noise across different risk configurations. Finally, we evaluate our framework in a real-world use case involving a 5G mobile network where only our approach consistently satisfies the system constraint (a signal processing reliability target) with a small performance toll (8.5% increase in power consumption).

Empirical Validation of the Impedance-Based RIS Channel Model in an Indoor Scattering Environment

Dec 01, 2023

Abstract:Ensuring the precision of channel modeling plays a pivotal role in the development of wireless communication systems, and this requirement remains a persistent challenge within the realm of networks supported by Reconfigurable Intelligent Surfaces (RIS). Achieving a comprehensive and reliable understanding of channel behavior in RIS-aided networks is an ongoing and complex issue that demands further exploration. In this paper, we empirically validate a recently-proposed impedance-based RIS channel model that accounts for the mutual coupling at the antenna array and precisely models the presence of scattering objects within the environment as a discrete array of loaded dipoles. To this end, we exploit real-life channel measurements collected in an office environment to demonstrate the validity of such a model and its applicability in a practical scenario. Finally, we provide numerical results demonstrating that designing the RIS configuration based upon such a model leads to superior performance as compared to reference schemes.

Analytical Modelling of Raw Data for Flow-Guided In-body Nanoscale Localization

Sep 27, 2023

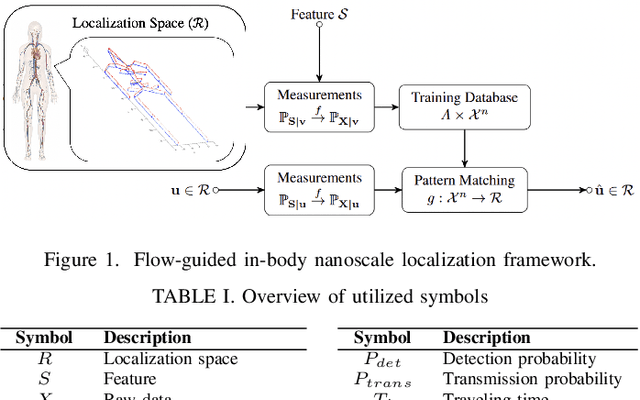

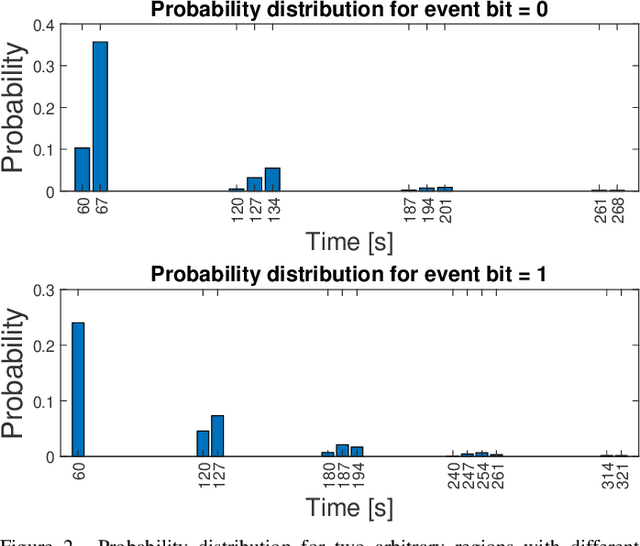

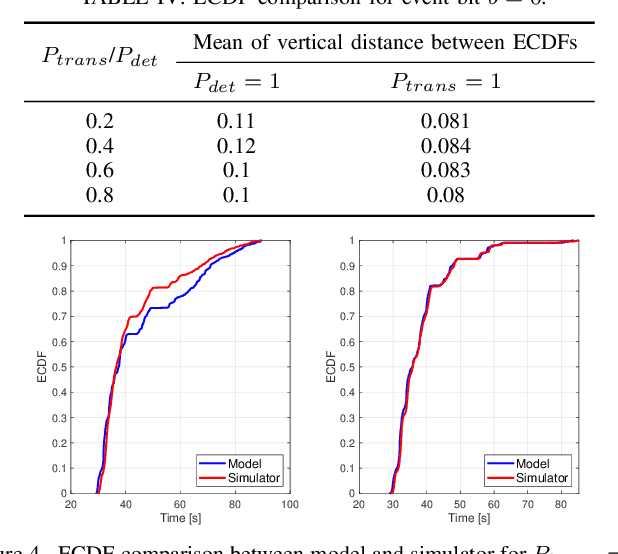

Abstract:Advancements in nanotechnology and material science are paving the way toward nanoscale devices that combine sensing, computing, data and energy storage, and wireless communication. In precision medicine, these nanodevices show promise for disease diagnostics, treatment, and monitoring from within the patients' bloodstreams. Associating the location of a sensed biological event with the event itself, which is the main proposition of flow-guided in-body nanoscale localization, would be immensely beneficial from the perspective of precision medicine. The nanoscale nature of the nanodevices and the challenging environment that the bloodstream represents result in current flow-guided localization approaches being constrained in their communication and energy-related capabilities. The communication and energy constraints of the nanodevices result in different features of raw data for flow-guided localization, in turn affecting its performance. An analytical modeling of the effects of imperfect communication and constrained energy causing intermittent operation of the nanodevices on the raw data produced by the nanodevices would be beneficial. Hence, we propose an analytical model of raw data for flow-guided localization, where the raw data is modeled as a function of communication and energy-related capabilities of the nanodevice. We evaluate the model by comparing its output with the one obtained through the utilization of a simulator for objective evaluation of flow-guided localization, featuring a comparably higher level of realism. Our results across a number of scenarios and heterogeneous performance metrics indicate high similarity between the model and simulator-generated raw datasets.

On the Degrees of Freedom of RIS-Aided Holographic MIMO Systems

Jan 19, 2023

Abstract:In this paper, we study surface-based communication systems based on different levels of channel state information for system optimization. We analyze the system performance in terms of rate and degrees of freedom (DoF). We show that the deployment of a reconfigurable intelligent surface (RIS) results in increasing the number of DoF, by extending the near-field region. Over Rician fading channels, we show that an RIS can be efficiently optimized only based on the positions of the transmitting and receiving surfaces, while providing good performance if the Rician fading factor is not too small.
