Guillem Pascual

Analytical Modelling of Raw Data for Flow-Guided In-body Nanoscale Localization

Sep 27, 2023

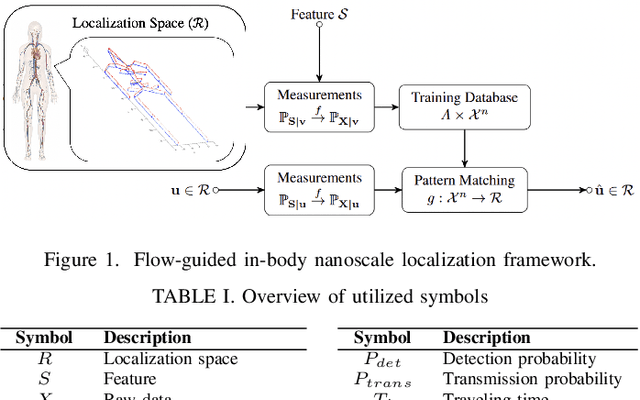

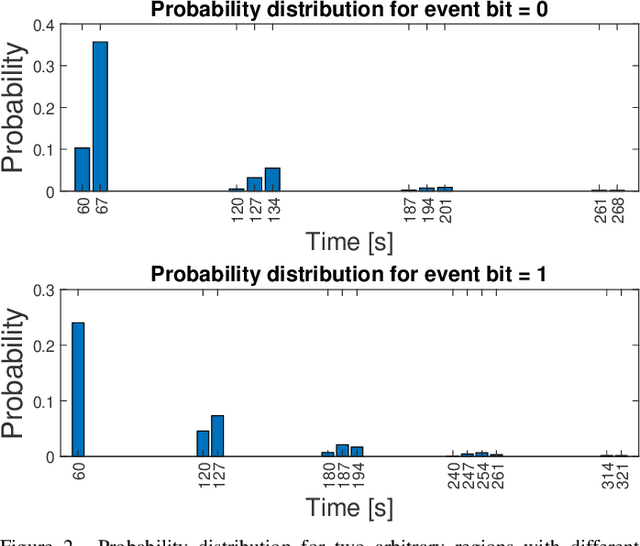

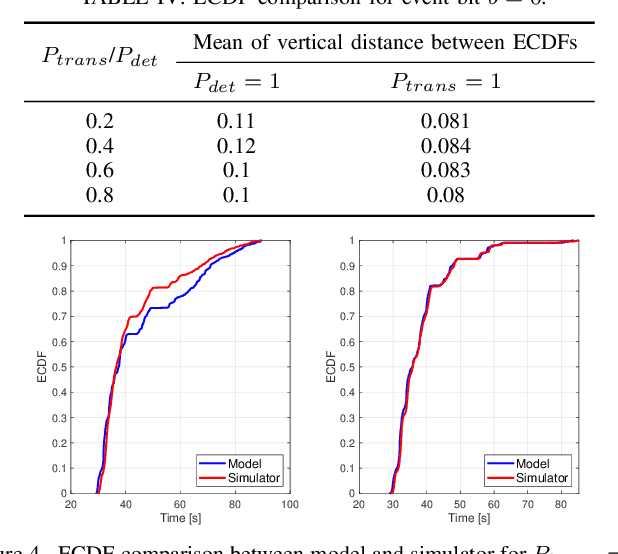

Abstract: Advancements in nanotechnology and material science are paving the way toward nanoscale devices that combine sensing, computing, data and energy storage, and wireless communication. In precision medicine, these nanodevices show promise for disease diagnostics, treatment, and monitoring from within the patients' bloodstreams. Associating a sensed biological event with the location at which it occurred, which is the main proposition of flow-guided in-body nanoscale localization, would be immensely beneficial from the perspective of precision medicine. The nanoscale nature of the nanodevices and the challenging environment that the bloodstream represents result in current flow-guided localization approaches being constrained in their communication and energy-related capabilities. These constraints shape the features of the raw data available for flow-guided localization, in turn affecting its performance. An analytical model of how imperfect communication and constrained energy, which causes intermittent operation of the nanodevices, affect the raw data they produce would therefore be beneficial. Hence, we propose an analytical model of raw data for flow-guided localization, where the raw data is modeled as a function of the communication and energy-related capabilities of the nanodevice. We evaluate the model by comparing its output with that of a simulator for objective evaluation of flow-guided localization, which features a comparatively higher level of realism. Our results across a number of scenarios and heterogeneous performance metrics indicate high similarity between the model-generated and simulator-generated raw datasets.

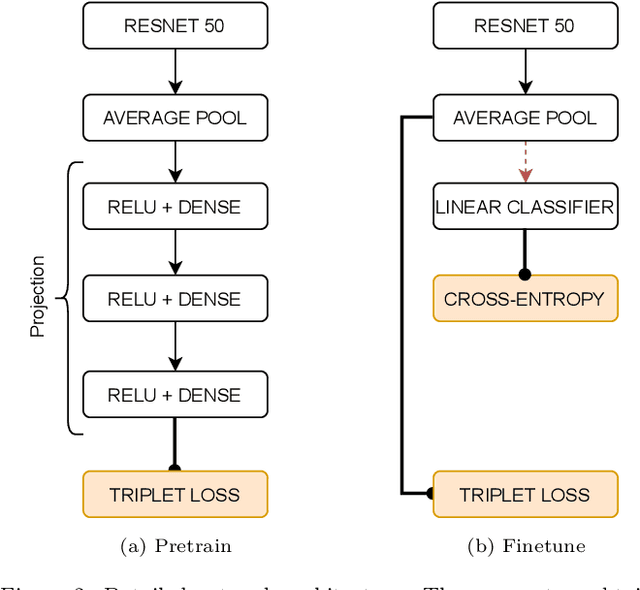

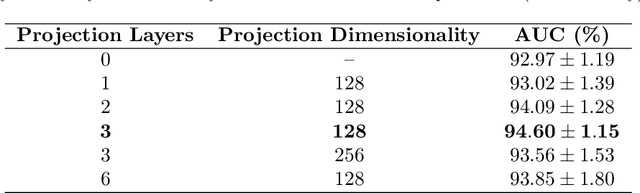

Time-based Self-supervised Learning for Wireless Capsule Endoscopy

Apr 20, 2022

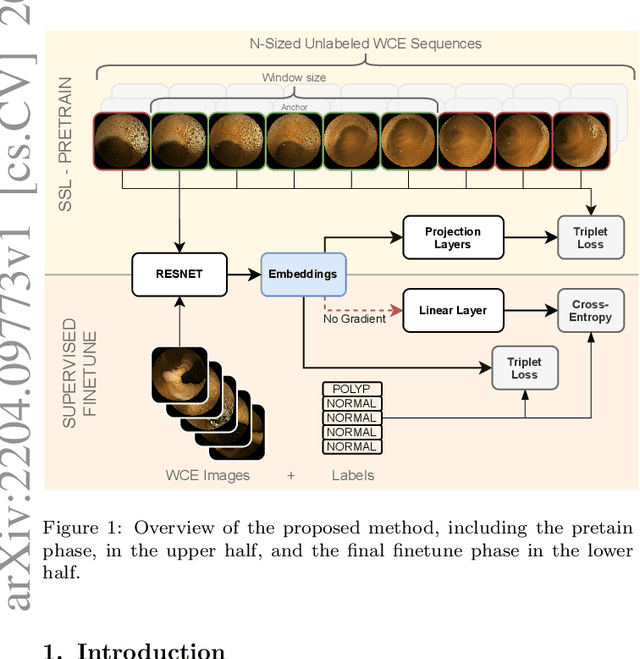

Abstract: State-of-the-art machine learning models, and especially deep learning ones, are significantly data-hungry; they require vast amounts of manually labeled samples to function correctly. However, in most medical imaging fields, obtaining such data can be challenging. Not only is the volume of data a problem, but so are the imbalances among its classes; it is common to have many more images of healthy patients than of those with pathology. Computer-aided diagnostic systems suffer from these issues, and their models are usually over-designed in order to perform accurately. This work proposes using self-supervised learning for wireless endoscopy videos by introducing a custom-tailored method that does not initially need labels or appropriate class balance. We show that using the inherent structure inferred by our method, extracted from the temporal axis, improves the detection rate on several domain-specific applications even under severe imbalance.

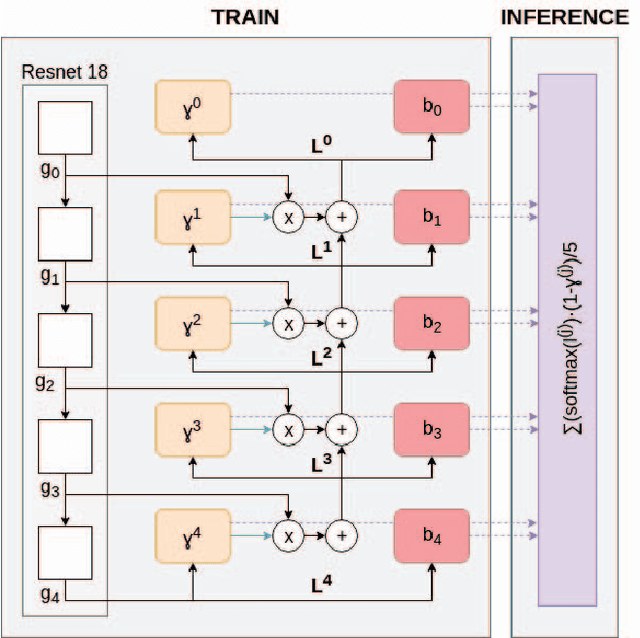

Uncertainty Gated Network for Land Cover Segmentation

May 29, 2018

Abstract: The production of thematic maps depicting land cover is one of the most common applications of remote sensing. To this end, several semantic segmentation approaches based on deep learning have been proposed in the literature, but land cover segmentation is still considered an open problem because of challenges specific to remote sensing imagery. In this paper we propose a novel approach to the problem of modelling the multiscale contexts surrounding pixels of different land cover categories. The approach computes a heteroscedastic measure of uncertainty when classifying individual pixels in an image. This classification uncertainty measure is used to define a set of memory gates between layers, which provide a principled way to select the optimal decision for each pixel.

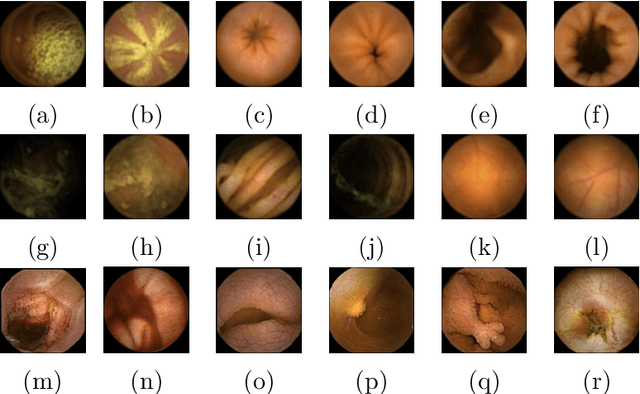

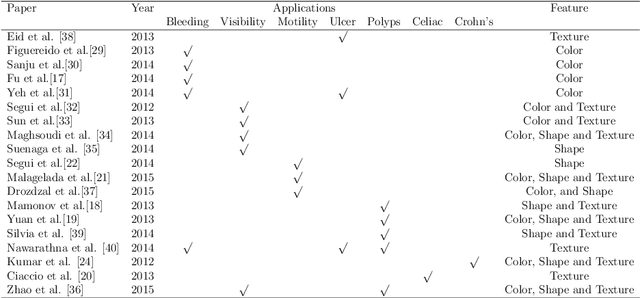

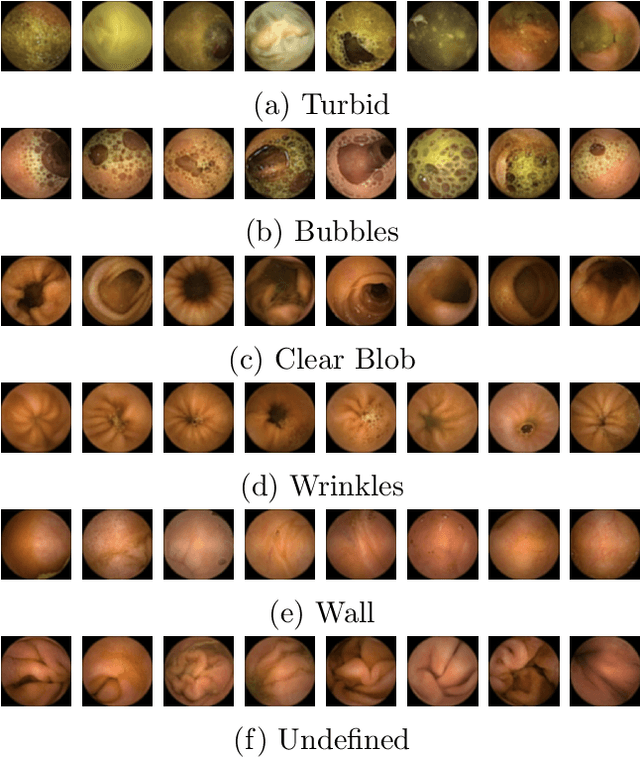

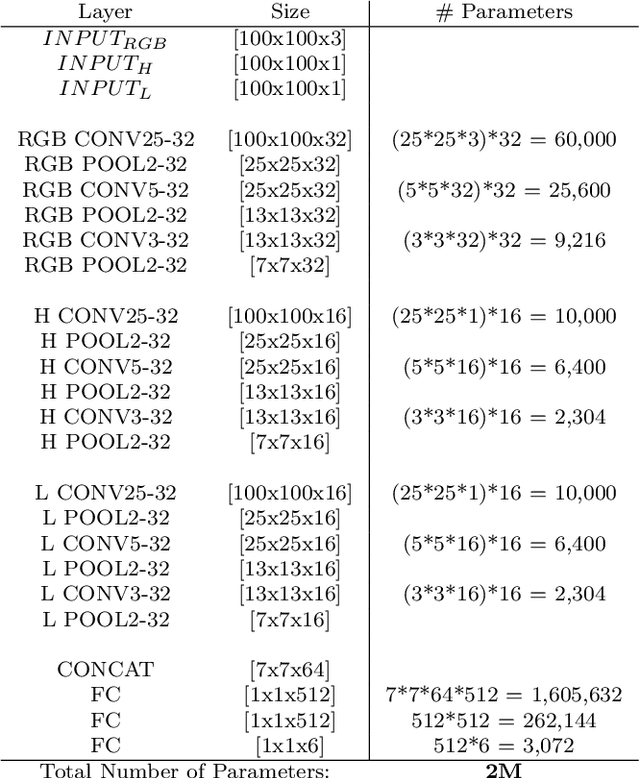

Generic Feature Learning for Wireless Capsule Endoscopy Analysis

Jul 26, 2016

Abstract: The interpretation and analysis of wireless capsule endoscopy (WCE) recordings is a complex task that requires sophisticated computer-aided decision (CAD) systems to help physicians with the video screening and, ultimately, with the diagnosis. Most CAD systems in capsule endoscopy share a common system design but use very different image and video representations. As a result, each time a new clinical application of WCE appears, a new CAD system has to be designed from scratch, which makes the design of new CAD systems very time-consuming. Therefore, in this paper we introduce a system for small intestine motility characterization, based on Deep Convolutional Neural Networks, which avoids the laborious step of designing specific features for individual motility events. Experimental results show the superiority of the learned features over alternative classifiers constructed using state-of-the-art hand-crafted features. In particular, the system reaches a mean classification accuracy of 96% for six intestinal motility events, outperforming the other classifiers by a large margin (a 14% relative performance increase).
