Carolina Malagelada

WCE Polyp Detection with Triplet based Embeddings

Dec 10, 2019

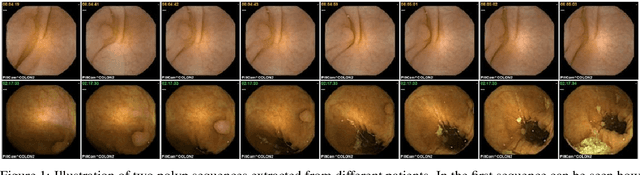

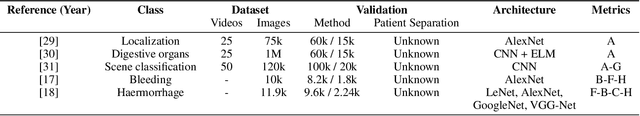

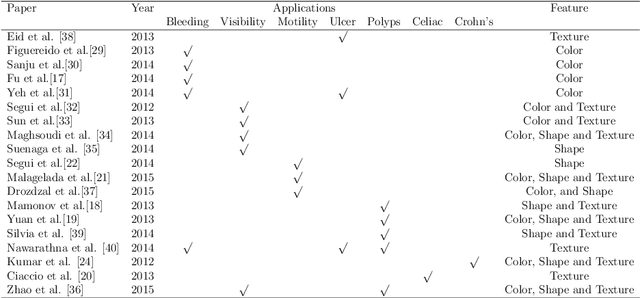

Abstract: Wireless capsule endoscopy is a medical procedure used to visualize the entire gastrointestinal tract and to diagnose intestinal conditions, such as polyps or bleeding. Current analyses are performed by manually inspecting nearly every frame of the video, a tedious and error-prone task. Automatic image analysis methods can reduce the time physicians need to evaluate a capsule endoscopy video, but these methods are still in a research phase. In this paper we focus on computer-aided polyp detection in capsule endoscopy images. This is a challenging problem because of the diversity of polyp appearance, the imbalanced dataset structure and the scarcity of data. We have developed a new computer-aided decision system for polyp detection that combines a deep convolutional neural network and metric learning. The key point of the method is the use of the triplet loss function to improve feature extraction from the images when the dataset is small. The triplet loss function makes it possible to train robust detectors by forcing images from the same category to be represented by similar embedding vectors while ensuring that images from different categories are represented by dissimilar vectors. Empirical results show a meaningful increase in AUC values compared to baseline methods. Good performance is not the only requirement when considering the adoption of this technology in clinical practice: trust and explainability of decisions are as important as performance. For this purpose, we also provide a method to generate visual explanations of the outcome of our polyp detector. These explanations can be used to build a physician's trust in the system and also to convey information about the inner workings of the method to the designer for debugging purposes.
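
The triplet loss mentioned in the abstract can be illustrated with a minimal sketch. This is a common textbook formulation and not necessarily the exact variant used in the paper: the Euclidean distance, the margin value of 0.2, and the function names `euclidean` and `triplet_loss` are all assumptions made for illustration. The loss is zero once the anchor is closer to the same-category (positive) embedding than to the different-category (negative) embedding by at least the margin.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two embedding vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge-style triplet loss for a single (anchor, positive, negative) triplet.

    The margin default of 0.2 is a hypothetical value chosen for this example.
    """
    d_pos = euclidean(anchor, positive)   # distance to a same-category embedding
    d_neg = euclidean(anchor, negative)   # distance to a different-category embedding
    # Penalize only when the negative is not at least `margin` farther than the positive.
    return max(0.0, d_pos - d_neg + margin)


# Toy 2-D embeddings (made up for illustration, not taken from the paper).
anchor = [0.0, 0.0]
positive = [0.1, 0.0]
far_negative = [1.0, 0.0]    # well separated: contributes no loss
near_negative = [0.2, 0.0]   # too close to the anchor: contributes a positive loss

loss_easy = triplet_loss(anchor, positive, far_negative)
loss_hard = triplet_loss(anchor, positive, near_negative)
```

In this toy case the well-separated triplet yields no loss, while the triplet whose negative lies within the margin yields a positive loss, which is the signal that would push the embeddings apart during training.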

Generic Feature Learning for Wireless Capsule Endoscopy Analysis

Jul 26, 2016

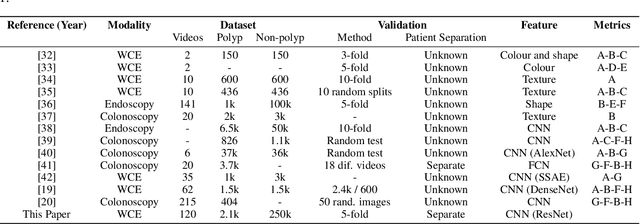

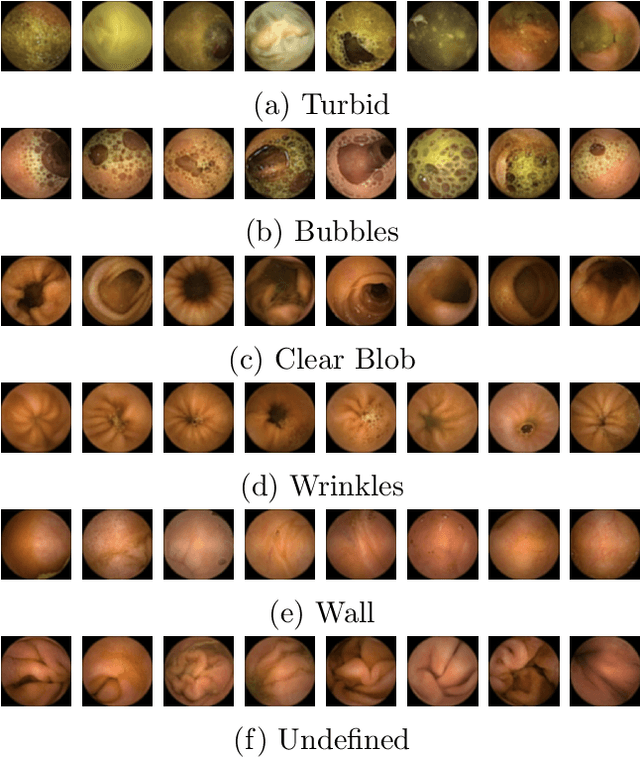

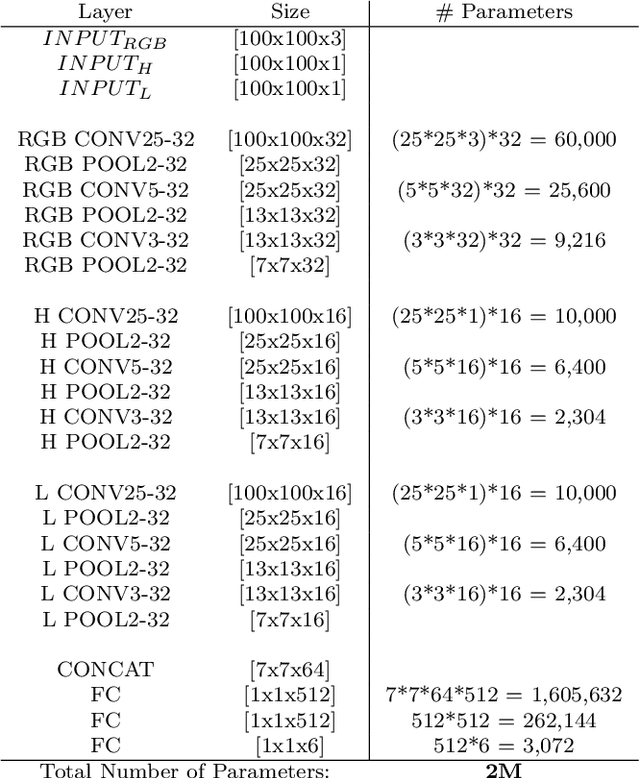

Abstract: The interpretation and analysis of wireless capsule endoscopy (WCE) recordings is a complex task that requires sophisticated computer-aided decision (CAD) systems to help physicians with the video screening and, ultimately, with the diagnosis. Most CAD systems in capsule endoscopy share a common system design but use very different image and video representations. As a result, each time a new clinical application of WCE appears, a new CAD system has to be designed from scratch, which makes the design of new CAD systems very time-consuming. Therefore, in this paper we introduce a system for small intestine motility characterization, based on Deep Convolutional Neural Networks, which avoids the laborious step of designing specific features for individual motility events. Experimental results show the superiority of the learned features over alternative classifiers constructed using state-of-the-art hand-crafted features. In particular, the system reaches a mean classification accuracy of 96% for six intestinal motility events, outperforming the other classifiers by a large margin (a 14% relative performance increase).
