Wufan Chen

Cross-sectional imaging of speed-of-sound distribution using photoacoustic reversal beacons

Aug 26, 2024

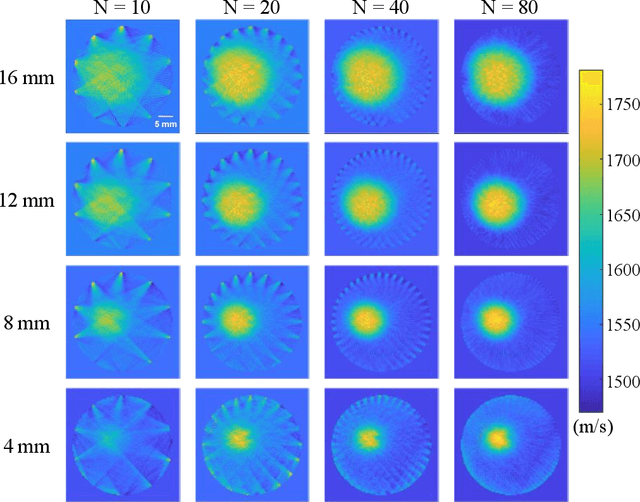

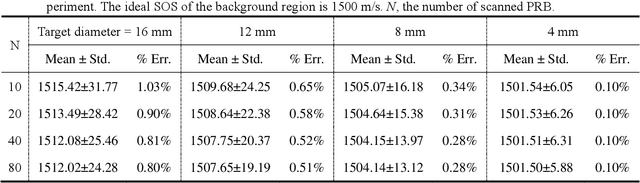

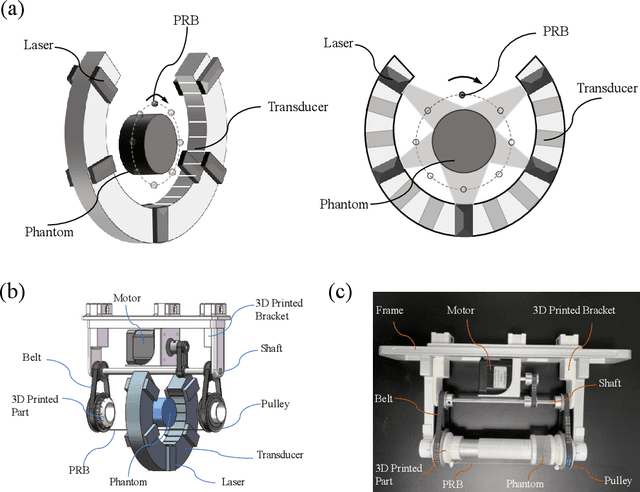

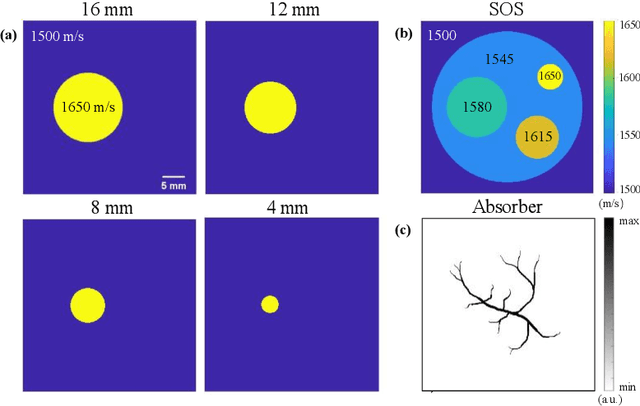

Abstract: Photoacoustic tomography (PAT) enables non-invasive cross-sectional imaging of biological tissues, but it cannot map the spatial variation of speed of sound (SOS) within tissues. Because SOS is intimately linked to the density and elastic modulus of tissues, imaging the SOS distribution serves as a complementary modality to PAT. Moreover, an accurate SOS map can be leveraged to correct for PAT image degradation arising from acoustic heterogeneities. Herein, we propose a novel approach for SOS reconstruction using only the PAT imaging modality. Our method is based on photoacoustic reversal beacons (PRBs), which are small light-absorbing targets with strong photoacoustic contrast. We excite and scan a number of PRBs positioned at the periphery of the target, and the generated photoacoustic waves propagate through the target from various directions, thereby achieving spatial sampling of the internal SOS. We formulate a linear inverse model for pixel-wise SOS reconstruction and solve it with an iterative optimization technique. We validate the feasibility of the proposed method through simulations, phantoms, and ex vivo biological tissue tests. Experimental results demonstrate that our approach can achieve accurate reconstruction of the SOS distribution. Leveraging the obtained SOS map, we further demonstrate significantly enhanced PAT image reconstruction with acoustic correction.
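The abstract does not spell out the linear model. One common way to obtain one, assumed here purely for illustration, is straight-ray travel-time tomography: each beacon-to-detector arrival time is the sum of per-pixel path lengths multiplied by per-pixel slowness (1/SOS). The sketch below builds such a system on a tiny grid and solves it with Kaczmarz iterations. The geometry, the function names (`ray_row`, `kaczmarz`, `demo`), and the choice of solver are all hypothetical and may differ from the paper's method.

```python
import math

def ray_row(src, det, n, pixel, samples=400):
    """Approximate the path length of a straight ray in each pixel by dense sampling."""
    row = [0.0] * (n * n)
    dx, dy = det[0] - src[0], det[1] - src[1]
    step = math.hypot(dx, dy) / samples
    for k in range(samples):
        t = (k + 0.5) / samples
        x, y = src[0] + t * dx, src[1] + t * dy
        i, j = int(y // pixel), int(x // pixel)
        if 0 <= i < n and 0 <= j < n:
            row[i * n + j] += step
    return row

def kaczmarz(A, t, x0, sweeps=50):
    """Row-action solver for A x = t: project onto one ray equation at a time."""
    x = list(x0)
    for _ in range(sweeps):
        for row, ti in zip(A, t):
            norm = sum(a * a for a in row)
            if norm == 0.0:
                continue
            c = (ti - sum(a * xi for a, xi in zip(row, x))) / norm
            for idx, a in enumerate(row):
                if a:
                    x[idx] += c * a
    return x

def demo(n=4, pixel=1.0):
    """Assumed setup: beacons on the left/top edges, detectors on the right/bottom (mm, us)."""
    edge = [(k + 0.5) * pixel for k in range(n)]
    size = n * pixel
    beacons = [(0.0, c) for c in edge] + [(c, 0.0) for c in edge]
    detectors = [(size, c) for c in edge] + [(c, size) for c in edge]
    A = [ray_row(b, d, n, pixel) for b in beacons for d in detectors]
    background = 1.0 / 1.5                 # slowness for 1.5 mm/us
    true_s = [background] * (n * n)
    true_s[1 * n + 2] = 1.0 / 1.6          # one faster inclusion
    t = [sum(a * s for a, s in zip(row, true_s)) for row in A]  # simulated arrival times
    est = kaczmarz(A, t, [background] * (n * n))
    return true_s, est

if __name__ == "__main__":
    true_s, est = demo()
    print([round(1.0 / s, 3) for s in est])  # estimated SOS per pixel, mm/us
```

Working in slowness rather than SOS is what makes the travel-time relation linear; the SOS map is recovered afterwards as the per-pixel reciprocal.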

Performance of Medical Image Fusion in High-level Analysis Tasks: A Mutual Enhancement Framework for Unaligned PAT and MRI Image Fusion

Jul 04, 2024

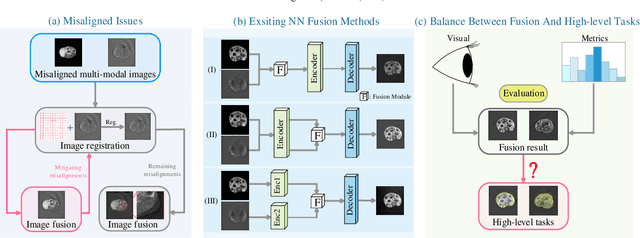

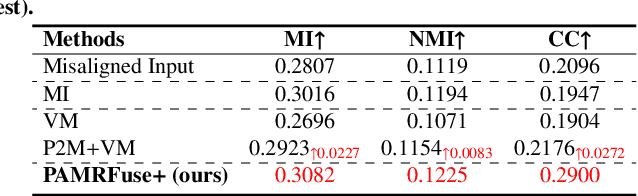

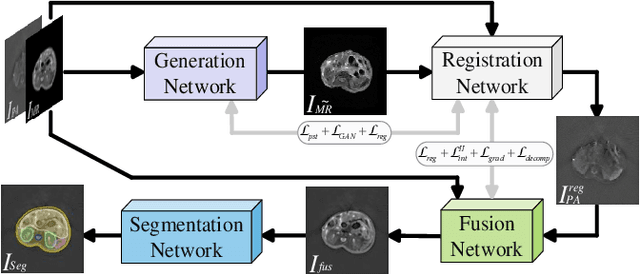

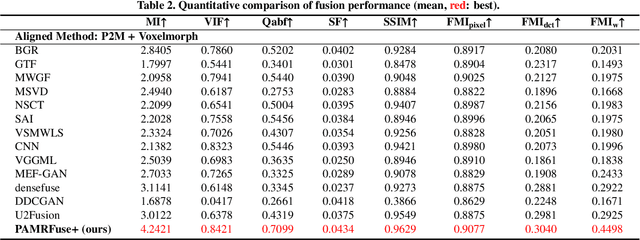

Abstract: Photoacoustic tomography (PAT) offers optical contrast, whereas magnetic resonance imaging (MRI) excels in imaging soft tissue and organ anatomy. The fusion of PAT with MRI holds promising application prospects due to their complementary advantages. Existing image fusion methods have made considerable progress on pre-registered images, yet spatial deformations are difficult to avoid in medical imaging scenarios. More importantly, current algorithms focus on visual quality and statistical metrics, thus overlooking the requirements of high-level tasks. To address these challenges, we propose an unsupervised fusion model, termed PAMRFuse+, which integrates image generation and registration. Specifically, a cross-modal style transfer network is introduced to simplify cross-modal registration to single-modal registration. Subsequently, a multi-level registration network is employed to predict displacement vector fields. Furthermore, a dual-branch feature decomposition fusion network is proposed to address the challenges of cross-modal feature modeling and decomposition by integrating modality-specific and modality-shared features. PAMRFuse+ achieves satisfactory results in registering and fusing unaligned PAT-MRI datasets. Moreover, for the first time, we evaluate the performance of medical image fusion with contour segmentation and multi-organ instance segmentation. Extensive experiments reveal the advantages of PAMRFuse+ in improving the performance of medical image analysis tasks.

Spiral Scanning and Self-Supervised Image Reconstruction Enable Ultra-Sparse Sampling Multispectral Photoacoustic Tomography

Apr 10, 2024

Abstract: Multispectral photoacoustic tomography (PAT) is an imaging modality that utilizes the photoacoustic effect to achieve non-invasive and high-contrast imaging of internal tissues. However, the hardware cost and computational demand of a multispectral PAT system consisting of up to thousands of detectors are huge. To address this challenge, we propose an ultra-sparse spiral sampling strategy for multispectral PAT, which we name U3S-PAT. Our strategy employs a sparse ring-shaped transducer that, when switching excitation wavelengths, simultaneously rotates and translates. This creates a spiral scanning pattern with multispectral angle-interlaced sampling. To solve the highly ill-conditioned image reconstruction problem, we propose a self-supervised learning method that is able to introduce structural information shared during spiral scanning. We simulate the proposed U3S-PAT method on a commercial PAT system and conduct in vivo animal experiments to verify its performance. The results show that even with a sparse sampling rate as low as 1/30, our U3S-PAT strategy achieves reconstruction and spectral unmixing accuracy similar to that of non-spiral dense sampling. Given its ability to dramatically reduce the time required for three-dimensional multispectral scanning, our U3S-PAT strategy has the potential to perform volumetric molecular imaging of dynamic biological activities.
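The rotate-and-translate pattern can be sketched as a small geometry calculation. The function below is a hypothetical illustration, not the paper's protocol: it assumes equally spaced detectors on the ring, a rotation of one detector pitch divided by the number of wavelengths at each wavelength switch, and a fixed axial step. `spiral_positions`, `n_det`, `n_wavelengths`, and `z_step` are made-up names and parameters.

```python
import math

def spiral_positions(n_det, n_wavelengths, z_step):
    """Detector angles and ring height for each excitation wavelength.

    Assumption: the ring rotates by (detector pitch / n_wavelengths) and
    translates by z_step every time the wavelength is switched.
    """
    pitch = 2 * math.pi / n_det
    offset = pitch / n_wavelengths
    scan = []
    for k in range(n_wavelengths):
        angles = [(d * pitch + k * offset) % (2 * math.pi) for d in range(n_det)]
        scan.append({"wavelength_index": k, "z": k * z_step, "angles": angles})
    return scan

if __name__ == "__main__":
    for shot in spiral_positions(n_det=8, n_wavelengths=4, z_step=0.1):
        first = math.degrees(shot["angles"][0])
        print(shot["wavelength_index"], round(shot["z"], 3), round(first, 2))
```

Under these assumptions no two wavelengths share a view angle, so the sparse per-wavelength views interleave into a denser angular sampling across the whole scan.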

Deep learning acceleration of iterative model-based light fluence correction for photoacoustic tomography

Dec 08, 2023

Abstract: Photoacoustic tomography (PAT) is a promising imaging technique that can visualize the distribution of chromophores within biological tissue. However, the accuracy of PAT imaging is compromised by light fluence (LF), which hinders the quantification of light absorbers. Currently, model-based iterative methods are used for LF correction, but they require significant computational resources due to repeated LF estimation based on differential light transport models. To improve LF correction efficiency, we propose to use the Fourier neural operator (FNO), a neural network specially designed for solving differential equations, to learn the forward projection of light transport in PAT. Trained using paired finite-element-based LF simulation data, our FNO model replaces the traditional, computationally heavy LF estimator during iterative correction, such that the correction procedure is significantly accelerated. Simulation and experimental results demonstrate that our method achieves LF correction quality comparable to that of traditional iterative methods while reducing the correction time by a factor of more than 30.
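The structure of an iterative LF correction can be sketched with a fixed-point loop: estimate the fluence from the current absorption map, divide the measured image by it, and repeat. The sketch below is a toy under stated assumptions. It uses a 1D Beer-Lambert decay as the forward model, sets the Grüneisen parameter to 1, and the names `surrogate_fluence` and `correct_fluence` are hypothetical. In the paper the forward model is a trained FNO replacing a finite-element solver; here `surrogate_fluence` only marks where such a model would plug in.

```python
import math

def surrogate_fluence(mu_a, dz):
    """Toy stand-in for the forward light-transport model (1D Beer-Lambert decay)."""
    fluence, optical_depth = [], 0.0
    for mu in mu_a:
        fluence.append(math.exp(-optical_depth))
        optical_depth += mu * dz
    return fluence

def correct_fluence(p0, dz, forward=surrogate_fluence, n_iter=30):
    """Fixed-point correction: mu_a <- p0 / fluence(mu_a), assuming p0 = mu_a * fluence."""
    mu = list(p0)  # initial guess: the uncorrected image
    for _ in range(n_iter):
        phi = forward(mu, dz)
        mu = [p / max(f, 1e-12) for p, f in zip(p0, phi)]
    return mu

if __name__ == "__main__":
    dz = 0.5                                        # mm per depth sample (assumed)
    true_mu = [0.1] * 5 + [0.5] * 5 + [0.1] * 10    # 1/mm, with an absorbing layer
    phi = surrogate_fluence(true_mu, dz)
    p0 = [m * f for m, f in zip(true_mu, phi)]      # simulated initial pressure
    est = correct_fluence(p0, dz)
    print(max(abs(a - b) for a, b in zip(true_mu, est)))
```

Because the forward model is called once per iteration, swapping a slow solver for a fast learned surrogate shortens every pass of the loop, which is where the reported speed-up would come from.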

3D-EPI Blip-Up/Down Acquisition with CAIPI and Joint Hankel Structured Low-Rank Reconstruction for Rapid Distortion-Free High-Resolution T2* Mapping

Dec 01, 2022

Abstract: Purpose: This work aims to develop a novel distortion-free 3D-EPI acquisition and image reconstruction technique for fast and robust, high-resolution, whole-brain imaging as well as quantitative T2* mapping. Methods: The 3D-Blip-Up and -Down Acquisition (3D-BUDA) sequence is designed for both single- and multi-echo 3D GRE-EPI imaging using multiple shots with blip-up and -down readouts to encode B0 field map information. Complementary k-space coverage is achieved using controlled aliasing in parallel imaging (CAIPI) sampling across the shots. For image reconstruction, an iterative hard-thresholding algorithm is employed to minimize a cost function that combines field-map-informed parallel imaging with a structured low-rank constraint for multi-shot 3D-BUDA data. Extending 3D-BUDA to multi-echo imaging permits T2* mapping. For this, we propose constructing a joint Hankel matrix along both echo and shot dimensions to improve the reconstruction. Results: Experimental results on in vivo multi-echo data demonstrate that, by performing joint reconstruction along both echo and shot dimensions, reconstruction accuracy is improved compared to standard 3D-BUDA reconstruction. CAIPI sampling is further shown to enhance the image quality. For T2* mapping, T2* values from 3D-Joint-CAIPI-BUDA and reference multi-echo GRE are within limits of agreement as quantified by Bland-Altman analysis. Conclusions: The proposed technique enables rapid 3D distortion-free high-resolution imaging and T2* mapping. Specifically, 3D-BUDA enables 1-mm isotropic whole-brain imaging in 22 s at 3 T and 9 s on a 7 T scanner. The combination of multi-echo 3D-BUDA with CAIPI acquisition and joint reconstruction enables distortion-free whole-brain T2* mapping in 47 s at 1.1 × 1.1 × 1.0 mm³ resolution.
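The abstract does not state how T2* is estimated from the multi-echo images. A common choice, assumed here only for illustration, is a mono-exponential decay model S(TE) = S0 · exp(−TE / T2*) fitted per voxel by linear least squares on the log signal. The function name `fit_t2star` and the echo times in the example are hypothetical.

```python
import math

def fit_t2star(echo_times_ms, signals):
    """Log-linear least-squares fit of S(TE) = S0 * exp(-TE / T2*).

    Returns (t2star_ms, s0). Assumes strictly positive, decaying signals.
    """
    ys = [math.log(s) for s in signals]
    n = len(ys)
    mean_t = sum(echo_times_ms) / n
    mean_y = sum(ys) / n
    sxx = sum((t - mean_t) ** 2 for t in echo_times_ms)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(echo_times_ms, ys))
    slope = sxy / sxx                    # equals -1 / T2*
    intercept = mean_y - slope * mean_t  # equals log(S0)
    return -1.0 / slope, math.exp(intercept)

if __name__ == "__main__":
    tes = [5.0, 15.0, 25.0, 35.0]                      # ms, made-up echo times
    voxel = [100.0 * math.exp(-te / 30.0) for te in tes]
    print(fit_t2star(tes, voxel))
```

The log-linear fit is fast but weights noisy late echoes heavily; a nonlinear fit is often preferred when the signal-to-noise ratio is low.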
