Performance of Medical Image Fusion in High-level Analysis Tasks: A Mutual Enhancement Framework for Unaligned PAT and MRI Image Fusion

Jul 04, 2024

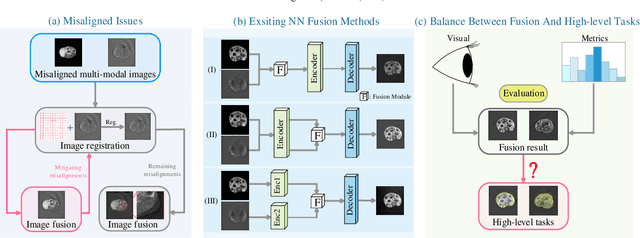

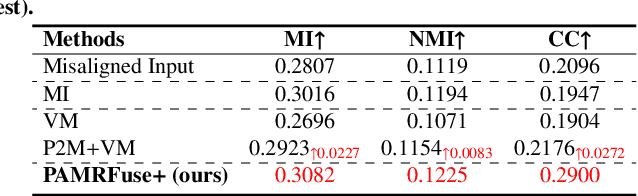

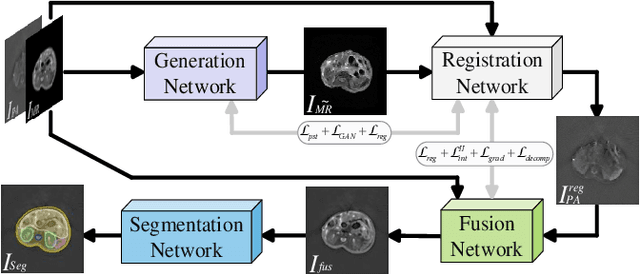

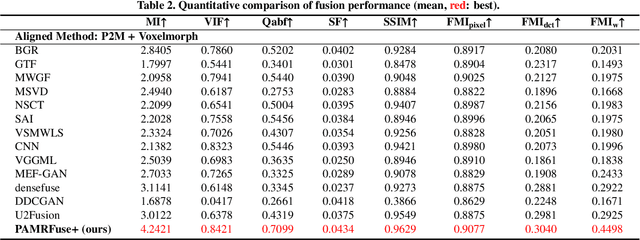

Photoacoustic tomography (PAT) offers optical contrast, whereas magnetic resonance imaging (MRI) excels at imaging soft tissue and organ anatomy. Because the two modalities are complementary, fusing PAT with MRI has promising applications. Existing image fusion methods have made considerable progress on pre-registered images, yet spatial deformations are difficult to avoid in medical imaging scenarios. More importantly, current algorithms focus on visual quality and statistical metrics, and thus overlook the requirements of high-level tasks. To address these challenges, we propose an unsupervised fusion model, termed PAMRFuse+, which integrates image generation and registration. Specifically, a cross-modal style transfer network is introduced to reduce cross-modal registration to single-modal registration. Subsequently, a multi-level registration network is employed to predict displacement vector fields. Furthermore, a dual-branch feature decomposition fusion network is proposed to address the challenges of cross-modal feature modeling and decomposition by integrating modality-specific and modality-shared features. PAMRFuse+ achieves satisfactory results in registering and fusing unaligned PAT-MRI datasets. Moreover, for the first time, we evaluate the performance of medical image fusion on contour segmentation and multi-organ instance segmentation. Extensive experiments demonstrate the advantages of PAMRFuse+ in improving the performance of medical image analysis tasks.
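To make the registration step more concrete, the following minimal sketch shows how a dense displacement vector field, once predicted, could be applied to warp a 2D image. It is not the PAMRFuse+ registration network and is not taken from the authors' code. The function name `warp_with_dvf`, the `(2, H, W)` field layout, the backward-warping convention, and the clamped border handling are all assumptions chosen for illustration.

```python
import numpy as np


def warp_with_dvf(image, dvf):
    """Warp a 2D image with a dense displacement vector field (DVF).

    Hypothetical helper for illustration only.
    image: array of shape (H, W).
    dvf:   array of shape (2, H, W); dvf[0] is the row (y) displacement and
           dvf[1] is the column (x) displacement, both in pixels.
    Backward warping is assumed: output[y, x] = image[y + dy, x + dx],
    sampled with bilinear interpolation and coordinates clamped to the border.
    """
    h, w = image.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")

    # Sampling locations in the source image, clamped to valid coordinates.
    sy = np.clip(ys + dvf[0], 0, h - 1)
    sx = np.clip(xs + dvf[1], 0, w - 1)

    # Integer corners surrounding each sampling location.
    y0 = np.floor(sy).astype(int)
    x0 = np.floor(sx).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)

    # Fractional offsets act as the bilinear interpolation weights.
    wy = sy - y0
    wx = sx - x0

    top = (1 - wx) * image[y0, x0] + wx * image[y0, x1]
    bottom = (1 - wx) * image[y1, x0] + wx * image[y1, x1]
    return (1 - wy) * top + wy * bottom


# Usage: a zero field leaves the image unchanged; a uniform x-displacement
# of one pixel samples each output pixel from its right-hand neighbour.
moving = np.arange(16, dtype=float).reshape(4, 4)
zero_field = np.zeros((2, 4, 4))
shift_field = np.zeros((2, 4, 4))
shift_field[1] = 1.0
unchanged = warp_with_dvf(moving, zero_field)
shifted = warp_with_dvf(moving, shift_field)
```

In a learned registration pipeline the field would come from a network and the warp would need to be differentiable; this NumPy version only illustrates the resampling arithmetic.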
