Wenhao Hu

Hand3R: Online 4D Hand-Scene Reconstruction in the Wild

Feb 03, 2026

Abstract: For Embodied AI, jointly reconstructing dynamic hands and the dense scene context is crucial for understanding physical interaction. However, most existing methods recover isolated hands in local coordinates, overlooking the surrounding 3D environment. To address this, we present Hand3R, the first online framework for joint 4D hand-scene reconstruction from monocular video. Hand3R synergizes a pre-trained hand expert with a 4D scene foundation model via a scene-aware visual prompting mechanism. By injecting high-fidelity hand priors into a persistent scene memory, our approach enables simultaneous reconstruction of accurate hand meshes and dense metric-scale scene geometry in a single forward pass. Experiments demonstrate that Hand3R bypasses the reliance on offline optimization and delivers competitive performance in both local hand reconstruction and global positioning.

GO-MLVTON: Garment Occlusion-Aware Multi-Layer Virtual Try-On with Diffusion Models

Jan 20, 2026

Abstract: Existing image-based virtual try-on (VTON) methods primarily focus on single-layer or multi-garment VTON, neglecting multi-layer VTON (ML-VTON), which involves dressing multiple layers of garments onto the human body with realistic deformation and layering to generate visually plausible outcomes. The main challenge lies in accurately modeling occlusion relationships between inner and outer garments to reduce interference from redundant inner garment features. To address this, we propose GO-MLVTON, the first multi-layer VTON method, introducing the Garment Occlusion Learning module to learn occlusion relationships and the Stable Diffusion-based Garment Morphing & Fitting module to deform and fit garments onto the human body, producing high-quality multi-layer try-on results. Additionally, we present the MLG dataset for this task and propose a new metric named Layered Appearance Coherence Difference (LACD) for evaluation. Extensive experiments demonstrate the state-of-the-art performance of GO-MLVTON. Project page: https://upyuyang.github.io/go-mlvton/.

Flexible-Duplex Cell-Free Architecture for Secure Uplink Communications in Low-Altitude Wireless Networks

Jan 07, 2026

Abstract: Low-altitude wireless networks (LAWNs) are expected to play a central role in future 6G infrastructures, yet uplink transmissions of uncrewed aerial vehicles (UAVs) remain vulnerable to eavesdropping due to their limited transmit power, constrained antenna resources, and highly exposed air-ground propagation conditions. To address this fundamental bottleneck, we propose a flexible-duplex cell-free (CF) architecture in which each distributed access point (AP) can dynamically operate either as a receive AP for UAV uplink collection or as a transmit AP that generates cooperative artificial noise (AN) for secrecy enhancement. Such AP-level duplex flexibility introduces an additional spatial degree of freedom that enables distributed and adaptive protection against wiretapping in LAWNs. Building upon this architecture, we formulate a max-min secrecy-rate problem that jointly optimizes AP mode selection, receive combining, and AN covariance design. This tightly coupled and nonconvex optimization is tackled by first deriving the optimal receive combiners in closed form, followed by developing a penalty dual decomposition (PDD) algorithm with guaranteed convergence to a stationary solution. To further reduce computational burden, we propose a low-complexity sequential scheme that determines AP modes via a heuristic metric and then updates the AN covariance matrices through closed-form iterations embedded in the PDD framework. Simulation results show that the proposed flexible-duplex architecture yields substantial secrecy-rate gains over CF systems with fixed AP roles. The joint optimization method attains the highest secrecy performance, while the low-complexity approach achieves over 90% of the optimal performance with an order-of-magnitude lower computational complexity, offering a practical solution for secure uplink communications in LAWNs.
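For orientation, a max-min secrecy-rate problem of the kind described above is commonly written in the following generic form. This is only a sketch: the symbols (K UAVs, legitimate and eavesdropper SINRs \gamma_k and \gamma_{e,k}, binary AP mode a_m, receive combiners w_k, AN covariances Q_m, per-AP power budget P_max) and the power constraint are illustrative assumptions, not the paper's own notation or exact constraint set.

```latex
% Per-UAV secrecy rate: legitimate rate minus eavesdropper rate, floored at zero
R_k^{\mathrm{sec}} = \Big[ \log_2\!\big(1 + \gamma_k\big) - \log_2\!\big(1 + \gamma_{e,k}\big) \Big]^{+},
\qquad [x]^{+} = \max(x, 0)

% Max-min fairness over the K UAVs (assumed constraint set)
\max_{\{a_m\},\, \{\mathbf{w}_k\},\, \{\mathbf{Q}_m\}} \;\; \min_{k \in \{1,\dots,K\}} \; R_k^{\mathrm{sec}}
\quad \text{s.t.} \quad
a_m \in \{0, 1\}, \;\;
\mathbf{Q}_m \succeq \mathbf{0}, \;\;
\operatorname{tr}(\mathbf{Q}_m) \le a_m P_{\max} \;\; \forall m
```

Here a_m = 1 would mark AP m as an AN transmitter and a_m = 0 as a receiver, so the binary mode variables couple with the continuous ones, which is one reason such problems are nonconvex.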

RecurGS: Interactive Scene Modeling via Discrete-State Recurrent Gaussian Fusion

Dec 20, 2025

Abstract: Recent advances in 3D scene representations have enabled high-fidelity novel view synthesis, yet adapting to discrete scene changes and constructing interactive 3D environments remain open challenges in vision and robotics. Existing approaches focus solely on updating a single scene without supporting novel-state synthesis. Others rely on diffusion-based object-background decoupling that works on one state at a time and cannot fuse information across multiple observations. To address these limitations, we introduce RecurGS, a recurrent fusion framework that incrementally integrates discrete Gaussian scene states into a single evolving representation capable of interaction. RecurGS detects object-level changes across consecutive states, aligns their geometric motion using semantic correspondence and Lie-algebra based SE(3) refinement, and performs recurrent updates that preserve historical structures through replay supervision. A voxelized, visibility-aware fusion module selectively incorporates newly observed regions while keeping stable areas fixed, mitigating catastrophic forgetting and enabling efficient long-horizon updates. RecurGS supports object-level manipulation, synthesizes novel scene states without requiring additional scans, and maintains photorealistic fidelity across evolving environments. Extensive experiments across synthetic and real-world datasets demonstrate that our framework delivers high-quality reconstructions with substantially improved update efficiency, providing a scalable step toward continuously interactive Gaussian worlds.

IGFuse: Interactive 3D Gaussian Scene Reconstruction via Multi-Scans Fusion

Aug 18, 2025

Abstract: Reconstructing complete and interactive 3D scenes remains a fundamental challenge in computer vision and robotics, particularly due to persistent object occlusions and limited sensor coverage. Multiview observations from a single scene scan often fail to capture the full structural details. Existing approaches typically rely on multi-stage pipelines, such as segmentation, background completion, and inpainting, or require per-object dense scanning, both of which are error-prone and not easily scalable. We propose IGFuse, a novel framework that reconstructs an interactive Gaussian scene by fusing observations from multiple scans, where natural object rearrangement between captures reveals previously occluded regions. Our method constructs segmentation-aware Gaussian fields and enforces bi-directional photometric and semantic consistency across scans. To handle spatial misalignments, we introduce a pseudo-intermediate scene state for unified alignment, alongside collaborative co-pruning strategies to refine geometry. IGFuse enables high-fidelity rendering and object-level scene manipulation without dense observations or complex pipelines. Extensive experiments validate the framework's strong generalization to novel scene configurations, demonstrating its effectiveness for real-world 3D reconstruction and real-to-simulation transfer. Our project page is available online.

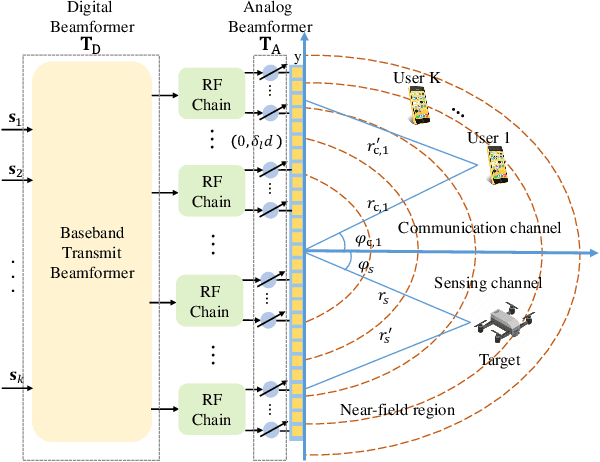

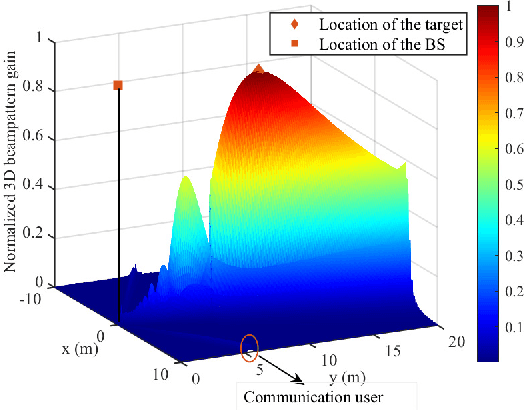

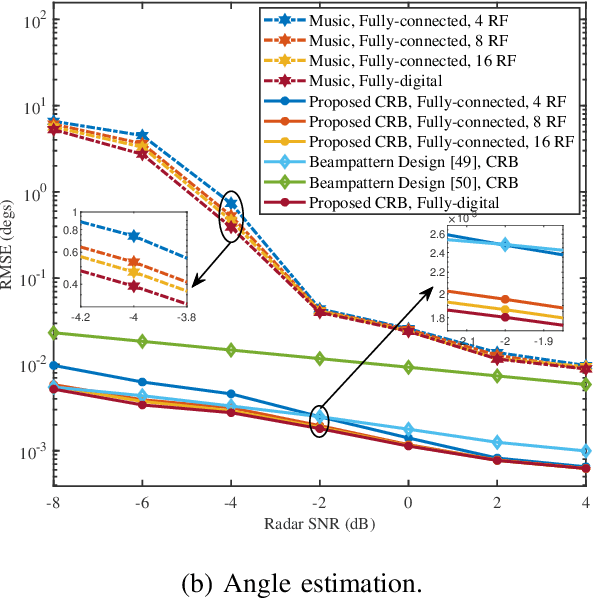

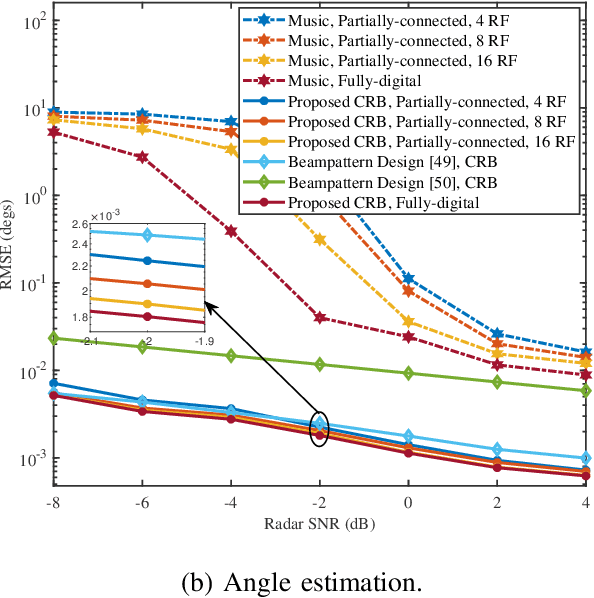

Energy-Efficient Hybrid Beamfocusing for Near-Field Integrated Sensing and Communication

Aug 06, 2025

Abstract: Integrated sensing and communication (ISAC) is a pivotal component of sixth-generation (6G) wireless networks, leveraging high-frequency bands and massive multiple-input multiple-output (M-MIMO) to deliver both high-capacity communication and high-precision sensing. However, these technological advancements lead to significant near-field effects, while the implementation of M-MIMO is associated with considerable hardware costs and escalated power consumption. In this context, hybrid architecture designs emerge as both hardware-efficient and energy-efficient solutions. Motivated by these considerations, we investigate the design of energy-efficient hybrid beamfocusing for near-field ISAC under two distinct target scenarios, i.e., a point target and an extended target. Specifically, we first derive the closed-form Cramér-Rao bound (CRB) of joint angle-and-distance estimation for the point target and the Bayesian CRB (BCRB) of the target response matrix for the extended target. Building on these derived results, we minimize the CRB/BCRB by optimizing the transmit beamfocusing, while ensuring the energy efficiency (EE) of the system and the quality-of-service (QoS) for communication users. To address the resulting nonconvex problems, we first utilize a penalty-based successive convex approximation technique with a fully-digital beamformer to obtain a suboptimal solution. Then, we propose an efficient alternating optimization algorithm to design the analog-and-digital beamformer. Simulation results indicate that joint distance-and-angle estimation is feasible in the near-field region. However, the adopted hybrid architectures inevitably degrade the accuracy of distance estimation, compared with their fully-digital counterparts. Furthermore, enhancements in system EE would compromise the accuracy of target estimation, unveiling a nontrivial tradeoff.

DSG-World: Learning a 3D Gaussian World Model from Dual State Videos

Jun 05, 2025

Abstract: Building an efficient and physically consistent world model from limited observations is a long-standing challenge in vision and robotics. Many existing world modeling pipelines are based on implicit generative models, which are hard to train and often lack 3D or physical consistency. On the other hand, explicit 3D methods built from a single state often require multi-stage processing (such as segmentation, background completion, and inpainting) due to occlusions. To address this, we leverage two perturbed observations of the same scene under different object configurations. These dual states offer complementary visibility, alleviating occlusion issues during state transitions and enabling more stable and complete reconstruction. In this paper, we present DSG-World, a novel end-to-end framework that explicitly constructs a 3D Gaussian World model from Dual State observations. Our approach builds dual segmentation-aware Gaussian fields and enforces bidirectional photometric and semantic consistency. We further introduce a pseudo intermediate state for symmetric alignment and design collaborative co-pruning strategies to refine geometric completeness. DSG-World enables efficient real-to-simulation transfer purely in the explicit Gaussian representation space, supporting high-fidelity rendering and object-level scene manipulation without relying on dense observations or multi-stage pipelines. Extensive experiments demonstrate strong generalization to novel views and scene states, highlighting the effectiveness of our approach for real-world 3D reconstruction and simulation.

ICE-Pruning: An Iterative Cost-Efficient Pruning Pipeline for Deep Neural Networks

May 12, 2025

Abstract: Pruning is a widely used method for compressing Deep Neural Networks (DNNs), where less relevant parameters are removed from a DNN model to reduce its size. However, removing parameters reduces model accuracy, so pruning is typically combined with fine-tuning, and sometimes other operations such as rewinding weights, to recover accuracy. A common approach is to repeatedly prune and then fine-tune, with increasing amounts of model parameters being removed in each step. While straightforward to implement, pruning pipelines that follow this approach are computationally expensive due to the need for repeated fine-tuning. In this paper we propose ICE-Pruning, an iterative pruning pipeline for DNNs that significantly decreases the time required for pruning by reducing the overall cost of fine-tuning, while maintaining a similar accuracy to existing pruning pipelines. ICE-Pruning is based on three main components: i) an automatic mechanism to determine after which pruning steps fine-tuning should be performed; ii) a freezing strategy for faster fine-tuning in each pruning step; and iii) a custom pruning-aware learning rate scheduler to further improve the accuracy of each pruning step and reduce the overall time consumption. We also propose an efficient auto-tuning stage for the hyperparameters (e.g., freezing percentage) introduced by the three components. We evaluate ICE-Pruning on several DNN models and datasets, showing that it can accelerate pruning by up to 9.61x. Code is available at https://github.com/gicLAB/ICE-Pruning
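The general idea of pruning iteratively while fine-tuning only after some steps can be sketched as below. This is a minimal illustration, not the ICE-Pruning implementation: the accuracy-drop `tolerance` trigger, the flat list of weights, and the `evaluate` and `fine_tune` callables are all hypothetical stand-ins, since the abstract does not specify the actual criterion.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    # Indices ordered by absolute value; already-zeroed weights sort first,
    # so calling this again with a higher sparsity is cumulative.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def iterative_prune(weights, sparsities, evaluate, fine_tune, tolerance):
    """Prune step by step; fine-tune only when the score drop exceeds `tolerance`.

    `evaluate` and `fine_tune` are caller-supplied placeholders (assumptions),
    and the tolerance rule is a hypothetical trigger chosen for illustration.
    """
    baseline = evaluate(weights)
    fine_tuned_steps = []
    for step, sparsity in enumerate(sparsities):
        weights = magnitude_prune(weights, sparsity)
        if baseline - evaluate(weights) > tolerance:
            weights = fine_tune(weights)
            fine_tuned_steps.append(step)
    return weights, fine_tuned_steps


# Toy usage: the "score" is just the total weight magnitude, and
# fine-tuning is a no-op, so only the control flow is exercised.
toy_weights = [0.5, -0.1, 0.3, -0.8, 0.05, 0.2]
pruned, steps = iterative_prune(
    toy_weights,
    sparsities=[0.2, 0.5],
    evaluate=lambda w: sum(abs(x) for x in w),
    fine_tune=lambda w: w,
    tolerance=0.1,
)
```

In this toy run the first step removes only the smallest weight and stays within tolerance, so fine-tuning is skipped; the second step crosses the tolerance and triggers it. Skipping fine-tuning on the cheap steps is where the time savings in such a scheme would come from.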

DynaCode: A Dynamic Complexity-Aware Code Benchmark for Evaluating Large Language Models in Code Generation

Mar 13, 2025

Abstract: The rapid advancement of large language models (LLMs) has significantly improved their performance in code generation tasks. However, existing code benchmarks remain static, consisting of fixed datasets with predefined problems. This makes them vulnerable to memorization during training, where LLMs recall specific test cases instead of generalizing to new problems, leading to data contamination and unreliable evaluation results. To address these issues, we introduce DynaCode, a dynamic, complexity-aware benchmark that overcomes the limitations of static datasets. DynaCode evaluates LLMs systematically using a complexity-aware metric, incorporating both code complexity and call-graph structures. DynaCode achieves large-scale diversity, generating up to 189 million unique nested code problems across four distinct levels of code complexity, referred to as units, and 16 types of call graphs. Results on 12 recent LLMs show an average performance drop of 16.8% to 45.7% compared to MBPP+, a static code generation benchmark, with performance progressively decreasing as complexity increases. This demonstrates DynaCode's ability to effectively differentiate LLMs. Additionally, by leveraging call graphs, we gain insights into LLM behavior, particularly their preference for handling subfunction interactions within nested code.

Pointmap Association and Piecewise-Plane Constraint for Consistent and Compact 3D Gaussian Segmentation Field

Feb 22, 2025

Abstract: Achieving a consistent and compact 3D segmentation field is crucial for maintaining semantic coherence across views and accurately representing scene structures. Previous 3D scene segmentation methods rely on video segmentation models to address inconsistencies across views, but the absence of spatial information often leads to object misassociation when objects temporarily disappear and reappear. Furthermore, in the process of 3D scene reconstruction, segmentation and optimization are often treated as separate tasks. As a result, optimization typically lacks awareness of semantic category information, which can result in floaters with ambiguous segmentation. To address these challenges, we introduce CCGS, a method designed to achieve both view-consistent 2D segmentation and a compact 3D Gaussian segmentation field. CCGS incorporates pointmap association and a piecewise-plane constraint. First, we establish pixel correspondence between adjacent images by minimizing the Euclidean distance between their pointmaps. We then redefine object mask overlap accordingly. The Hungarian algorithm is employed to optimize mask association by minimizing the total matching cost, while allowing for partial matches. To further enhance compactness, the piecewise-plane constraint restricts point displacement within local planes during optimization, thereby preserving structural integrity. Experimental results on ScanNet and Replica datasets demonstrate that CCGS outperforms existing methods in both 2D panoptic segmentation and 3D Gaussian segmentation.
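The mask-association step (minimum total matching cost, with partial matches allowed) can be illustrated with a small sketch. Note the assumptions: a brute-force search over assignments stands in for the Hungarian algorithm (same optimum, only practical for a handful of masks), the cost is taken to be something like one minus mask overlap, and the `max_cost` cutoff is a hypothetical way to permit partial matches. None of this is taken from the CCGS code.

```python
from itertools import permutations


def associate_masks(cost, max_cost):
    """Match masks of frame A (rows) to masks of frame B (columns).

    Finds the one-to-one assignment with minimum total cost by exhaustive
    search (a stand-in for the Hungarian algorithm), then drops pairs whose
    cost exceeds `max_cost`, so some masks may stay unmatched.
    """
    n_rows, n_cols = len(cost), len(cost[0])
    transposed = n_rows > n_cols
    if transposed:
        # Search always assigns every row, so make rows the shorter side.
        cost = [list(col) for col in zip(*cost)]
        n_rows, n_cols = n_cols, n_rows

    best_total, best_cols = float("inf"), None
    for cols in permutations(range(n_cols), n_rows):
        total = sum(cost[r][c] for r, c in enumerate(cols))
        if total < best_total:
            best_total, best_cols = total, cols

    pairs = [(r, c) for r, c in enumerate(best_cols) if cost[r][c] <= max_cost]
    if transposed:
        pairs = sorted((c, r) for r, c in pairs)
    return pairs


# Two masks in frame A, three in frame B; low cost means high overlap.
full = associate_masks([[0.1, 0.9, 0.8],
                        [0.7, 0.2, 0.9]], max_cost=0.5)

# The second mask in frame A has no good partner, so it is left unmatched.
partial = associate_masks([[0.1, 0.9],
                           [0.8, 0.95]], max_cost=0.5)
```

Leaving a mask unmatched rather than forcing a poor pairing is what lets an object that disappears in one frame receive a fresh identity, or be re-associated later, instead of inheriting the wrong label.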
