Wang Yao

PIONM: A Generalized Approach to Solving Density-Constrained Mean-Field Games Equilibrium under Modified Boundary Conditions

Apr 04, 2025

Abstract:Neural network-based methods are effective for solving equilibria in Mean-Field Games (MFGs), particularly in high-dimensional settings. However, their applicability is limited because solving the coupled partial differential equations (PDEs) in MFGs is computationally expensive. Additionally, modifying boundary conditions, such as the initial state distribution or terminal value function, necessitates extensive retraining, reducing scalability. To address these challenges, we propose a generalized framework, PIONM (Physics-Informed Neural Operator NF-MKV Net), which leverages physics-informed neural operators to solve MFGs equations. PIONM utilizes neural operators to compute MFGs equilibria for arbitrary boundary conditions. The method encodes boundary conditions as input features and trains the model to align them with density evolution, modeled using discrete-time normalizing flows. Once trained, the algorithm efficiently computes the density distribution at any time step for modified boundary conditions, enabling efficient adaptation to different boundary conditions in MFGs equilibria. Unlike traditional MFGs methods constrained by fixed coefficients, PIONM efficiently computes equilibria under varying boundary conditions, including obstacles, diffusion coefficients, initial densities, and terminal functions. PIONM can adapt to modified conditions while preserving density distribution constraints, demonstrating superior scalability and generalization capabilities compared to existing methods.

NF-MKV Net: A Constraint-Preserving Neural Network Approach to Solving Mean-Field Games Equilibrium

Jan 29, 2025

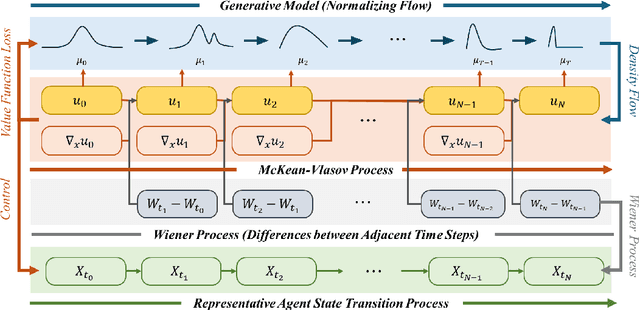

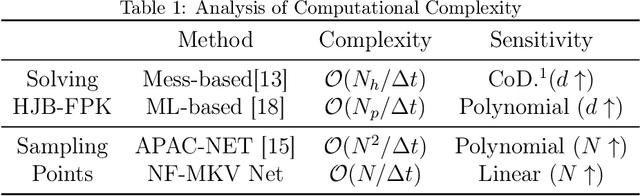

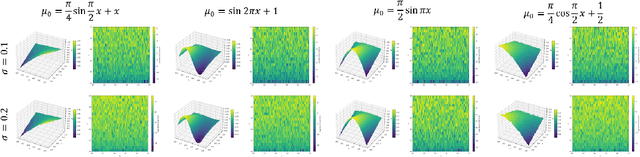

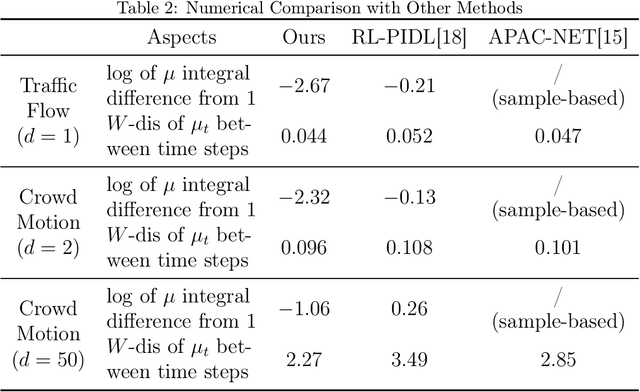

Abstract:Neural network-based methods for solving Mean-Field Games (MFGs) equilibria have garnered significant attention for their effectiveness in high-dimensional problems. However, many algorithms struggle with ensuring that the evolution of the density distribution adheres to the required mathematical constraints. This paper investigates a neural network approach to solving MFGs equilibria through a stochastic process perspective. It integrates process-regularized Normalizing Flow (NF) frameworks with state-policy-connected time-series neural networks to address McKean-Vlasov-type Forward-Backward Stochastic Differential Equation (MKV FBSDE) fixed-point problems, equivalent to MFGs equilibria.

ChildDiffusion: Unlocking the Potential of Generative AI and Controllable Augmentations for Child Facial Data using Stable Diffusion and Large Language Models

Jun 17, 2024

Abstract:In this research work, we propose a high-level ChildDiffusion framework capable of generating photorealistic child facial samples and embedding several intelligent augmentations on child facial data using short text prompts, detailed textual guidance from LLMs, and image-to-image transformation with text-guided control conditioning, thus providing an opportunity to curate fully synthetic large-scale child datasets. The framework is validated by rendering high-quality child faces representing ethnicity data, micro expressions, face pose variations, eye blinking effects, facial accessories, different hair colours and styles, aging, and multiple child subjects of different genders in a single frame. Addressing privacy concerns regarding child data acquisition requires a comprehensive approach that involves legal, ethical, and technological considerations. Keeping this in view, the framework can be adapted to synthesise child facial data that can be effectively used for numerous downstream machine learning tasks. The proposed method circumvents common issues encountered in generative AI tools, such as temporal inconsistency and limited control over the rendered outputs. As an exemplary use case, we have open-sourced child ethnicity data consisting of 2.5k child facial samples in five classes (African, Asian, White, South Asian/Indian, and Hispanic), generated by deploying the model in the production inference phase. The rendered data undergoes rigorous qualitative and quantitative tests to cross-validate its efficacy, and is further used to fine-tune a YOLO architecture for detecting and classifying child ethnicity as an exemplary downstream machine learning task.

Synthetic Face Ageing: Evaluation, Analysis and Facilitation of Age-Robust Facial Recognition Algorithms

Jun 10, 2024

Abstract:The ability to accurately recognize an individual's face despite the effects of human aging is of significant importance to various private and government sectors, such as customs and public security bureaus, passport offices, and national database systems. Developing a robust age-invariant face recognition system is therefore crucial to addressing the challenges posed by ageing and maintaining the reliability and accuracy of facial recognition technology. In this research work, the focus is to explore the feasibility of utilizing synthetic ageing data to improve the robustness of face recognition models, which can eventually help in recognizing people across broader age intervals. To achieve this, we first design a set of experiments to evaluate state-of-the-art synthetic ageing methods. In the next stage, we explore the effect of age intervals on a current deep learning-based face recognition algorithm by using synthetic ageing data as well as real ageing data to perform rigorous training and validation. Moreover, these synthetic age data have been used in facilitating face recognition algorithms. Experimental results show that the recognition rate of the model trained on synthetic ageing images is 3.33% higher than that of the baseline model when tested on images with an age gap of 40 years, which quantifies the potential of synthetic age data to enhance the performance of age-invariant face recognition systems.

Derm-T2IM: Harnessing Synthetic Skin Lesion Data via Stable Diffusion Models for Enhanced Skin Disease Classification using ViT and CNN

Jan 10, 2024

Abstract:This study explores the utilization of Dermatoscopic synthetic data generated through stable diffusion models as a strategy for enhancing the robustness of machine learning model training. Synthetic data generation plays a pivotal role in mitigating challenges associated with limited labeled datasets, thereby facilitating more effective model training. In this context, we aim to incorporate enhanced data transformation techniques by extending the recent success of few-shot learning and a small amount of data representation in text-to-image latent diffusion models. The optimally tuned model is further used for rendering high-quality skin lesion synthetic data with diverse and realistic characteristics, providing a valuable supplement and diversity to the existing training data. We investigate the impact of incorporating newly generated synthetic data into the training pipeline of state-of-the-art machine learning models, assessing its effectiveness in enhancing model performance and generalization to unseen real-world data. Our experimental results demonstrate that the synthetic data generated through stable diffusion models helps improve the robustness and adaptability of end-to-end CNN and vision transformer models on two different real-world skin lesion datasets.

Synthetic Speaking Children -- Why We Need Them and How to Make Them

Nov 08, 2023

Abstract:Contemporary Human Computer Interaction (HCI) research relies primarily on neural network models for machine vision and speech understanding of a system user. Such models require extensively annotated training datasets for optimal performance and when building interfaces for users from a vulnerable population such as young children, GDPR introduces significant complexities in data collection, management, and processing. Motivated by the training needs of an Edge AI smart toy platform this research explores the latest advances in generative neural technologies and provides a working proof of concept of a controllable data generation pipeline for speech driven facial training data at scale. In this context, we demonstrate how StyleGAN2 can be finetuned to create a gender balanced dataset of children's faces. This dataset includes a variety of controllable factors such as facial expressions, age variations, facial poses, and even speech-driven animations with realistic lip synchronization. By combining generative text to speech models for child voice synthesis and a 3D landmark based talking heads pipeline, we can generate highly realistic, entirely synthetic, talking child video clips. These video clips can provide valuable, and controllable, synthetic training data for neural network models, bridging the gap when real data is scarce or restricted due to privacy regulations.

Will your Doorbell Camera still recognize you as you grow old

Aug 08, 2023

Abstract:Robust authentication for low-power consumer devices such as doorbell cameras poses a valuable and unique challenge. This work explores the effect of age and aging on the performance of facial authentication methods. Two public age datasets, AgeDB and Morph-II have been used as baselines in this work. A photo-realistic age transformation method has been employed to augment a set of high-quality facial images with various age effects. Then the effect of these synthetic aging data on the high-performance deep-learning-based face recognition model is quantified by using various metrics including Receiver Operating Characteristic (ROC) curves and match score distributions. Experimental results demonstrate that long-term age effects are still a significant challenge for the state-of-the-art facial authentication method.

A Comparative Study of Image-to-Image Translation Using GANs for Synthetic Child Race Data

Aug 08, 2023

Abstract:The lack of ethnic diversity in data has been a limiting factor of face recognition techniques in the literature. This is particularly the case for children, where data samples are scarce, which presents a challenge when seeking to adapt machine vision algorithms trained on adult data to work on children. This work proposes the utilization of image-to-image transformation to synthesize data of different races and thus adjust the ethnicity of children's face data. We consider ethnicity as a style and compare three different image-to-image neural network-based methods, specifically pix2pix, CycleGAN, and CUT networks, to implement conversion between Caucasian child data and Asian child data. Experimental validation results on synthetic data demonstrate the feasibility of using image-to-image transformation methods to generate various synthetic child data samples with broader ethnic diversity.

ChildGAN: Large Scale Synthetic Child Facial Data Using Domain Adaptation in StyleGAN

Jul 25, 2023

Abstract:In this research work, we proposed a novel ChildGAN, a pair of GAN networks for generating synthetic boys and girls facial data derived from StyleGAN2. ChildGAN is built by performing smooth domain transfer using transfer learning. It provides photo-realistic, high-quality data samples. A large-scale dataset is rendered with a variety of smart facial transformations: facial expressions, age progression, eye blink effects, head pose, skin and hair color variations, and variable lighting conditions. The dataset comprises more than 300k distinct data samples. Further, the uniqueness and characteristics of the rendered facial features are validated by running different computer vision application tests which include CNN-based child gender classifier, face localization and facial landmarks detection test, identity similarity evaluation using ArcFace, and lastly running eye detection and eye aspect ratio tests. The results demonstrate that synthetic child facial data of high quality offers an alternative to the cost and complexity of collecting a large-scale dataset from real children.

Decisive Data using Multi-Modality Optical Sensors for Advanced Vehicular Systems

Jul 25, 2023

Abstract:Optical sensors have played a pivotal role in acquiring real-world data for critical applications. This data, when integrated with advanced machine learning algorithms, provides meaningful information, thus enhancing human vision. This paper focuses on various optical technologies for the design and development of state-of-the-art out-cabin forward vision systems and in-cabin driver monitoring systems. The optical sensors in focus include Longwave Thermal Imaging (LWIR) cameras, Near Infrared (NIR) cameras, Neuromorphic/event cameras, Visible CMOS cameras, and Depth cameras. Further, the paper discusses different potential applications that can be built on the unique strengths of each of these optical modalities in real-time environments.
