Mehdi Sefidgar Dilmaghani

A Lightweight 3D-CNN for Event-Based Human Action Recognition with Privacy-Preserving Potential

Nov 05, 2025

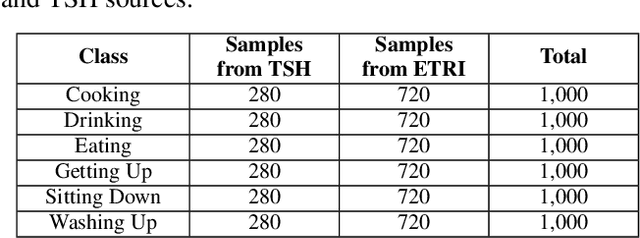

Abstract: This paper presents a lightweight three-dimensional convolutional neural network (3D-CNN) for human activity recognition (HAR) using event-based vision data. Privacy preservation is a key challenge in human monitoring systems, as conventional frame-based cameras capture identifiable personal information. In contrast, event cameras record only changes in pixel intensity, providing an inherently privacy-preserving sensing modality. The proposed network effectively models both spatial and temporal dynamics while maintaining a compact design suitable for edge deployment. To address class imbalance and enhance generalization, focal loss with class reweighting and targeted data augmentation strategies are employed. The model is trained and evaluated on a composite dataset derived from the Toyota Smart Home and ETRI datasets. Experimental results demonstrate an F1-score of 0.9415 and an overall accuracy of 94.17%, outperforming benchmark 3D-CNN architectures such as C3D, ResNet3D, and MC3_18 by up to 3%. These results highlight the potential of event-based deep learning for developing accurate, efficient, and privacy-aware human action recognition systems suitable for real-world edge applications.
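
The abstract does not give the exact loss formulation, so the following is only a minimal sketch of what focal loss with class reweighting could look like for a single sample. The focusing parameter `gamma=2.0`, the inverse-frequency weighting scheme, and both function names are assumptions made for illustration, not details taken from the paper.

```python
import math

def inverse_frequency_weights(class_counts):
    """Assumed reweighting scheme: rarer classes receive larger weights."""
    total = sum(class_counts)
    n_classes = len(class_counts)
    return [total / (n_classes * count) for count in class_counts]

def focal_loss(probs, target, class_weights, gamma=2.0):
    """Class-weighted focal loss for one sample.

    probs: predicted class probabilities (e.g. softmax output)
    target: index of the true class
    gamma: focusing parameter; gamma = 0 reduces to weighted cross-entropy
    """
    p_t = max(probs[target], 1e-12)  # guard against log(0)
    return -class_weights[target] * (1.0 - p_t) ** gamma * math.log(p_t)

# Example: a 90/10 class imbalance and one confidently correct prediction.
weights = inverse_frequency_weights([90, 10])
easy_sample_loss = focal_loss([0.9, 0.1], target=0, class_weights=weights)
```

The `(1 - p_t) ** gamma` factor shrinks the contribution of samples the model already classifies confidently, so training focuses on hard and under-represented examples.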

Autobiasing Event Cameras

Nov 01, 2024

Abstract: This paper presents an autonomous method to address challenges arising from severe lighting conditions in machine vision applications that use event cameras. To manage these conditions, the research explores the built-in ability of these cameras to adjust pixel behavior through parameters known as bias settings. As cars are driven at various times and locations, shifts in lighting conditions are unavoidable. Consequently, this paper uses the neuromorphic YOLO-based face tracking module of a driver monitoring system as the event-based application to study. The proposed method uses numerical metrics to continuously monitor the performance of the event-based application in real time. When the application malfunctions, the system detects this through a drop in the metrics and automatically adjusts the event camera's bias values. The Nelder-Mead simplex algorithm is employed to optimize this adjustment, with fine-tuning continuing until performance returns to a satisfactory level. The advantage of bias optimization lies in its ability to handle conditions such as flickering or darkness without requiring additional hardware or software. To demonstrate the capabilities of the proposed system, it was tested under conditions where detecting human faces with default bias values was impossible. These severe conditions were simulated using dim ambient light and various flickering frequencies. Following the automatic and dynamic process of bias modification, the metrics for face detection significantly improved under all conditions. Autobiasing resulted in an increase in the YOLO confidence indicators by more than 33 percent for object detection and 37 percent for face detection, highlighting the effectiveness of the proposed method.
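
The monitor-then-optimize loop described above can be sketched as follows. This is an illustrative sketch only: `simulated_confidence` is a hypothetical stand-in for the face tracker's confidence metric, the two bias values, the threshold of 0.5, and the peak location are invented, and the optimizer is a plain textbook Nelder-Mead rather than the paper's implementation. Unlike the paper's method, which stops once performance is satisfactory, this sketch simply runs the simplex search to convergence.

```python
def nelder_mead(f, x0, step=5.0, max_iter=500, tol=1e-10):
    """Minimise f from x0 using the standard Nelder-Mead simplex moves."""
    n = len(x0)
    # Initial simplex: x0 plus one vertex offset along each axis.
    simplex = [list(x0)] + [
        [v + (step if j == i else 0.0) for j, v in enumerate(x0)] for i in range(n)
    ]
    values = [f(v) for v in simplex]
    for _ in range(max_iter):
        order = sorted(range(n + 1), key=lambda i: values[i])
        simplex = [simplex[i] for i in order]
        values = [values[i] for i in order]
        if values[-1] - values[0] < tol:
            break
        worst = simplex[-1]
        centroid = [sum(v[j] for v in simplex[:-1]) / n for j in range(n)]

        def along(t):
            # Point on the line through the centroid; t > 0 moves away from the worst vertex.
            return [c + t * (c - w) for c, w in zip(centroid, worst)]

        reflected = along(1.0)
        f_r = f(reflected)
        if f_r < values[0]:  # better than the best vertex: try expanding
            expanded = along(2.0)
            f_e = f(expanded)
            candidate, f_c = (expanded, f_e) if f_e < f_r else (reflected, f_r)
        elif f_r < values[-2]:  # better than the second worst: accept reflection
            candidate, f_c = reflected, f_r
        else:  # contract outside or inside the simplex
            outside = f_r < values[-1]
            candidate = along(0.5 if outside else -0.5)
            f_c = f(candidate)
            if f_c >= (f_r if outside else values[-1]):
                candidate = None
        if candidate is not None:
            simplex[-1], values[-1] = candidate, f_c
        else:  # shrink every vertex toward the best one
            best = simplex[0]
            simplex = [best] + [
                [b + 0.5 * (x - b) for b, x in zip(best, v)] for v in simplex[1:]
            ]
            values = [values[0]] + [f(v) for v in simplex[1:]]
    i_best = min(range(n + 1), key=lambda i: values[i])
    return simplex[i_best], values[i_best]

def simulated_confidence(biases):
    """Hypothetical confidence metric that peaks at biases (30, -10)."""
    b1, b2 = biases
    return 1.0 / (1.0 + (b1 - 30.0) ** 2 / 100.0 + (b2 + 10.0) ** 2 / 50.0)

def autobias(biases, threshold=0.5):
    """Re-tune the biases only when the monitored metric drops below the threshold."""
    if simulated_confidence(biases) >= threshold:
        return list(biases)
    tuned, _ = nelder_mead(lambda b: -simulated_confidence(b), biases)
    return tuned

default_biases = [0.0, 0.0]
tuned_biases = autobias(default_biases)
```

Nelder-Mead suits this setting because it needs only metric evaluations, not gradients, which are unavailable when the objective is measured from a live camera and detector.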

Neuromorphic Seatbelt State Detection for In-Cabin Monitoring with Event Cameras

Aug 15, 2023

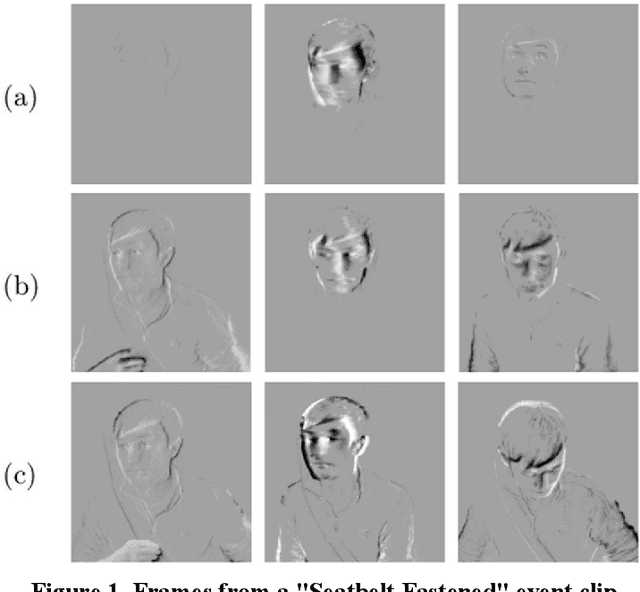

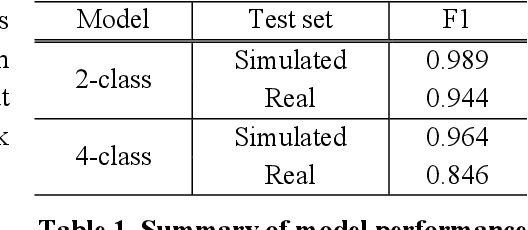

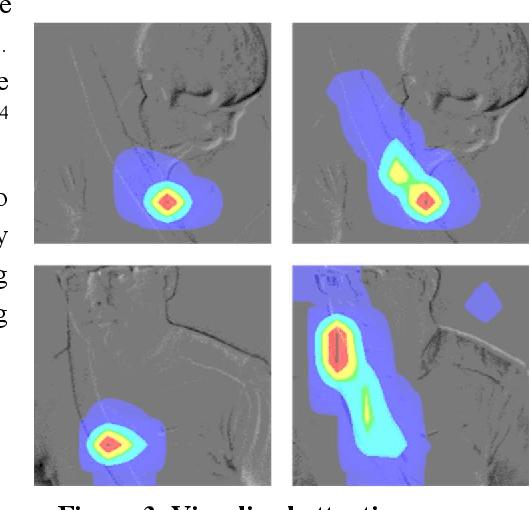

Abstract: Neuromorphic vision sensors, or event cameras, differ from conventional cameras in that they do not capture images at a specified rate. Instead, they asynchronously log local brightness changes at each pixel. As a result, event cameras only record changes in a given scene, and do so with very high temporal resolution, high dynamic range, and low power requirements. Recent research has demonstrated how these characteristics make event cameras extremely practical sensors in driver monitoring systems (DMS), enabling the tracking of high-speed eye motion and blinks. This research provides a proof of concept to expand event-based DMS techniques to include seatbelt state detection. Using an event simulator, a dataset of 108,691 synthetic neuromorphic frames of car occupants was generated from a near-infrared (NIR) dataset, and split into training, validation, and test sets for a seatbelt state detection algorithm based on a recurrent convolutional neural network (CNN). In addition, a smaller set of real event data was collected and reserved for testing. In a binary classification task, the fastened/unfastened frames were identified with F1 scores of 0.989 and 0.944 on the simulated and real test sets, respectively. When the problem was extended to also classify the action of fastening/unfastening the seatbelt, respective F1 scores of 0.964 and 0.846 were achieved.

* 4 pages, 3 figures, IMVIP 2023
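
To illustrate how brightness changes become events, here is a minimal sketch of the usual event-generation model: a pixel emits an event when its log intensity changes by more than a contrast threshold. It is not the simulator used in the paper; the threshold of 0.2, the `eps` offset, and the function name are assumptions, and real simulators additionally interpolate timestamps and can emit several events per pixel.

```python
import math

def frames_to_events(prev_frame, curr_frame, threshold=0.2, eps=1e-3):
    """Emit (x, y, polarity) for pixels whose log intensity changed enough.

    Frames are lists of rows with intensities in [0, 1].
    polarity is +1 for a brightness increase and -1 for a decrease.
    """
    events = []
    for y, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for x, (p, c) in enumerate(zip(prev_row, curr_row)):
            delta = math.log(c + eps) - math.log(p + eps)
            if delta >= threshold:
                events.append((x, y, 1))
            elif delta <= -threshold:
                events.append((x, y, -1))
    return events

# Two 2x2 frames: one pixel brightens, one darkens, two stay unchanged.
previous = [[0.5, 0.5], [0.5, 0.5]]
current = [[1.0, 0.5], [0.5, 0.1]]
events = frames_to_events(previous, current)
```

Pixels that do not change produce no output at all, which is why a static scene generates almost no data.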

Decisive Data using Multi-Modality Optical Sensors for Advanced Vehicular Systems

Jul 25, 2023

Abstract: Optical sensors have played a pivotal role in acquiring real-world data for critical applications. This data, when integrated with advanced machine learning algorithms, provides meaningful information, thus enhancing human vision. This paper focuses on various optical technologies for the design and development of state-of-the-art out-cabin forward vision systems and in-cabin driver monitoring systems. The sensors covered include Longwave Thermal Imaging (LWIR) cameras, Near Infrared (NIR) cameras, neuromorphic/event cameras, visible CMOS cameras, and depth cameras. Further, the paper discusses potential applications that can exploit the unique strengths of each of these optical modalities in real-time environments.

Neuromorphic Sensing for Yawn Detection in Driver Drowsiness

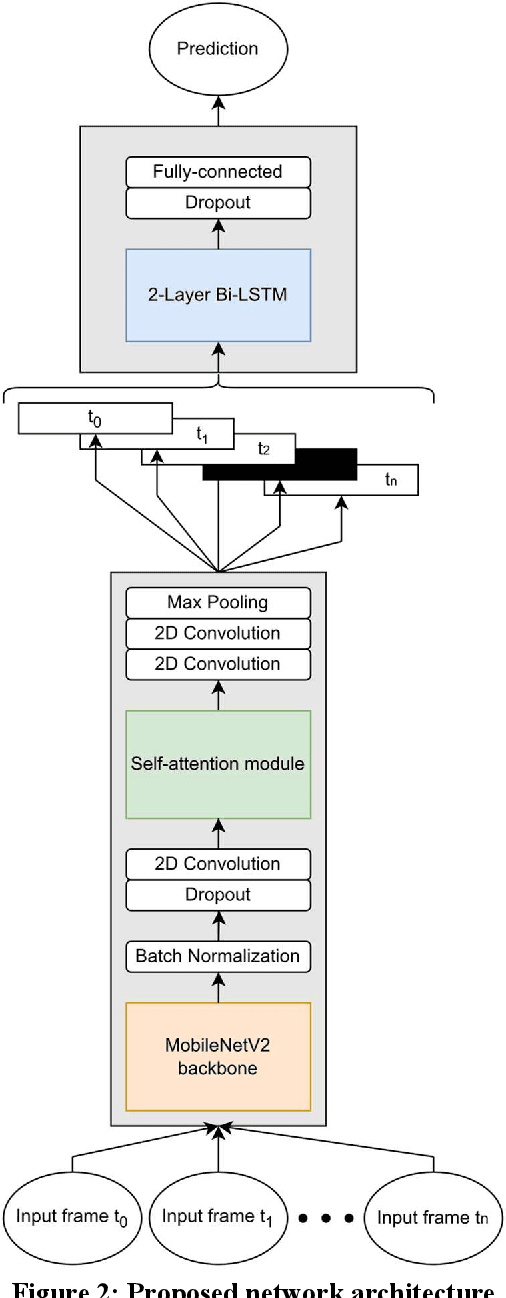

May 04, 2023

Abstract: Driver monitoring systems (DMS) are a key component of vehicular safety and essential for the transition from semiautonomous to fully autonomous driving. A key task for DMS is to ascertain the cognitive state of a driver and to determine their level of tiredness. Neuromorphic vision systems, based on event camera technology, provide advanced sensing of facial characteristics, in particular the behavior of a driver's eyes. This research explores the potential to extend neuromorphic sensing techniques to analyze the entire facial region, detecting yawning behaviors that give a complementary indicator of tiredness. A neuromorphic dataset is constructed from 952 video clips (481 yawns, 471 not-yawns) captured with an RGB color camera, with 37 subjects. A total of 95,200 neuromorphic image frames are generated from this video data using a video-to-event converter. From these data, 21 subjects were selected to provide a training dataset, 8 subjects were used for validation data, and the remaining 8 subjects were reserved for an "unseen" test dataset. An additional 12,300 frames were generated from event simulations of a public dataset to test against other methods. A CNN with self-attention and a recurrent head was designed, trained, and tested with these data. Respective precision and recall scores of 95.9 percent and 94.7 percent were achieved on our test set, and 89.9 percent and 91 percent on the simulated public test set, demonstrating the feasibility of adding yawn detection as a sensing component of a neuromorphic DMS.

Control and Evaluation of Event Cameras Output Sharpness via Bias

Oct 25, 2022

Abstract: Event cameras, also known as neuromorphic sensors, are a relatively new technology with some advantages over RGB cameras. The most important is the way they capture light changes in the environment: each pixel responds independently of the others when it detects a change in light intensity. To give users more freedom in controlling the output of these cameras, such as changing the sensitivity of the sensor to light changes or controlling the number of generated events, camera manufacturers usually provide tools for making sensor-level changes to the camera settings. The contribution of this research is to examine and document the effects of changing the sensor settings on sharpness, used as an indicator of the quality of the generated stream of event data. To obtain a qualitative understanding, this event stream is converted to frames, and the average image gradient magnitude, an index of the number of edges and hence of sharpness, is calculated for these frames. Five different bias settings are explained, and the effect of changing each on the event output is surveyed and analyzed. In addition, the operation of the event camera sensing array is explained with an analogue circuit model, and the functions of the bias foundations are linked with this model.
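
The sharpness measure described in this abstract can be sketched roughly as below. The event tuple layout `(x, y, polarity)`, the per-pixel event counting, and the forward-difference gradient are assumptions made for illustration; the abstract does not state which accumulation scheme or gradient operator the authors used.

```python
import math

def events_to_frame(events, width, height):
    """Accumulate events into a frame by counting events per pixel."""
    frame = [[0.0] * width for _ in range(height)]
    for x, y, _polarity in events:
        frame[y][x] += 1.0
    return frame

def mean_gradient_magnitude(frame):
    """Average forward-difference gradient magnitude, used as a sharpness index."""
    height, width = len(frame), len(frame[0])
    total = 0.0
    for y in range(height - 1):
        for x in range(width - 1):
            gx = frame[y][x + 1] - frame[y][x]
            gy = frame[y + 1][x] - frame[y][x]
            total += math.hypot(gx, gy)
    return total / ((height - 1) * (width - 1))

# A thin vertical edge: one event in column 2 of every row of a 4x4 sensor.
edge_events = [(2, y, 1) for y in range(4)]
sharpness = mean_gradient_magnitude(events_to_frame(edge_events, 4, 4))
```

Comparing this index across recordings made with different bias settings gives a simple way to rank how crisp the resulting event frames are.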
