Joe Lemley

Event-Stream Super Resolution using Sigma-Delta Neural Network

Aug 13, 2024

Abstract:This study introduces a novel approach to enhance the spatial-temporal resolution of time-event pixels based on luminance changes captured by event cameras. These cameras present unique challenges due to their low resolution and the sparse, asynchronous nature of the data they collect. Current event super-resolution algorithms are not fully optimized for the distinct data structure produced by event cameras, resulting in inefficiencies in capturing the full dynamism and detail of visual scenes with improved computational complexity. To bridge this gap, our research proposes a method that integrates binary spikes with Sigma Delta Neural Networks (SDNNs), leveraging a spatiotemporal constraint learning mechanism designed to simultaneously learn the spatial and temporal distributions of the event stream. The proposed network is evaluated using widely recognized benchmark datasets, including N-MNIST, CIFAR10-DVS, ASL-DVS, and Event-NFS. A comprehensive evaluation framework is employed, assessing both the accuracy, through root mean square error (RMSE), and the computational efficiency of our model. The findings demonstrate significant improvements over existing state-of-the-art methods. Specifically, the proposed method outperforms the state of the art in computational efficiency, achieving a 17.04-fold improvement in event sparsity and a 32.28-fold increase in synaptic operation efficiency over traditional artificial neural networks, alongside a two-fold better performance over spiking neural networks.
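As a rough illustration of the sigma-delta idea mentioned in the abstract above, the sketch below encodes a 1-D signal by sending a quantized change only when the input has drifted from the running reconstruction by at least a threshold, then decodes by accumulation and scores the result with RMSE. It is a generic toy example, not the paper's network: the function names, the threshold value, and the sample signal are all assumptions made for illustration.

```python
import math

def sigma_delta_encode(signal, threshold):
    """Delta stage: emit a quantized change only when the input differs from
    the running reconstruction by at least `threshold` (hypothetical value)."""
    recon = 0.0
    messages = []
    for x in signal:
        residual = x - recon
        if abs(residual) >= threshold:
            # Quantize the residual to a whole number of threshold steps.
            delta = threshold * math.trunc(residual / threshold)
        else:
            delta = 0.0  # nothing is transmitted for this step
        recon += delta
        messages.append(delta)
    return messages

def sigma_decode(messages):
    """Sigma stage: accumulate the received deltas to rebuild the signal."""
    total = 0.0
    out = []
    for delta in messages:
        total += delta
        out.append(total)
    return out

def rmse(a, b):
    """Root mean square error between two equal-length sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

# Illustrative signal, chosen so the arithmetic is exact in floating point.
signal = [0.0, 0.125, 0.25, 1.0, 1.0625, 1.0, 0.5]
messages = sigma_delta_encode(signal, threshold=0.25)
reconstruction = sigma_decode(messages)
nonzero_messages = sum(1 for m in messages if m != 0)
error = rmse(signal, reconstruction)
```

In this toy run only three of the seven time steps carry a non-zero message, which is the kind of sparsity that the event-sparsity and synaptic-operation figures in the abstract measure at network scale.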

Heart Rate Detection Using an Event Camera

Sep 21, 2023

Abstract:Event cameras, also known as neuromorphic cameras, are an emerging technology that offers advantages over traditional shutter and frame-based cameras, including high temporal resolution, low power consumption, and selective data acquisition. In this study, we propose to harness the capabilities of event-based cameras to capture subtle changes in the surface of the skin caused by the pulsatile flow of blood in the wrist region. We investigate whether an event camera could be used for continuous noninvasive monitoring of heart rate (HR). Event camera video data from 25 participants, comprising varying age groups and skin colours, was collected and analysed. Ground-truth HR measurements obtained using conventional methods were used to evaluate the accuracy of automatic detection of HR from event camera data. Our experimental results and comparison to the performance of other non-contact HR measurement methods demonstrate the feasibility of using event cameras for pulse detection. We also acknowledge the challenges and limitations of our method, such as light-induced flickering and the subconscious but naturally occurring tremors of an individual during data capture.

Neuromorphic Seatbelt State Detection for In-Cabin Monitoring with Event Cameras

Aug 15, 2023

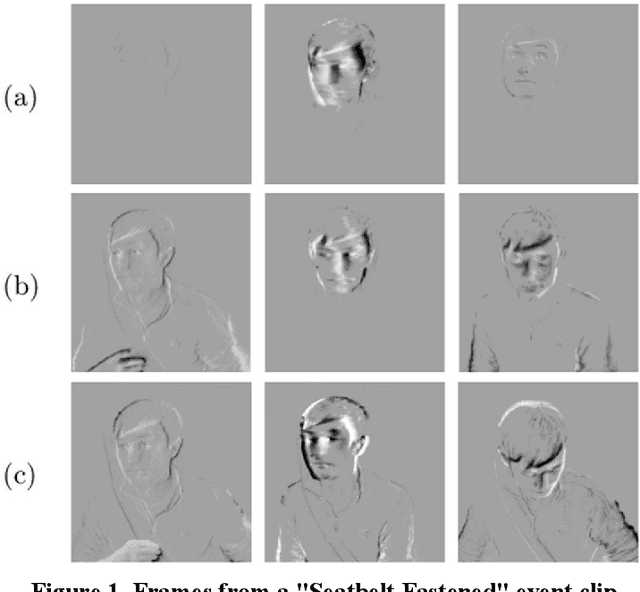

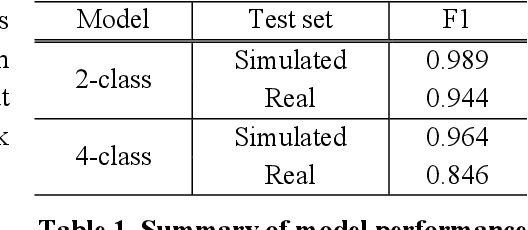

Abstract:Neuromorphic vision sensors, or event cameras, differ from conventional cameras in that they do not capture images at a specified rate. Instead, they asynchronously log local brightness changes at each pixel. As a result, event cameras only record changes in a given scene, and do so with very high temporal resolution, high dynamic range, and low power requirements. Recent research has demonstrated how these characteristics make event cameras extremely practical sensors in driver monitoring systems (DMS), enabling the tracking of high-speed eye motion and blinks. This research provides a proof of concept to expand event-based DMS techniques to include seatbelt state detection. Using an event simulator, a dataset of 108,691 synthetic neuromorphic frames of car occupants was generated from a near-infrared (NIR) dataset, and split into training, validation, and test sets for a seatbelt state detection algorithm based on a recurrent convolutional neural network (CNN). In addition, a smaller set of real event data was collected and reserved for testing. In a binary classification task, the fastened/unfastened frames were identified with F1 scores of 0.989 and 0.944 on the simulated and real test sets respectively. When the problem was extended to also classify the action of fastening/unfastening the seatbelt, respective F1 scores of 0.964 and 0.846 were achieved.

* 4 pages, 3 figures, IMVIP 2023
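For readers unfamiliar with the F1 metric quoted in the abstract above, the following minimal sketch computes precision, recall, and F1 from confusion counts for a binary fastened/unfastened task. The counts are hypothetical and are not taken from the paper; the function name is likewise an assumption.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from true-positive, false-positive,
    and false-negative counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    # F1 is the harmonic mean of precision and recall; this closed form
    # is algebraically equivalent to 2PR / (P + R).
    f1 = 2 * tp / (2 * tp + fp + fn)
    return precision, recall, f1

# Hypothetical counts for illustration only.
precision, recall, f1 = precision_recall_f1(tp=90, fp=10, fn=10)
```

With these made-up counts, precision, recall, and F1 all come out to 0.9, because false positives and false negatives are balanced.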

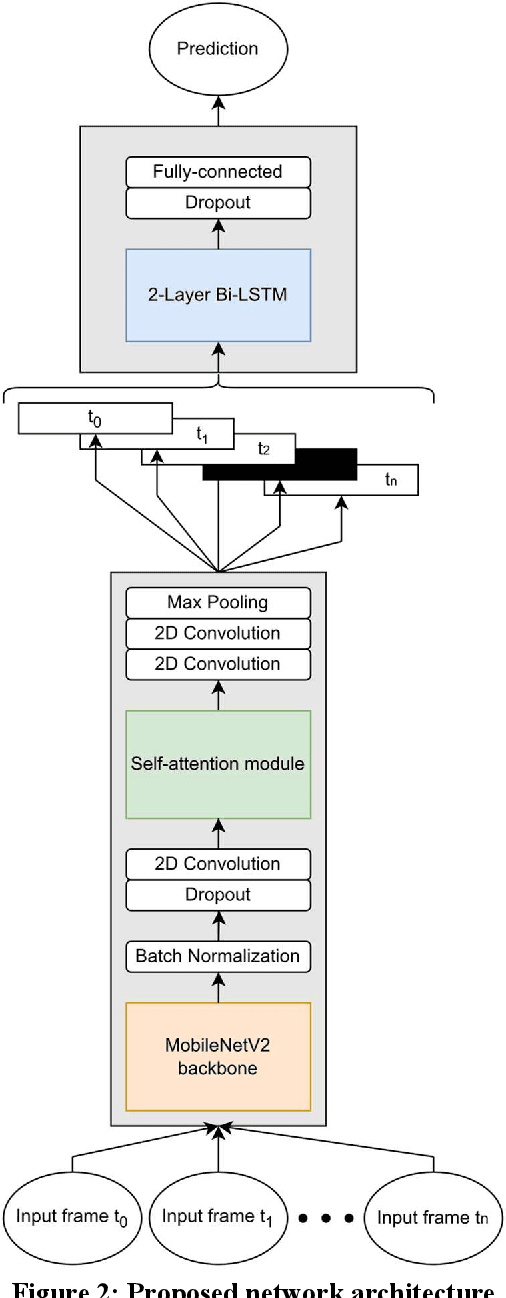

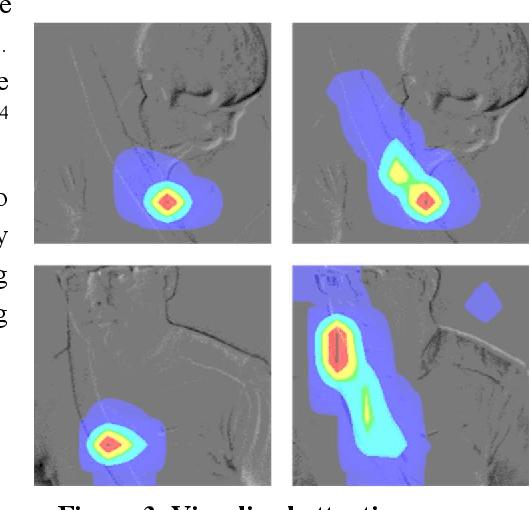

Neuromorphic Sensing for Yawn Detection in Driver Drowsiness

May 04, 2023

Abstract:Driver monitoring systems (DMS) are a key component of vehicular safety and essential for the transition from semiautonomous to fully autonomous driving. A key task for DMS is to ascertain the cognitive state of a driver and to determine their level of tiredness. Neuromorphic vision systems, based on event camera technology, provide advanced sensing of facial characteristics, in particular the behavior of a driver's eyes. This research explores the potential to extend neuromorphic sensing techniques to analyze the entire facial region, detecting yawning behaviors that give a complementary indicator of tiredness. A neuromorphic dataset is constructed from 952 video clips (481 yawns, 471 not-yawns) captured with an RGB color camera, with 37 subjects. A total of 95200 neuromorphic image frames are generated from this video data using a video-to-event converter. From these data, 21 subjects were selected to provide a training dataset, 8 subjects were used for validation data, and the remaining 8 subjects were reserved for an "unseen" test dataset. An additional 12300 frames were generated from event simulations of a public dataset to test against other methods. A CNN with self-attention and a recurrent head was designed, trained, and tested with these data. Respective precision and recall scores of 95.9 percent and 94.7 percent were achieved on our test set, and 89.9 percent and 91 percent on the simulated public test set, demonstrating the feasibility of adding yawn detection as a sensing component of a neuromorphic DMS.
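The subject-wise 21/8/8 partition described in the abstract above can be sketched as follows. The abstract does not say how subjects were assigned to each set, so the seeded random shuffle, the integer subject IDs, and the function name are assumptions; the point of the sketch is only that splitting by subject keeps every test subject unseen during training.

```python
import random

def split_subjects(subject_ids, n_train, n_val, seed=0):
    """Partition subject IDs into disjoint train/validation/test lists.
    Splitting by subject (not by frame) keeps test subjects unseen."""
    ids = sorted(subject_ids)
    random.Random(seed).shuffle(ids)  # assumed: random assignment
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]
    return train, val, test

# 37 subjects, as in the abstract; the IDs 0..36 are placeholders.
train, val, test = split_subjects(range(37), n_train=21, n_val=8)
```

Frames or clips would then be assigned to a set according to the subject they belong to, rather than being shuffled individually.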

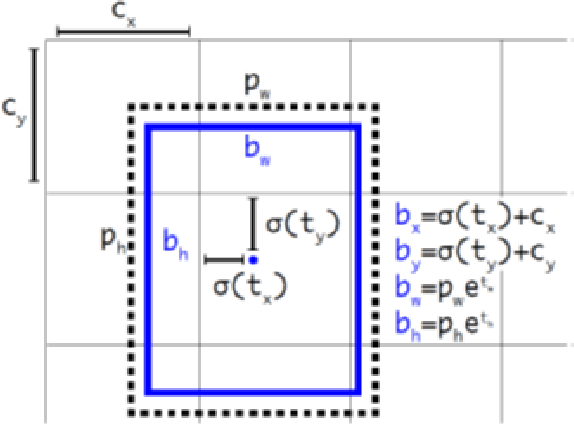

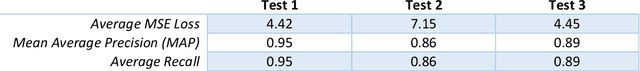

Event-based YOLO Object Detection: Proof of Concept for Forward Perception System

Jan 10, 2023

Abstract:Neuromorphic vision, or event vision, is an advanced vision technology in which, in contrast to a visible camera that outputs pixels, the sensor generates neuromorphic events every time a brightness change in the field of view (FOV) exceeds a specific threshold. This study focuses on leveraging neuromorphic event data for roadside object detection. This is a proof of concept towards building artificial intelligence (AI) based pipelines which can be used for forward perception systems for advanced vehicular applications. The focus is on building efficient state-of-the-art object detection networks with better inference results for fast-moving forward perception using an event camera. In this article, the event-simulated A2D2 dataset is manually annotated and used to train two different YOLOv5 networks (small and large variants). To further assess robustness, single model testing and ensemble model testing are carried out.
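The threshold-based event generation described in the abstract above can be illustrated with a small sketch. It assumes the commonly used model in which a pixel fires when its log intensity has changed by at least a contrast threshold since its last event; the abstract only says "brightness change", so the log scale, the one-event-per-frame simplification, the threshold value, and the tiny frames are all assumptions, and this is not the simulator used in the study.

```python
import math

def generate_events(frames, threshold):
    """Turn a sequence of intensity frames (2D lists of positive numbers)
    into events (t, x, y, polarity), where t is the frame index."""
    # Per-pixel reference level: log intensity at the last emitted event.
    ref = [[math.log(v) for v in row] for row in frames[0]]
    events = []
    for t in range(1, len(frames)):
        for y, row in enumerate(frames[t]):
            for x, value in enumerate(row):
                diff = math.log(value) - ref[y][x]
                if abs(diff) >= threshold:
                    polarity = 1 if diff > 0 else -1
                    events.append((t, x, y, polarity))
                    ref[y][x] = math.log(value)  # reset the reference
    return events

# Two 2x2 frames: one pixel brightens, one darkens, two barely change.
frames = [
    [[100, 100], [100, 100]],
    [[200, 105], [50, 100]],
]
events = generate_events(frames, threshold=0.2)
```

Only the two pixels whose brightness changed substantially produce events; the static pixel and the pixel with a small change produce nothing, which is why event data is sparse compared with full frames.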

Control and Evaluation of Event Cameras Output Sharpness via Bias

Oct 25, 2022

Abstract:Event cameras, also known as neuromorphic sensors, are a relatively new technology with some advantages over RGB cameras. The most important one is the way they capture light changes in the environment: each pixel responds independently of the others when it detects a change in the environment light. To increase the user's degree of freedom in controlling the output of these cameras, such as changing the sensitivity of the sensor to light changes, controlling the number of generated events, and other similar operations, camera manufacturers usually provide tools for making sensor-level changes to the camera settings. The contribution of this research is to examine and document the effects of changing the sensor settings on sharpness, as an indicator of the quality of the generated stream of event data. To gain a qualitative understanding, this stream of events is converted to frames, and then the average image gradient magnitude, as an index of the number of edges and accordingly of sharpness, is calculated for these frames. Five different bias settings are explained, and the effect of changing them on the event output is surveyed and analyzed. In addition, the operation of the event camera sensing array is explained with an analogue circuit model, and the functions of the bias foundations are linked with this model.
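The sharpness measure described in the abstract above (events accumulated into frames, then the average image gradient magnitude) can be sketched as below. The accumulation by event count, the forward-difference gradient, and the function names are assumptions chosen for simplicity; the paper may use a different frame representation or gradient operator.

```python
import math

def events_to_frame(events, width, height):
    """Accumulate (x, y) event coordinates into a per-pixel count image."""
    frame = [[0] * width for _ in range(height)]
    for x, y in events:
        frame[y][x] += 1
    return frame

def mean_gradient_magnitude(frame):
    """Average gradient magnitude using forward differences.
    Requires a frame of at least 2x2 pixels."""
    height, width = len(frame), len(frame[0])
    total = 0.0
    count = 0
    for y in range(height - 1):
        for x in range(width - 1):
            gx = frame[y][x + 1] - frame[y][x]  # horizontal difference
            gy = frame[y + 1][x] - frame[y][x]  # vertical difference
            total += math.hypot(gx, gy)
            count += 1
    return total / count

# A vertical line of events in the middle column of a 3x3 sensor.
edge_frame = events_to_frame([(1, 0), (1, 1), (1, 2)], width=3, height=3)
flat_frame = events_to_frame([], width=3, height=3)
edge_sharpness = mean_gradient_magnitude(edge_frame)
flat_sharpness = mean_gradient_magnitude(flat_frame)
```

An empty frame scores zero, while a frame containing a clean edge scores higher, so comparing this value across bias settings gives a simple numeric proxy for how crisp the event output is.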

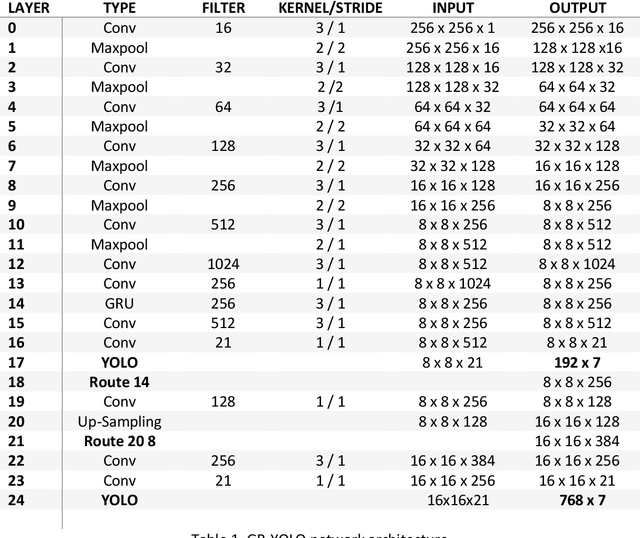

Real-Time Face & Eye Tracking and Blink Detection using Event Cameras

Oct 16, 2020

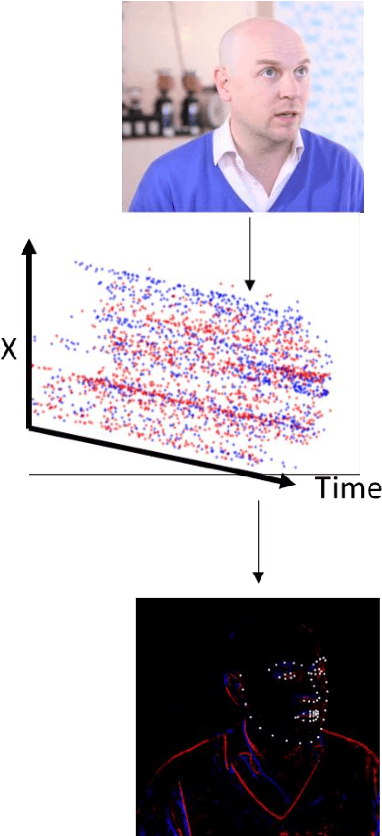

Abstract:Event cameras contain emerging, neuromorphic vision sensors that capture local light intensity changes at each pixel, generating a stream of asynchronous events. This way of acquiring visual information constitutes a departure from traditional frame-based cameras and offers several significant advantages: low energy consumption, high temporal resolution, high dynamic range and low latency. Driver monitoring systems (DMS) are in-cabin safety systems designed to sense and understand a driver's physical and cognitive state. Event cameras are particularly suited to DMS due to their inherent advantages. This paper proposes a novel method to simultaneously detect and track faces and eyes for driver monitoring. A unique, fully convolutional recurrent neural network architecture is presented. To train this network, a synthetic event-based dataset, called Neuromorphic HELEN, is simulated with accurate bounding box annotations. Additionally, a method to detect and analyse drivers' eye blinks is proposed, exploiting the high temporal resolution of event cameras. Blinking behaviour provides greater insight into a driver's level of fatigue or drowsiness. We show that blinks have a unique temporal signature that can be better captured by event cameras.
