Amr Elrasad

Dataset Creation Pipeline for Camera-Based Heart Rate Estimation

Mar 02, 2023

Abstract: Heart rate is one of the most vital health metrics and can be used to investigate and gain insight into various aspects of human physiological and psychological state. Estimating heart rate without the constraints of contact-based sensors is therefore a very attractive field of research, as it enables well-being monitoring in a wider variety of scenarios. Consequently, various techniques for camera-based heart rate estimation have been developed, ranging from classical image processing to convoluted deep learning models and architectures. At the heart of such research efforts lies the acquisition, cleaning, transformation, and annotation of health and visual data. In this paper, we discuss how to prepare data for developing or testing an algorithm or machine learning model for heart rate estimation from images of facial regions. The prepared data includes camera frames as well as readings from an electrocardiograph sensor. The proposed pipeline is divided into four main steps: removal of faulty data, frame and electrocardiograph timestamp de-jittering, signal denoising and filtering, and frame annotation creation. Our main contributions are a novel technique for eliminating jitter from health sensor and camera timestamps, and a method to accurately time-align visual frame data and electrocardiogram sensor data, which is also applicable to other sensor types.
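The abstract does not spell out how the de-jittering and alignment steps work, so the following is only a minimal sketch of one common way to approach them, not the paper's technique. It assumes both streams are sampled at a nominally constant rate, replaces jittery timestamps with a least-squares line over the sample index, and then pairs each frame with the nearest electrocardiograph sample. The function names `dejitter` and `nearest_indices` are hypothetical and chosen for illustration.

```python
import numpy as np


def dejitter(timestamps):
    """Replace jittery timestamps with a least-squares line over the sample index.

    Assumption (not from the paper): the underlying sampling rate is constant,
    so the true timestamps are a linear function of the sample index.
    """
    t = np.asarray(timestamps, dtype=float)
    idx = np.arange(t.size)
    slope, intercept = np.polyfit(idx, t, 1)
    return intercept + slope * idx


def nearest_indices(frame_t, ecg_t):
    """For each frame timestamp, return the index of the closest ECG timestamp.

    Assumes ecg_t is sorted in ascending order and has at least two samples.
    """
    frame_t = np.asarray(frame_t, dtype=float)
    ecg_t = np.asarray(ecg_t, dtype=float)
    # Index of the first ECG sample at or after each frame, kept in bounds.
    right = np.clip(np.searchsorted(ecg_t, frame_t), 1, ecg_t.size - 1)
    left = right - 1
    # Pick whichever neighbour is closer in time (ties go to the earlier one).
    pick_left = (frame_t - ecg_t[left]) <= (ecg_t[right] - frame_t)
    return np.where(pick_left, left, right)
```

In this sketch, each stream would be passed through `dejitter` first, and the resulting index array from `nearest_indices` could then be used to attach a sensor reading to every frame. A real pipeline would also need to handle clock offset between devices and dropped samples, which this example ignores.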

Real-Time Face & Eye Tracking and Blink Detection using Event Cameras

Oct 16, 2020

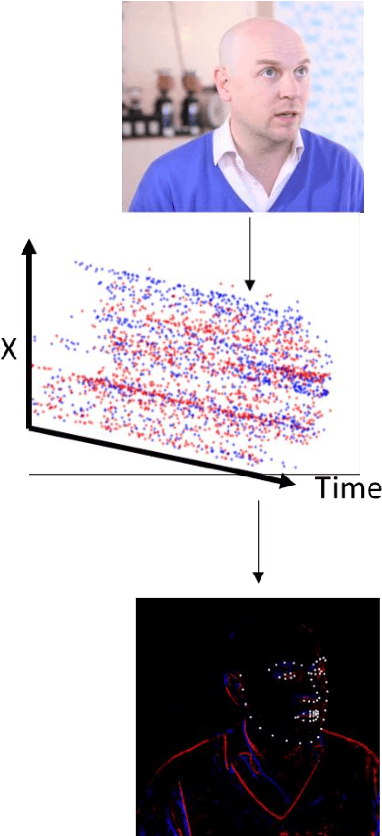

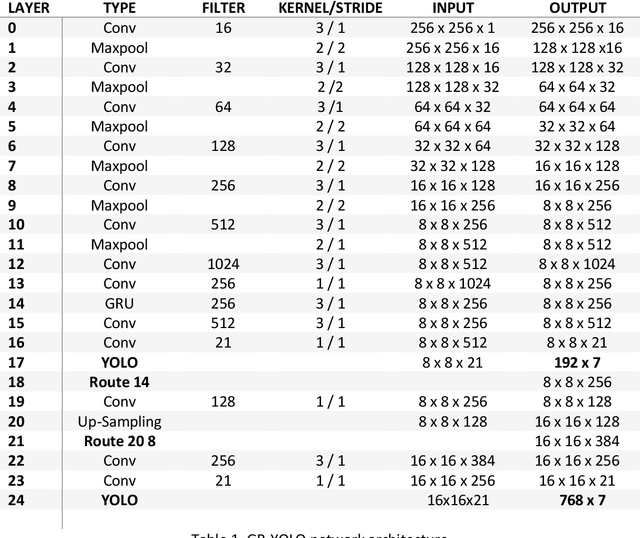

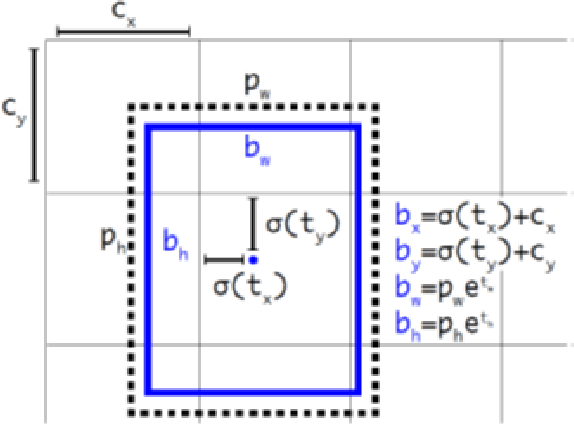

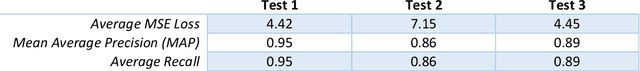

Abstract: Event cameras contain emerging, neuromorphic vision sensors that capture local light intensity changes at each pixel, generating a stream of asynchronous events. This way of acquiring visual information constitutes a departure from traditional frame-based cameras and offers several significant advantages: low energy consumption, high temporal resolution, high dynamic range, and low latency. Driver monitoring systems (DMS) are in-cabin safety systems designed to sense and understand a driver's physical and cognitive state. Event cameras are particularly suited to DMS due to their inherent advantages. This paper proposes a novel method to simultaneously detect and track faces and eyes for driver monitoring. A unique, fully convolutional recurrent neural network architecture is presented. To train this network, a synthetic event-based dataset with accurate bounding box annotations, called Neuromorphic HELEN, is simulated. Additionally, a method to detect and analyse drivers' eye blinks is proposed, exploiting the high temporal resolution of event cameras. Blinking behaviour provides greater insight into a driver's level of fatigue or drowsiness. We show that blinks have a unique temporal signature that can be better captured by event cameras.
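To make the idea of events driven by per-pixel intensity change concrete, here is a deliberately simplified sketch that derives events from two consecutive intensity frames by thresholding the change in log intensity. This is a textbook idealisation, not the simulator used to build Neuromorphic HELEN; the function name `frames_to_events`, the contrast threshold, and the `eps` offset are all assumptions made for illustration.

```python
import numpy as np


def frames_to_events(prev, curr, threshold=0.2, eps=1e-3):
    """Return (ys, xs, polarity) for pixels whose log-intensity change is large.

    Simplified model (assumption): a pixel fires one event when the absolute
    change in log intensity between two frames reaches `threshold`.
    Polarity is +1 for brightening and -1 for darkening.
    """
    prev = np.asarray(prev, dtype=float)
    curr = np.asarray(curr, dtype=float)
    # eps avoids log(0) for completely dark pixels.
    delta = np.log(curr + eps) - np.log(prev + eps)
    ys, xs = np.nonzero(np.abs(delta) >= threshold)
    polarity = np.sign(delta[ys, xs]).astype(int)
    return ys, xs, polarity
```

A real event sensor reports events asynchronously with microsecond timestamps and can emit several events per pixel between frames; this sketch collapses that to at most one event per pixel per frame pair.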
